Figure 1: 3D surface mesh of a face. |

In biomedical applications, the need for patient-specific treatment has grown in the last several years. As a consequence, decisions for some medical procedures are based heavily on imaging of patient anatomy using ultrasound, computer tomography (CT), or magnetic resonance (MR) images. In some cases, the images are augments with a computer simulation before a surgical procedure is carried out. The patient images are segmented, and various anatomical features are identified by automatic or semiautomatic algorithms. These features need to be accurately meshed for the purpose of computer simulations. The meshes need to accurately capture the geometry of the anatomical features and be of "good quality", whereby even the definition of quality in mesh is a topic of discussion and research.

Our research has focused on the generation of such meshes from volumetric data, which is obtained from the segmented images. For our purposes, we consider the data to contain variations in intensity that tell the observer which material (arteries, lungs, etc.,) is present at a given location in the domain. By querying for the data for such information on tissues and this structures, and using algorithms like those we have developed, one can generate a high quality, geometrically-accurate mesh. The mesh then becomes the basis for further visualization, analysis, or simulations.

Our approach

The generation of meshes that conform to several constraints, such as anisotropy and boundary conformation, is still a challenging problem requiring great computational effort. Many of these constraints are often in conflict with one another, preventing successful automatic mesh generation. We take the approach of explicitly decoupling the problem of conforming to boundaries from all other aspects of meshing. In the absence of the need to respect internal boundaries, other goals of meshing become much easier to achieve.

Our approach begins by building a nonconforming tetrahedral background mesh. The elements of this mesh are created with the desired properties in terms of size and shape, but with no regard for internal tissue boundaries. Next, we apply a single cleaving step to conform the mesh to material boundaries without greatly disturbing the characteristics of elements in the initial mesh. Near where we conform the mesh, we do expect some degradation of these properties to occur, but we minimize their effects through a carefully designed set of operations.

Our proposed method is a generalization of those reported by the well-known isosurface stuffing and lattice cleaving algorithms. Both algorithms exhibit the same behavior, albeit for a more limited class of boundaries (in the case of isosurface stuffing) and input background meshes (both algorithms require body-centered cubic (BCC) lattice inputs). Both algorithms also target only element quality, as measured by bounds on the dihedral angles, whereas, in this work, we also focus on element size and adaptivity (in terms of edge lengths) and anisotropy (in terms of edge orientations).

This work introduces a new strategy for building boundary-conforming meshes out of nonconforming volumetric meshes. Specifically, we make the following contributions to the literature: (1) a new meshing strategy for boundary-conforming tetrahedral meshes that works in the presence of nonmanifold boundaries, (2) a method for computing sizing fields of multimaterial volumetric data, and (3) a variational system for distributing mesh vertices (in the absence of boundaries) relative to these sizing fields.

The first release of Cleaver (Cleaver1) operated on a structured body-centered cubic lattice. Cleaver2 is a complete rewrite of the data structures and access patterns used in Cleaver1. Cleaver2 is agnostic to the underlying data structure and has added the sizing field computation functionality. Our progress over the last year has been on two fronts: (1) implementing Cleaver2 and, in parallel, (2) developing Cleaver3, which produces unstructured meshes. One challenge in this development is managing the memory requirements—as the size of the volume increases, so does the memory footprint. In order to overcome the resulting memory management obstacles, we have developed a multithreaded version of Cleaver2, which can process the volume in smaller segments that fit into the memory in parallel. Once these smaller segments of the volume are processed, they can then be merged to form the final output. This split-and-process approach to meshing allows us to control the number of volume segments that we currently hold in memory and thereby avoid memory thrashing, while providing a good speed-up. We are planning to release the multithreaded Cleaver2 soon. The major improvement of Cleaver3 is that incorporates unstructured meshing and here we focus mainly on the algorithms developed for Cleaver3.

Methodology

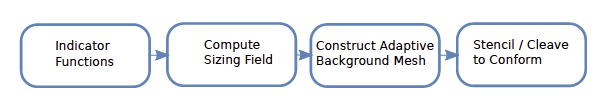

We have developed and implemented algorithms that are separately capable of handling the specific pieces of the following pipeline, as shown in Figure 2.

|

| Figure 2: Proposed meshing pipeline for conforming volumetric meshing. |

Sizing Field: Mesh elements must be adaptively sized for the purpose of geometric fidelity and PDE solution accuracy while simultaneously reducing the number of elements needed for an accurate numerical simulation–this trade off between accuracy and computational overhead is at the core of most relevant simulations. A sizing field for the purpose of mesh generation should possess the following properties: (1) it should be small near thin features and high-curvature regions, (2) it should progressively increase is size for larger features and lower-curvature regions, and (3) it should satisfy what are known as "Lipschitz continuity conditions", i.e., achieve a acceptable level of smoothness.

Our algorithm for computing the sizing field is an extension of an approach borrowed from previous literature. It computes a "distance transform" (DT) three times to capture three different criteria. First, a DT is computed starting from the material boundary surface. The DT is computed again from the medial axis. The values of this DT at the boundary locations indicate the feature size at those boundaries. The DT is then computed a third time from the boundary, but this time the initial distance at the boundary is assumed to be the feature size and the gradient of the DT is limited to a desired value. The result is a sizing field over the whole domain that represents a robust compromise between competing constraints.

Particle Systems for Adaptive Background Meshes: In our approach to adaptive mesh generation, we first distribute particles over the whole domain and then use the classic Delaunay tetrahedralization algorithm to construct a background mesh. For this, we apply an electrostatic particle simulation technique in which the particles are assumed to be negatively charged and the domain is assumed to possess a static charge density that attracts the particles to regions of interest. This static charge density is determined in part by the sizing field describe above. Once a stable point distribution is reached, we use the open-source software Tetgen to generate a tetrahedral mesh.

Unstructured Cleaving: The original mesh in Cleaver does not respect internal material boundaries, which can result in reduced simulation precision. In a subsequent step to produce surface conforming meshes from the unstructured background mesh, we adapt the original lattice cleaving algorithm to produce unstructured meshes that can then be made to conform to internal boundaries. Lattice cleaving, like another technique known as "isosurface stuffing", imposes a set of simple mesh modification rules to still guarantee element quality even as the mesh conforms to boundaries.

Preliminary results

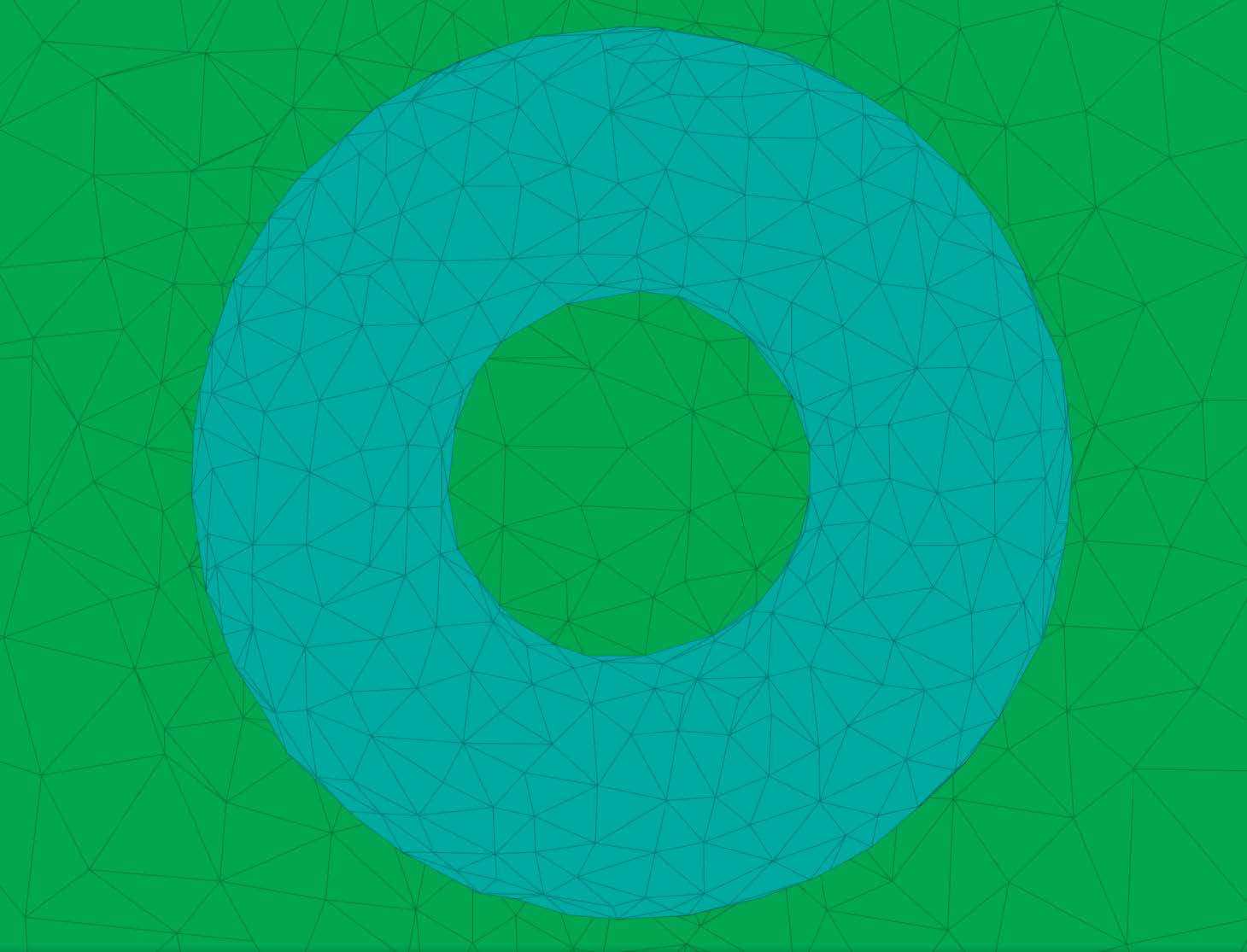

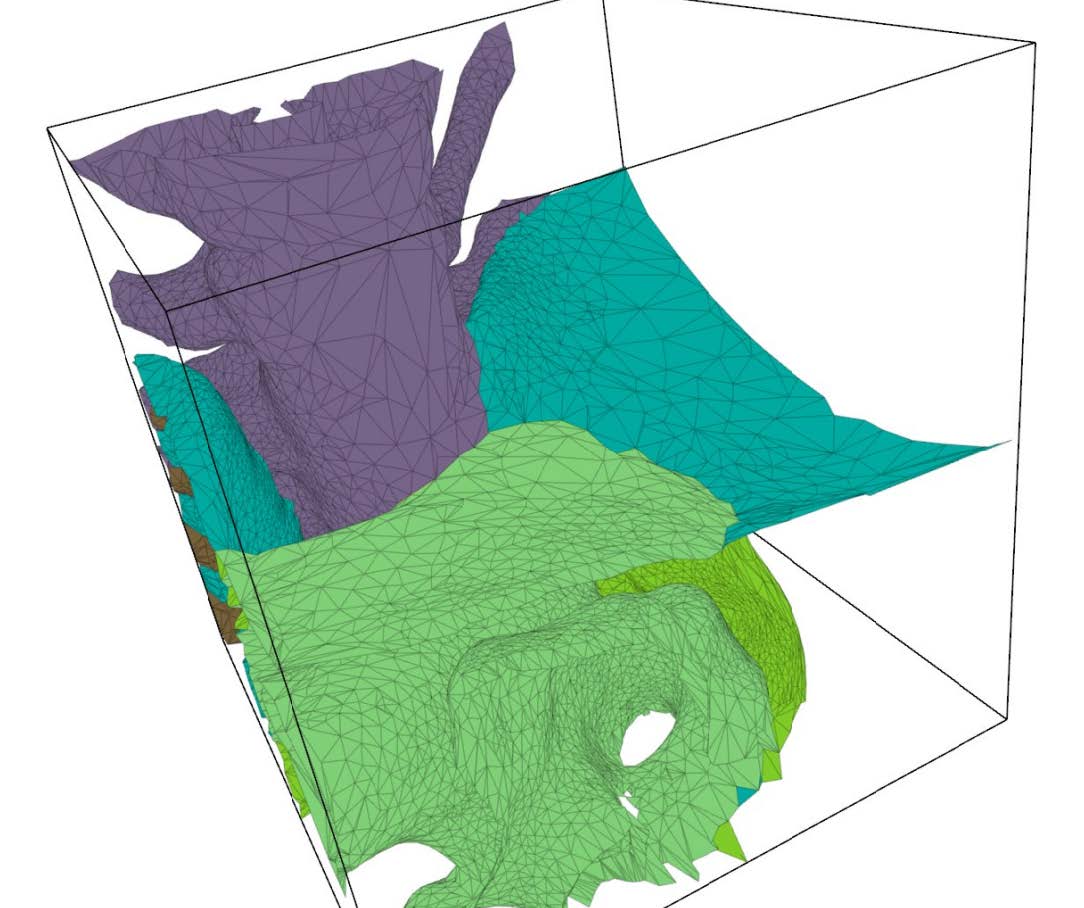

Figure 3 shows cut-away and surface views of two examples of unstructured, adaptive tetrahedral meshes. Since the background mesh adapts to the feature size, the cleaving algorithm has sufficient resolution to capture the surfaces and their topology properly, while remaining coarse in areas where fine resolution is unnecessary.

|

|

| Figure 3: Left: Cut away view of a torus. Right: Surface view of multimaterial torso dataset. | |

Future goals

The imminent improvements we envision for Cleaver will result in accelerations in the speed of generating meshes while maintaining their quality. In the current implementation, the decision to cleave or warp an element is based on a parameter we call "α", which we currently set rather conservatively in order to minimize distortion and the likelihood of forming poor quality mesh elements. It is possible to improve selection of a useful value for α by optimizing such that the worst possible element in the mesh still achieves the highest reasonable quality. We will explore several techniques to achieve this optimization. Also, the particle distribution scheme we currently employ is rather time consuming and we envision improvements to the efficiency of this scheme. We are also well into the process of developing a parallel implementation of the algorithms described in this report to achieve further improvements in efficiency. At each step of this process, we will interact closely with out DBP partners and collaborators to ensure not only theoretical improvements but also that our tools produce, useful, efficient tools for patient specific modeling.