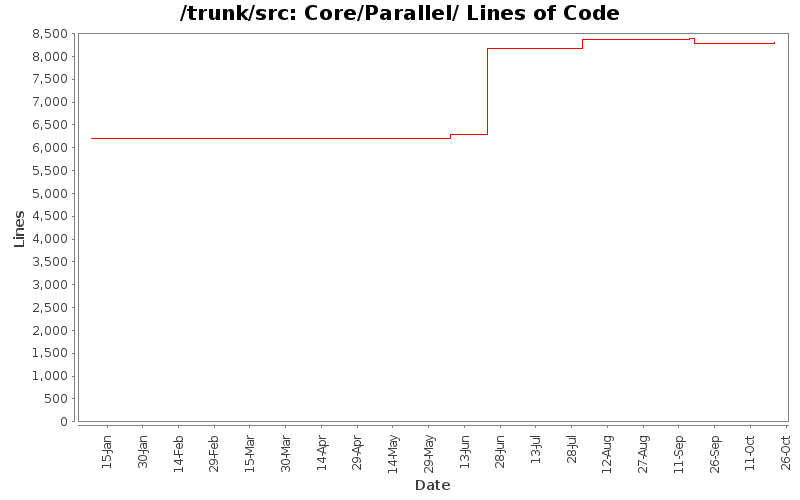

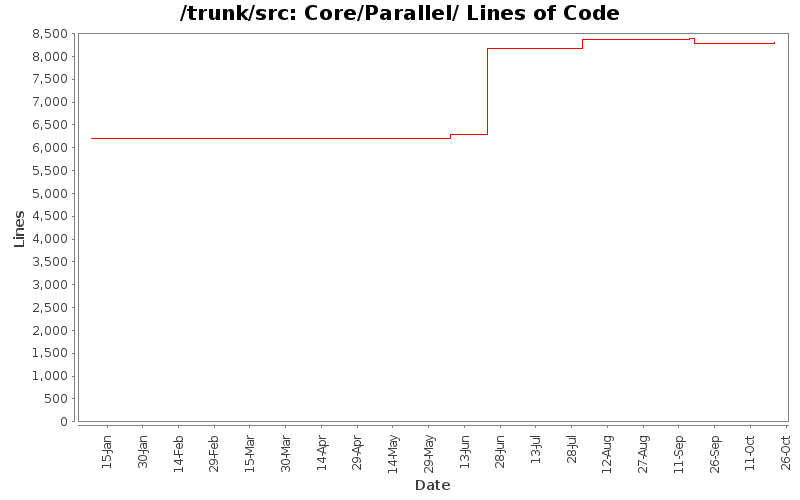

Lines of Code

| Author | Changes | Lines of Code | Lines per Change |
|---|---|---|---|
| Totals | 89 (100.0%) | 2707 (100.0%) | 30.4 |
| dav | 6 (6.7%) | 1893 (69.9%) | 315.5 |
| ahumphrey | 52 (58.4%) | 779 (28.8%) | 14.9 |
| jas | 29 (32.6%) | 24 (0.9%) | 0.8 |
| bpeterson | 2 (2.2%) | 11 (0.4%) | 5.5 |

This allows non-packed MPI messages to work correctly, specifically when multiple items are sent in a single message.

11 lines of code changed in 2 files:

Some cleanup before working toward support for multiple "Normal" task-graphs.

This work aims to support a single compilation phase for more than one distinct task graph (TG), cycling between them on specific timesteps. Specifically, this supports radiation and non-radiation timesteps within Arches, so we avoid the repeated cost of recompiling the TG on RMCRT radiation timesteps.

Ripped out some unused source and header files from 1994.

3 lines of code changed in 2 files:

Remove SingleProcessor Scheduler/LoadBalancer.

Most of the work here has been in removing the need for Parallel::determineIfRunningUnderMPI(), as running Uintah with MPI is now an invariant, even with only a single process. We ALWAYS run Uintah with MPI.

The last simple step will be to remove usage of Parallel::usingMPI() (which now simply returns true) and to do away with the "-mpi" command-line option. For now, sus has been modified to silently ignore "-mpi"; once the nightly RT scripts have been updated, we can deprecate it.

Note that the following single-process runs are equivalent; all of them use the MPI scheduler with 1 rank:

./sus input.ups

./sus -mpi input.ups

mpirun -np 1 ./sus input.ups

mpirun -np 1 ./sus -mpi input.ups

80 lines of code changed in 7 files:

More concurrency work on the MPI recv engine; also moving to straight mutex synchronization on task queues (no CrowdMonitor). Ultimately, these queues need to be lock-free data structures.

Cleaned up ProcessorGroup, along with some other misc formatting/cleanup while under the hood.

Updates to tsan_suppression file

247 lines of code changed in 4 files:

Introduction of the Lockfree Pool data structure and the new CommunicationList. These now replace CommRecMPI and its use of the problematic MPI_Testsome() and MPI_Waitsome() calls. We now store individual requests in a lock-, wait- and contention-free Pool and call MPI_Test() and MPI_Wait() on individual MPI_Requests.

This fixes the MPI_Buffer memory leak seen in the threaded scheduler, in which multiple threads think they will receive a message and allocate a buffer, but only one thread does the actual receive and calls the after-communication handler to clean up the buffer. This memory leak was most pronounced at large scale with RMCRT due to the global halo requirement.

Also backing out support for non-uniform ghost cells across AMR levels for now, until we resolve the issue of some required messages not being generated from the coarse radiation mesh to some ranks. The issue stems from partial dependency batches being created due to an incomplete processor neighborhood list across levels.

MISC:

* cleaned up some old TAU remnants in doc directory and build system

* refactor and cleanup in MPIScheduler

* removed unused source code, including the old ThreadedScheduler (only one threaded scheduler now - Unified)

* cleaned up non-existent entries in environmentalFlags.txt

203 lines of code changed in 3 files:

Reorganize the way we include MPI. Previously, files included mpi_defs.h (a configure-generated file) to get mpi.h. Now they include UintahMPI.h (from Core/Parallel/).

Note: MPI is now required (though for all intents and purposes it already was). You can still run a serial version of the code; you just have to compile and link with MPI first.

M configure

M configure.ac

- Fix check for xml2-config.

- Fix check for mpi.h location when using 'built-in' (fixes issue with non-mpich MPIs).

- Check to see if MPI handles const correctly.

- Fix some indentation.

- No longer define HAVE_MPI or HAVE_MPICH as we require MPI now.

- Added defines for MPI_CONST_WORKS, MPI_MAX_THREADS and MPI3_ENABLED (though the last two are hard-coded and still need configure tests written for them).

M include/sci_defs/mpi_testdefs.h.in

- Added MPI_CONST_WORKS, MPI_MAX_THREADS, and MPI3_ENABLED #defines.

- Moved the MPI wrappers into UintahMPI.h (thus out of this configure generated file).

D include/sci_mpi.h

- Removed sci_mpi.h, as it was unused and its only purpose was to allow compilation without MPI. This is no longer allowed (and probably hadn't worked in a long time).

A Core/Parallel/UintahMPI.h

- Added the new file UintahMPI.h which is used to #include <mpi.h> and wrap the MPI calls.

- These wrappers were in mpi_testdefs.h.

- Replaced most uses of "const" with MPICONST so that it can be turned off on MPIs that are not const-compliant (this is done through a configure check).

- Grouped a lot of the UINTAH_ENABLE_MPI3 sections into single #if's instead of having a #if for each line.

M CCA/Components/Arches/fortran/sub.mk

- Remove tabs from where they are not supposed to be.

M CCA/Components/MPM/PetscSolver.h

M CCA/Components/ICE/PressureSolve/HypreStandAlone/Hierarchy.cc

M CCA/Components/ICE/PressureSolve/HypreStandAlone/mpitest.cc

M CCA/Components/ICE/Advection/FluxDatatypes.h

M CCA/Components/Schedulers/OnDemandDataWarehouse.h

M CCA/Components/Schedulers/BatchReceiveHandler.h

M CCA/Components/Schedulers/templates.cc

M CCA/Components/Schedulers/Relocate.h

M CCA/Components/Schedulers/MPIScheduler.cc

M CCA/Ports/PIDXOutputContext.h

M Core/Grid/Variables/Stencil4.cc

M Core/Grid/Variables/ReductionVariableBase.h

M Core/Grid/Variables/Stencil7.cc

M Core/Grid/Variables/SoleVariableBase.h

M Core/Parallel/ProcessorGroup.h

M Core/Parallel/PackBufferInfo.h

M Core/Parallel/BufferInfo.h

M Core/Disclosure/TypeDescription.h

M Core/Util/DOUT.hpp

M Core/Util/InfoMapper.h

M StandAlone/tools/mpi_test/async_mpi_test.cc

M StandAlone/tools/mpi_test/mpi_hang.cc

M StandAlone/tools/mpi_test/mpi_test.cc

M StandAlone/tools/fsspeed/fsspeed.cc

- Proper indentation.

- Replaced mpi_defs.h with UintahMPI.h.

- Use <> for includes and not "" per the Uintah coding standard.

- Added comment for the #endif of the #ifndef compiler guard for the .h file.

- Moved some #ifndef .h compiler guards to the very top of the file.

M testprograms/Malloc/test11.cc

M testprograms/Malloc/test12.cc

M testprograms/Malloc/test13.cc

- Fix indentation.

- "__linux" doesn't exist on some systems, so added "__linux__".

We could probably remove the __linux check, but I wasn't sure, so I left it in.

M VisIt/libsim/visit_libsim.cc

- Use #include <>.

M StandAlone/sus.cc

- Remove the code for old MPI versions. We don't support MPI v1 any more.

1893 lines of code changed in 6 files:

Redirect all MPI calls through the lightweight wrapper (header only)

This allows for standardized error checking and easy collection of runtime stats. All MPI functions can be called through the wrapper by replacing:

MPI_ with Uintah::MPI::

e.g.

Uintah::MPI::Isend(...)

Uintah::MPI::Reduce(...)

Also enables MPI3 wrappers when MPI3 is available and guards against their use when it is not. We will want MPI3 for non-blocking collectives. MPI3 is available on Mira but not yet on Titan.

* Note: src/scripts/wrap_mpi_calls.sh has been added to the src tree. It contains the sed commands that perform this replacement tree-wide.

* Have tested this with OpenMPI, MPICH, IntelMPI and also built on Titan and Mira.

21 lines of code changed in 6 files:

Revert r55443 until folks can get compilers upgraded and the buildbot MPI is updated.

21 lines of code changed in 5 files:

Redirect all MPI calls through the lightweight wrapper (header only)

This allows for standardized error checking and easy collection of runtime stats. All MPI functions can be called through the wrapper by replacing:

MPI_ with Uintah::MPI::

e.g.

Uintah::MPI::Isend(...)

Uintah::MPI::Reduce(...)

Also enables MPI3 wrappers when MPI3 is available and guards against their use when it is not. We will want MPI3 for non-blocking collectives. MPI3 is available on Mira but not yet on Titan.

* Note: src/scripts/wrap_mpi_calls.sh has been added to the src tree. It contains the sed commands that perform this replacement tree-wide.

* Have tested this with OpenMPI, MPICH, IntelMPI and also built on Titan and Mira.

21 lines of code changed in 5 files:

Revert r55429.

Still having an MPICH/OpenMPI compatibility problem.

21 lines of code changed in 5 files:

Redirect all MPI calls through the lightweight wrapper (header only)

This allows for standardized error checking and easy collection of runtime stats. All MPI functions can be called through the wrapper by replacing:

MPI_ with Uintah::MPI::

e.g.

Uintah::MPI::Isend(...)

Uintah::MPI::Reduce(...)

Also enables MPI3 wrappers when MPI3 is available and guards against their use when it is not. We will want MPI3 for non-blocking collectives. MPI3 is available on Mira but not yet on Titan.

* Note: src/scripts/wrap_mpi_calls.sh has been added to the src tree. It contains the sed commands that perform this replacement tree-wide.

20 lines of code changed in 4 files:

Removal of src/Core/Thread and related refactoring throughout the code-base.

This is the first step in a series of infrastructure overhauls to modernize Uintah. Though this all passes local RT (both CPU and GPU tests), I expect some fallout we haven't considered and will be standing by to deal with any issues. Once the dust settles, we will move to replacing Core/Malloc with jemalloc.

* We are now using the standard library for all multi-threading needs within the infrastructure, e.g. std::atomic, std::thread, std::mutex, etc.

* The Unified Scheduler is now the only multi-threaded scheduler; the ThreadedMPIScheduler no longer exists (though its source will soon be placed into an attic).

* Threads spawned by the Unified Scheduler are detached by default (not joinable), allowing for easy, clean and independent execution. There are no longer ConditionVariables used to signal worker threads, just a simple enum for thread-state.

* What was Core/Thread/Time.* is now Core/Util/Time.*; a next step will be to migrate all internal timers, etc., to std::chrono.

* NOTE: Though much cleanup has occurred with this commit, significant cleanup and formatting remain to be done. The scope of this commit necessitates a more incremental approach.

142 lines of code changed in 6 files:

Remove superfluous, outdated and unused classes/files.

0 lines of code changed in 5 files:

More changes from SCIRun to Uintah. Delete unused SCIRun build_script files.

3 lines of code changed in 1 file:

Move non-conflicting classes that were in SCIRun namespace to Uintah namespace.

4 lines of code changed in 6 files:

Update copyright date to 2016.

17 lines of code changed in 22 files: