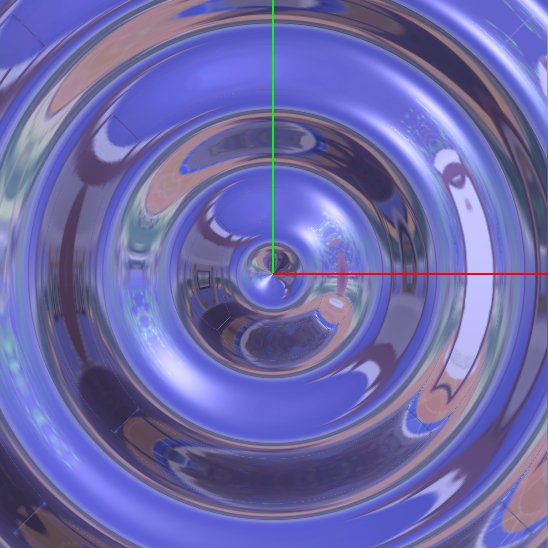

There are two light sources, both shining in from outside through the window. One is strong sunlight; the other is a weaker light that represents light from the outside environment. I set the angle and intensity of these lights to give the scene the look of a morning at 9 a.m. The window blinds keep much of the light out, so a large part of the scene is lit by indirect light, giving it a soft, soothing feel. I used the Blinn-Phong shading model with a Fresnel reflection term for all objects here. I separated the direct and indirect lighting so that I didn't have to use 32 (anti-aliasing) x 512 (Monte Carlo) samples for each pixel. I also experimented with pre-filtering the photon map but did not get very far with it. Pre-filtering didn't seem to help in my case: I used a hash grid instead of a kd-tree to store the photon map, so a nearest-point query is not much cheaper than a sphere-intersection query, and is awkward to implement.
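To illustrate the hash-grid photon lookup mentioned above, here is a minimal Python sketch of a sphere query over a uniform hash grid. It is not the renderer's actual code: the class name `PhotonHashGrid`, the `cell_size` parameter, and the disc-area density estimate are all assumptions made for illustration.

```python
import math
from collections import defaultdict


class PhotonHashGrid:
    """Uniform hash grid over photon positions (hypothetical helper)."""

    def __init__(self, cell_size):
        self.cell_size = cell_size
        self.cells = defaultdict(list)

    def _cell(self, p):
        # Integer cell coordinates of a 3D point.
        return tuple(math.floor(c / self.cell_size) for c in p)

    def insert(self, position, power):
        self.cells[self._cell(position)].append((position, power))

    def query_sphere(self, center, radius):
        """Return every stored photon within `radius` of `center`."""
        lo = self._cell(tuple(c - radius for c in center))
        hi = self._cell(tuple(c + radius for c in center))
        r2 = radius * radius
        found = []
        # Visit only the cells overlapped by the sphere's bounding box.
        for ix in range(lo[0], hi[0] + 1):
            for iy in range(lo[1], hi[1] + 1):
                for iz in range(lo[2], hi[2] + 1):
                    for pos, power in self.cells.get((ix, iy, iz), ()):
                        d2 = sum((a - b) ** 2 for a, b in zip(pos, center))
                        if d2 <= r2:
                            found.append((pos, power))
        return found


def estimate_irradiance(grid, center, radius):
    """Density estimate: gathered photon power divided by the disc area."""
    photons = grid.query_sphere(center, radius)
    return sum(power for _, power in photons) / (math.pi * radius * radius)
```

A fixed-radius query like this only touches a bounded block of cells, whereas a nearest-point query has to keep widening its search until a photon is found, which is one way to read why it was awkward on a hash grid.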

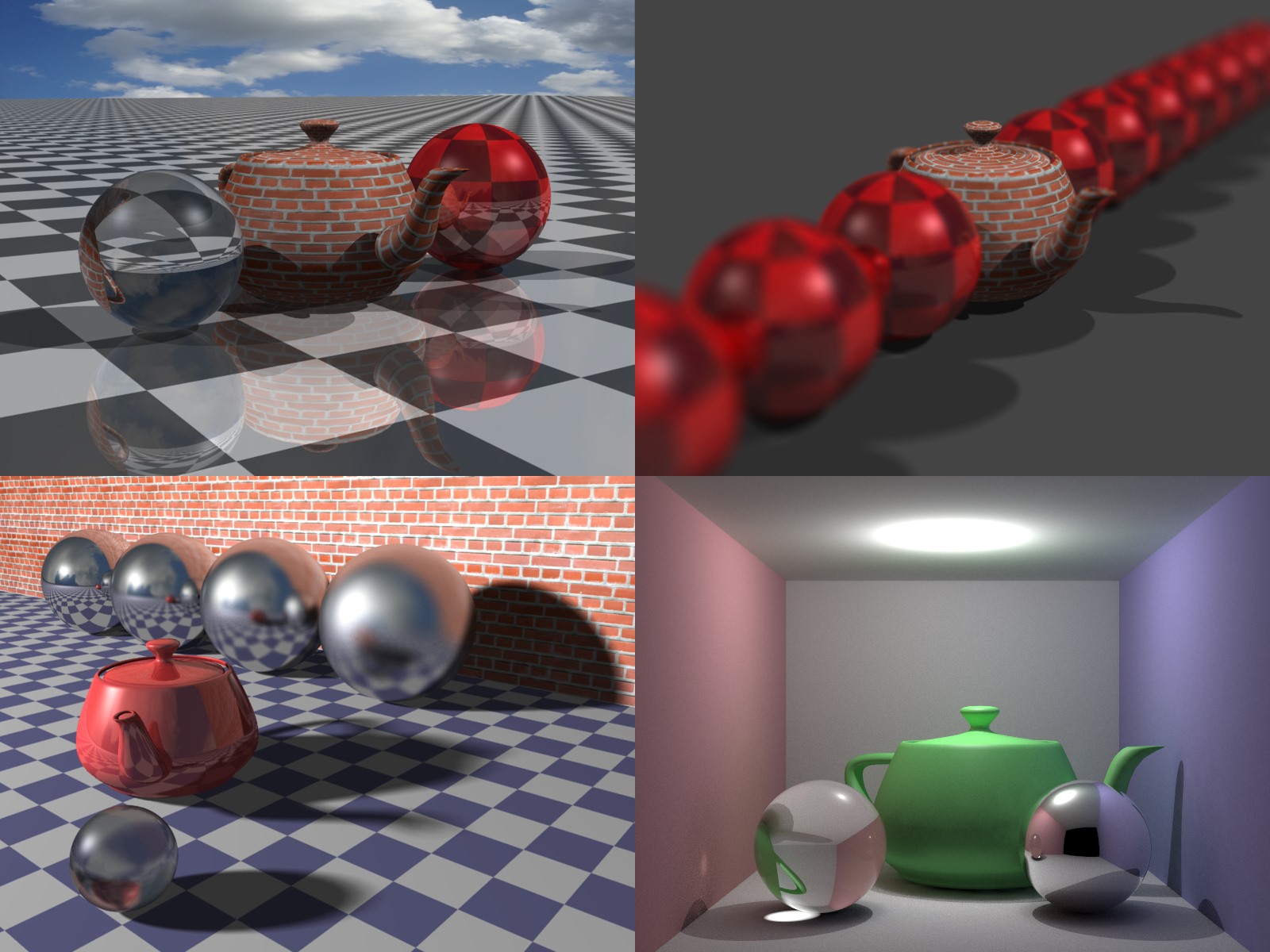

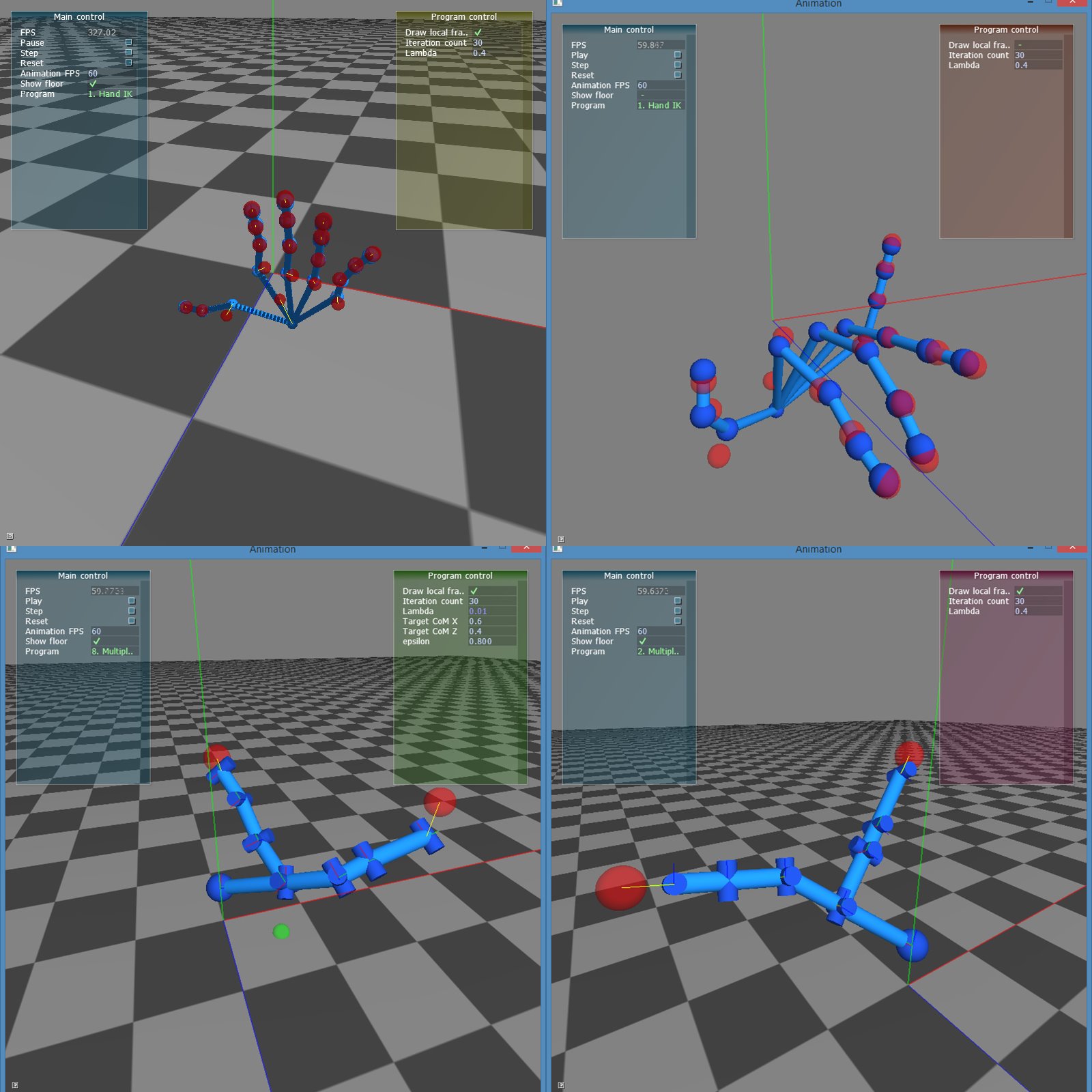

using Damped Least Squares

Reference: Samuel R. Buss and Jin-Su Kim, Selectively Damped Least Squares for Inverse Kinematics, Journal of Graphics Tools, 10:37–49, 2004.
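As a rough illustration of the damped least squares update, the sketch below solves inverse kinematics for a planar two-link arm using the step Δθ = Jᵀ(JJᵀ + λ²I)⁻¹e. It shows plain damped least squares rather than the selectively damped variant from the reference, and the two-link arm, the function names, and the damping value are assumptions chosen to keep the example small.

```python
import math


def forward(theta, lengths):
    """End-effector position of a planar two-link arm."""
    t1, t2 = theta
    l1, l2 = lengths
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return x, y


def jacobian(theta, lengths):
    """2x2 Jacobian of the end-effector position w.r.t. the joint angles."""
    t1, t2 = theta
    l1, l2 = lengths
    s1, c1 = math.sin(t1), math.cos(t1)
    s12, c12 = math.sin(t1 + t2), math.cos(t1 + t2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def dls_step(theta, lengths, target, damping):
    """One update: dtheta = J^T (J J^T + damping^2 I)^-1 e."""
    x, y = forward(theta, lengths)
    ex, ey = target[0] - x, target[1] - y
    J = jacobian(theta, lengths)
    # A = J J^T + damping^2 I is symmetric 2x2: [[a, b], [b, d]].
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b  # strictly positive whenever damping > 0
    # Solve A f = e in closed form.
    fx = (d * ex - b * ey) / det
    fy = (-b * ex + a * ey) / det
    # dtheta = J^T f
    return (theta[0] + J[0][0] * fx + J[1][0] * fy,
            theta[1] + J[0][1] * fx + J[1][1] * fy)


def solve_ik(theta, lengths, target, damping=0.1, iterations=200):
    """Repeat the damped step a fixed number of times."""
    for _ in range(iterations):
        theta = dls_step(theta, lengths, target, damping)
    return theta
```

The damping term keeps the matrix invertible near singular poses (for example, a fully stretched arm), at the cost of slower convergence as the damping grows.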

for Failure Analysis of Chip Fabrication

- Automatically matches and aligns die image to design layout

- Aligns images of unknown scale, translation, rotation, and skew

- Handles a wide range of image magnification

- Works with images that show multiple layers of the chip

- Supports SEM, FIB, and Optical Microscope images

- Detects repeated patterns

- Is robust to fabrication defects and image noise

I was initially the sole developer of this software, and later led a small team that continued its development during my employment at Core Resolution, a Singapore-based software startup. ImageMapper solves the unusual problem of matching an image to a design layout (which is not an image but rather a set of polygons). I designed and implemented all the algorithms employed for this task. Since this is commercial software, there is no code or documentation of the algorithms that I can share publicly.

in Processing

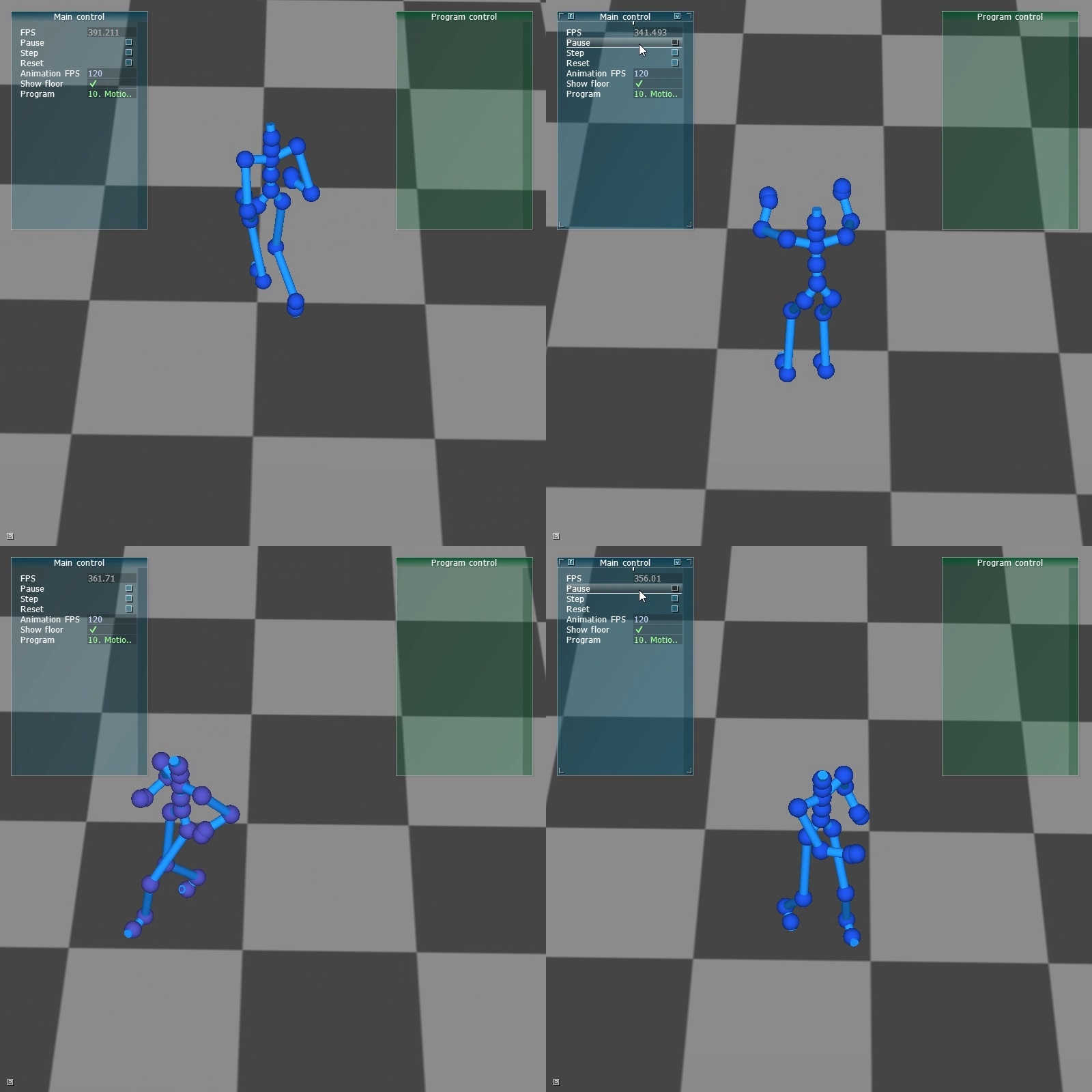

- Dam break. A column of water initially occupying half of the tank collapses, creating waves that travel back and forth from one side of the tank to the other.

- Water drop. A volume of water is initially placed in the air above a water bed. It then falls, creating some waves.

- Sink. A volume of water falls to the level below through a hole in the center.

- Wave. A tank initially contains some water. One of the walls moves back and forth periodically, sending large waves to the other side.

- Ball. A ball drops onto a volume of water. The ball then moves back and forth periodically, pushing the water around.

- A column of water is put into a U-shaped container. The water flows from one side to the other until equilibrium is reached.

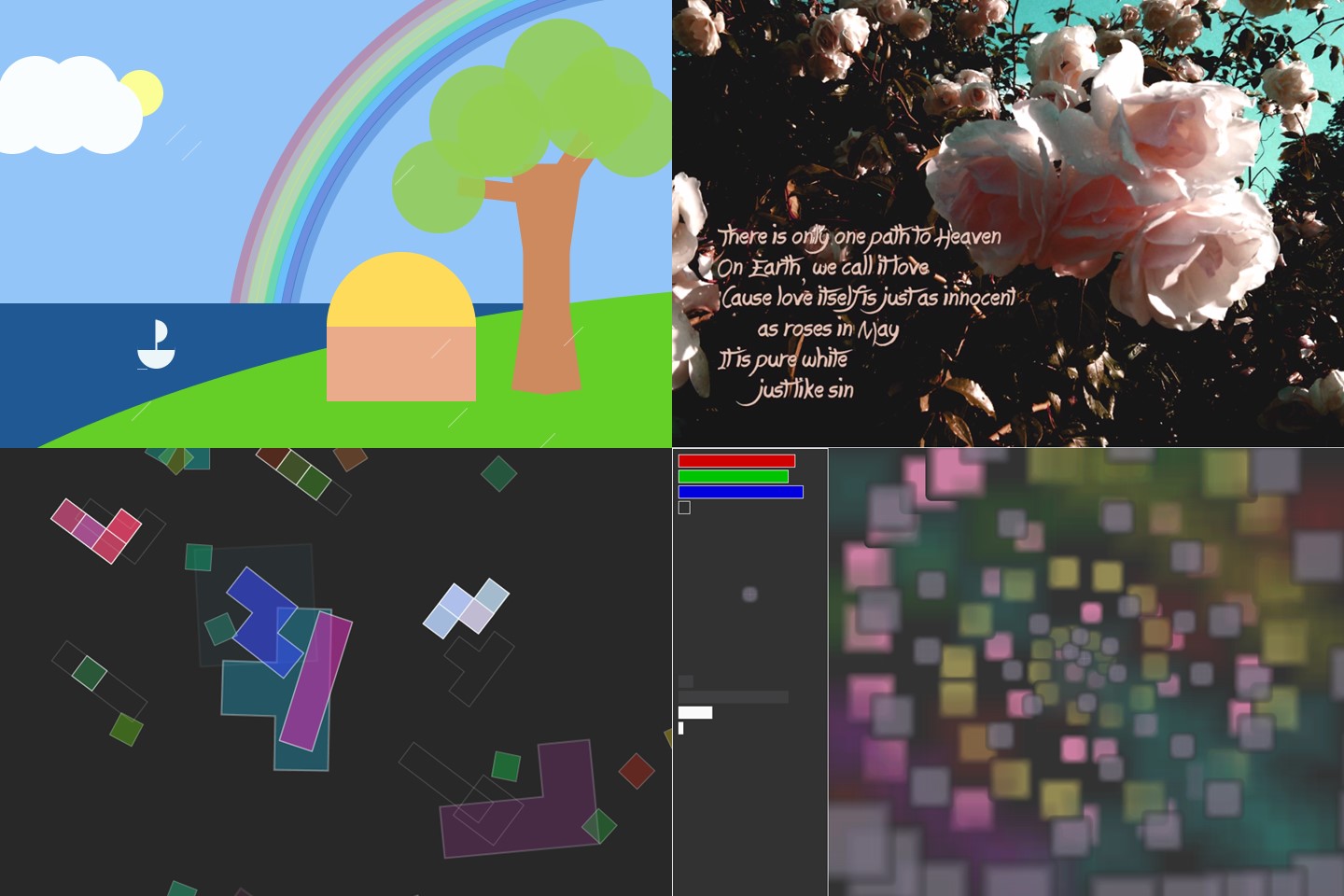

Computational Photography Papers

Flash/No flash: I implement the Flash/No-Flash technique, following the paper very closely. The only difference is in the calculation of the shadow mask. I use a simple heuristic: pixels that are in shadows caused by the flash have intensities below some threshold. Reference: Digital Photography with Flash and No-Flash Image Pairs, Georg Petschnigg, Richard Szeliski, Maneesh Agrawala, Michael Cohen, Hugues Hoppe, Kentaro Toyama, ACM Transactions on Graphics, Volume 23 Issue 3, 2004, Pages 664 - 672

Seam Carving: I implement the dynamic programming algorithm described in the seam carving paper. The implementation follows the paper exactly, except that I do not implement the part where users can edit the energy field. Image enlarging seems to be glossed over in the paper. My algorithm enlarges an image by selecting k seams out of the nk lowest-cost seams (n = 1, 2, 3, etc.). When tracing back the k seams, if any two of them “merge” at some stage, I push them further away from each other. This prevents the stretching effect that appears when the k seams are very close to one another. Reference: Seam Carving for Content-Aware Image Resizing, Shai Avidan, Ariel Shamir, ACM Transactions on Graphics, Volume 26 Issue 3, 2007, Article No. 10
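The dynamic programming step can be sketched as follows. This is a minimal Python illustration for a single vertical seam on a grayscale image stored as nested lists, not the original implementation; the function names and the clamped-border gradient energy are assumptions, and the k-seam enlarging scheme described above is not shown.

```python
def energy_map(gray):
    """Gradient energy |dI/dx| + |dI/dy|, with borders clamped."""
    h, w = len(gray), len(gray[0])
    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = gray[y][min(x + 1, w - 1)] - gray[y][max(x - 1, 0)]
            dy = gray[min(y + 1, h - 1)][x] - gray[max(y - 1, 0)][x]
            energy[y][x] = abs(dx) + abs(dy)
    return energy


def find_vertical_seam(energy):
    """Return, for each row, the column of the minimum-cost vertical seam."""
    h, w = len(energy), len(energy[0])
    cost = [row[:] for row in energy]
    # Forward pass: cumulative minimum cost from the top row down.
    for y in range(1, h):
        for x in range(w):
            lo, hi = max(x - 1, 0), min(x + 1, w - 1)
            cost[y][x] += min(cost[y - 1][lo:hi + 1])
    # Backward pass: start at the cheapest bottom pixel and trace upward.
    x = min(range(w), key=lambda i: cost[h - 1][i])
    seam = [x]
    for y in range(h - 1, 0, -1):
        lo, hi = max(x - 1, 0), min(x + 1, w - 1)
        x = min(range(lo, hi + 1), key=lambda i: cost[y - 1][i])
        seam.append(x)
    seam.reverse()
    return seam


def remove_vertical_seam(image, seam):
    """Delete one pixel per row along the seam."""
    return [row[:x] + row[x + 1:] for row, x in zip(image, seam)]
```

Shrinking an image by k columns would repeat `energy_map`, `find_vertical_seam`, and `remove_vertical_seam` k times.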

Focal Stacking: My focal stacking algorithm works by deblurring a weighted sum of the input image stack. For each pixel in each input image, a sharpness value is computed by summing the squared gradients in the x and y directions. My algorithm then sums all input images using the sharpness values as weights. The result resembles an image taken by a camera with a translating detector, as described in Hajime Nagahara’s paper. This image can then be deblurred with a PSF that has a similar shape to the one used in that paper (see the Results section). The Mask image is computed using the same weights, but with each input image’s pixel values replaced by that image’s position in the stack. Reference: Flexible Depth of Field Photography, Hajime Nagahara, Sujit Kuthirummal, Changyin Zhou, Shree K. Nayar, Proceedings of the 10th European Conference on Computer Vision (ECCV '08): Part IV, Pages 60 - 73
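The sharpness-weighted sum and the Mask image can be sketched as below. This is an illustrative Python version on grayscale images stored as nested lists; the central-difference gradient, the normalization by the total weight, and the small `eps` that avoids division by zero in flat regions are assumptions, and the deblurring step is omitted.

```python
def sharpness(img):
    """Per-pixel sharpness: squared x gradient plus squared y gradient."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            out[y][x] = gx * gx + gy * gy
    return out


def blend_stack(stack, eps=1e-6):
    """Return (fused, mask): the weighted image and the weighted stack index."""
    h, w = len(stack[0]), len(stack[0][0])
    weights = [sharpness(img) for img in stack]
    fused = [[0.0] * w for _ in range(h)]
    mask = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # eps keeps the weights positive where every image is flat.
            ws = [wt[y][x] + eps for wt in weights]
            total = sum(ws)
            fused[y][x] = sum(wi * img[y][x] for wi, img in zip(ws, stack)) / total
            # Same weights, but the "pixel value" is the image's stack position.
            mask[y][x] = sum(wi * i for i, wi in enumerate(ws)) / total
    return fused, mask
```

Where one image is much sharper than the others, `mask` approaches that image's index, so it can be read as a rough depth-from-focus map.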

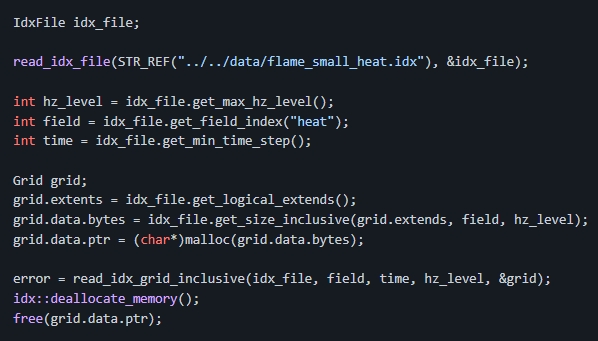

Tone Mapping: I implement four different tone mapping methods. The first is a linear map. The second is Erik Reinhard’s log-average method, based on equations (1) and (2) in his paper. The third is Reinhard’s global operator (equation (3)). The fourth is Reinhard’s dodge-and-burn method (equations (5), (6), (7), and (8)). All methods except the first follow the paper exactly. Reference: Photographic Tone Reproduction for Digital Images, Erik Reinhard, Michael Stark, Peter Shirley, James Ferwerda, ACM Transactions on Graphics, Volume 21 Issue 3, 2002, Pages 267 - 276
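For reference, the log-average scaling and the global operator can be sketched in a few lines of Python. The sketch operates on a luminance image stored as nested lists; the function names, the key value of 0.18, and the small `delta` inside the logarithm are illustrative choices, and the dodge-and-burn operator is not shown.

```python
import math


def log_average(lum, delta=1e-6):
    """Log-average luminance: exp of the mean of log(delta + L)."""
    count = sum(len(row) for row in lum)
    total = sum(math.log(delta + v) for row in lum for v in row)
    return math.exp(total / count)


def scale_luminance(lum, key=0.18, delta=1e-6):
    """Scale luminance so that the log-average maps to the chosen key."""
    scale = key / log_average(lum, delta)
    return [[scale * v for v in row] for row in lum]


def reinhard_global(lum, key=0.18):
    """Global operator L / (1 + L), applied to the scaled luminance."""
    scaled = scale_luminance(lum, key)
    return [[v / (1.0 + v) for v in row] for row in scaled]
```

The global operator compresses high luminances toward 1 while leaving low luminances nearly linear, so every output value lies in [0, 1).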

- GLSL vertex and fragment shader to perform procedural bump mapping and reflection mapping

- GLSL fragment shader to compute the inclusive all-prefix-sums (inclusive scan) of an input array on the GPU

- CUDA kernels to perform discrete convolution

- CUDA kernels to compute a sorted version of an input integer array with all the duplicate elements removed, using stream compaction and the scan-and-scatter approach
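The scan and scan-and-scatter items above can be illustrated on the CPU. The Python sketch below mimics a data-parallel inclusive scan in which each pass reads only the previous pass's buffer (the Hillis-Steele pattern, which is an assumption about how the shader works), then uses the scan to remove duplicates from a sorted array. It is not the original GLSL or CUDA code, and the function names are hypothetical.

```python
def inclusive_scan(values):
    """Inclusive prefix sum in about log2(n) passes (Hillis-Steele pattern)."""
    data = list(values)
    offset = 1
    while offset < len(data):
        # Every output reads only the previous buffer, like a ping-pong pass.
        data = [data[i] + data[i - offset] if i >= offset else data[i]
                for i in range(len(data))]
        offset *= 2
    return data


def unique_sorted(values):
    """Sort, flag the first element of each run, scan the flags, then scatter."""
    ordered = sorted(values)
    if not ordered:
        return []
    # flag[i] = 1 when ordered[i] differs from its left neighbor.
    flags = [1] + [1 if ordered[i] != ordered[i - 1] else 0
                   for i in range(1, len(ordered))]
    positions = inclusive_scan(flags)
    out = [None] * positions[-1]
    for i, keep in enumerate(flags):
        if keep:
            # For a kept element, the inclusive scan minus one is its output slot.
            out[positions[i] - 1] = ordered[i]
    return out
```

For example, `unique_sorted([5, 3, 5, 1, 3, 3, 9])` returns `[1, 3, 5, 9]`. On a GPU the flagging and scatter loops would each be one thread per element, since every write goes to a distinct slot.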