SCI Publications

2016

U. Rüde, K. Willcox, L. C. McInnes, H. De Sterck, G. Biros, H. Bungartz, J. Corones, E. Cramer, J. Crowley, O. Ghattas, M. Gunzburger, M. Hanke, R. Harrison, M. Heroux, J. Hesthaven, P. Jimack, C. Johnson, K. E. Jordan, D. E. Keyes, R. Krause, V. Kumar, S. Mayer, J. Meza, K. M. Mørken, J. T. Oden, L. Petzold, P. Raghavan, S. M. Shontz, A. Trefethen, P. Turner, V. Voevodin, B. Wohlmuth, C. S. Woodward.

“Research and Education in Computational Science and Engineering,” Subtitled “Report from a workshop sponsored by the Society for Industrial and Applied Mathematics (SIAM) and the European Exascale Software Initiative (EESI-2),” Aug, 2016.

Over the past two decades the field of computational science and engineering (CSE) has penetrated both basic and applied research in academia, industry, and laboratories to advance discovery, optimize systems, support decision-makers, and educate the scientific and engineering workforce. Informed by centuries of theory and experiment, CSE performs computational experiments to answer questions that neither theory nor experiment alone is equipped to answer. CSE provides scientists and engineers of all persuasions with algorithmic inventions and software systems that transcend disciplines and scales. Carried on a wave of digital technology, CSE brings the power of parallelism to bear on troves of data. Mathematics-based advanced computing has become a prevalent means of discovery and innovation in essentially all areas of science, engineering, technology, and society; and the CSE community is at the core of this transformation. However, a combination of disruptive developments---including the architectural complexity of extreme-scale computing, the data revolution that engulfs the planet, and the specialization required to follow the applications to new frontiers---is redefining the scope and reach of the CSE endeavor. This report describes the rapid expansion of CSE and the challenges to sustaining its bold advances. The report also presents strategies and directions for CSE research and education for the next decade.

M. Sajjadi, S.M. Seyedhosseini, T. Tasdizen.

“Disjunctive Normal Networks,” In Neurocomputing, Vol. 218, Elsevier BV, pp. 276--285. Dec, 2016.

DOI: 10.1016/j.neucom.2016.08.047

Artificial neural networks are powerful pattern classifiers. They form the basis of the highly successful and popular convolutional networks, which offer state-of-the-art performance on several computer vision tasks. However, in many general and non-vision tasks, neural networks are surpassed by methods such as support vector machines and random forests, which are also easier to use and faster to train. One reason is that the backpropagation algorithm used to train artificial neural networks usually starts from a random weight initialization, which complicates the optimization process, leading to long training times and an increased risk of stopping in a poor local minimum. Several initialization schemes and pre-training methods have been proposed to improve the efficiency and performance of training a neural network. However, this problem arises from the architecture of neural networks itself. We use the disjunctive normal form and approximate the Boolean conjunction operations with products to construct a novel network architecture. The proposed model can be trained by minimizing an error function, and it allows an effective and intuitive initialization that avoids poor local minima. We show that the proposed structure provides efficient coverage of the decision space, which leads to state-of-the-art classification accuracy and fast training times.
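As a rough illustration of the idea, the sketch below evaluates a soft disjunctive normal form: each conjunction is approximated by a product of sigmoid half-spaces, as the abstract describes, and the disjunction is taken as one minus the product of complements (De Morgan's law). The disjunction form, the parameter layout, and the function names are assumptions made for this sketch, not the paper's exact formulation; training by minimizing an error function is omitted.

```python
import math
from typing import Sequence

def sigmoid(z: float) -> float:
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dnn_output(x: Sequence[float],
               weights: Sequence[Sequence[Sequence[float]]],
               biases: Sequence[Sequence[float]]) -> float:
    """Soft disjunctive normal form: OR over conjunctions of sigmoid half-spaces.

    weights[i][j] and biases[i][j] define half-space j of conjunction i
    (a hypothetical parameter layout chosen for this sketch).
    """
    none_true = 1.0  # running product of (1 - conjunction_i)
    for group_w, group_b in zip(weights, biases):
        conj = 1.0  # product of sigmoids: a differentiable stand-in for AND
        for w, b in zip(group_w, group_b):
            z = sum(wk * xk for wk, xk in zip(w, x)) + b
            conj *= sigmoid(z)
        none_true *= 1.0 - conj
    # De Morgan: OR(c_1, ..., c_N) = 1 - prod_i (1 - c_i)
    return 1.0 - none_true
```

For example, one conjunction of the half-spaces x > 0 and y > 0 plus a second conjunction of x < 0 and y < 0 (steep weights such as ±10, zero biases) yields outputs near 1 in the first and third quadrants and near 0 for points well inside the other two, a non-convex region that a single conjunction cannot express.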

M. Sajjadi, M. Javanmardi, T. Tasdizen.

“Regularization With Stochastic Transformations and Perturbations for Deep Semi-Supervised Learning,” In CoRR, Vol. abs/1606.04586, 2016.

Effective convolutional neural networks are trained on large sets of labeled data. However, creating large labeled datasets is a very costly and time-consuming task. Semi-supervised learning uses unlabeled data to train a model with higher accuracy when there is a limited set of labeled data available. In this paper, we consider the problem of semi-supervised learning with convolutional neural networks. Techniques such as randomized data augmentation, dropout and random max-pooling provide better generalization and stability for classifiers that are trained using gradient descent. Multiple passes of an individual sample through the network might lead to different predictions due to the non-deterministic behavior of these techniques. We propose an unsupervised loss function that takes advantage of the stochastic nature of these methods and minimizes the difference between the predictions of multiple passes of a training sample through the network. We evaluate the proposed method on several benchmark datasets.
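The unsupervised term described above can be sketched as a sum of squared differences over every pair of predictions obtained from repeated stochastic passes of one training sample. The pairwise squared-L2 form and the function name are assumptions for illustration; in practice the term would be computed inside a deep learning framework so it can be differentiated and added to the supervised loss.

```python
from itertools import combinations
from typing import Sequence

def stability_loss(passes: Sequence[Sequence[float]]) -> float:
    """Sum of squared L2 distances between every pair of predictions.

    passes[j] is the prediction vector from the j-th stochastic pass
    (a different augmentation/dropout/max-pooling draw) of the same sample.
    No label is needed, so the term can be applied to unlabeled data.
    """
    total = 0.0
    for p, q in combinations(passes, 2):
        total += sum((a - b) ** 2 for a, b in zip(p, q))
    return total
```

The loss is zero only when all passes agree, so minimizing it pushes the network toward predictions that are stable under the random transformations and perturbations.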

M. Sajjadi, M. Javanmardi, T. Tasdizen.

“Mutual exclusivity loss for semi-supervised deep learning,” In 2016 IEEE International Conference on Image Processing (ICIP), IEEE, September, 2016.

In this paper we consider the problem of semi-supervised learning with deep Convolutional Neural Networks (ConvNets). Semi-supervised learning is motivated by the observation that unlabeled data is cheap and can be used to improve the accuracy of classifiers. In this paper we propose an unsupervised regularization term that explicitly forces the classifier's predictions for multiple classes to be mutually exclusive and effectively guides the decision boundary to lie in the low-density space between the manifolds corresponding to different classes of data. Our proposed approach is general and can be used with any backpropagation-based learning method. We show through several experiments that our method can improve the object recognition performance of ConvNets using unlabeled data.
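One way to write a term that rewards mutually exclusive predictions, assuming per-class scores f_k(x) in [0, 1] (for example sigmoid outputs), is l(x) = -sum_k f_k(x) prod_{l != k} (1 - f_l(x)). The sketch below evaluates that expression; treat it as an illustrative form consistent with the abstract rather than the paper's definitive loss. Because the expression is linear in each score, its smallest value, -1, is reached at one-hot score vectors, while vectors with several high scores (or none) score worse.

```python
import math
from typing import Sequence

def mutual_exclusivity_loss(scores: Sequence[float]) -> float:
    """-sum_k f_k * prod_{l != k} (1 - f_l) for per-class scores in [0, 1].

    Lower is better: exactly one confident class gives -1, while several
    confident classes (or none) push the value toward 0.
    """
    total = 0.0
    for k, fk in enumerate(scores):
        # Probability-like weight that every other class is "off".
        others_off = math.prod(1.0 - fl for l, fl in enumerate(scores) if l != k)
        total += fk * others_off
    return -total
```

Since it uses no labels, such a term can be evaluated on unlabeled images and added to the usual supervised loss.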

S.M. Seyedhosseini, T. Tasdizen.

“Semantic Image Segmentation with Contextual Hierarchical Models,” In IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 38, No. 5, IEEE, pp. 951--964. May, 2016.

DOI: 10.1109/tpami.2015.2473846

Semantic segmentation is the problem of assigning an object label to each pixel. It unifies the image segmentation and object recognition problems. The importance of using contextual information in semantic segmentation frameworks is widely recognized in the field. We propose a contextual framework, called the contextual hierarchical model (CHM), which learns contextual information in a hierarchical framework for semantic segmentation. At each level of the hierarchy, a classifier is trained based on downsampled input images and the outputs of previous levels. Our model then incorporates the resulting multi-resolution contextual information into a classifier to segment the input image at its original resolution. This training strategy allows for optimization of a joint posterior probability at multiple resolutions through the hierarchy. The contextual hierarchical model is based purely on input image patches and does not make use of any fragments or shape examples. Hence, it is applicable to a variety of problems, such as object segmentation and edge detection. We demonstrate that CHM performs on par with the state of the art on the Stanford background and Weizmann horse datasets. It also outperforms state-of-the-art edge detection methods on the NYU depth dataset and achieves state-of-the-art performance on the Berkeley segmentation dataset (BSDS 500).

H. De Sterck, C. Johnson, L. C. McInnes.

“Special Section on Two Themes: CSE Software and Big Data in CSE,” In SIAM J. Sci. Comput, Vol. 38, No. 5, SIAM, pp. S1--S2. 2016.

The 2015 SIAM Conference on Computational Science and Engineering (CSE) was held March 14-18, 2015, in Salt Lake City, Utah. The SIAM Journal on Scientific Computing (SISC) created this special section in association with the CSE15 conference. The special section focuses on two topics that are of significant current interest to CSE researchers: CSE software and big data in CSE.

Read More: http://epubs.siam.org/doi/abs/10.1137/16N974188

D. Sunderland, B. Peterson, J. Schmidt, A. Humphrey, J. Thornock, M. Berzins.

“An Overview of Performance Portability in the Uintah Runtime System Through the Use of Kokkos,” In Proceedings of the Second International Workshop on Extreme Scale Programming Models and Middleware, Salt Lake City, Utah, ESPM2, IEEE Press, Piscataway, NJ, USA pp. 44--47. 2016.

ISBN: 978-1-5090-3858-9

DOI: 10.1109/ESPM2.2016.10

The current diversity in nodal parallel computer architectures is seen in machines based upon multicore CPUs, GPUs, and Intel Xeon Phi processors. A class of approaches for enabling scalability of complex applications on such architectures is based upon Asynchronous Many Task software architectures such as that in the Uintah framework used for the parallel solution of solid and fluid mechanics problems. Uintah has both an applications layer with its own programming model and a separate runtime system. While Uintah scales well today, it is necessary to address nodal performance portability in order for it to continue to do so. Incrementally modifying Uintah to use the Kokkos performance portability library through prototyping experiments improves kernel performance by more than a factor of two.

D. Sunderland, B. Peterson, J. Schmidt, A. Humphrey, J. Thornock, M. Berzins.

“An Overview of Performance Portability in the Uintah Runtime System through the Use of Kokkos,” In 2016 Second International Workshop on Extreme Scale Programming Models and Middleware (ESPM2), IEEE, Nov, 2016.

DOI: 10.1109/espm2.2016.012

X. Tong, J. Edwards, C. Chen, H. Shen, C. R. Johnson, P. Wong.

“View-Dependent Streamline Deformation and Exploration,” In Transactions on Visualization and Computer Graphics, Vol. 22, No. 7, IEEE, pp. 1788--1801. July, 2016.

ISSN: 1077-2626

DOI: 10.1109/tvcg.2015.2502583

Occlusion presents a major challenge in visualizing 3D flow and tensor fields using streamlines. Displaying too many streamlines creates a dense visualization filled with occluded structures, but displaying too few streamlines risks losing important features. We propose a new streamline exploration approach that visually manipulates cluttered streamlines, pulling visible layers apart to reveal the hidden structures underneath. This paper presents a customized view-dependent deformation algorithm and an interactive visualization tool to minimize visual clutter in 3D vector and tensor fields. The algorithm maintains the overall integrity of the fields and exposes previously hidden structures. Our system supports both mouse and direct-touch interactions to manipulate the viewing perspectives and visualize the streamlines in depth. By using a lens metaphor of different shapes to interactively select the transition zone of the targeted area, users can move their focus and examine the vector or tensor field freely.

Keywords: Context; Deformable models; Lenses; Shape; Streaming media; Three-dimensional displays; Visualization; Flow visualization; deformation; focus+context; occlusion; streamline; white matter tracts

W. Usher, I. Wald, A. Knoll, M. Papka, V. Pascucci.

“In Situ Exploration of Particle Simulations with CPU Ray Tracing,” In Supercomputing Frontiers and Innovations, Vol. 3, No. 4, 2016.

ISSN: 2313-8734

DOI: 10.14529/jsfi160401

We present a system for interactive in situ visualization of large particle simulations, suitable for general CPU-based HPC architectures. As simulations grow in scale, in situ methods are needed to alleviate I/O bottlenecks and visualize data at full spatio-temporal resolution. We use a lightweight, loosely coupled layer that serves distributed data from the simulation to a data-parallel renderer running in separate processes. Leveraging the OSPRay ray tracing framework for visualization and balanced P-k-d trees, we can render simulation data in real time as it arrives, with negligible memory overhead. This flexible solution allows users to perform exploratory in situ visualization on the same computational resources as the simulation code, on dedicated visualization clusters, or on remote workstations, via a standalone rendering client that can be connected or disconnected as needed. We evaluate this system on simulations with up to 227M particles in the LAMMPS and Uintah computational frameworks, and show that our approach provides many of the advantages of tightly coupled systems, with the flexibility to render on a wide variety of remote and co-processing resources.
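The abstract relies on balanced P-k-d trees. As a rough illustration of why a balanced k-d tree over particles can add little memory, the sketch below builds a generic balanced k-d tree implicitly by reordering the particle array in place (no node objects or pointers) and answers axis-aligned box queries on it. This simplified median-split layout and the function names are assumptions written for illustration; it is not the P-k-d layout or the OSPRay implementation used in the paper.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

def build_kd(points: List[Point], lo: int = 0, hi: Optional[int] = None,
             depth: int = 0) -> None:
    """Reorder points[lo:hi] in place into an implicit balanced k-d tree.

    The middle element of every subrange is the median along axis
    depth % 3; the subranges on either side are its two subtrees.
    """
    if hi is None:
        hi = len(points)
    if hi - lo <= 1:
        return
    axis = depth % 3
    # A full sort is simpler than linear-time selection; fine for a sketch.
    points[lo:hi] = sorted(points[lo:hi], key=lambda p: p[axis])
    mid = (lo + hi) // 2
    build_kd(points, lo, mid, depth + 1)
    build_kd(points, mid + 1, hi, depth + 1)

def count_in_box(points: Sequence[Point], box_lo: Point, box_hi: Point,
                 lo: int = 0, hi: Optional[int] = None, depth: int = 0) -> int:
    """Count points inside [box_lo, box_hi], skipping subtrees the box misses."""
    if hi is None:
        hi = len(points)
    if lo >= hi:
        return 0
    axis = depth % 3
    mid = (lo + hi) // 2
    p = points[mid]
    count = int(all(box_lo[a] <= p[a] <= box_hi[a] for a in range(3)))
    if box_lo[axis] <= p[axis]:  # left subtree holds coordinates <= p[axis]
        count += count_in_box(points, box_lo, box_hi, lo, mid, depth + 1)
    if p[axis] <= box_hi[axis]:  # right subtree holds coordinates >= p[axis]
        count += count_in_box(points, box_lo, box_hi, mid + 1, hi, depth + 1)
    return count
```

Because the tree structure is encoded entirely by the order of the particles and the index arithmetic `(lo + hi) // 2`, the only storage is the particle array itself; a renderer would traverse the same implicit structure to find particles along a ray instead of inside a box.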

E. Wong, S. Palande, Bei Wang, B. Zielinski, J. Anderson, P. T. Fletcher.

“Kernel Partial Least Squares Regression for Relating Functional Brain Network Topology to Clinical Measures of Behavior,” In 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), IEEE, April, 2016.

DOI: 10.1109/isbi.2016.7493506

In this paper we present a novel method for analyzing the relationship between functional brain networks and behavioral phenotypes. Drawing from topological data analysis, we first extract topological features using persistent homology from functional brain networks that are derived from correlations in resting-state fMRI. Rather than fixing a discrete network topology by thresholding the connectivity matrix, these topological features capture the network organization across all continuous threshold values. We then propose to use a kernel partial least squares (kPLS) regression to statistically quantify the relationship between these topological features and behavior measures. The kPLS also provides an elegant way to combine multiple image features by using linear combinations of multiple kernels. In our experiments we test the ability of our proposed brain network analysis to predict autism severity from rs-fMRI. We show that combining correlations with topological features gives better prediction of autism severity than using correlations alone.
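The last point, combining multiple image features through a linear combination of kernels, can be sketched in a few lines. This covers only the kernel-combination step (the kPLS regression itself is not shown); the RBF kernel, the `gamma` values, the weights, and the function names are illustrative assumptions, not the paper's settings. Restricting the weights to be nonnegative keeps the combined matrix positive semidefinite, so it remains a valid kernel.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]

def rbf_gram(features: Sequence[Sequence[float]], gamma: float) -> Matrix:
    """Gram matrix K[i][j] = exp(-gamma * ||x_i - x_j||^2) for one feature type."""
    n = len(features)
    return [[math.exp(-gamma * sum((a - b) ** 2
                                   for a, b in zip(features[i], features[j])))
             for j in range(n)]
            for i in range(n)]

def combine_kernels(grams: Sequence[Matrix], weights: Sequence[float]) -> Matrix:
    """Nonnegative linear combination sum_m w_m * K_m of same-size Gram matrices."""
    if any(w < 0 for w in weights):
        raise ValueError("weights must be nonnegative to keep the kernel valid")
    n = len(grams[0])
    return [[sum(w * K[i][j] for w, K in zip(weights, grams)) for j in range(n)]
            for i in range(n)]
```

For instance, one Gram matrix could be built from connectivity-correlation features and another from topological summaries of the same subjects, and their weighted sum passed to a kernel regression method.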

2015

K.K. Aras, W. Good, J. Tate, B.M. Burton, D.H. Brooks, J. Coll-Font, O. Doessel, W. Schulze, D. Patyogaylo, L. Wang, P. Van Dam, R.S. MacLeod.

“Experimental Data and Geometric Analysis Repository: EDGAR,” In Journal of Electrocardiology, 2015.

Introduction

The "Experimental Data and Geometric Analysis Repository" (EDGAR) is an Internet-based archive of curated data that are freely distributed to the international research community for the application and validation of electrocardiographic imaging (ECGI) techniques. The EDGAR project is a collaborative effort by the Consortium for ECG Imaging (CEI, ecg-imaging.org) and is focused on two specific aims: first, to host an online repository that provides access to a wide spectrum of data, and second, to provide a standard information format for the exchange of these diverse datasets.

Methods

The EDGAR system is composed of two interrelated components: 1) a metadata model, which includes a set of descriptive parameters and information, time signals from both the cardiac source and the body surface, and extensive geometric information, including images, geometric models, and measurement locations used during data acquisition/generation; and 2) a web interface that provides efficient search, browsing, and retrieval of data from the repository.

Results

An aggregation of experimental, clinical and simulation data from various centers is being made available through the EDGAR project including experimental data from animal studies provided by the University of Utah (USA), clinical data from multiple human subjects provided by the Charles University Hospital (Czech Republic), and computer simulation data provided by the Karlsruhe Institute of Technology (Germany).

Conclusions

It is our hope that EDGAR will serve as a communal forum for sharing and distribution of cardiac electrophysiology data and geometric models for use in ECGI research.

I. Arganda-Carreras, S.C. Turaga, D.R. Berger, D. Ciresan, A. Giusti, L.M. Gambardella, J. Schmidhuber, D. Laptev, S. Dwivedi, J. Buhmann, T. Liu, M. Seyedhosseini, T. Tasdizen, L. Kamentsky, R. Burget, V. Uher, X. Tan, C. Sun, T.D. Pham, E. Bas, M.G. Uzunbas, A. Cardona, J. Schindelin, H.S. Seung.

“Crowdsourcing the creation of image segmentation algorithms for connectomics,” In Frontiers in Neuroanatomy, Vol. 9, Frontiers Media SA, Nov, 2015.

DOI: 10.3389/fnana.2015.00142

To stimulate progress in automating the reconstruction of neural circuits, we organized the first international challenge on 2D segmentation of electron microscopic (EM) images of the brain. Participants submitted boundary maps predicted for a test set of images, and were scored based on their agreement with a consensus of human expert annotations. The winning team had no prior experience with EM images, and employed a convolutional network. This "deep learning" approach has since become accepted as a standard for segmentation of EM images. The challenge has continued to accept submissions, and the best so far has resulted from cooperation between two teams. The challenge has probably saturated, as algorithms cannot progress beyond limits set by ambiguities inherent in 2D scoring and the size of the test dataset. Retrospective evaluation of the challenge scoring system reveals that it was not sufficiently robust to variations in the widths of neurite borders. We propose a solution to this problem, which should be useful for a future 3D segmentation challenge.

J. Bennett, F. Vivodtzev, V. Pascucci (Eds.).

“Topological and Statistical Methods for Complex Data,” Subtitled “Tackling Large-Scale, High-Dimensional, and Multivariate Data Spaces,” Mathematics and Visualization, Springer Berlin Heidelberg, 2015.

ISBN: 978-3-662-44899-1

This book contains papers presented at the Workshop on the Analysis of Large-scale, High-Dimensional, and Multi-Variate Data Using Topology and Statistics, held in Le Barp, France, June 2013. It features the work of some of the most prominent and recognized leaders in the field who examine challenges as well as detail solutions to the analysis of extreme scale data.

The book presents new methods that leverage the mutual strengths of both topological and statistical techniques to support the management, analysis, and visualization of complex data. It covers both theory and application and provides readers with an overview of important key concepts and the latest research trends.

Coverage in the book includes multi-variate and/or high-dimensional analysis techniques, feature-based statistical methods, combinatorial algorithms, scalable statistics algorithms, scalar and vector field topology, and multi-scale representations. In addition, the book details algorithms that are broadly applicable and can be used by application scientists to glean insight from a wide range of complex data sets.

J. Bennett, R. Clay, G. Baker, M. Gamell, D. Hollman, S. Knight, H. Kolla, G. Sjaardema, N. Slattengren, K. Teranishi, J. Wilke, M. Bettencourt, S. Bova, K. Franko, P. Lin, R. Grant, S. Hammond, S. Olivier.

“ASC ATDM Level 2 Milestone #5325,” Subtitled “Asynchronous Many-Task Runtime System Analysis and Assessment for Next Generation Platforms,” Note: Sandia Report, 2015.

This report provides in-depth information and analysis to help create a technical road map for developing next-generation programming models and runtime systems that support Advanced Simulation and Computing (ASC) workload requirements. The focus herein is on asynchronous many-task (AMT) models and runtime systems, which are of great interest in the context of "exascale" computing, as they hold the promise to address key issues associated with future extreme-scale computer architectures. This report includes a thorough qualitative and quantitative examination of three best-of-class AMT runtime systems (Charm++, Legion, and Uintah), all of which are in use as part of the ASC Predictive Science Academic Alliance Program II (PSAAP-II) Centers. The studies focus on each runtime's programmability, performance, and mutability. Through the experiments and analysis presented, several overarching findings emerge. From a performance perspective, AMT runtimes show tremendous potential for addressing extreme-scale challenges. Empirical studies show that an AMT runtime can mitigate performance heterogeneity inherent to the machine itself and that Message Passing Interface (MPI) and AMT runtimes perform comparably under balanced conditions. From a programmability and mutability perspective, however, none of the runtimes in this study is currently ready for use in developing production-ready Sandia ASC applications. The report concludes by recommending a co-design path forward, wherein application, programming model, and runtime system developers work together to define requirements and solutions. Such a requirements-driven co-design approach benefits the high-performance computing (HPC) community as a whole, with widespread community engagement mitigating risk for both application developers and runtime system developers.

J. Bennett, R. Clay, G. Baker, M. Gamell, D. Hollman, S. Knight, H. Kolla, G. Sjaardema, N. Slattengren, K. Teranishi, J. Wilke, M. Bettencourt, S. Bova, K. Franko, P. Lin, R. Grant, S. Hammond, S. Olivier, L. Kale, N. Jain, E. Mikida, A. Aiken, M. Bauer, W. Lee, E. Slaughter, S. Treichler, M. Berzins, T. Harman, A. Humphrey, J. Schmidt, D. Sunderland, P. McCormick, S. Gutierrez, M. Schulz, A. Bhatele, D. Boehme, P. Bremer, T. Gamblin.

“ASC ATDM level 2 milestone #5325: Asynchronous many-task runtime system analysis and assessment for next generation platforms,” Sandia National Laboratories, 2015.

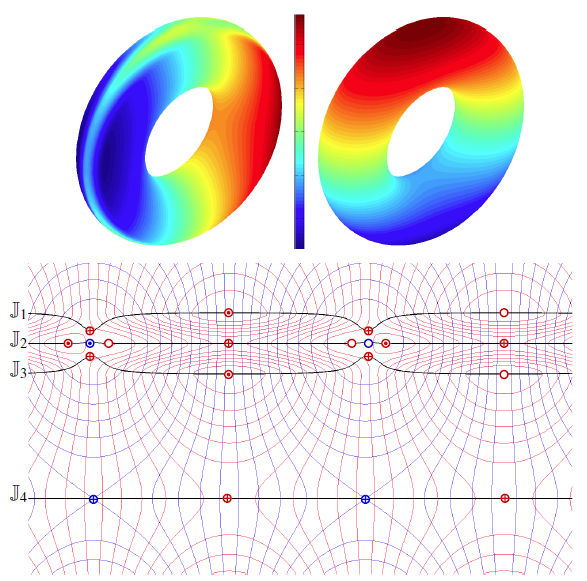

H. Bhatia, Bei Wang, G. Norgard, V. Pascucci, P. T. Bremer.

“Local, Smooth, and Consistent Jacobi Set Simplification,” In Computational Geometry, Vol. 48, No. 4, Elsevier, pp. 311--332. May, 2015.

DOI: 10.1016/j.comgeo.2014.10.009

The relation between two Morse functions defined on a smooth, compact, and orientable 2-manifold can be studied in terms of their Jacobi set. The Jacobi set contains points in the domain where the gradients of the two functions are aligned. Both the Jacobi set itself and the segmentation of the domain it induces have been shown to be useful in various applications. In practice, unfortunately, functions often contain noise and discretization artifacts, causing their Jacobi set to become unmanageably large and complex. Although techniques exist to simplify Jacobi sets, they are unsuitable for most applications because they lack fine-grained control over the process and heavily restrict the type of simplifications possible.

This paper introduces the theoretical foundations of a new simplification framework for Jacobi sets. We present a new interpretation of Jacobi set simplification based on the perspective of domain segmentation. Generalizing the cancellation of critical points from scalar functions to Jacobi sets, we focus on simplifications that can be realized by smooth approximations of the corresponding functions, and show how these cancellations imply simultaneous simplification of contiguous subsets of the Jacobi set. Using these extended cancellations as atomic operations, we introduce an algorithm to successively cancel subsets of the Jacobi set with minimal modifications as measured by a user-defined metric. We show that for simply connected domains, our algorithm reduces a given Jacobi set to its minimal configuration, that is, one with no birth-death points (a birth-death point is a specific type of singularity within the Jacobi set where the level sets of the two functions and the Jacobi set have a common normal direction).
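For readers unfamiliar with Jacobi sets, the alignment condition above can be written out explicitly. The block below uses the standard definition (the two gradients are linearly dependent), stated in local coordinates $(u, v)$ on the 2-manifold $\mathbb{M}$; the notation is ours and is not taken from the paper.

$$
\mathbb{J}(f, g) = \{\, x \in \mathbb{M} : \nabla f(x) \text{ and } \nabla g(x) \text{ are linearly dependent} \,\}
= \left\{\, x \in \mathbb{M} : \det\begin{pmatrix} \partial_u f & \partial_v f \\ \partial_u g & \partial_v g \end{pmatrix}(x) = \partial_u f\,\partial_v g - \partial_v f\,\partial_u g = 0 \,\right\}
$$

Equivalently, $\mathbb{J}(f, g)$ consists of the critical points of $g$ together with the critical points of $f$ restricted to the level sets of $g$; noise in $f$ or $g$ creates many spurious zero crossings of this determinant, which is why the Jacobi set of real data becomes large and complex.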

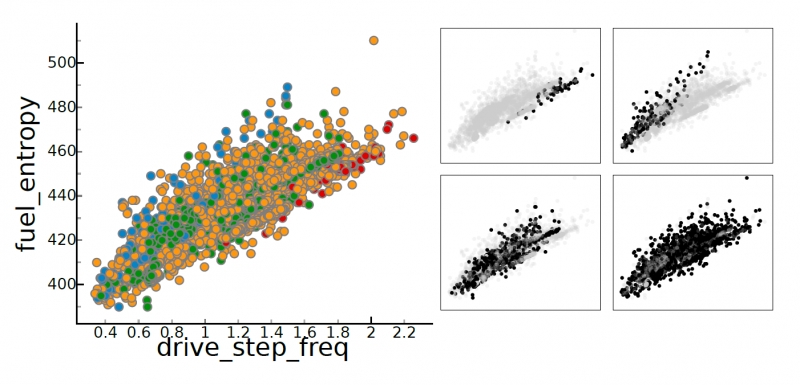

P. T. Bremer, D. Maljovec, A. Saha, Bei Wang, J. Gaffney, B. K. Spears, V. Pascucci.

“ND2AV: N-Dimensional Data Analysis and Visualization -- Analysis for the National Ignition Campaign,” In Computing and Visualization in Science, 2015.

One of the biggest challenges in high-energy physics is to analyze a complex mix of experimental and simulation data to gain new insights into the underlying physics. Currently, this analysis relies primarily on the intuition of trained experts, often using nothing more sophisticated than default scatter plots. Many advanced analysis techniques are not easily accessible to scientists and not flexible enough to explore the potentially interesting hypotheses in an intuitive manner. Furthermore, results from individual techniques are often difficult to integrate, leading to a confusing patchwork of analysis snippets too cumbersome for data exploration. This paper presents a case study on how a combination of techniques from statistics, machine learning, topology, and visualization can have a significant impact in the field of inertial confinement fusion. We present the ND2AV: N-Dimensional Data Analysis and Visualization framework, a user-friendly tool aimed at exploiting the intuition and current workflow of the target users. The system integrates traditional analysis approaches such as dimension reduction and clustering with state-of-the-art techniques such as neighborhood graphs and topological analysis, and custom capabilities such as defining combined metrics on the fly. All components are linked into an interactive environment that enables an intuitive exploration of a wide variety of hypotheses while relating the results to concepts familiar to the users, such as scatter plots. ND2AV uses a modular design providing easy extensibility and customization for different applications. ND2AV is being actively used in the National Ignition Campaign and has already led to a number of unexpected discoveries.

T. Bregman, R. Reznikov, M. Diwan, R. Raymond, C. R. Butson, J. N. Nobrega, C. Hamani.

“Antidepressant-like Effects of Medial Forebrain Bundle Deep Brain Stimulation in Rats are not Associated With Accumbens Dopamine Release,” In Brain Stimulation, Vol. 8, No. 4, pp. 708--713. 2015.

BACKGROUND:

Medial forebrain bundle (MFB) deep brain stimulation (DBS) is currently being investigated in patients with treatment-resistant depression. Striking features of this therapy are the large number of patients who respond to treatment and the rapid nature of the antidepressant response.

OBJECTIVE:

To study antidepressant-like behavioral responses, changes in regional brain activity, and monoamine release in rats receiving MFB DBS.

METHODS:

Antidepressant-like effects of MFB stimulation at 100 μA, 90 μs and either 130 Hz or 20 Hz were characterized in the forced swim test (FST). Changes in the expression of the immediate early gene (IEG) zif268 were measured with in situ hybridization and used as an index of regional brain activity. Microdialysis was used to measure DBS-induced dopamine and serotonin release in the nucleus accumbens.

RESULTS:

Stimulation at parameters that approximated those used in clinical practice, but not at lower frequencies, induced a significant antidepressant-like response in the FST. In animals receiving MFB DBS at high frequency, increases in zif268 expression were observed in the piriform cortex, prelimbic cortex, nucleus accumbens shell, anterior regions of the caudate/putamen and the ventral tegmental area. These structures are involved in the neurocircuitry of reward and are also connected to other brain areas via the MFB. At settings used during behavioral tests, stimulation did not induce either dopamine or serotonin release in the nucleus accumbens.

CONCLUSIONS:

These results suggest that MFB DBS induces an antidepressant-like effect in rats and recruits structures involved in the neurocircuitry of reward without affecting dopamine release in the nucleus accumbens.

C. R. Butson, C. C. McIntyre.

“The use of stimulation field models for deep brain stimulation programming,” In Brain Stimulation, Vol. 8, No. 5, Elsevier BV, pp. 976--978. September, 2015.

DOI: 10.1016/j.brs.2015.06.005