SCI at University of Utah Accelerates Visual Computing via oneAPI

oneAPI cross-architecture programming & Intel® oneAPI Rendering Toolkit to Improve Large-scale Simulations, Data Analytics & Visualization for Scientific Workflows

Intel oneAPI Centers of Excellence

[Oct. 26, 2021] - The Scientific Computing and Imaging (SCI) Institute at the University of Utah is pleased to announce that it is expanding its Intel Graphics and Visualization Institute of Xellence (Intel GVI) to an Intel oneAPI Center of Excellence (CoE). The oneAPI Center of Excellence will focus on advancing research, development, and teaching of the latest visual computing innovations in ray tracing and rendering, and on using oneAPI to accelerate compute across heterogeneous architectures (CPUs; GPUs, including the upcoming Intel Xe architecture; and other accelerators). Adopting oneAPI’s cross-architecture programming model provides a path to maximum efficiency in multi-architecture deployments that combine CPUs and accelerators. This approach, based on open standards, will allow fast, agile development and support new, advanced features without the costly management of multiple vendor-specific proprietary code bases.

Over the past two years, Principal Investigators Chris Johnson, Valerio Pascucci, and Martin Berzins led more than 20 research papers and the development of the OpenViSUS and Uintah software and, through the Intel GVI, created rendering and scientific visualization algorithms for advanced graphics and visualization that are deployed within Intel OSPRay’s ray tracing API and engine.

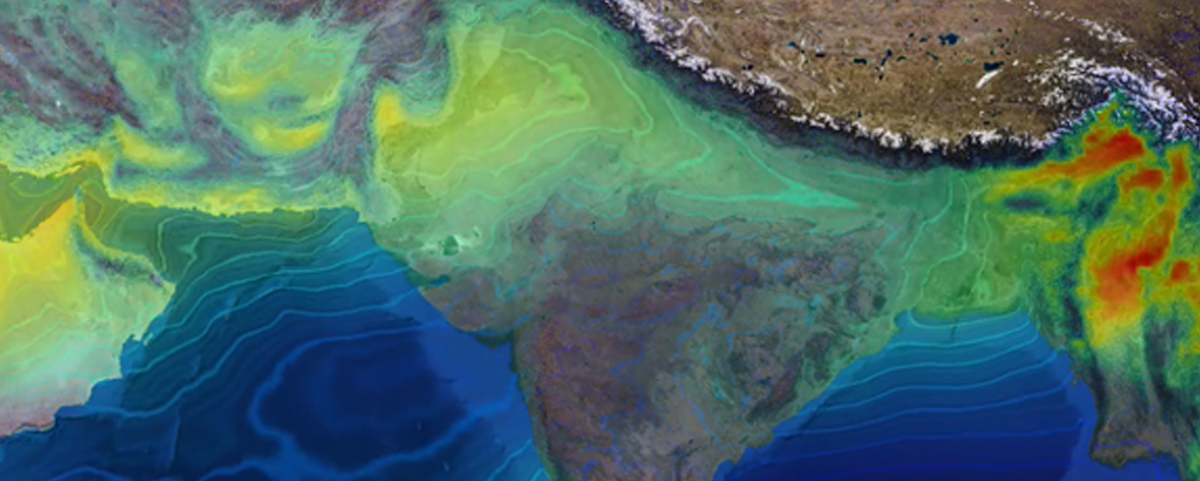

The SCI Institute oneAPI Center of Excellence team is extending its Intel GVI work to pursue new high-performance visual computing methods that use oneAPI cross-architecture programming, which delivers performance and productivity and makes it possible to write single-source code that can be deployed across CPUs, GPUs, and other accelerator architectures. For infrastructure, the Utah project provides an end-to-end computing and data movement environment using oneAPI to achieve seamless integration of large-scale simulations, data analytics, and visualization in practical scientific workflows. In particular, we will deploy an innovative data movement and streaming infrastructure based on a novel encoding approach that enables new quality-versus-speed trade-offs by modeling the spatial resolution and the numerical precision of the data independently. This model organizes scientific data in a single layout that can be decoded through various incremental decoding streams, each satisfying a different scientific workflow requirement. We use this data model as the foundation for a new generation of tools that combine Intel® oneAPI technology with the OpenViSUS and Uintah frameworks to efficiently manage, store, analyze, and visualize scientific data.
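The idea of modeling resolution and precision independently, with one layout serving many decoding streams, can be illustrated with a toy 1D sketch. Everything here is a hypothetical assumption rather than the OpenViSUS format: samples are already quantized to `nbits`-bit integers, the signal length is `2**max_level`, and the data is split into chunks keyed by (resolution level, bit plane). The helper names `sample_level`, `encode`, and `decode` are made up for illustration.

```python
from __future__ import annotations

# Hypothetical sketch: NOT the OpenViSUS/IDX layout. It only shows how a single
# set of (level, bit plane) chunks can answer many (resolution, precision) requests.


def sample_level(i: int, max_level: int) -> int:
    """Resolution level at which sample i first appears (0 = coarsest)."""
    if i == 0:
        return 0
    trailing_zeros = (i & -i).bit_length() - 1
    return max_level - trailing_zeros


def encode(codes: list[int], max_level: int, nbits: int) -> dict:
    """Split quantized samples into chunks keyed by (level, bit plane).

    Bit plane 0 is the most significant bit. All chunks live in one layout;
    a reader decides which of them to fetch.
    """
    chunks: dict = {}
    for i, q in enumerate(codes):
        lvl = sample_level(i, max_level)
        for p in range(nbits):
            bit = (q >> (nbits - 1 - p)) & 1
            chunks.setdefault((lvl, p), []).append((i, bit))
    return chunks


def decode(chunks: dict, level: int, planes: int, nbits: int) -> dict[int, int]:
    """Rebuild the samples up to `level` using only the top `planes` bit planes."""
    out: dict[int, int] = {}
    for (lvl, p), entries in chunks.items():
        if lvl <= level and p < planes:
            for i, bit in entries:
                out[i] = out.get(i, 0) | (bit << (nbits - 1 - p))
    return dict(sorted(out.items()))
```

A resolution-first stream would read chunks ordered by level and then by bit plane, while a precision-first stream would read the most significant plane of every level first; both are simply different orders over the same set of chunks.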

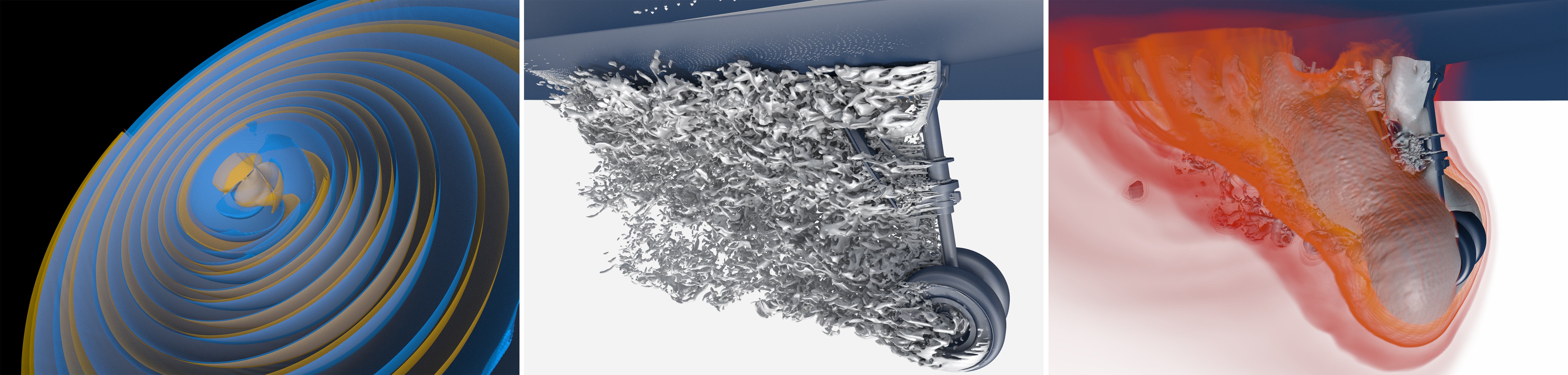

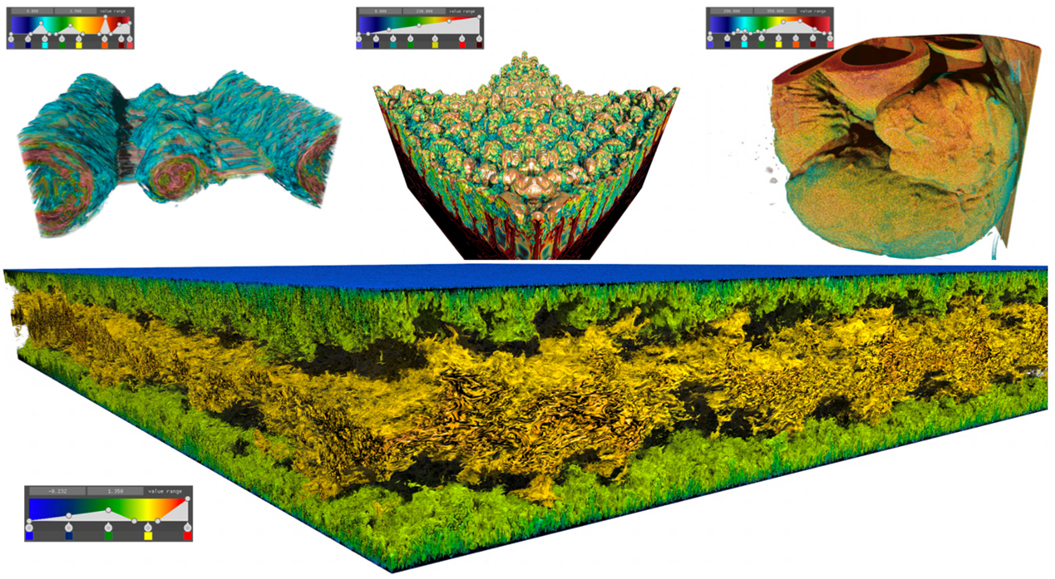

Projects include scalable ray tracing for adaptive mesh refinement data, multidimensional transfer function volume rendering, in situ visualization, multifield visualization, and uncertainty visualization applied to large-scale problems in science, engineering, and medicine. Much of this work uses the Academy Award®-winning Intel® Embree, Intel® OSPRay, and other components of the Intel® oneAPI Rendering Toolkit.

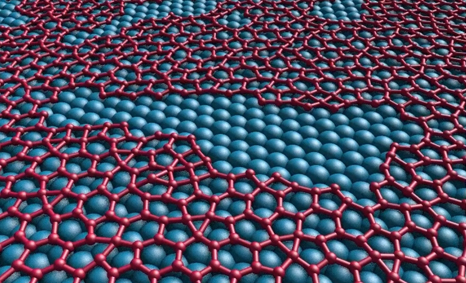

In particular, the OpenViSUS framework (visus.org) provides advanced data management and visualization infrastructure enabling data sharing and collaborative activities for national resources such as the National Ecological Observatory Network (NEON) or the Materials Commons. Integration of OpenViSUS with the Uintah simulation code also enables scalable computing and streaming of massive simulation data on leadership-class computing resources in DOE national laboratories.

oneAPI cross-architecture programming is key to advancing simulations for challenging engineering problems using the Utah Uintah software on forthcoming exascale architectures such as the Argonne Leadership Computing Facility’s Aurora supercomputer.

“The SCI Institute’s pioneering research in visualization, imaging, and scientific computing and its long track record in creating open-source scientific software will enable their work on oneAPI to help scientists, engineers and biomedical researchers to focus on their research instead of the details of the underlying software,” says Dan Reed, Senior Vice President for Academic Affairs at the University of Utah.

“SCI at University of Utah is a world-leading visual computing and scientific visualization institution with many past graduates across industry and academia defining the most advanced visual computing techniques available, such as ray tracing. Extending its core capabilities within OpenViSUS and Uintah with oneAPI heterogeneous programming allows users and development partners to utilize the full potential of visual computing systems from laptops to exascale supercomputers. This platform view for software will in turn provide ever-improving levels of performance as new innovative hardware becomes available, and opens doors for unbridled new levels of visual computing and scientific visualization,” says Jim Jeffers, senior principal engineer and senior director of Intel Advanced Rendering and Visualization.

About oneAPI

oneAPI is an open, unified and cross-architecture programming model for CPUs and accelerator architectures (GPUs, FPGAs, and others). Based on standards, the programming model simplifies software development and delivers uncompromised performance for accelerated compute without proprietary lock-in, while enabling the integration of legacy code. With oneAPI, developers can choose the best architecture for the specific problem they are trying to solve without needing to rewrite software for the next architecture and platform.

About Intel® oneAPI Rendering Toolkit

The Intel® oneAPI Rendering Toolkit is a set of open source libraries that enables creation of high-performance, high-fidelity, extensible, and cost-effective visualization applications and solutions. Its libraries provide rendering kernels and middleware for Intel® platforms for maximum flexibility, performance, and technical transparency. The toolkit supports Intel CPUs and future Xe architectures (GPUs). It includes the award-winning Intel® Embree, Intel® Open Image Denoise, Intel® Open Volume Kernel Library, Intel® OSPRay, Intel® OpenSWR and other components and utilities.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

Past Intel Centers

Intel Visualization and Graphics Institute

- PIs: Chris Johnson, Valerio Pascucci

- Advanced graphics and visualization capabilities made available to the rendering, scientific visualization and virtual design communities

Intel PCC: Modernizing Scientific Visualization and Computation on Many-core Architectures

- PIs: Valerio Pascucci, Martin Berzins

- Applying OSPRay to visualization and HPC production in practice (e.g., Uintah, VisIt)

- Visualization analysis research: IO, topology, multifield/multidimensional (ViSUS)

- Staging Intel resources for both the Vis Center and IPCC.

- Preparing for exascale on DOE A21 via early science program

- Optimizing next-gen Navy weather codes for Intel KNL and beyond

Intel Visualization Center

- PIs: Chris Johnson, Ingo Wald (Intel)

- Large-scale vis and HPC technology on CPU/Phi hardware (OSPRay)

Current and Past Projects

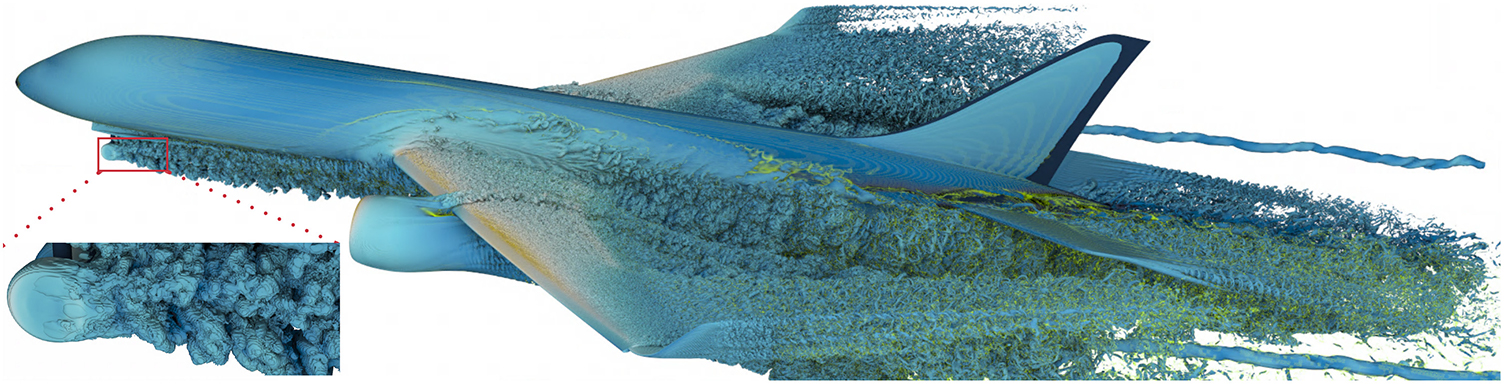

CPU Ray Tracing of Tree-Based Adaptive Mesh Refinement Data

- A novel reconstruction strategy for cell-centered AMR data, GTI, that enables artifact-free volume and isosurface rendering;

- An efficient high-fidelity visualization solution for both AMR and other multiresolution grid datasets on multicore CPUs, supporting empty space skipping, adaptive sampling and isosurfacing;

- Integration of our method into the OSPRay ray tracing library and open-source release to make it widely available to domain scientists

- The paper was published at EuroVis 2020 (http://www.sci.utah.edu/publications/Wan2020a/TAMR_final.pdf)
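The empty space skipping mentioned above can be sketched generically. This is not the paper's GTI reconstruction; it is a minimal illustration, assuming each brick the ray crosses has a precomputed value range (`vmin`, `vmax`) and that the transfer function is non-transparent only on a single value interval. The function name `march_with_skipping` is hypothetical.

```python
import math


def march_with_skipping(bricks, visible_range, step):
    """Sample a ray, skipping bricks that cannot contribute (generic sketch).

    bricks: (t_enter, t_exit, vmin, vmax) tuples for the bricks the ray crosses,
        sorted by t_enter; the per-brick min/max values are assumed precomputed.
    visible_range: (lo, hi) interval of values the transfer function maps to
        non-zero opacity (assumed to be a single interval).
    Returns the ray parameters t at which the volume would be sampled.
    """
    lo, hi = visible_range
    samples = []
    for t_enter, t_exit, vmin, vmax in bricks:
        if vmax < lo or vmin > hi:
            continue  # empty space: no value inside this brick is visible
        n = math.ceil((t_exit - t_enter) / step)
        # Compute each t from an integer index to avoid accumulating rounding error.
        samples.extend(t_enter + k * step for k in range(n))
    return samples
```

For isosurfaces the same test becomes "skip unless `vmin <= iso <= vmax`", and adaptive sampling would choose `step` per brick instead of using one global value.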

Hybrid Isosurface Ray Tracing of Block-Structured (BS) AMR Data in OSPRay

- Proposed a novel BS-AMR reconstruction strategy (the octant method), applicable to both isosurface and direct volume rendering, that is locally rectilinear, adaptive, and continuous, even across level boundaries.

- Provided an efficient hybrid implicit isosurface ray-tracing approach that combines ideas from isosurface extraction and implicit isosurface ray-tracing, applicable to both non-AMR data and (using our octant method) BS-AMR data.

- Extended OSPRay to support interactive high-fidelity rendering of BS-AMR data

- The paper was published and presented at IEEE VIS 2018 (https://ieeexplore-ieee-org.ezproxy.lib.utah.edu/document/8493612)
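One ingredient of implicit isosurface ray tracing is finding where a ray first crosses the isovalue inside a trilinearly interpolated cell. The sketch below does this with a generic march-and-bisect root finder; it is not the paper's hybrid method or the octant method, and the names `trilinear` and `ray_isosurface` as well as the default `steps` and `iters` values are illustrative assumptions.

```python
def trilinear(c, x, y, z):
    """Trilinear interpolation in a unit cell; c[i][j][k] is the value at corner (i, j, k)."""
    c00 = c[0][0][0] * (1 - x) + c[1][0][0] * x
    c10 = c[0][1][0] * (1 - x) + c[1][1][0] * x
    c01 = c[0][0][1] * (1 - x) + c[1][0][1] * x
    c11 = c[0][1][1] * (1 - x) + c[1][1][1] * x
    c0 = c00 * (1 - y) + c10 * y
    c1 = c01 * (1 - y) + c11 * y
    return c0 * (1 - z) + c1 * z


def ray_isosurface(c, iso, origin, direction, t0, t1, steps=64, iters=40):
    """Return the first t in [t0, t1] where the interpolated field equals iso, or None.

    Generic sketch: march in fixed steps to bracket a sign change, then bisect.
    """
    def f(t):
        p = [o + t * di for o, di in zip(origin, direction)]
        return trilinear(c, *p) - iso

    dt = (t1 - t0) / steps
    ta, fa = t0, f(t0)
    if fa == 0.0:
        return t0
    for s in range(1, steps + 1):
        tb = t0 + s * dt
        fb = f(tb)
        if fb == 0.0:
            return tb
        if fa * fb < 0.0:  # sign change: a root lies in [ta, tb]
            for _ in range(iters):
                tm = 0.5 * (ta + tb)
                fm = f(tm)
                if fa * fm <= 0.0:
                    tb = tm
                else:
                    ta, fa = tm, fm
            return 0.5 * (ta + tb)
        ta, fa = tb, fb
    return None
```

Fixed-step marching can miss a pair of crossings that fall within one step; analytic or interval-based root isolation avoids that at higher cost.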

SENSEI: LBL, ANL, Georgia Tech, VisIt, Kitware collaboration to enable portable in situ analysis

- Now released on GitHub; presented at ISAV18

- In transit coupling via libIS, informing the design of SENSEI's in transit API

- Working on merging the libIS adaptor and the SENSEI execution application into SENSEI

- OSPRay in transit viewer using MPI-Distributed Device

Preparing for Exascale Projects (i)

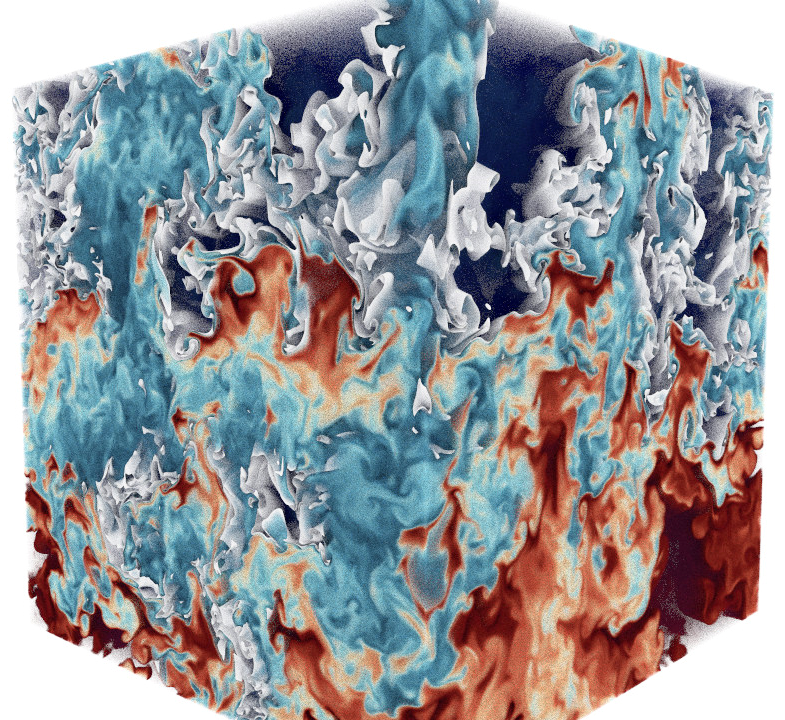

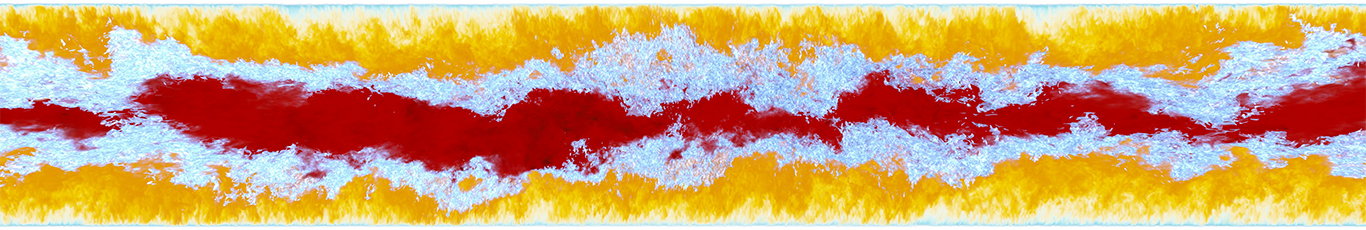

- Collaborating with GE through the DOE/NNSA PSAAP II Initiative to support the design and evaluation of an existing ultra-supercritical clean coal boiler

- Adopted Kokkos within Uintah to prepare for large-scale combustion simulations for the A21 Exascale Early Science Program, using Kokkos instead of OpenMP directly to improve portability to future architectures

- Developed a portability layer and hybrid parallelism approach within Uintah to interface to Kokkos::OpenMP and Kokkos::CUDA

- Demonstrated good strong scaling with MPI+Kokkos up to 442,368 threads across 1,728 KNLs on TACC Stampede 2, 131,072 threads across 512 KNLs on ALCF Theta, and 64 GPUs on ORNL Titan
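As a quick consistency check on these scaling figures, both KNL runs use the same per-node thread count, so the Stampede 2 run uses 3.375 times as many nodes and threads as the Theta run:

$$
\frac{442{,}368\ \text{threads}}{1{,}728\ \text{nodes}} = 256\ \text{threads/node},
\qquad
\frac{131{,}072\ \text{threads}}{512\ \text{nodes}} = 256\ \text{threads/node}
$$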

Performance Portability Across Diverse Systems

- Extended Uintah's MPI+Kokkos infrastructure to improve node use

- Working to identify loop characteristics that scale well with Kokkos on Intel architectures

- Preparing for large-scale runs with MPI+Kokkos on NERSC Cori, TACC Frontera (pending access), and LLNL Lassen

Optimizing Numerical Weather Prediction Codes

- Performance optimization of physics routines

- Positivity-preserving mapping in physics-dynamics coupling
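The source does not describe the positivity-preserving mapping itself. A common generic building block for such schemes is a global "clip and rescale" fixer, sketched below under the assumption that a tracer `q` and per-cell masses are plain lists. It is an illustrative swapped-in technique, not the method used in the Navy weather codes, and `positivity_fix` is a hypothetical name.

```python
def positivity_fix(q, mass):
    """Clip negative tracer values and rescale the rest to conserve sum(mass * q).

    Generic global clip-and-rescale fixer (illustrative sketch only).
    If the conserved total is not positive, no non-negative field can match it,
    so the clipped field is returned unscaled.
    """
    total = sum(m * v for m, v in zip(mass, q))
    clipped = [max(v, 0.0) for v in q]
    positive = sum(m * v for m, v in zip(mass, clipped))
    if total <= 0.0 or positive == 0.0:
        return clipped
    scale = total / positive  # shrink the positive values to absorb the removed negatives
    return [v * scale for v in clipped]
```

The global rescaling keeps the total exactly but spreads the correction over every cell; local fixers that borrow mass only from neighboring cells trade some simplicity for less smearing.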

OSPRay Distributed FrameBuffer

- Made significant improvements to OSPRay's distributed rendering scalability on Xeon and Xeon Phi

- Achieved through a combination of optimizations to the communication pattern, the code, the data transferred, and data compression

- Gives end users better scalability in compute and memory capacity; releasing in OSPRay 1.8

4096x1024 image of a 1 TB volume plus 4.35B transparent triangles, rendered at 5 FPS on 64 Stampede2 KNL nodes
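At the heart of sort-last distributed rendering is combining, for every pixel, the partial results that different ranks produced. The sketch below shows that per-pixel step with front-to-back "over" compositing of premultiplied RGBA fragments. It is a generic illustration, not OSPRay's Distributed FrameBuffer implementation (which, per the text, also optimizes communication and compresses data), and it assumes each rank contributes at most one depth-tagged fragment per pixel. The names `composite_pixel` and `composite_tile` are hypothetical.

```python
def composite_pixel(fragments, opaque=0.999):
    """Front-to-back 'over' compositing of one pixel's fragments.

    fragments: (depth, (r, g, b, a)) tuples with premultiplied color,
        at most one per rank (a simplifying assumption).
    """
    r = g = b = a = 0.0
    for _, (fr, fg, fb, fa) in sorted(fragments, key=lambda frag: frag[0]):
        w = 1.0 - a  # remaining transmittance in front of this fragment
        r += w * fr
        g += w * fg
        b += w * fb
        a += w * fa
        if a >= opaque:
            break  # early termination: farther fragments are hidden
    return (r, g, b, a)


def composite_tile(rank_tiles):
    """Combine one image tile rendered by several ranks.

    rank_tiles: one list per rank holding, for every pixel of the tile,
        a (depth, rgba) fragment or None if that rank did not cover the pixel.
    """
    npix = len(rank_tiles[0])
    return [
        composite_pixel([tile[p] for tile in rank_tiles if tile[p] is not None])
        for p in range(npix)
    ]
```

Because fragments are sorted by depth before blending, the result does not depend on the order in which ranks deliver their tiles.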

OSPRay MPI-Distributed Device

- Enable MPI-parallel applications to use OSPRay for scalable rendering with the Distributed FrameBuffer

- Releasing soon in OSPRay 1.8

- Imposes no requirements on data types/geometry, supports existing OSPRay extension modules

- Enables direct OSPRay integration into parallel applications like ParaView, VisIt, VL3 (ANL), or in situ via ParaView Catalyst, VisIt LibSim

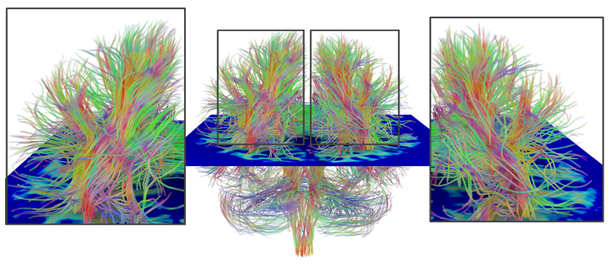

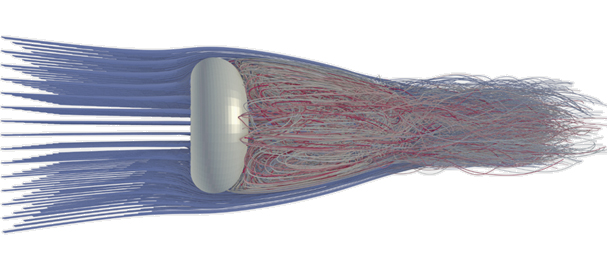

Ray Tracing Generalized Stream Lines in OSPRay

- High-performance, high-fidelity technique for interactively ray tracing generalized stream line primitives

- Handles lines with constant and varying radii, bifurcations (such as those in neuron morphology), and correct transparency

Semi-transparent visualization of DTI data (218k links)

Flow past a torus (fixed-radius pathlines, ~6.5M links)
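For the constant-radius case, a stream line link can be treated as a capsule (a cylinder with spherical end caps), and a ray can be tested against it by sphere tracing its signed distance function. This is a swapped-in, generic technique chosen for brevity; it is not the intersector from the OSPRay work, and it does not cover varying radii, bifurcations, or transparency. The names `capsule_sdf` and `trace_link`, the unit-length ray direction, and the defaults for `t_max`, `eps`, and `max_steps` are assumptions.

```python
import math


def capsule_sdf(p, a, b, radius):
    """Signed distance from point p to a constant-radius link (capsule) from a to b.

    Assumes a != b.
    """
    pa = [pi - ai for pi, ai in zip(p, a)]
    ba = [bi - ai for bi, ai in zip(b, a)]
    h = sum(x * y for x, y in zip(pa, ba)) / sum(x * x for x in ba)
    h = min(max(h, 0.0), 1.0)  # clamp the closest point onto the segment
    return math.dist(pa, [h * x for x in ba]) - radius


def trace_link(origin, direction, a, b, radius, t_max=100.0, eps=1e-6, max_steps=256):
    """Sphere-trace a ray with unit-length direction against one link; return hit t or None."""
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * di for o, di in zip(origin, direction)]
        d = capsule_sdf(p, a, b, radius)
        if d < eps:
            return t
        t += d  # safe step: no surface point is closer than d
        if t > t_max:
            break
    return None
```

Sphere tracing keeps the example short; production ray tracers typically use closed-form ray/cylinder, ray/sphere, and ray/cone intersections instead, which avoid iteration.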

Bricktree for Large-scale Volumetric Data Visualization

- Interactive visualization solution for large-scale volumes in OSPRay

- Quickly loads progressively higher resolutions of data, reducing user wait times

- Bricktree: a low-overhead hierarchical structure that encodes a large volume into a multi-resolution representation

- Rendered via an OSPRay module
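The coarse-to-fine loading described above can be illustrated with a plain mip pyramid: each coarser level averages 2x2x2 blocks of the finer one, and a viewer displays the coarsest level first and refines as finer levels arrive. This is only an illustration of the multi-resolution idea, not the bricktree encoding itself. It assumes a cubic volume whose side `n` is a power of two, stored as a flat list with x varying fastest; `downsample` and `build_levels` are hypothetical names.

```python
def downsample(vol, n):
    """Average each 2x2x2 block of an n^3 volume stored as a flat list (x fastest)."""
    m = n // 2
    out = [0.0] * (m * m * m)
    for z in range(m):
        for y in range(m):
            for x in range(m):
                s = 0.0
                for dz in (0, 1):
                    for dy in (0, 1):
                        for dx in (0, 1):
                            s += vol[(2 * x + dx) + n * ((2 * y + dy) + n * (2 * z + dz))]
                out[x + m * (y + m * z)] = s / 8.0
    return out


def build_levels(vol, n):
    """Return [(side, data), ...] ordered coarsest first; n must be a power of two."""
    levels = [(n, vol)]
    while n > 1:
        vol = downsample(vol, n)
        n //= 2
        levels.append((n, vol))
    return levels[::-1]  # coarsest first, matching the order a progressive viewer loads
```

A full pyramid adds roughly one seventh to the storage of the finest level, which is one reason sparse, brick-based hierarchies are attractive for very large volumes.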

Display Wall Rendering with OSPRay

- Software infrastructure that allows parallel renderers (OSPRay) to render to large tiled-display clusters.

- Decouples the rendering cluster and display cluster, providing a "service" that treats the display wall as a single virtual screen, and a client-side library that allows an MPI-parallel renderer to connect and send pixel data to the display wall

- Exploring lightweight, inexpensive, and easy-to-deploy options using Intel NUCs with a remote rendering cluster
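Treating the wall as a single virtual screen means a client must work out which display receives which part of each region of pixels it sends. The sketch below splits a rectangle of the virtual screen into per-display pieces. It is a hypothetical helper, not the actual client library's API, and it assumes a uniform grid of identical displays numbered row-major, with bezels ignored.

```python
def route_region(x0, y0, x1, y1, cols, rows, width, height):
    """Split a virtual-screen rectangle [x0, x1) x [y0, y1) into per-display pieces.

    The wall is a cols x rows grid of displays, each width x height pixels,
    numbered row-major; bezels are ignored (assumption). Returns a list of
    (display_index, (lx0, ly0, lx1, ly1)) in each display's local pixel space.
    """
    pieces = []
    for row in range(y0 // height, (y1 - 1) // height + 1):
        for col in range(x0 // width, (x1 - 1) // width + 1):
            if not (0 <= row < rows and 0 <= col < cols):
                continue  # this part of the rectangle lies off the wall
            ox, oy = col * width, row * height  # display origin on the virtual screen
            rect = (max(x0, ox) - ox, max(y0, oy) - oy,
                    min(x1, ox + width) - ox, min(y1, oy + height) - oy)
            pieces.append((row * cols + col, rect))
    return pieces
```

Bezel compensation would add a fixed gap to each display origin so that images line up physically across display frames.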