SCI Publications

2014

C. Vachet, H.C. Hazlett, J. Piven, G. Gerig.

“4D Modeling of Infant Brain Growth in Down's Syndrome and Controls from longitudinal MRI,” In Proceedings of the 2014 Joint Annual Meeting ISMRM-ESMRMB, pp. (accepted). 2014.

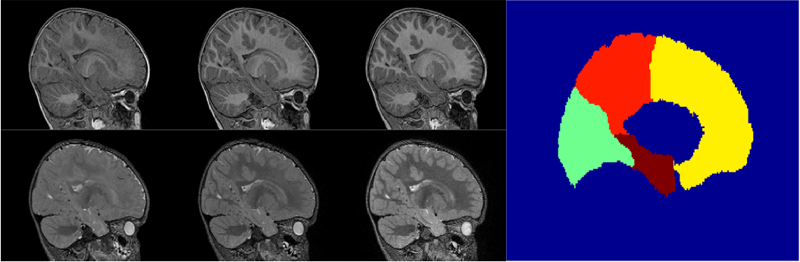

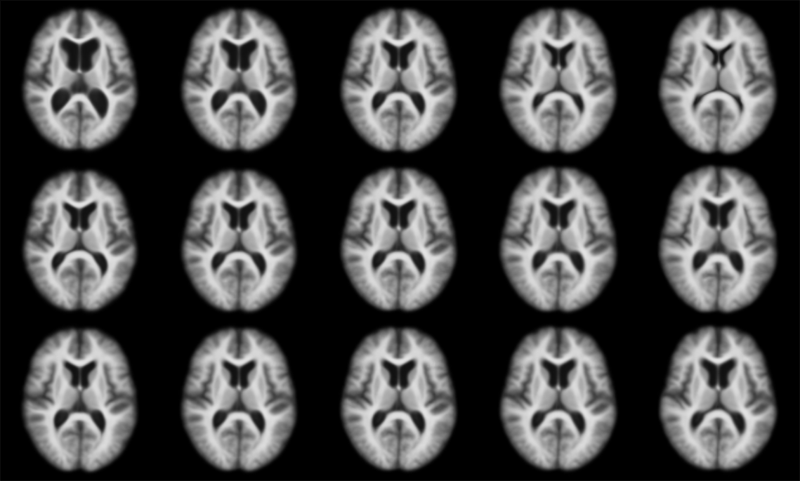

Modeling of early brain growth trajectories from longitudinal MRI will provide new insight into neurodevelopmental characteristics and into the timing and type of changes that distinguish neurological disorders from controls. In addition to an ongoing large-scale infant autism neuroimaging study [1], we recruited 4 infants with Down’s syndrome (DS) in order to evaluate newly developed methods for 4D segmentation from longitudinal infant MRI, and for temporal modeling of brain growth trajectories. Specific to Down's syndrome, a comparison of patterns of full brain and lobar tissue growth may lead to better insight into the observed variability of cognitive development and neurological effects, and may help with the development of disease-modifying therapeutic interventions.

A. Vardhan, M. Prastawa, C. Vachet, J. Piven, G. Gerig.

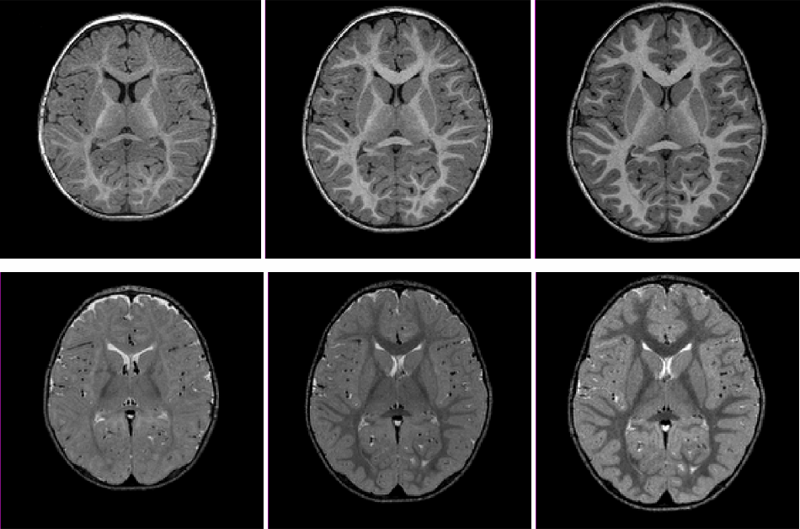

“Characterizing growth patterns in longitudinal MRI using image contrast,” In Proceedings of Medical Imaging 2014: Image Processing, 2014.

A. Vardhan, N. Sadeghi, C. Vachet, J. Piven, G. Gerig.

“Joint Longitudinal Modeling of Brain Appearance in Multimodal MRI for the Characterization of Early Brain Developmental Processes,” In Spatiotemporal Image Analysis for Longitudinal and Time-Series Image Data (STIA'14), LNCS. MICCAI'14, Springer Verlag, June, 2014.

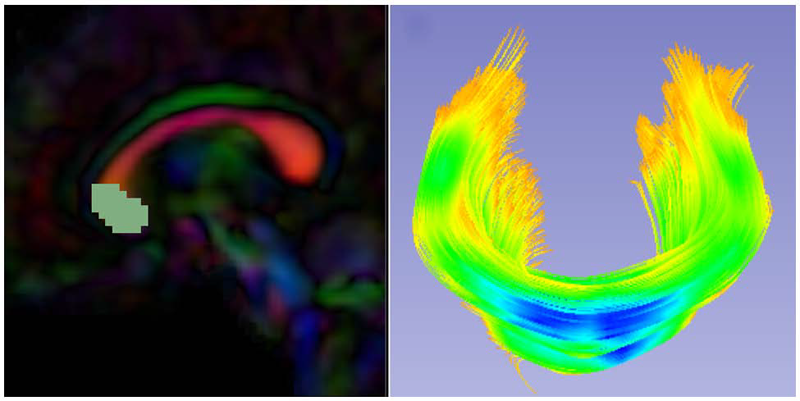

A.R. Verde, F. Budin, J.-B. Berger, A. Gupta, M. Farzinfar, A. Kaiser, M. Ahn, H. Johnson, J. Matsui, H.C. Hazlett, A. Sharma, C. Goodlett, Y. Shi, S. Gouttard, C. Vachet, J. Piven, H. Zhu, G. Gerig, M. Styner.

“UNC-Utah NA-MIC framework for DTI fiber tract analysis,” In Frontiers in Neuroinformatics, Vol. 7, No. 51, January, 2014.

DOI: 10.3389/fninf.2013.00051

Keywords: neonatal neuroimaging, white matter pathways, magnetic resonance imaging, diffusion tensor imaging, diffusion imaging quality control, DTI atlas building

Bo Wang, W. Liu, M. Prastawa, A. Irimia, P.M. Vespa, J.D. van Horn, P.T. Fletcher, G. Gerig.

“4D Active Cut: An Interactive Tool for Pathological Anatomy Modeling,” In Proceedings of the 2014 IEEE International Symposium on Biomedical Imaging (ISBI), pp. (accepted). 2014.

Keywords: Active learning, graph cuts, longitudinal MRI, Markov Random Fields, semi-supervised learning

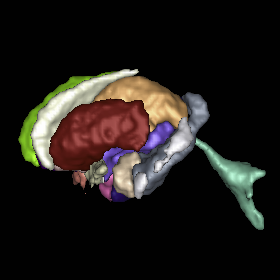

J. Wang, C. Vachet, A. Rumple, S. Gouttard, C. Ouzie, E. Perrot, G. Du, X. Huang, G. Gerig, M.A. Styner.

“Multi-atlas segmentation of subcortical brain structures via the AutoSeg software pipeline,” In Frontiers in Neuroinformatics, Vol. 8, No. 7, 2014.

DOI: 10.3389/fninf.2014.00007

Keywords: segmentation, Registration, MRI, Atlas, Brain, Insight Toolkit

Y. Wan, H. Otsuna, K. Kwan, C.D. Hansen.

“Real-Time Dense Nucleus Selection from Confocal Data,” In Proceedings of the Eurographics Workshop on Visual Computing for Biology and Medicine, 2014.

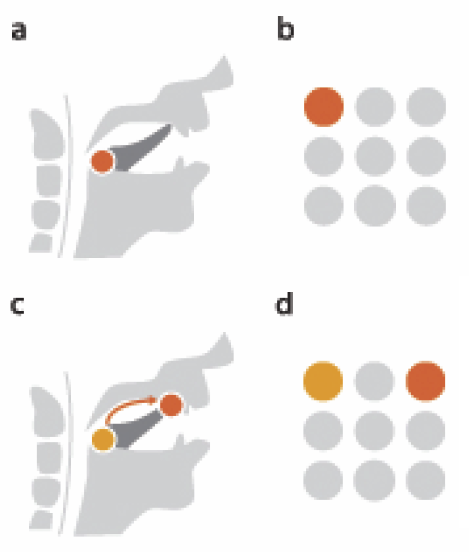

Selecting structures from volume data using direct over-the-visualization interactions, such as a paint brush, is perhaps the most intuitive method in a variety of application scenarios. Unfortunately, it seems difficult to design a universal tool that is effective for all different structures in biology research. In [WOCH12b], an interactive technique was proposed for extracting neural structures from confocal microscopy data. It uses a dual-stroke paint brush to select desired structures directly from volume visualizations. However, the technique breaks down when applied to selecting densely packed structures with condensed shapes, such as nuclei from zebrafish eye development research. We collaborated with biologists studying zebrafish eye development and adapted the paint brush tool for real-time nucleus selection from volume data. The morphological diffusion algorithm used in the previous paint brush is restricted to gradient descending directions for improved nucleus boundary definition. Occluded seeds are removed using backward ray-casting. The adapted paint brush is then used in tracking cell movements in a time sequence dataset of a developing zebrafish eye.

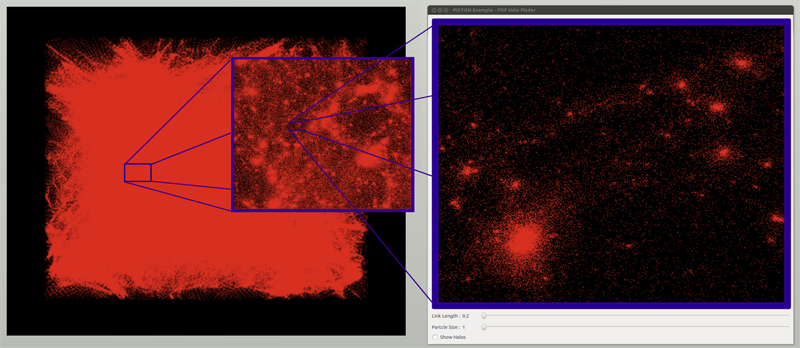

W. Widanagamaachchi, P.-T. Bremer, C. Sewell, L.-T. Lo, J. Ahrens, V. Pascucci.

“Data-Parallel Halo Finding with Variable Linking Lengths,” In Proceedings of the 2014 IEEE 4th Symposium on Large Data Analysis and Visualization (LDAV), pp. 27--34. November, 2014.

Y.-Y. Yu, P.T. Fletcher, S.P. Awate.

“Hierarchical Bayesian Modeling, Estimation, and Sampling for Multigroup Shape Analysis,” In Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI), 2014.

This paper proposes a novel method for the analysis of anatomical shapes present in biomedical image data. Motivated by the natural organization of population data into multiple groups, this paper presents a novel hierarchical generative statistical model on shapes. The proposed method represents shapes using pointsets and defines a joint distribution on the population's (i) shape variables and (ii) object-boundary data. The proposed method solves for optimal (i) point locations, (ii) correspondences, and (iii) model-parameter values as a single optimization problem. The optimization uses expectation maximization relying on a novel Markov-chain Monte-Carlo algorithm for sampling in Kendall shape space. Results on clinical brain images demonstrate advantages over the state of the art.

M. Zhang, P.T. Fletcher.

“Bayesian Principal Geodesic Analysis in Diffeomorphic Image Registration,” In Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI), 2014.

L. Zhou, C.D. Hansen.

“GuideME: Slice-guided Semiautomatic Multivariate Exploration of Volumes,” In Computer Graphics Forum, Vol. 33, No. 3, Wiley-Blackwell, pp. 151--160. June, 2014.

DOI: 10.1111/cgf.12371

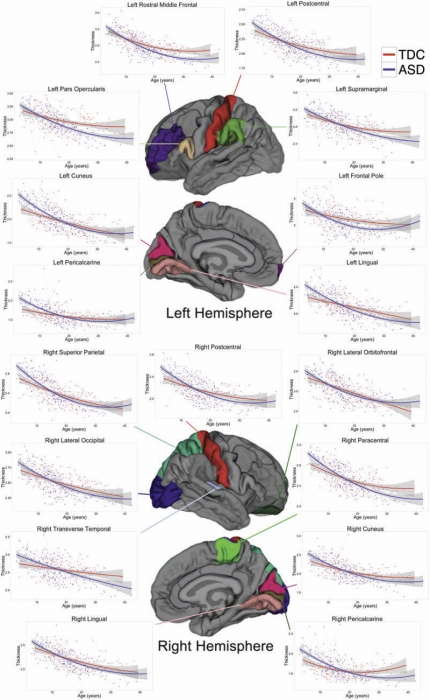

B.A. Zielinski, M.B.D. Prigge, J.A. Nielsen, A.L. Froehlich, T.J. Abildskov, J.S. Anderson, P.T. Fletcher, K.M. Zygmunt, B.G. Travers, N. Lange, A.L. Alexander, E.D. Bigler, J.E. Lainhart.

“Longitudinal changes in cortical thickness in autism and typical development,” In Brain, Vol. 137, No. 6, Edited by Dimitri M. Kullmann, pp. 1799--1812. 2014.

DOI: 10.1093/brain/awu083

2013

A. Abdul-Rahman, J. Lein, K. Coles, E. Maguire, M.D. Meyer, M. Wynne, C.R. Johnson, A. Trefethen, M. Chen.

“Rule-based Visual Mappings - with a Case Study on Poetry Visualization,” In Proceedings of the 2013 Eurographics Conference on Visualization (EuroVis), Vol. 32, No. 3, pp. 381--390. June, 2013.

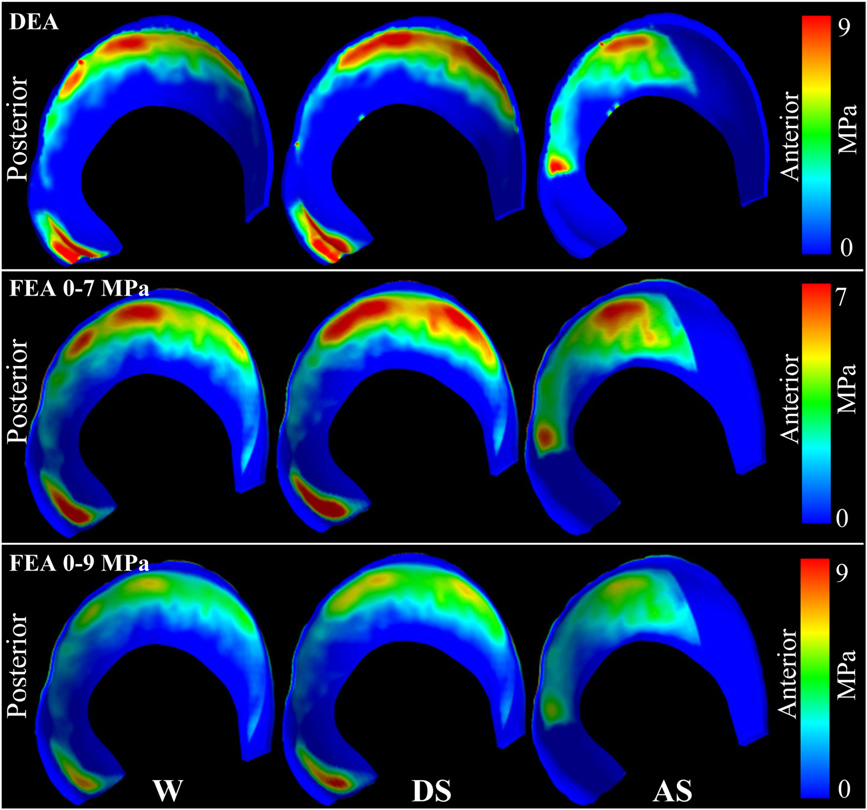

C.L. Abraham, S.A. Maas, J.A. Weiss, B.J. Ellis, C.L. Peters, A.E. Anderson.

“A new discrete element analysis method for predicting hip joint contact stresses,” In Journal of Biomechanics, Vol. 46, No. 6, pp. 1121--1127. 2013.

DOI: 10.1016/j.jbiomech.2013.01.012

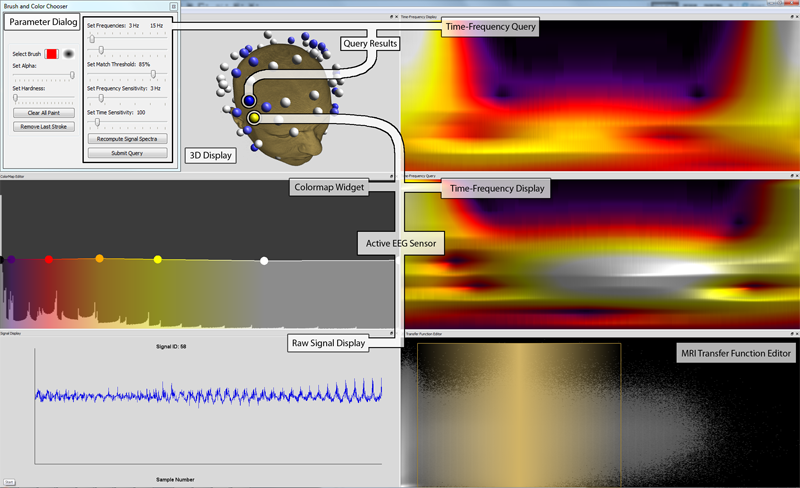

E.W. Anderson, C. Chong, G.A. Preston, C.T. Silva.

“Discovering and Visualizing Patterns in EEG Data,” In Proceedings of the 2013 IEEE Pacific Visualization Symposium (PacificVis), pp. 105--112. 2013.

Brain activity data is often collected through the use of electroencephalography (EEG). In this data acquisition modality, the electric fields generated by neurons are measured at the scalp. Although this technology is capable of measuring activity from a group of neurons, recent efforts provide evidence that these small neuronal collections communicate with other, distant assemblies in the brain's cortex. These collaborative neural assemblies are often found by examining the EEG record to find shared activity patterns.

In this paper, we present a system that focuses on extracting and visualizing potential neural activity patterns directly from EEG data. Using our system, neuroscientists may investigate the spectral dynamics of signals generated by individual electrodes or groups of sensors. Additionally, users may interactively generate queries which are processed to reveal which areas of the brain may exhibit common activation patterns across time and frequency. The utility of this system is highlighted in a case study in which it is used to analyze EEG data collected during a working memory experiment.

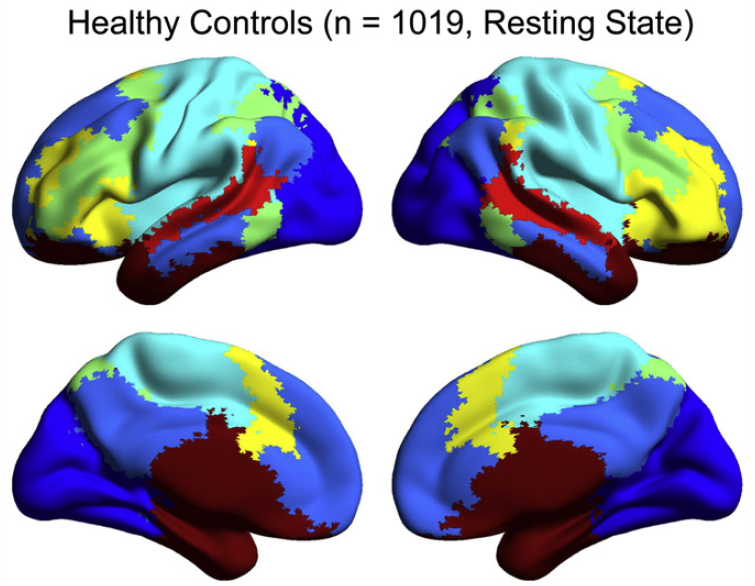

J.S. Anderson, J.A. Nielsen, M.A. Ferguson, M.C. Burback, E.T. Cox, L. Dai, G. Gerig, J.O. Edgin, J.R. Korenberg.

“Abnormal brain synchrony in Down Syndrome,” In NeuroImage: Clinical, Vol. 2, pp. 703--715. 2013.

ISSN: 2213-1582

DOI: 10.1016/j.nicl.2013.05.006

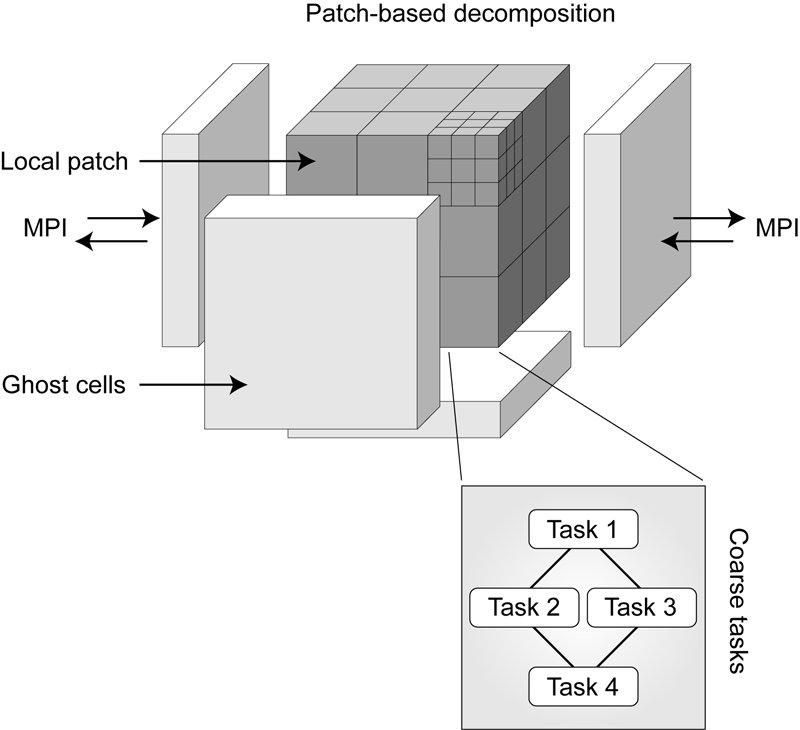

J. Beckvermit, J. Peterson, T. Harman, S. Bardenhagen, C. Wight, Q. Meng, M. Berzins.

“Multiscale Modeling of Accidental Explosions and Detonations,” In Computing in Science and Engineering, Vol. 15, No. 4, pp. 76--86. 2013.

DOI: 10.1109/MCSE.2013.89

Accidental explosions are exceptionally dangerous and costly, both in lives and money. Regarding world-wide conflict with small arms and light weapons, the Small Arms Survey has recorded over 297 accidental explosions in munitions depots across the world that have resulted in thousands of deaths and billions of dollars in damage in the past decade alone [45]. As the recent fertilizer plant explosion that killed 15 people in West, Texas demonstrates, accidental explosions are not limited to military operations. Transportation accidents also pose risks, as illustrated by the occasional train derailment/explosion in the nightly news, or the semi-truck explosion detailed in the following section. Unlike other industrial accident scenarios, explosions can easily affect the general public, a dramatic example being the PEPCON disaster in 1988, where windows were shattered, doors blown off their hinges, and flying glass and debris caused injuries up to 10 miles away.

While the relative rarity of accidental explosions speaks well of our understanding to date, their violence rightly gives us pause. A better understanding of these materials is clearly still needed, but a significant barrier is the complexity of these materials and the various length scales involved. In typical military applications, explosives are known to be ignited by the coalescence of hot spots which occur on micrometer scales. Whether this reaction remains a deflagration (burning) or builds to a detonation depends both on the stimulus and the boundary conditions or level of confinement. Boundary conditions are typically on the scale of engineered parts, approximately meters. Additional dangers are present at the scale of trucks and factories. The interaction of various entities, such as barrels of fertilizer or crates of detonators, admits the possibility of a sympathetic detonation, i.e. the unintended detonation of one entity by the explosion of another, generally caused by an explosive shock wave or blast fragments.

While experimental work has been and will continue to be critical to developing our fundamental understanding of explosive initiation, deflagration and detonation, there is no practical way to comprehensively assess safety on the scale of trucks and factories experimentally. The scenarios are too diverse and the costs too great. Numerical simulation provides a complementary tool that, with the steadily increasing computational power of the past decades, makes simulations at this scale begin to look plausible. Simulations at both the micrometer scale, the "mesoscale", and at the scale of engineered parts, the "macro-scale", have been contributing increasingly to our understanding of these materials. Still, simulations on this scale require both massively parallel computational infrastructure and selective sampling of mesoscale response, i.e. advanced computational tools and modeling. The computational framework Uintah [1] has been developed for exactly this purpose.

Keywords: uintah, c-safe, accidents, explosions, military computing, risk analysis

M. Berzins, J. Schmidt, Q. Meng, A. Humphrey.

“Past, Present, and Future Scalability of the Uintah Software,” In Proceedings of the Blue Waters Extreme Scaling Workshop 2012, pp. Article No.: 6. 2013.

Keywords: netl, Uintah, parallelism, scalability, adaptive mesh refinement, linear equations

M. Berzins.

“Data and Range-Bounded Polynomials in ENO Methods,” In Journal of Computational Science, Vol. 4, No. 1-2, pp. 62--70. 2013.

DOI: 10.1016/j.jocs.2012.04.006

Essentially Non-Oscillatory (ENO) methods and Weighted Essentially Non-Oscillatory (WENO) methods are of fundamental importance in the numerical solution of hyperbolic equations. A key property of such equations is that the solution must remain positive or lie between bounds. A modification of the polynomials used in ENO methods to ensure that the modified polynomials are either bounded by adjacent values (data-bounded) or lie within a specified range (range-bounded) is considered. It is shown that this approach helps both in the range boundedness and in the preservation of extrema in the ENO polynomial solution.

N.M. Bertagnolli, J.A. Drake, J.M. Tennessen, O. Alter.

“SVD Identifies Transcript Length Distribution Functions from DNA Microarray Data and Reveals Evolutionary Forces Globally Affecting GBM Metabolism,” In Public Library of Science (PLoS) One, Vol. 8, No. 11, pp. article e78913. November, 2013.

DOI: 10.1371/journal.pone.0078913

To search for evolutionary forces that might act upon transcript length, we use the singular value decomposition (SVD) to identify the length distribution functions of sets and subsets of human and yeast transcripts from profiles of mRNA abundance levels across gel electrophoresis migration distances that were previously measured by DNA microarrays. We show that the SVD identifies the transcript length distribution functions as “asymmetric generalized coherent states” from the DNA microarray data and with no a-priori assumptions. Comparing subsets of human and yeast transcripts of the same gene ontology annotations, we find that in both disparate eukaryotes, transcripts involved in protein synthesis or mitochondrial metabolism are significantly shorter than typical, and in particular, significantly shorter than those involved in glucose metabolism. Comparing the subsets of human transcripts that are overexpressed in glioblastoma multiforme (GBM) or normal brain tissue samples from The Cancer Genome Atlas, we find that GBM maintains normal brain overexpression of significantly short transcripts, enriched in transcripts that are involved in protein synthesis or mitochondrial metabolism, but suppresses normal overexpression of significantly longer transcripts, enriched in transcripts that are involved in glucose metabolism and brain activity. These global relations among transcript length, cellular metabolism and tumor development suggest a previously unrecognized physical mode for tumor and normal cells to differentially regulate metabolism in a transcript length-dependent manner. The identified distribution functions support a previous hypothesis from mathematical modeling of evolutionary forces that act upon transcript length in the manner of the restoring force of the harmonic oscillator.
