SCI Publications

2015

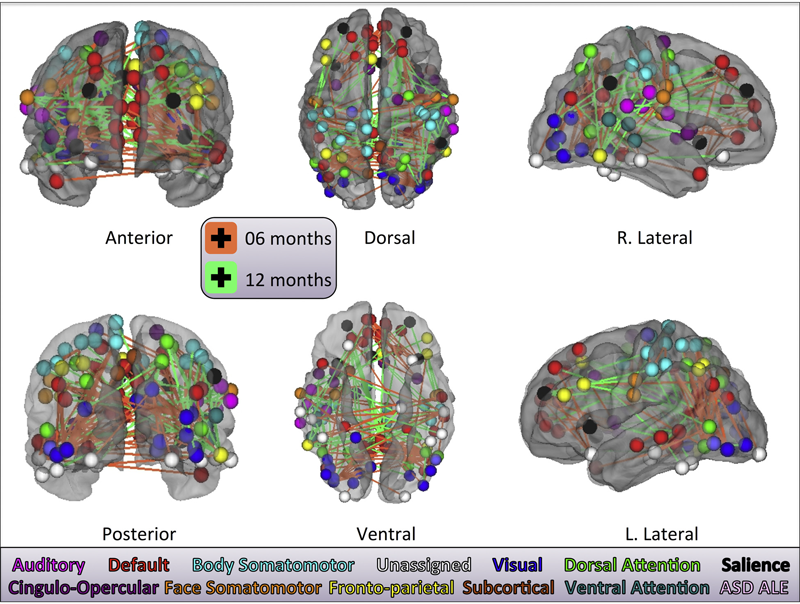

J.R. Pruett Jr., S. Kandala, S. Hoertel, A.Z. Snyder, J.T. Elison, T. Nishino, E. Feczko, N.U.F. Dosenbach, B. Nardos, J.D. Power, B. Adeyemo, K.N. Botteron, R.C. McKinstry, A.C. Evans, H.C. Hazlett, S.R. Dager, S. Paterson, R.T. Schultz, D.L. Collins, V.S. Fonov, M. Styner, G. Gerig, S. Das, P. Kostopoulos, J.N. Constantino, A.M. Estes, The IBIS Network, S.E. Petersen, B.L. Schlaggar, J. Piven.

“Accurate age classification of 6 and 12 month-old infants based on resting-state functional connectivity magnetic resonance imaging data,” In Developmental Cognitive Neuroscience, Vol. 12, pp. 123-133. April, 2015.

DOI: 10.1016/j.dcn.2015.01.003

S. Pujol, W. Wells, C. Pierpaoli, C. Brun, J. Gee, G. Cheng, B. Vemuri, O. Commowick, S. Prima, A. Stamm, M. Goubran, A. Khan, T. Peters, P. Neher, K. H. Maier-Hein, Y. Shi, A. Tristan-Vega, G. Veni, R. Whitaker, M. Styner, C.F. Westin, S. Gouttard, I. Norton, L. Chauvin, H. Mamata, G. Gerig, A. Nabavi, A. Golby, R. Kikinis.

“The DTI Challenge: Toward Standardized Evaluation of Diffusion Tensor Imaging Tractography for Neurosurgery,” In Journal of Neuroimaging, Wiley, August, 2015.

DOI: 10.1111/jon.12283

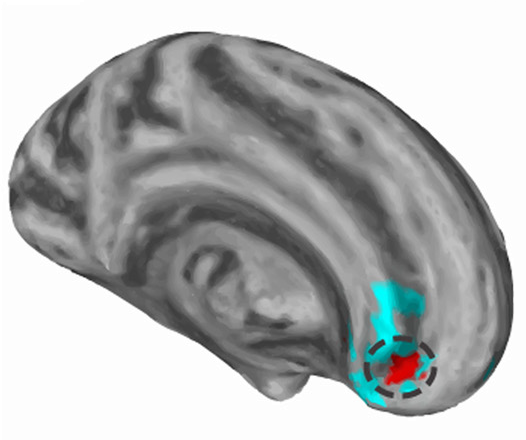

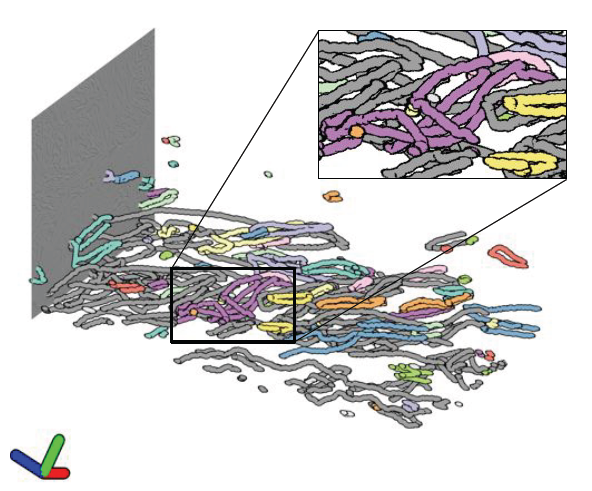

Diffusion tensor imaging (DTI) tractography reconstruction of white matter pathways can help guide brain tumor resection. However, DTI tracts are complex mathematical objects and the validity of tractography-derived information in clinical settings has yet to be fully established. To address this issue, we initiated the DTI Challenge, an international working group of clinicians and scientists whose goal was to provide standardized evaluation of tractography methods for neurosurgery. The purpose of this empirical study was to evaluate different tractography techniques in the first DTI Challenge workshop.

METHODS

Eight international teams from leading institutions reconstructed the pyramidal tract in four neurosurgical cases presenting with a glioma near the motor cortex. Tractography methods included deterministic, probabilistic, filtered, and global approaches. Standardized evaluation of the tracts consisted of a qualitative review of the pyramidal pathways by a panel of neurosurgeons and DTI experts and a quantitative evaluation of the degree of agreement among methods.
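The abstract does not name the agreement measure used in the quantitative evaluation. A common choice for comparing binary reconstructions is the Dice overlap, so the following is only a minimal sketch under that assumption. It also assumes each method's pyramidal tract has already been rasterized into a boolean voxel mask on a shared grid; `dice` and `agreement_matrix` are hypothetical helper names.

```python
import numpy as np


def dice(a: np.ndarray, b: np.ndarray) -> float:
    """Dice overlap 2|A & B| / (|A| + |B|) between two boolean voxel masks."""
    a = a.astype(bool)
    b = b.astype(bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # two empty masks agree trivially
    return 2.0 * np.logical_and(a, b).sum() / total


def agreement_matrix(masks: list[np.ndarray]) -> np.ndarray:
    """Symmetric matrix of pairwise Dice scores among tractography methods."""
    n = len(masks)
    m = np.eye(n)  # every method agrees perfectly with itself
    for i in range(n):
        for j in range(i + 1, n):
            m[i, j] = m[j, i] = dice(masks[i], masks[j])
    return m
```

Rows with low off-diagonal values would flag methods that disagree with the rest. Any other overlap or distance measure could be swapped in without changing the structure.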

RESULTS

The evaluation of tractography reconstructions showed great interalgorithm variability. Although most methods found projections of the pyramidal tract from the medial portion of the motor strip, only a few algorithms could trace the lateral projections from the hand, face, and tongue areas. In addition, the structure of disagreement among methods was similar across hemispheres despite the anatomical distortions caused by pathological tissues.

CONCLUSIONS

The DTI Challenge provides a benchmark for the standardized evaluation of tractography methods on neurosurgical data. This study suggests that there are still limitations to the clinical use of tractography for neurosurgical decision making.

M. Raj, M. Mirzargar, R. Kirby, R. Whitaker, J. Preston.

“Evaluating Alignment of Shapes by Ensemble Visualization,” In IEEE Computer Graphics and Applications, IEEE, 2015.

The visualization of variability in 3D shapes or surfaces, which is a type of ensemble uncertainty visualization for volume data, provides a means of understanding the underlying distribution for a collection or ensemble of surfaces. While ensemble visualization for surfaces has already been described in the literature, we conduct an expert-based evaluation in a particular medical imaging application: the construction of atlases or templates from a population of images. In this work, we extend contour boxplots to 3D, allowing us to evaluate them against an enumeration-style visualization of the ensemble members and against other conventional visualizations used by atlas builders, namely examining the atlas image and the corresponding images/data provided as part of the construction process. We present feedback from domain experts on the efficacy of contour boxplots compared to other modalities when used as part of the atlas construction and analysis stages of their work.
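Contour boxplots order ensemble members by a band depth, so the most central member plays the role of the median. The following is a minimal sketch of a strict set band depth with bands formed by pairs of members, assuming each surface is represented by its inside region as a boolean mask. Drawing the pairs only from the *other* members is one of several possible conventions. The relaxed (epsilon) variant that is typically used in practice is not shown, and `band_depth` is a hypothetical name.

```python
from itertools import combinations

import numpy as np


def band_depth(masks: list[np.ndarray]) -> np.ndarray:
    """Strict set band depth (bands from pairs) for an ensemble of boolean masks.

    Member S lies in the band of a pair (A, B) when A & B is a subset of S and
    S is a subset of A | B. Depth is the fraction of pairs of other members
    whose band contains S.
    """
    masks = [m.astype(bool) for m in masks]
    n = len(masks)
    depths = np.zeros(n)
    for s in range(n):
        S = masks[s]
        others = [masks[i] for i in range(n) if i != s]
        pairs = list(combinations(others, 2))
        inside = 0
        for a, b in pairs:
            lo = a & b  # intersection of the pair
            hi = a | b  # union of the pair
            # lo subset of S: no voxel of lo outside S; S subset of hi likewise
            if not (lo & ~S).any() and not (S & ~hi).any():
                inside += 1
        depths[s] = inside / len(pairs) if pairs else 0.0
    return depths
```

For three nested regions, only the middle one lies between the other two, so it receives the highest depth and would be drawn as the median surface.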

N. Ramesh, F. Mesadi, M. Cetin, T. Tasdizen.

“Disjunctive normal shape models,” In 2015 IEEE 12th International Symposium on Biomedical Imaging (ISBI), IEEE, April, 2015.

DOI: 10.1109/isbi.2015.7164170

A novel implicit parametric shape model is proposed for segmentation and analysis of medical images. Functions representing the shape of an object can be approximated as a union of N polytopes. Each polytope is obtained by the intersection of M half-spaces. The shape function can be approximated as a disjunction of conjunctions, using the disjunctive normal form. The shape model is initialized using seed points defined by the user. We define a cost function based on the Chan-Vese energy functional. The model is differentiable; hence, gradient-based optimization algorithms are used to find the model parameters.
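The union-of-polytopes construction can be made concrete with a small sketch. It assumes, as in related disjunctive normal models but not stated in this abstract, that each half-space is a logistic sigmoid, that AND is a product, and that OR follows De Morgan's law. Under those assumptions the shape function is f(x) = 1 - prod_i (1 - prod_j sigma(w_ij . x + b_ij)). The names `dn_shape`, `W`, and `b` are illustrative, and the Chan-Vese fitting step is not shown.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def dn_shape(points, W, b):
    """Differentiable disjunctive normal shape function (illustrative sketch).

    points: (P, D) coordinates; W: (N, M, D) half-space normals; b: (N, M) offsets.
    Each polytope i is the soft AND (product) of its M sigmoid half-spaces;
    the shape is the soft OR of the N polytopes: 1 - prod_i (1 - polytope_i).
    """
    # (P, N, M): squashed signed response of every point to every half-space
    half = sigmoid(np.einsum("pd,nmd->pnm", points, W) + b[None, :, :])
    poly = half.prod(axis=2)                 # (P, N) conjunction within each polytope
    return 1.0 - (1.0 - poly).prod(axis=1)   # (P,) disjunction across polytopes
```

Thresholding f at 0.5 gives the segmented region. Because f is smooth in `W` and `b`, a region energy can be minimized with any gradient-based optimizer. In the usage check below, two axis-aligned squares centered at the origin and at (3, 0) cover the first two points but not the third.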

D. Reed, M. Berzins, R. Lucas, S. Matsuoka, R. Pennington, V. Sarkar, V. Taylor.

“DOE Advanced Scientific Computing Advisory Committee (ASCAC) Report: Exascale Computing Initiative Review,” Note: DOE Report, 2015.

DOI: 10.2172/1222712

H. J.V. Rutherford, G. Gerig, S. Gouttard, M. N. Potenza, L. C. Mayes.

“Investigating maternal brain structure and its relationship to substance use and motivational systems,” In Yale Journal of Biology and Medicine, in print, 2015.

Substance use during pregnancy and the postpartum period may have significant implications for both mother and the developing child. However, the neurobiological basis of the impact of substance use on parenting is less well understood. Here we examined the impact of maternal substance use on cortical gray matter (GM) and white matter volumes, and whether this was associated with individual differences in motivational systems of behavioral activation and inhibition. Mothers were included in the substance-using group if any addictive substance was used during pregnancy and/or in the immediate postpartum period (within 3 months of delivery). GM volume was reduced in substance-using mothers compared to non-substance-using mothers, particularly in frontal brain regions. In substance-using mothers, we also found that frontal GM was negatively correlated with levels of behavioral activation (i.e., the motivation to approach rewarding stimuli). This effect was absent in non-substance-using mothers. Taken together, these findings indicate that a reduction in GM volume is associated with substance use, and that frontal GM volumetric differences may be related to approach motivation in substance-using mothers.

N. Sadeghi, J. H. Gilmore, G. Gerig.

“Modeling Brain Growth and Development,” In Brain, Vol. 1, pp. 429-436. 2015.

DOI: 10.1016/B978-0-12-397025-1.00314-6

M. Sajjadi, M. Seyedhosseini, T. Tasdizen.

“Nonlinear Regression with Logistic Product Basis Networks,” In IEEE Signal Processing Letters, Vol. 22, No. 8, IEEE, pp. 1011-1015. August, 2015.

DOI: 10.1109/lsp.2014.2380791

We introduce a novel general regression model that is based on a linear combination of a new set of non-local basis functions that forms an effective feature space. We propose a training algorithm that learns all the model parameters simultaneously and offer an initialization scheme for parameters of the basis functions. We show through several experiments that the proposed method offers better coverage for high-dimensional space compared to local Gaussian basis functions and provides competitive performance in comparison to other state-of-the-art regression methods.
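The abstract does not spell out the basis functions. The following sketch assumes, based on the title, that each basis function is a product of logistic sigmoids of affine projections of the input, giving y(x) = sum_k v_k prod_j logistic(a_kj . x + c_kj). It shows only how such a model is evaluated and, for a *fixed* set of basis functions, how the linear output weights could be solved by least squares. The paper's joint training of all parameters and its initialization scheme are not reproduced, and all names are hypothetical.

```python
import numpy as np


def logistic(z):
    return 1.0 / (1.0 + np.exp(-z))


def _basis(X, A, c):
    # (P, K): basis k is the product over j of logistic(A[k, j] . x + c[k, j])
    return logistic(np.einsum("pd,kmd->pkm", X, A) + c[None]).prod(axis=2)


def lpbn_predict(X, A, c, v):
    """Linear combination of logistic-product basis functions.

    X: (P, D) inputs; A: (K, M, D) projections; c: (K, M) biases; v: (K,) weights.
    """
    return _basis(X, A, c) @ v  # (P,)


def fit_output_weights(X, y, A, c):
    """Least-squares output weights v for a fixed set of basis functions."""
    v, *_ = np.linalg.lstsq(_basis(X, A, c), y, rcond=None)
    return v
```

Unlike a Gaussian bump, a product of sigmoids acts as a soft indicator of a possibly unbounded region. This is one way to read the abstract's claim about non-local basis functions covering high-dimensional space.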

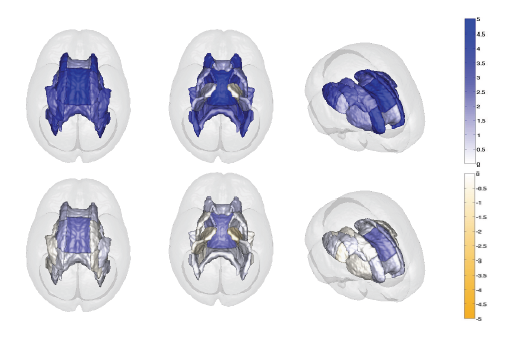

A. P. Salzwedel, K. M. Grewen, C. Vachet, G. Gerig, W. Lin, W. Gao.

“Prenatal Drug Exposure Affects Neonatal Brain Functional Connectivity,” In The Journal of Neuroscience, Vol. 35, No. 14, pp. 5860-5869. April, 2015.

DOI: 10.1523/JNEUROSCI.4333-14.2015

S. Sankaranarayanan, T.E. Schomay, K.A. Aiello, O. Alter.

“Tensor GSVD of patient- and platform-matched tumor and normal DNA copy-number profiles uncovers chromosome arm-wide patterns of tumor-exclusive platform-consistent alterations encoding for cell transformation and predicting ovarian cancer survival,” In PLoS ONE, Vol. 10, No. e121396, 2015.

DOI: 10.1371/journal.pone.0121396

Note: Scientific Computing and Imaging Institute (SCI), University of Utah, www.sci.utah.edu, 2015.

SCI Institute.

Note: SCIRun: A Scientific Computing Problem Solving Environment, Scientific Computing and Imaging Institute (SCI), Download from: http://www.scirun.org, 2015.

CIBC.

Note: Seg3D: Volumetric Image Segmentation and Visualization. Scientific Computing and Imaging Institute (SCI), Download from: http://www.seg3d.org, 2015.

M. Seyedhosseini, T. Tasdizen.

“Disjunctive normal random forests,” In Pattern Recognition, Vol. 48, No. 3, Elsevier BV, pp. 976-983. March, 2015.

DOI: 10.1016/j.patcog.2014.08.023

We develop a novel supervised learning/classification method, called disjunctive normal random forest (DNRF). A DNRF is an ensemble of randomly trained disjunctive normal decision trees (DNDT). To construct a DNDT, we formulate each decision tree in the random forest as a disjunction of rules, which are conjunctions of Boolean functions. We then approximate this disjunction of conjunctions with a differentiable function and approach the learning process as a risk minimization problem that incorporates the classification error into a single global objective function. The minimization problem is solved using gradient descent. DNRFs are able to learn complex decision boundaries and achieve low generalization error. We present experimental results demonstrating the improved performance of DNDTs and DNRFs over conventional decision trees and random forests. We also show the superior performance of DNRFs over state-of-the-art classification methods on benchmark datasets.
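The step from a decision tree to a differentiable function can be illustrated with a small sketch. Each root-to-leaf path ending in a positive leaf is a conjunction of threshold tests, and the tree is the disjunction of those paths. The relaxation below is an assumption, not necessarily the paper's exact form: it replaces each test with a sigmoid of the margin to its threshold, AND with a product, and OR with 1 - prod(1 - .). The rule encoding, `sharpness`, and the function names are hypothetical. The gradient-descent risk minimization from the abstract, which would treat thresholds and slopes as trainable parameters, is not shown.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# One rule = one root-to-positive-leaf path: a list of (feature, threshold, op) tests.
Rule = list[tuple[int, float, str]]


def hard_dnf(x, rules: list[Rule]) -> bool:
    """Boolean tree output: OR over rules of AND over threshold tests."""
    return any(
        all((x[f] <= t) if op == "<=" else (x[f] > t) for f, t, op in rule)
        for rule in rules
    )


def soft_dnf(x, rules: list[Rule], sharpness: float = 10.0) -> float:
    """Differentiable relaxation: sigmoid tests, product as AND, 1 - prod(1 - .) as OR."""
    miss = 1.0  # probability-like mass that no rule fires
    for rule in rules:
        conj = 1.0
        for f, t, op in rule:
            margin = (t - x[f]) if op == "<=" else (x[f] - t)
            conj *= sigmoid(sharpness * margin)
        miss *= 1.0 - conj
    return float(1.0 - miss)
```

Away from the decision thresholds, the soft output thresholded at 0.5 reproduces the hard tree. Near the thresholds it varies smoothly, which is what makes gradient-based training possible.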

S.M. Seyedhosseini, S. Shushruth, T. Davis, J.M. Ichida, P.A. House, B. Greger, A. Angelucci, T. Tasdizen.

“Informative features of local field potential signals in primary visual cortex during natural image stimulation,” In Journal of Neurophysiology, Vol. 113, No. 5, American Physiological Society, pp. 1520-1532. March, 2015.

DOI: 10.1152/jn.00278.2014

The local field potential (LFP) is of growing importance in neurophysiology as a metric of network activity and as a readout signal for use in brain-machine interfaces. However, there are uncertainties regarding the kind and visual field extent of information carried by LFP signals, as well as the specific features of the LFP signal conveying such information, especially under naturalistic conditions. To address these questions, we recorded LFP responses to natural images in V1 of awake and anesthetized macaques using Utah multielectrode arrays. First, we showed that it is possible to identify presented natural images from the LFP responses they evoke using trained Gabor wavelet (GW) models. Because GW models were devised to explain the spiking responses of V1 cells, this finding suggests that local spiking activity and LFPs (thought to reflect primarily local synaptic activity) carry similar visual information. Second, models trained on scalar metrics, such as the evoked LFP response range, provide robust image identification, supporting the informative nature of even simple LFP features. Third, image identification is robust only for the first 300 ms following image presentation, and image information is not restricted to any of the spectral bands. This suggests that the short-latency broadband LFP response carries most information during natural scene viewing. Finally, the best image identification was achieved by GW models incorporating information at the scale of ∼0.5° in size and trained using four different orientations. This suggests that during natural image viewing, LFPs carry stimulus-specific information at spatial scales corresponding to a few orientation columns in macaque V1.
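The identification idea can be sketched under stated assumptions. The evoked response range (max minus min) per channel within the first 300 ms is taken as the scalar feature, because the abstract names both. An image is then identified by choosing the candidate whose model-predicted feature vector correlates best with the observed one. The correlation-based decision rule is an assumption. The trained Gabor wavelet model that would produce `predicted` is not shown, and all names here are hypothetical.

```python
import numpy as np


def response_range(lfp, times, window=(0.0, 0.3)):
    """Evoked LFP range (max - min) per channel within a post-stimulus window.

    lfp: (channels, samples); times: (samples,) in seconds relative to image onset.
    """
    sel = (times >= window[0]) & (times < window[1])
    seg = lfp[:, sel]
    return seg.max(axis=1) - seg.min(axis=1)


def identify(observed, predicted):
    """Index of the candidate image whose predicted features best match (Pearson r).

    observed: (channels,); predicted: (images, channels), e.g. from a trained model.
    """
    scores = [np.corrcoef(observed, p)[0, 1] for p in predicted]
    return int(np.argmax(scores))
```

Restricting the window to the first 300 ms mirrors the abstract's finding that identification is robust only in that interval. The window bounds are parameters, so later intervals could be compared the same way.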

SCI Institute.

Note: ShapeWorks: An open-source tool for constructing compact statistical point-based models of ensembles of similar shapes that does not rely on specific surface parameterization. Scientific Computing and Imaging Institute (SCI). Download from: http://www.sci.utah.edu/software/shapeworks.html, 2015.

P. Skraba, Bei Wang, G. Chen, P. Rosen.

“Robustness-Based Simplification of 2D Steady and Unsteady Vector Fields,” In IEEE Transactions on Visualization and Computer Graphics (to appear), 2015.

SLASH.

Note: SLASH: A hybrid system for high-throughput segmentation of large neuropil datasets, SLASH is funded by the National Institute of Neurological Disorders and Stroke (NINDS) grant 5R01NS075314-03, 2015.

H. Strobelt, B. Alsallakh, J. Botros, B. Peterson, M. Borowsky, H. Pfister, A. Lex.

“Vials: Visualizing Alternative Splicing of Genes,” In IEEE Transactions on Visualization and Computer Graphics (InfoVis '15), Vol. 22, No. 1, pp. 399-408. 2015.

Alternative splicing is a process by which the same DNA sequence is used to assemble different proteins, called protein isoforms. Alternative splicing works by selectively omitting some of the coding regions (exons) typically associated with a gene. Detection of alternative splicing is difficult and uses a combination of advanced data acquisition methods and statistical inference. Knowledge about the abundance of isoforms is important for understanding both normal processes and diseases and to eventually improve treatment through targeted therapies. The data, however, is complex and current visualizations for isoforms are neither perceptually efficient nor scalable. To remedy this, we developed Vials, a novel visual analysis tool that enables analysts to explore the various datasets that scientists use to make judgments about isoforms: the abundance of reads associated with the coding regions of the gene, evidence for junctions, i.e., edges connecting the coding regions, and predictions of isoform frequencies. Vials is scalable as it allows for the simultaneous analysis of many samples in multiple groups. Our tool thus enables experts to (a) identify patterns of isoform abundance in groups of samples and (b) evaluate the quality of the data. We demonstrate the value of our tool in case studies using publicly available datasets.

B. Summa, A. A. Gooch, G. Scorzelli, V. Pascucci.

“Paint and Click: Unified Interactions for Image Boundaries,” In Computer Graphics Forum, Vol. 34, No. 2, Wiley-Blackwell, pp. 385-393. May, 2015.

DOI: 10.1111/cgf.12568

Image boundaries are a fundamental component of many interactive digital photography techniques, enabling applications such as segmentation, panoramas, and seamless image composition. Interactions for image boundaries often rely on two complementary but separate approaches: editing via painting or clicking constraints. In this work, we provide a novel, unified approach for interactive editing of pairwise image boundaries that combines the ease of painting with the direct control of constraints. Rather than a sequential coupling, this new formulation allows full use of both interactions simultaneously, giving users unprecedented flexibility for fast boundary editing. To enable this new approach, we provide technical advancements. In particular, we detail a reformulation of image boundaries as a problem of finding cycles, extending the previous work and correcting its limitations. Our new formulation provides boundary solutions for painted regions with performance on par with state-of-the-art specialized, paint-only techniques. In addition, we provide instantaneous exploration of the boundary solution space with user constraints. Finally, we provide examples of common graphics applications impacted by our new approach.
