SCI Publications

2015

C.R. Johnson.

“Visualization,” In Encyclopedia of Applied and Computational Mathematics, Edited by Björn Engquist, Springer, pp. 1537-1546. 2015.

ISBN: 978-3-540-70528-4

DOI: 10.1007/978-3-540-70529-1_368

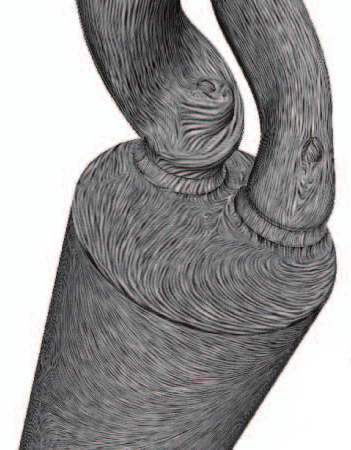

C. Jones, T. Liu, N.W. Cohan, M. Ellisman, T. Tasdizen.

“Efficient semi-automatic 3D segmentation for neuron tracing in electron microscopy images,” In Journal of Neuroscience Methods, Vol. 246, Elsevier BV, pp. 13--21. May, 2015.

DOI: 10.1016/j.jneumeth.2015.03.005

Background

In the area of connectomics, there is a significant gap between the time required for data acquisition and the time required for dense reconstruction of the neural processes contained in the same dataset. Automatic methods are able to eliminate this timing gap, but their state-of-the-art accuracy so far is insufficient for use without user corrections. If completed naively, this process of correction can be tedious and time-consuming.

New method

We present a new semi-automatic method that can be used to perform 3D segmentation of neurites in EM image stacks. It utilizes an automatic method that creates a hierarchical structure for recommended merges of superpixels. The user is then guided through each predicted region to quickly identify errors and establish correct links.
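The abstract does not specify how the merge hierarchy is built. A minimal sketch, assuming each pair of adjacent superpixels already carries a predicted merge probability (the hypothetical `edges` input below): accept merges greedily from most to least confident with a union-find structure. The ordered list of accepted merges defines a binary merge tree, and grouping superpixels by their final root gives the predicted regions a user would step through. The threshold, function names, and input format are illustrative assumptions, not the paper's implementation.

```python
from typing import Dict, List, Tuple


class UnionFind:
    """Disjoint-set forest tracking which superpixels share a region."""

    def __init__(self, n: int) -> None:
        self.parent = list(range(n))

    def find(self, x: int) -> int:
        # Path halving keeps the trees shallow.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def union(self, a: int, b: int) -> bool:
        ra, rb = self.find(a), self.find(b)
        if ra == rb:
            return False  # already in the same region
        self.parent[rb] = ra
        return True


def merge_hierarchy(
    n_superpixels: int,
    edges: List[Tuple[int, int, float]],
    threshold: float = 0.5,
) -> List[Tuple[int, int, float]]:
    """Return recommended merges (a, b, score), most confident first.

    `edges` holds hypothetical (superpixel_a, superpixel_b, merge_probability)
    triples; pairs scoring below `threshold` are left for the user to decide.
    """
    uf = UnionFind(n_superpixels)
    merges = []
    for a, b, score in sorted(edges, key=lambda e: e[2], reverse=True):
        if score < threshold:
            break
        if uf.union(a, b):
            merges.append((a, b, score))
    return merges


def predicted_regions(n_superpixels: int, merges: List[Tuple[int, int, float]]) -> List[List[int]]:
    """Group superpixels into the regions implied by the accepted merges."""
    uf = UnionFind(n_superpixels)
    for a, b, _ in merges:
        uf.union(a, b)
    groups: Dict[int, List[int]] = {}
    for s in range(n_superpixels):
        groups.setdefault(uf.find(s), []).append(s)
    return sorted(groups.values())


edges = [(0, 1, 0.9), (1, 2, 0.8), (2, 3, 0.3), (0, 2, 0.7)]
merges = merge_hierarchy(4, edges)
print(merges)                        # [(0, 1, 0.9), (1, 2, 0.8)]
print(predicted_regions(4, merges))  # [[0, 1, 2], [3]]
```

The redundant edge (0, 2) is skipped because both superpixels already share a root, and the low-confidence edge (2, 3) is left for the user to review.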

Results

We tested our method on three datasets with both novice and expert users. Accuracy and timing were compared with published automatic, semi-automatic, and manual results.

Comparison with existing methods

Post-automatic correction methods have also been used in Mishchenko et al. (2010) and Haehn et al. (2014). These methods do not provide navigation or suggestions in the manner we present. Other semi-automatic methods, such as Jeong et al. (2009) and Cardona et al. (2010), require user input prior to the automatic segmentation and are inherently different from our method.

Conclusion

Using this method on the three datasets, novice users achieved accuracy exceeding state-of-the-art automatic results, and expert users achieved accuracy on par with full manual labeling but with a 70% time improvement compared with other published examples.

M. Kim, C.D. Hansen.

“Surface Flow Visualization using the Closest Point Embedding,” In 2015 IEEE Pacific Visualization Symposium, April, 2015.

Keywords: vector field, flow visualization

M. Kim, C.D. Hansen.

“GPU Surface Extraction with the Closest Point Embedding,” In Proceedings of IS&T/SPIE Visualization and Data Analysis, 2015, February, 2015.

Keywords: scalar field methods, GPGPU, curvature based, scientific visualization

R.M. Kirby, M. Berzins, J.S. Hesthaven (Editors).

“Spectral and High Order Methods for Partial Differential Equations,” Subtitled “Selected Papers from the ICOSAHOM'14 Conference, June 23-27, 2014, Salt Lake City, UT, USA,” In Lecture Notes in Computational Science and Engineering, Springer, 2015.

O. A. von Lilienfeld, R. Ramakrishnan, M., A. Knoll.

“Fourier Series of Atomic Radial Distribution Functions: A Molecular Fingerprint for Machine Learning Models of Quantum Chemical Properties,” In International Journal of Quantum Chemistry, Wiley Online Library, 2015.

We introduce a fingerprint representation of molecules based on a Fourier series of atomic radial distribution functions. This fingerprint is unique (except for chirality), continuous, and differentiable with respect to atomic coordinates and nuclear charges. It is invariant with respect to translation, rotation, and nuclear permutation, and requires no preconceived knowledge about chemical bonding, topology, or electronic orbitals. As such, it meets many important criteria for a good molecular representation, suggesting its usefulness for machine learning models of molecular properties trained across chemical compound space. To assess the performance of this new descriptor, we have trained machine learning models of molecular enthalpies of atomization for training sets with up to 10k organic molecules, drawn at random from a published set of 134k organic molecules with an average atomization enthalpy of over 1770 kcal/mol. We validate the descriptor on all remaining molecules of the 134k set. For a training set of 10k molecules, the fingerprint descriptor achieves a mean absolute error of 8.0 kcal/mol. This is slightly worse than the Coulomb matrix, another popular alternative, which reaches 6.2 kcal/mol for the same training and test sets.
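The abstract does not state the descriptor's formula. To illustrate why a Fourier series built from pairwise distances inherits the stated invariances, the sketch below uses a simplified, hypothetical form: the cosine transform of a Gaussian-smeared, charge-weighted radial distribution, c_k = Σ_{i<j} Z_i Z_j exp(-σ²k²/2) cos(k r_ij), up to a constant factor. The width `sigma`, the frequency grid, and the Z_i Z_j weighting are assumptions, not the published definition.

```python
import math
from typing import List, Sequence, Tuple

# (nuclear charge Z, (x, y, z) coordinates)
Atom = Tuple[float, Tuple[float, float, float]]


def rdf_fourier_fingerprint(
    atoms: Sequence[Atom],
    n_coeffs: int = 8,
    k_max: float = 4.0,
    sigma: float = 0.2,
) -> List[float]:
    """Hypothetical Fourier-series fingerprint of a charge-weighted RDF.

    Coefficient n is the cosine transform, at frequency k_n, of a sum of
    Gaussians of width `sigma` centred on every interatomic distance r_ij
    and weighted by Z_i * Z_j (normalisation constant dropped).
    """
    if n_coeffs < 2:
        raise ValueError("n_coeffs must be at least 2")
    ks = [k_max * n / (n_coeffs - 1) for n in range(n_coeffs)]
    coeffs = [0.0] * n_coeffs
    for i in range(len(atoms)):
        zi, pi = atoms[i]
        for j in range(i + 1, len(atoms)):
            zj, pj = atoms[j]
            # Only distances enter, so rigid motions leave the result unchanged;
            # summing over all pairs makes it independent of atom ordering.
            r = math.dist(pi, pj)
            for n, k in enumerate(ks):
                # Transform of a Gaussian bump at r: exp(-sigma^2 k^2 / 2) * cos(k r).
                coeffs[n] += zi * zj * math.exp(-0.5 * (sigma * k) ** 2) * math.cos(k * r)
    return coeffs


water = [(8.0, (0.0, 0.0, 0.0)), (1.0, (0.96, 0.0, 0.0)), (1.0, (-0.24, 0.93, 0.0))]
print(rdf_fourier_fingerprint(water)[0])  # 17.0 = 8*1 + 8*1 + 1*1 at k = 0
```

Every coefficient is a smooth function of coordinates and charges, which mirrors the differentiability property claimed above; permuting, translating, or rotating `water` yields the same vector up to rounding.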

S. Liu, D. Maljovec, Bei Wang, P. T. Bremer, V. Pascucci.

“Visualizing High-Dimensional Data: Advances in the Past Decade,” In State of The Art Report, Eurographics Conference on Visualization (EuroVis), 2015.

S. Liu, Bei Wang, J. J. Thiagarajan, P. T. Bremer, V. Pascucci.

“Visual Exploration of High-Dimensional Data through Subspace Analysis and Dynamic Projections,” In Computer Graphics Forum, Vol. 34, No. 3, Wiley-Blackwell, pp. 271--280. June, 2015.

DOI: 10.1111/cgf.12639

CIBC.

Note: map3d: Interactive scientific visualization tool for bioengineering data. Scientific Computing and Imaging Institute (SCI), Download from: http://www.sci.utah.edu/cibc/software.html, 2015.

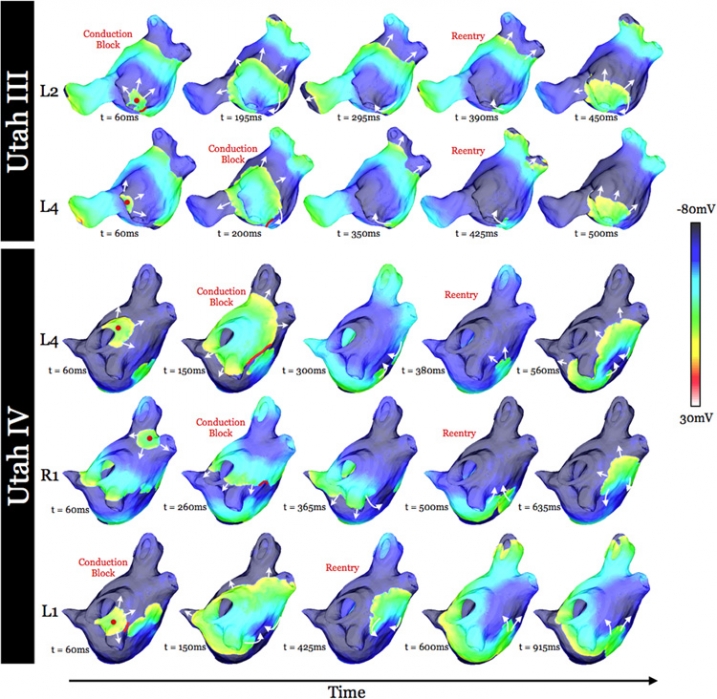

K.S. McDowell, S. Zahid, F. Vadakkumpadan, J.J. Blauer, R.S. MacLeod, N.A. Trayanova.

“Virtual Electrophysiological Study of Atrial Fibrillation in Fibrotic Remodeling,” In PLoS ONE, Vol. 10, No. 2, pp. e0117110. February, 2015.

DOI: 10.1371/journal.pone.0117110

K. S. McDowell, S. Zahid, F. Vadakkumpadan, J. Blauer, R. S. MacLeod, N. A. Trayanova.

“Virtual Electrophysiological Study of Atrial Fibrillation in Fibrotic Remodeling,” In PLoS ONE, Vol. 10, No. 2, Public Library of Science, pp. 1-16. May, 2015.

DOI: 10.1371/journal.pone.0117110

Research has indicated that atrial fibrillation (AF) ablation failure is related to the presence of atrial fibrosis. However, it remains unclear whether this information can be successfully used to predict the optimal ablation targets for AF termination. We aimed to provide a proof of concept that a patient-specific virtual electrophysiological study that combines (i) atrial structure and fibrosis distribution from clinical MRI and (ii) modeling of atrial electrophysiology could be used to predict: (1) how fibrosis distribution determines the locations from which paced beats degrade into AF; (2) the dynamic behavior of persistent AF rotors; and (3) the optimal ablation targets in each patient. Four MRI-based patient-specific models of fibrotic left atria were generated, ranging in fibrosis amount. Virtual electrophysiological studies were performed in these models, and where AF was inducible, the dynamics of AF were used to determine the ablation locations that render AF non-inducible. AF was induced in 2 of the 4 patient-specific models; in these models, the distance between a given pacing location and the closest fibrotic region determined whether AF was inducible from that particular location, with only mid-range distances resulting in arrhythmia. Phase singularities of persistent rotors were found to move within restricted regions of tissue, which were independent of the pacing location from which AF was induced. Electrophysiological sensitivity analysis demonstrated that these regions changed little with variations in electrophysiological parameters. Patient-specific distribution of fibrosis was thus found to be a critical component of AF initiation and maintenance. When the restricted regions encompassing the meander of the persistent phase singularities were modeled as ablation lesions, AF could no longer be induced.
The study demonstrates that a patient-specific modeling approach to non-invasively identify AF ablation targets prior to the clinical procedure is feasible.

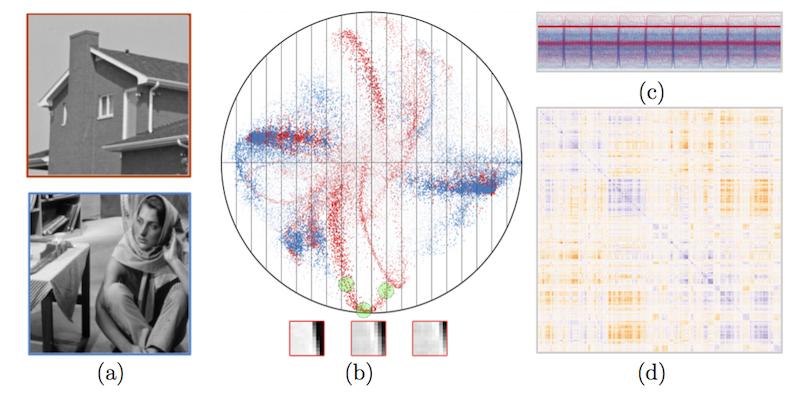

S. McKenna, M. Meyer, C. Gregg, S. Gerber.

“s-CorrPlot: An Interactive Scatterplot for Exploring Correlation,” In Journal of Computational and Graphical Statistics, 2015.

DOI: 10.1080/10618600.2015.1021926

F. Mesadi, M. Cetin, T. Tasdizen.

“Disjunctive Normal Shape and Appearance Priors with Applications to Image Segmentation,” In Lecture Notes in Computer Science, Springer International Publishing, pp. 703--710. 2015.

ISBN: 978-3-319-24574-4

DOI: 10.1007/978-3-319-24574-4_84

The use of appearance and shape priors in image segmentation is known to improve accuracy; however, existing techniques have several drawbacks. Active shape and appearance models require landmark points and assume unimodal shape and appearance distributions. Level set based shape priors are limited to global shape similarity. In this paper, we present novel shape and appearance priors for image segmentation based on an implicit parametric shape representation called the disjunctive normal shape model (DNSM). DNSM is formed by the disjunction of conjunctions of half-spaces defined by discriminants. We learn shape and appearance statistics at varying spatial scales using nonparametric density estimation. Our method can generate a rich set of shape variations by locally combining training shapes. Additionally, by studying the intensity and texture statistics around each discriminant of our shape model, we construct a local appearance probability map. Experiments carried out on both medical and natural image datasets show the potential of the proposed method.
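The abstract describes DNSM only in words. A minimal sketch, assuming the usual relaxed disjunctive-normal form: each half-space indicator becomes a logistic sigmoid of a linear discriminant, each conjunction (a convex polytope) is the product of its sigmoids, and the disjunction is taken via De Morgan's law, giving f(x) = 1 - ∏_i (1 - ∏_j σ(w_ij · x + b_ij)). The discriminants below are hand-picked for illustration (the hypothetical `box` helper builds an axis-aligned rectangle); in the paper they are learned, and the shape and appearance priors are built on top of this representation.

```python
import math
from typing import List, Sequence, Tuple

# (weights w, bias b): w . x + b > 0 means "inside this half-space"
HalfSpace = Tuple[Sequence[float], float]


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def dnsm(x: Sequence[float], polytopes: List[List[HalfSpace]]) -> float:
    """Soft membership of point x in a union of polytopes."""
    outside_all = 1.0
    for halfspaces in polytopes:
        inside_polytope = 1.0
        for w, b in halfspaces:
            # Conjunction: product of relaxed half-space indicators.
            inside_polytope *= sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
        # Disjunction via De Morgan: outside the union only if outside every polytope.
        outside_all *= 1.0 - inside_polytope
    return 1.0 - outside_all


def box(x0: float, y0: float, x1: float, y1: float, s: float = 50.0) -> List[HalfSpace]:
    """Hypothetical helper: rectangle [x0, x1] x [y0, y1] as four half-spaces of slope s."""
    return [((s, 0.0), -s * x0), ((-s, 0.0), s * x1), ((0.0, s), -s * y0), ((0.0, -s), s * y1)]


shape = [box(0, 0, 1, 1), box(2, 0, 3, 1)]  # two disjoint unit squares
print(round(dnsm((0.5, 0.5), shape), 3))  # 1.0: inside the first square
print(round(dnsm((1.5, 0.5), shape), 3))  # 0.0: in the gap between the squares
```

The slope `s` controls how sharp the shape boundary is; a smooth, differentiable membership function is what makes the representation convenient for optimisation during segmentation.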

SCI Institute.

Note: NCR Toolset: A collection of software tools for the reconstruction and visualization of neural circuitry from electron microscopy data. Scientific Computing and Imaging Institute (SCI). Download from: http://www.sci.utah.edu/software.html, 2015.

C. Nobre, A. Lex.

“OceanPaths: Visualizing Multivariate Oceanography Data,” In Eurographics Conference on Visualization (EuroVis) - Short Papers, Edited by E. Bertini, J. Kennedy, E. Puppo, The Eurographics Association, 2015.

DOI: 10.2312/eurovisshort.20151124

Geographical datasets are ubiquitous in oceanography. While map-based visualizations are useful for many different domains, they can suffer from cluttering and overplotting issues when used for multivariate data sets. As a result, spatial data exploration in oceanography has often been restricted to multiple maps showing various depths or time intervals. This lack of interactive exploration often hinders efforts to expose correlations between properties of oceanographic features, specifically currents. OceanPaths provides powerful interaction and exploration methods for spatial, multivariate oceanography datasets to remedy these situations. Fundamentally, our method allows users to define pathways, typically following currents, along which the variation of the high-dimensional data can be plotted efficiently. We present a case study conducted by domain experts to underscore the usefulness of OceanPaths in uncovering trends and correlations in oceanographic data sets.
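The abstract does not specify how values are extracted along a pathway. A minimal sketch, assuming each variable is stored on a regular grid with unit spacing and that a pathway is a user-drawn polyline in grid coordinates: resample the polyline at equal arc-length steps and bilinearly interpolate every variable at the samples, yielding one 1D profile per variable that can be plotted side by side. The grid layout, the planar (not great-circle) distances, and all function names are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]
Grid = Sequence[Sequence[float]]  # grid[row = y][col = x], unit spacing


def resample_path(path: Sequence[Point], n: int) -> List[Point]:
    """Return n >= 2 points evenly spaced by arc length along a polyline."""
    seg = [math.dist(path[i], path[i + 1]) for i in range(len(path) - 1)]
    total = sum(seg)
    out = []
    for s in range(n):
        target = total * s / (n - 1)
        i = 0
        # Walk along the segments until the target distance falls inside one.
        while i < len(seg) - 1 and target > seg[i]:
            target -= seg[i]
            i += 1
        t = target / seg[i] if seg[i] > 0 else 0.0
        (x0, y0), (x1, y1) = path[i], path[i + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def bilinear(grid: Grid, x: float, y: float) -> float:
    """Bilinear interpolation; (x, y) must lie inside the grid."""
    i = min(int(y), len(grid) - 2)
    j = min(int(x), len(grid[0]) - 2)
    fy, fx = y - i, x - j
    top = grid[i][j] * (1 - fx) + grid[i][j + 1] * fx
    bottom = grid[i + 1][j] * (1 - fx) + grid[i + 1][j + 1] * fx
    return top * (1 - fy) + bottom * fy


def profiles_along_path(variables: Dict[str, Grid], path: Sequence[Point], n: int = 50) -> Dict[str, List[float]]:
    """Sample every named variable along the same resampled pathway."""
    samples = resample_path(path, n)
    return {name: [bilinear(g, x, y) for x, y in samples] for name, g in variables.items()}


temp = [[c + 10 * r for c in range(5)] for r in range(5)]  # toy field: x + 10*y
print(profiles_along_path({"temp": temp}, [(0, 0), (4, 0), (4, 4)], n=9)["temp"])
```

Sharing one set of sample points across all variables is what lets the profiles be aligned and compared directly, for example to spot a correlation between two properties along a current.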

I. Oguz, J. Cates, M. Datar, B. Paniagua, T. Fletcher, C. Vachet, M. Styner, R. Whitaker.

“Entropy-based particle correspondence for shape populations,” In International Journal of Computer Assisted Radiology and Surgery, Springer, pp. 1-12. December, 2015.

Purpose

Statistical shape analysis of anatomical structures plays an important role in many medical image analysis applications such as understanding the structural changes in anatomy in various stages of growth or disease. Establishing accurate correspondence across object populations is essential for such statistical shape analysis studies.

Methods

In this paper, we present an entropy-based correspondence framework for computing point-based correspondence among populations of surfaces in a groupwise manner. This robust framework is parameterization-free and computationally efficient. We review the core principles of this method as well as various extensions to deal effectively with surfaces of complex geometry and application-driven correspondence metrics.
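The abstract names the entropy-based objective without writing it out. For orientation, one common way to state such a groupwise particle objective is given below. It is offered as an assumption rather than quoted from the paper. Here $X^k$ denotes the particle positions on surface $k$ of $N$, $Z$ is the shape-space variable whose samples are the concatenated particle vectors, and the Gaussian model of $Z$ (with regularisation $\alpha$ on the eigenvalues $\lambda_i$ of its sample covariance $\Sigma$) is likewise an assumption.

```latex
% Minimising H(Z) favours a compact shape model (good correspondence);
% maximising each H(X^k) spreads particles evenly over surface k.
Q = H(Z) - \sum_{k=1}^{N} H\!\left(X^{k}\right),
\qquad
H(Z) \approx \tfrac{1}{2}\log\lvert\Sigma\rvert
      \approx \tfrac{1}{2}\sum_{i}\log\left(\lambda_i + \alpha\right)
```

The trade-off between the two terms is what lets such a framework establish correspondence without a surface parameterization: only particle positions on each surface enter the cost.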

Results

We apply our method to synthetic and biological datasets to illustrate the concepts proposed and compare the performance of our framework to existing techniques.

Conclusions

Through the numerous extensions and variations presented here, we create a very flexible framework that can effectively handle objects of various topologies, multi-object complexes, open surfaces, and objects of complex geometry such as high-curvature regions or extremely thin features.

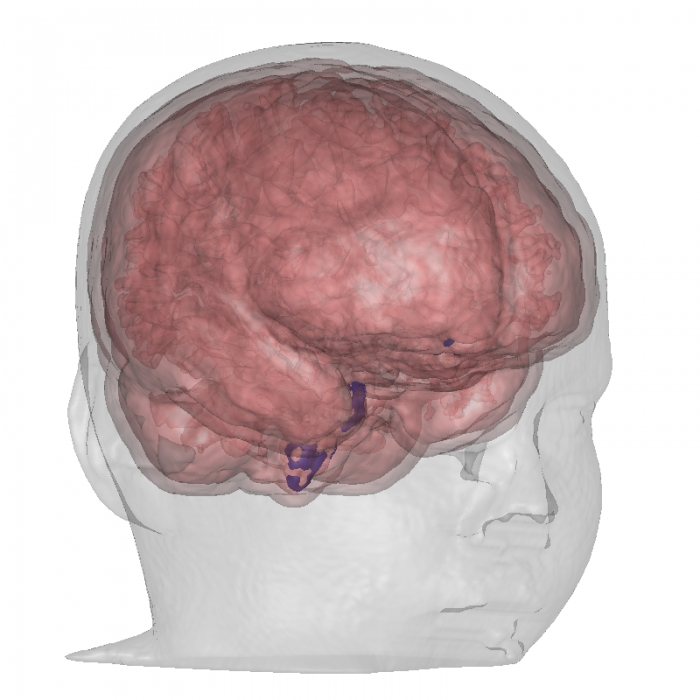

B.R. Parmar, T.R. Jarrett, E.G. Kholmovski, N. Hu, D. Parker, R.S. MacLeod, N.F. Marrouche, R. Ranjan.

“Poor scar formation after ablation is associated with atrial fibrillation recurrence,” In Journal of Interventional Cardiac Electrophysiology, Vol. 44, No. 3, pp. 247-256. December, 2015.

Purpose

Patients routinely undergo ablation for atrial fibrillation (AF), but the recurrence rate remains high. In this study, we explored whether poor scar formation as seen on late-gadolinium enhancement magnetic resonance imaging (LGE-MRI) correlates with AF recurrence following ablation.

Methods

We retrospectively identified 94 consecutive patients who underwent their initial ablation for AF at our institution and had pre-procedural magnetic resonance angiography (MRA) merged with left atrial (LA) anatomy in an electroanatomic mapping (EAM) system, ablated areas marked intraprocedurally in EAM, a 3-month post-ablation LGE-MRI for assessment of scar, and a minimum of 3 months of clinical follow-up. Ablated area was quantified retrospectively in EAM, and scarred area was quantified in the 3-month post-ablation LGE-MRI.

Results

With a mean follow-up of 336 days, 26 of 94 patients had AF recurrence. Age, hypertension, and heart failure were not associated with AF recurrence, but LA size and the difference between EAM ablated area and LGE-MRI scar area were associated with higher AF recurrence. For each percent higher difference between EAM ablated area and LGE-MRI scar area, there was a 7–9% higher AF recurrence (p values 0.001–0.003), depending on the multivariate analysis.
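A quick worked check on the reported figures: 26 of 94 patients corresponds to a recurrence proportion of about 27.7%. If the per-percent effect is read as a constant multiplicative ratio of 1.07 to 1.09 per percentage point (an assumption about the underlying model, for example a proportional-hazards fit; the abstract only says the estimate depends on the multivariate analysis), the effect compounds over larger gaps between ablated and scarred area. The 10-point gap in the example is hypothetical.

```python
def recurrence_proportion(recurred: int, total: int) -> float:
    """Fraction of patients with AF recurrence."""
    return recurred / total


def compounded_ratio(per_point_ratio: float, gap_points: float) -> float:
    """Relative risk for a gap of `gap_points` percentage points, assuming
    a constant multiplicative effect per point (hypothetical model)."""
    return per_point_ratio ** gap_points


print(f"{recurrence_proportion(26, 94):.1%}")  # 27.7%
for ratio in (1.07, 1.09):
    # Hypothetical 10-point gap between EAM ablated area and LGE-MRI scar area.
    print(ratio, round(compounded_ratio(ratio, 10), 2))  # 1.97 and 2.37
```

Under this reading, a 10-point shortfall in scar relative to the ablated area would correspond to roughly double the recurrence risk; the linear reading (10 × 7–9% = 70–90%) gives a smaller figure, which is why the model behind the estimate matters.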

Conclusions

In AF ablation, poor scar formation as seen on LGE-MRI was associated with AF recurrence. Improved mapping and ablation techniques are necessary to achieve the desired LA scar and reduce AF recurrence.

B. Peterson, N. Xiao, J. Holmen, S. Chaganti, A. Pakki, J. Schmidt, D. Sunderland, A. Humphrey, M. Berzins.

“Developing Uintah’s Runtime System For Forthcoming Architectures,” Subtitled “Refereed paper presented at the RESPA 15 Workshop at SuperComputing 2015, Austin, Texas,” SCI Institute, 2015.

B. Peterson, H. K. Dasari, A. Humphrey, J.C. Sutherland, T. Saad, M. Berzins.

“Reducing overhead in the Uintah framework to support short-lived tasks on GPU-heterogeneous architectures,” In Proceedings of the 5th International Workshop on Domain-Specific Languages and High-Level Frameworks for High Performance Computing (WOLFHPC'15), ACM, pp. 4:1-4:8. 2015.

DOI: 10.1145/2830018.2830023

J. M. Phillips, Bei Wang, Y. Zheng.

“Geometric Inference on Kernel Density Estimates,” In CoRR, Vol. abs/1307.7760, 2015.
