SCI Publications

2011

D.E. Hart, M. Berzins, C.E. Goodyer, P.K. Jimack.

“Using Adjoint Error Estimation Techniques for Elastohydrodynamic Lubrication Line Contact Problems,” In International Journal for Numerical Methods in Fluids, Vol. 67, Note: Published online 29 October, pp. 1559--1570. 2011.

H.C. Hazlett, M. Poe, G. Gerig, M. Styner, C. Chappell, R.G. Smith, C. Vachet, J. Piven.

“Early Brain Overgrowth in Autism Associated with an Increase in Cortical Surface Area Before Age 2,” In Archives of General Psychiatry, Vol. 68, No. 5, pp. 467--476. 2011.

DOI: 10.1001/archgenpsychiatry.2011.39

C.R. Henak, B.J. Ellis, M.D. Harris, A.E. Anderson, C.L. Peters, J.A. Weiss.

“Role of the acetabular labrum in load support across the hip joint,” In Journal of Biomechanics, Vol. 44, No. 12, pp. 2201--2206. 2011.

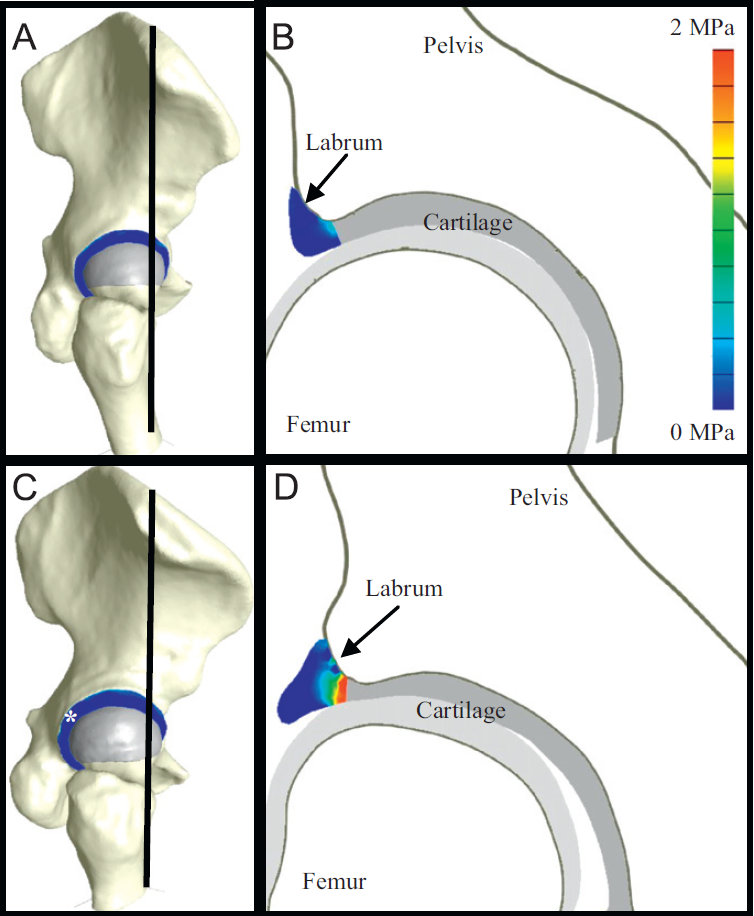

The relatively high incidence of labral tears among patients presenting with hip pain suggests that the acetabular labrum is often subjected to injurious loading in vivo. However, it is unclear whether the labrum participates in load transfer across the joint during activities of daily living. This study examined the role of the acetabular labrum in load transfer for hips with normal acetabular geometry and acetabular dysplasia using subject-specific finite element analysis. Models were generated from volumetric CT data and analyzed with and without the labrum during activities of daily living. The labrum in the dysplastic model supported 4–11% of the total load transferred across the joint, while the labrum in the normal model supported only 1–2% of the total load. Despite the increased load transferred to the acetabular cartilage in simulations without the labrum, there were minimal differences in cartilage contact stresses. This was because the load supported by the cartilage correlated with the cartilage contact area. A higher percentage of load was transferred to the labrum in the dysplastic model because the femoral head achieved equilibrium near the lateral edge of the acetabulum. The results of this study suggest that the labrum plays a larger role in load transfer and joint stability in hips with acetabular dysplasia than in hips with normal acetabular geometry.

L. Hogrebe, A. Paiva, E. Jurrus, C. Christensen, M. Bridge, J.R. Korenberg, T. Tasdizen.

“Trace Driven Registration of Neuron Confocal Microscopy Stacks,” In IEEE International Symposium on Biomedical Imaging (ISBI), pp. 1345--1348. 2011.

DOI: 10.1109/ISBI.2011.5872649

I. Hunsaker, T. Harman, J. Thornock, P.J. Smith.

“Efficient Parallelization of RMCRT for Large Scale LES Combustion Simulations,” In Proceedings of the AIAA 20th Computational Fluid Dynamics Conference, 2011.

DOI: 10.2514/6.2011-3770

A. Irimia, M.C. Chambers, J.R. Alger, M. Filippou, M.W. Prastawa, Bo Wang, D. Hovda, G. Gerig, A.W. Toga, R. Kikinis, P.M. Vespa, J.D. Van Horn.

“Comparison of acute and chronic traumatic brain injury using semi-automatic multimodal segmentation of MR volumes,” In Journal of Neurotrauma, Vol. 28, No. 11, pp. 2287--2306. November, 2011.

DOI: 10.1089/neu.2011.1920

PubMed ID: 21787171

Although neuroimaging is essential for prompt and proper management of traumatic brain injury (TBI), there is a regrettable and acute lack of robust methods for the visualization and assessment of TBI pathophysiology, especially for the purpose of improving clinical outcome metrics. Until now, the application of automatic segmentation algorithms to TBI in a clinical setting has remained an elusive goal because existing methods have, for the most part, been insufficiently robust to faithfully capture TBI-related changes in brain anatomy. This article introduces and illustrates the combined use of multimodal TBI segmentation and time point comparison using 3D Slicer, a widely used software environment whose TBI data processing solutions are openly available. For three representative TBI cases, semi-automatic tissue classification and 3D model generation are performed to enable intra-patient time point comparison of TBI using multimodal volumetrics and clinical atrophy measures. Identification and quantitative assessment of extra- and intra-cortical bleeding, lesions, edema, and diffuse axonal injury are demonstrated. The proposed tools allow cross-correlation of multimodal metrics from structural imaging (e.g., structural volume, atrophy measurements) with clinical outcome variables and other potential factors predictive of recovery. In addition, the workflows described are suitable for TBI clinical practice and patient monitoring, particularly for assessing damage extent and for the measurement of neuroanatomical change over time. With knowledge of general location, extent, and degree of change, such metrics can be associated with clinical measures and subsequently used to suggest viable treatment options.

Keywords: namic

B.M. Isaacson, J.G. Stinstra, R.D. Bloebaum, COL P.F. Pasquina, R.S. MacLeod.

“Establishing Multiscale Models for Simulating Whole Limb Estimates of Electric Fields for Osseointegrated Implants,” In IEEE Transactions on Biomedical Engineering, Vol. 58, No. 10, pp. 2991--2994. 2011.

DOI: 10.1109/TBME.2011.2160722

PubMed ID: 21712151

PubMed Central ID: PMC3179554

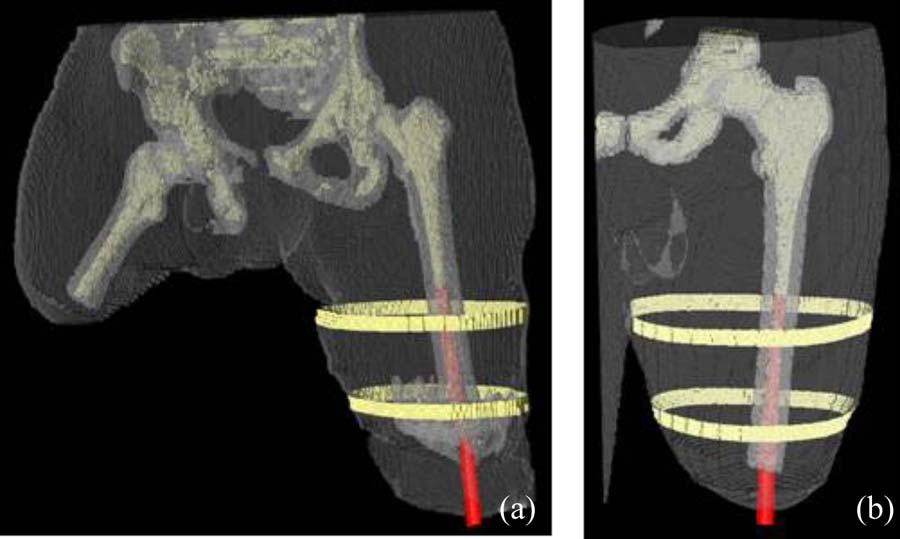

Although the survival rates of warfighters in recent conflicts are among the highest in military history, those who have sustained proximal limb amputations may present additional rehabilitation challenges. In some of these cases, traditional prosthetic limbs may not provide adequate function for service members returning to an active lifestyle. Osseointegration has emerged as an acknowledged treatment for those with limited residual limb length and those with skin issues associated with a socket. Using this technology, direct skeletal attachment occurs between a transcutaneous osseointegrated implant (TOI) and the host bone, thereby eliminating the need for a socket. While reports from the first 100 patients with a TOI have been promising, some rehabilitation regimens require 12-18 months of restricted weight bearing to prevent overloading at the bone-implant interface. Electrically induced osseointegration has been proposed as an option for expediting periprosthetic fixation, and preliminary studies have demonstrated the feasibility of adapting the TOI into a functional cathode. To assure safe and effective electric fields that are conducive to osseoinduction and osseointegration, we have developed multiscale modeling approaches to simulate the expected electric metrics at the bone-implant interface. We have used computed tomography scans and volume segmentation tools to create anatomically accurate models that clearly distinguish tissue parameters and serve as the basis for finite element analysis. This translational computational biological process has supported biomedical electrode design and implant placement, and experiments to date have demonstrated the clinical feasibility of electrically induced osseointegration.

S.A. Isaacson, R.M. Kirby.

“Numerical Solution of Linear Volterra Integral Equations of the Second Kind with Sharp Gradients,” In Journal of Computational and Applied Mathematics, Vol. 235, No. 14, pp. 4283--4301. 2011.

Collocation methods are a well-developed approach for the numerical solution of smooth and weakly singular Volterra integral equations. In this paper, we extend these methods through the use of partitioned quadrature based on the qualocation framework, to allow the efficient numerical solution of linear, scalar Volterra integral equations of the second kind with smooth kernels containing sharp gradients. In this case, the standard collocation methods may lose computational efficiency despite the smoothness of the kernel. We illustrate how the qualocation framework can allow one to focus computational effort where necessary through improved quadrature approximations, while keeping the solution approximation fixed. The computational performance improvement introduced by our new method is examined through several test examples. The final example we consider is the original problem that motivated this work: the problem of calculating the probability density associated with a continuous-time random walk in three dimensions that may be killed at a fixed lattice site. To demonstrate how separating the solution approximation from quadrature approximation may improve computational performance, we also compare our new method to several existing Gregory, Sinc, and global spectral methods, where quadrature approximation and solution approximation are coupled.
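For orientation, a linear Volterra integral equation of the second kind has the form u(t) = f(t) + ∫₀ᵗ K(t, s) u(s) ds. The sketch below is not the qualocation method of the paper; it is a basic trapezoidal-rule time-stepping scheme on a uniform grid, included only to show what solving such an equation numerically involves. The function name `solve_volterra_trapezoid` and its parameters are hypothetical.

```python
import math


def solve_volterra_trapezoid(kernel, f, t_end, n):
    """Approximate u(t) = f(t) + int_0^t kernel(t, s) u(s) ds on [0, t_end].

    Uses the composite trapezoidal rule on n uniform steps (illustrative only).
    Returns the grid points and the approximate solution values.
    """
    h = t_end / n
    t = [i * h for i in range(n + 1)]
    u = [f(t[0])]  # at t = 0 the integral vanishes, so u(0) = f(0)
    for i in range(1, n + 1):
        # Trapezoidal sum over the values u[0..i-1] that are already known.
        acc = 0.5 * kernel(t[i], t[0]) * u[0]
        for j in range(1, i):
            acc += kernel(t[i], t[j]) * u[j]
        # The unknown u[i] enters the quadrature with weight h/2; solve for it.
        denom = 1.0 - 0.5 * h * kernel(t[i], t[i])
        u.append((f(t[i]) + h * acc) / denom)
    return t, u


if __name__ == "__main__":
    # Test problem with a known solution: K = 1, f = 1 gives u(t) = exp(t).
    grid, approx = solve_volterra_trapezoid(lambda t, s: 1.0, lambda t: 1.0, 1.0, 200)
    print(abs(approx[-1] - math.e))
```

Each step reuses every earlier solution value, so the cost grows quadratically with the number of grid points. A kernel with sharp gradients would force a uniformly fine grid in a scheme like this one, which is the kind of inefficiency the abstract describes.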

T. Ize, C.D. Hansen.

“RTSAH Traversal Order for Occlusion Rays,” In Computer Graphics Forum, Vol. 30, No. 2, Wiley-Blackwell, pp. 297--305. April, 2011.

DOI: 10.1111/j.1467-8659.2011.01861.x

We accelerate the finding of occluders in tree based acceleration structures, such as a packetized BVH and a single ray kd-tree, by deriving the ray termination surface area heuristic (RTSAH) cost model for traversing an occlusion ray through a tree and then using the RTSAH to determine which child node a ray should traverse first instead of the traditional choice of traversing the near node before the far node. We further extend RTSAH to handle materials that attenuate light instead of fully occluding it, so that we can avoid superfluous intersections with partially transparent objects. For scenes with high occlusion, we substantially lower the number of traversal steps and intersection tests and achieve up to 2x speedups.

T. Ize, C. Brownlee, C.D. Hansen.

“Real-Time Ray Tracer for Visualizing Massive Models on a Cluster,” In Proceedings of the 2011 Eurographics Symposium on Parallel Graphics and Visualization, pp. 61--69. 2011.

We present a state of the art read-only distributed shared memory (DSM) ray tracer capable of fully utilizing modern cluster hardware to render massive out-of-core polygonal models at real-time frame rates. Achieving this required adapting a state of the art packetized BVH acceleration structure for use with DSM and modifying the mesh and BVH data layouts to minimize communication costs. Furthermore, several design decisions and optimizations were made to take advantage of InfiniBand interconnects and multi-core machines.

S. Jadhav, H. Bhatia, P.-T. Bremer, J.A. Levine, L.G. Nonato, V. Pascucci.

“Consistent Approximation of Local Flow Behavior for 2D Vector Fields,” In Mathematics and Visualization, Springer, pp. 141--159. Nov, 2011.

DOI: 10.1007/978-3-642-23175-9_10

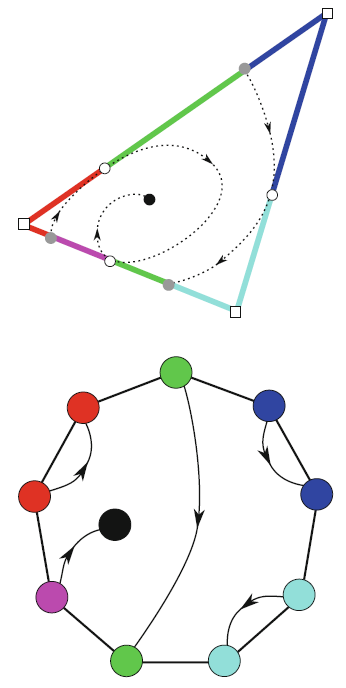

Typically, vector fields are stored as a set of sample vectors at discrete locations. Vector values at unsampled points are defined by interpolating some subset of the known sample values. In this work, we consider two-dimensional domains represented as triangular meshes with samples at all vertices, and vector values on the interior of each triangle are computed by piecewise linear interpolation.

Many of the commonly used techniques for studying properties of the vector field require integration techniques that are prone to inconsistent results. Analysis based on such inconsistent results may lead to incorrect conclusions about the data. For example, vector field visualization techniques integrate the paths of massless particles (streamlines) in the flow or advect a texture using line integral convolution (LIC). Techniques like computation of the topological skeleton of a vector field require integrating separatrices, which are streamlines that asymptotically bound regions where the flow behaves differently. Since these integrations may lead to compound numerical errors, the computed streamlines may intersect, violating some of their fundamental properties such as being pairwise disjoint. Detecting these computational artifacts to allow further analysis to proceed normally remains a significant challenge.
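The piecewise linear interpolation mentioned in this abstract can be sketched with barycentric coordinates: inside a triangle, the vector value is the weighted average of the three vertex samples. This is a minimal illustration under that standard definition, not code from the paper; the function names `barycentric_coords` and `interpolate_vector` are hypothetical.

```python
def barycentric_coords(p, a, b, c):
    """Return the barycentric coordinates of 2D point p in triangle (a, b, c)."""
    (x, y), (x1, y1), (x2, y2), (x3, y3) = p, a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate triangle")
    l1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    l2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    return l1, l2, 1.0 - l1 - l2


def interpolate_vector(p, triangle, vectors):
    """Linearly interpolate the 2D vectors sampled at the triangle's vertices."""
    weights = barycentric_coords(p, *triangle)
    return tuple(
        sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(2)
    )


if __name__ == "__main__":
    tri = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
    samples = ((1.0, 0.0), (3.0, 1.0), (1.0, -1.0))  # samples of v(x, y) = (2x + 1, x - y)
    print(interpolate_vector((0.25, 0.5), tri, samples))
```

Because the interpolant is linear within each triangle, it reproduces any linear field exactly from its vertex samples. The inconsistencies discussed in the abstract arise from numerically integrating through this field, not from the interpolation itself.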

J. Jakeman, R. Archibald, D. Xiu.

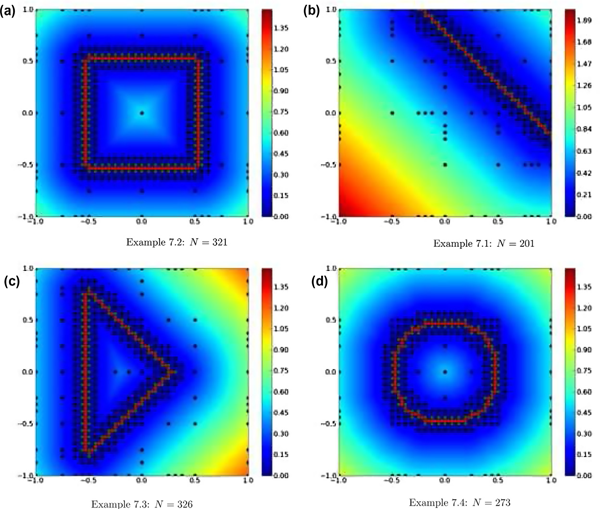

“Characterization of Discontinuities in High-dimensional Stochastic Problems on Adaptive Sparse Grids,” In Journal of Computational Physics, Vol. 230, No. 10, pp. 3977--3997. 2011.

DOI: 10.1016/j.jcp.2011.02.022

In this paper we present a set of efficient algorithms for detection and identification of discontinuities in high dimensional space. The method is based on extension of polynomial annihilation for discontinuity detection in low dimensions. Compared to the earlier work, the present method offers significant improvements for high dimensional problems. The core of the algorithms relies on adaptive refinement of sparse grids. It is demonstrated that in the commonly encountered cases where a discontinuity resides on a small subset of the dimensions, the present method becomes "optimal", in the sense that the total number of points required for function evaluations depends linearly on the dimensionality of the space. The details of the algorithms will be presented and various numerical examples are utilized to demonstrate the efficacy of the method.

Keywords: Adaptive sparse grids, Stochastic partial differential equations, Multivariate discontinuity detection, Generalized polynomial chaos method, High-dimensional approximation

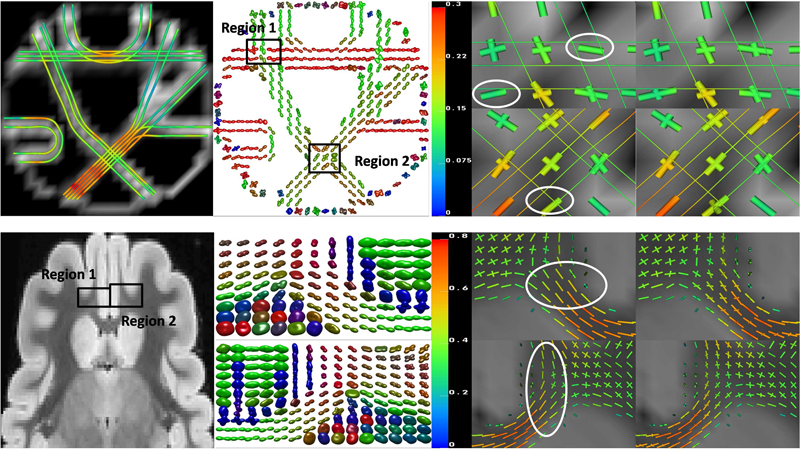

F. Jiao, Y. Gur, C.R. Johnson, S. Joshi.

“Detection of crossing white matter fibers with high-order tensors and rank-k decompositions,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI 2011), Lecture Notes in Computer Science (LNCS), Vol. 6801, pp. 538--549. 2011.

DOI: 10.1007/978-3-642-22092-0_44

PubMed Central ID: PMC3327305

Fundamental to high angular resolution diffusion imaging (HARDI) is the estimation of a positive-semidefinite orientation distribution function (ODF) and the extraction of the diffusion properties (e.g., fiber directions). In this work we show that these two goals can be achieved efficiently by using homogeneous polynomials to represent the ODF in the spherical deconvolution approach, as was proposed in the Cartesian Tensor-ODF (CT-ODF) formulation. Based on this formulation we first suggest an estimation method for positive-semidefinite ODF by solving a linear programming problem that does not require special parametrization of the ODF. We also propose a rank-k tensor decomposition, known as CP decomposition, to extract the fiber information from the estimated ODF. We show that this decomposition is superior to the fiber direction estimation via ODF maxima detection as it enables one to reach the full fiber separation resolution of the estimation technique. We assess the accuracy of this new framework by applying it to synthetic and experimentally obtained HARDI data.

P.K. Jimack, R.M. Kirby.

“Towards the Development of an h-p-Refinement Strategy Based Upon Error Estimate Sensitivity,” In Computers and Fluids, Vol. 46, No. 1, pp. 277--281. 2011.

The use of (a posteriori) error estimates is a fundamental tool in the application of adaptive numerical methods across a range of fluid flow problems. Such estimates are incomplete, however, in that they do not necessarily indicate where to refine in order to achieve the most impact on the error, nor what type of refinement (for example h-refinement or p-refinement) will be best. This paper extends preliminary work of the authors (Comm Comp Phys, 2010;7:631–8), which uses adjoint-based sensitivity estimates in order to address these questions, to include application with p-refinement to arbitrary order and the use of practical a posteriori estimates. Results are presented which demonstrate that the proposed approach can guide both the h-refinement and the p-refinement processes, to yield improvements in the adaptive strategy compared to the use of more orthodox criteria.

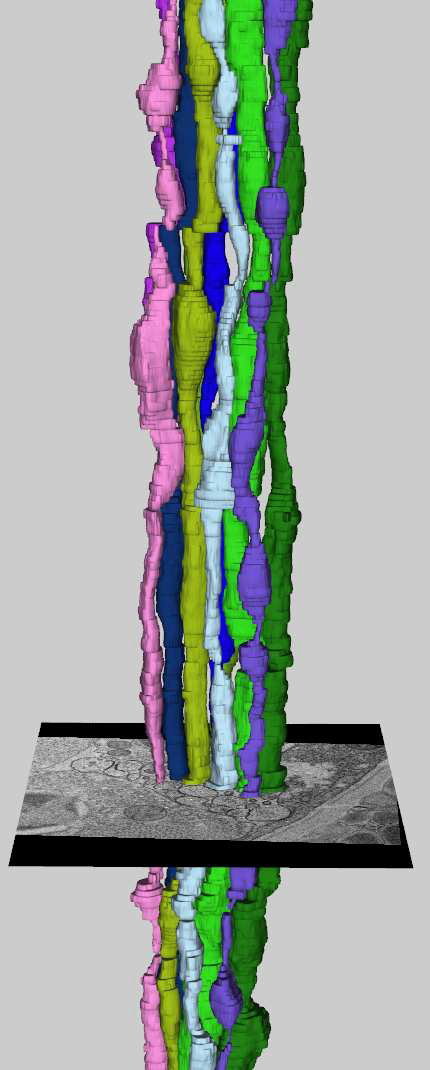

E. Jurrus, S. Watanabe, R. Guily, A.R.C. Paiva, M.H. Ellisman, E.M. Jorgensen, T. Tasdizen.

“Semi-automated Neuron Boundary Detection and Slice Traversal Algorithm for Segmentation of Neurons from Electron Microscopy Images,” In Microscopic Image Analysis with Applications in Biology (MIAAB) Workshop, 2011.

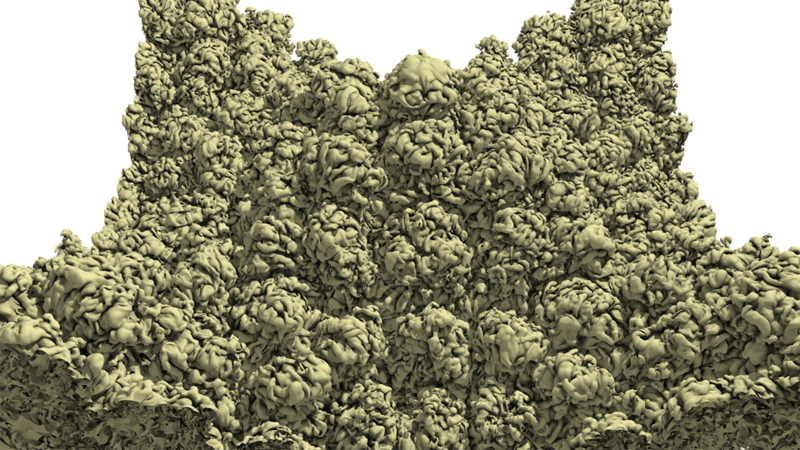

Neuroscientists are developing new imaging techniques and generating large volumes of data in an effort to understand the complex structure of the nervous system. To aid in the analysis, new segmentation techniques for identifying neurons in these feature rich datasets are required. However, the extremely anisotropic resolution of the data makes segmentation and tracking across slices difficult. This paper presents a complete method for segmenting neurons in electron microscopy images and visualizing them in three dimensions. First, we present an advanced method for identifying neuron membranes, necessary for whole neuron segmentation, using a machine learning approach. Next, neurons are segmented in each two-dimensional section and connected using correlation of regions between sections. These techniques, combined with a visual user interface, enable users to quickly segment whole neurons in large volumes.

K. Kamojjala, R.M. Brannon.

“Verification Of Frame Indifference For Complicated Numerical Constitutive Models,” In Proceedings of the ASME Early Career Technical Conference, 2011.

The principle of material frame indifference requires spatial stresses to rotate with the material, whereas reference stresses must be insensitive to rotation. Testing of a classical uniaxial strain problem with superimposed rotation reveals that a very common approach to strong incremental objectivity taken in finite element codes to satisfy frame indifference (namely working in an approximate un-rotated frame) fails this simplistic test. A more complicated verification example is constructed based on the method of manufactured solutions (MMS) which involves the same character of loading at all points, providing a means to test any nonlinear-elastic arbitrarily anisotropic constitutive model.
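The first sentence of this abstract can be checked numerically for a simple model. The sketch below is not the paper's uniaxial-strain or MMS test. It assumes a plane (2D) compressible neo-Hookean law, chosen here purely for illustration, and verifies that superimposing a rotation Q on the deformation gradient F rotates the Cauchy (spatial) stress as Q σ Qᵀ while leaving the second Piola-Kirchhoff (reference) stress unchanged. All function names and material constants are hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(a):
    return [[a[j][i] for j in range(2)] for i in range(2)]


def det(a):
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]


def inverse(a):
    d = det(a)
    return [[a[1][1] / d, -a[0][1] / d], [-a[1][0] / d, a[0][0] / d]]


def cauchy_stress(F, mu=1.0, lam=2.0):
    """Spatial stress: sigma = (mu (F F^T - I) + lam ln(J) I) / J (assumed model)."""
    J = det(F)
    B = matmul(F, transpose(F))
    return [[(mu * (B[i][j] - (i == j)) + lam * math.log(J) * (i == j)) / J
             for j in range(2)] for i in range(2)]


def second_pk_stress(F, mu=1.0, lam=2.0):
    """Reference stress obtained by pull-back: S = J F^{-1} sigma F^{-T}."""
    J = det(F)
    F_inv = inverse(F)
    scaled = [[J * s for s in row] for row in cauchy_stress(F, mu, lam)]
    return matmul(matmul(F_inv, scaled), transpose(F_inv))


def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def max_abs_diff(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))


if __name__ == "__main__":
    F = [[1.2, 0.3], [0.1, 0.9]]
    Q = rotation(0.7)
    QF = matmul(Q, F)
    rotated = matmul(matmul(Q, cauchy_stress(F)), transpose(Q))
    print(max_abs_diff(cauchy_stress(QF), rotated))           # spatial stress rotates
    print(max_abs_diff(second_pk_stress(QF), second_pk_stress(F)))  # reference stress unchanged
```

A closed-form hyperelastic law like this one passes the check by construction. The abstract's point is that incrementally integrated rate-form models in finite element codes can fail an analogous test, which is why a dedicated verification problem is useful.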

Y. Keller, Y. Gur.

“A Diffusion Approach to Network Localization,” In IEEE Transactions on Signal Processing, Vol. 59, No. 6, pp. 2642--2654. 2011.

DOI: 10.1109/TSP.2011.2122261

D. Keyes, V. Taylor, T. Hey, S. Feldman, G. Allen, P. Colella, P. Cummings, F. Darema, J. Dongarra, T. Dunning, M. Ellisman, I. Foster, W. Gropp, C.R. Johnson, C. Kamath, R. Madduri, M. Mascagni, S.G. Parker, P. Raghavan, A. Trefethen, S. Valcourt, A. Patra, F. Choudhury, C. Cooper, P. McCartney, M. Parashar, T. Russell, B. Schneider, J. Schopf, N. Sharp.

“Advisory Committee for CyberInfrastructure Task Force on Software for Science and Engineering,” Note: NSF Report, 2011.

The Software for Science and Engineering (SSE) Task Force commenced in June 2009 with a charge that consisted of the following three elements:

Identify specific needs and opportunities across the spectrum of scientific software infrastructure. Characterize the specific needs and analyze technical gaps and opportunities for NSF to meet those needs through individual and systemic approaches.

Design responsive approaches. Develop initiatives and programs led (or co-led) by NSF to grow, develop, and sustain the software infrastructure needed to support NSF’s mission of transformative research and innovation leading to scientific leadership and technological competitiveness.

Address issues of institutional barriers. Anticipate, analyze and address both institutional and exogenous barriers to NSF’s promotion of such an infrastructure.

The SSE Task Force members participated in bi-weekly telecons to address the given charge. The telecons often included additional distinguished members of the scientific community beyond the task force membership engaged in software issues, as well as personnel from federal agencies outside of NSF who manage software programs. It was quickly acknowledged that a number of reports loosely and tightly related to SSE existed and should be leveraged. By September 2009, the task force had formed three subcommittees focused on the following topics: (1) compute-intensive science, (2) data-intensive science, and (3) software evolution.

S.H. Kim, V. Fonov, J. Piven, J. Gilmore, C. Vachet, G. Gerig, D.L. Collins, M. Styner.

“Spatial Intensity Prior Correction for Tissue Segmentation in the Developing Human Brain,” In Proceedings of IEEE ISBI 2011, pp. 2049--2052. 2011.

DOI: 10.1109/ISBI.2011.5872815

R.M. Kirby, B. Cockburn, S.J. Sherwin.

“To CG or to HDG: A Comparative Study,” In Journal of Scientific Computing, Note: published online, 2011.

DOI: 10.1007/s10915-011-9501-7

Hybridization through the border of the elements (hybrid unknowns) combined with a Schur complement procedure (often called static condensation in the context of continuous Galerkin linear elasticity computations) has in various forms been advocated in the mathematical and engineering literature as a means of accomplishing domain decomposition, of obtaining increased accuracy and convergence results, and of algorithm optimization. Recent work on the hybridization of mixed methods, and in particular of the discontinuous Galerkin (DG) method, holds the promise of capitalizing on the three aforementioned properties; in particular, of generating a numerical scheme that is discontinuous in both the primary and flux variables, is locally conservative, and is computationally competitive with traditional continuous Galerkin (CG) approaches. In this paper we present both implementation and optimization strategies for the Hybridizable Discontinuous Galerkin (HDG) method applied to two dimensional elliptic operators. We implement our HDG approach within a spectral/hp element framework so that comparisons can be done between HDG and the traditional CG approach.

We demonstrate that the HDG approach generates a global trace space system for the unknown that although larger in rank than the traditional static condensation system in CG, has significantly smaller bandwidth at moderate polynomial orders. We show that if one ignores set-up costs, above approximately fourth-degree polynomial expansions on triangles and quadrilaterals the HDG method can be made to be as efficient as the CG approach, making it competitive for time-dependent problems even before taking into consideration other properties of DG schemes such as their superconvergence properties and their ability to handle hp-adaptivity.