SCI Publications

2011

N. Sadeghi, M.W. Prastawa, P.T. Fletcher, J.H. Gilmore, W. Lin, G. Gerig.

“Statistical Growth Modeling of Longitudinal DT-MRI for Regional Characterization of Early Brain Development,” In Proceedings of the Medical Image Computing and Computer Assisted Intervention (MICCAI) 2011 Workshop on Image Analysis of Human Brain Development, pp. 1507--1510. 2011.

DOI: 10.1109/ISBI.2012.6235858

A population growth model that represents the growth trajectories of individual subjects is critical to study and understand neurodevelopment. This paper presents a framework for jointly estimating and modeling individual and population growth trajectories, and determining significant regional differences in growth pattern characteristics applied to longitudinal neuroimaging data. We use non-linear mixed effect modeling where temporal change is modeled by the Gompertz function. The Gompertz function uses intuitive parameters related to delay, rate of change, and expected asymptotic value; all descriptive measures which can answer clinical questions related to growth. Our proposed framework combines nonlinear modeling of individual trajectories, population analysis, and testing for regional differences. We apply this framework to the study of early maturation in white matter regions as measured with diffusion tensor imaging (DTI). Regional differences between anatomical regions of interest that are known to mature differently are analyzed and quantified. Experiments with image data from a large ongoing clinical study show that our framework provides descriptive, quantitative information on growth trajectories that can be directly interpreted by clinicians. To our knowledge, this is the first longitudinal analysis of growth functions to explain the trajectory of early brain maturation as it is represented in DTI.
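As a rough illustration of the three parameters named in the abstract, a Gompertz curve can be sketched as below. The parameterization `asymptote * exp(-delay * exp(-rate * t))` is one common form and is an assumption here; the paper may use a different but equivalent one. The function name and all numeric values are hypothetical.

```python
import math


def gompertz(t, asymptote, delay, rate):
    """Gompertz curve in one common form: asymptote * exp(-delay * exp(-rate * t)).

    asymptote: value approached as t grows large
    delay:     larger values push the rise to later times
    rate:      larger values make the curve reach the asymptote sooner
    """
    return asymptote * math.exp(-delay * math.exp(-rate * t))


# Hypothetical values for illustration only (not taken from the paper).
ages_in_days = [0, 180, 365, 730]
trajectory = [gompertz(t, asymptote=0.5, delay=1.0, rate=0.01) for t in ages_in_days]
```

In a mixed-effects setting, each subject would get its own perturbation of these three parameters around the population values; that fitting step is not shown here.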

Keywords: namic

A. Sadeghirad, R.M. Brannon, J. Burghardt.

“A Convected Particle Domain Interpolation Technique To Extend Applicability of the Material Point Method for Problems Involving Massive Deformations,” In International Journal for Numerical Methods in Engineering, Vol. 86, No. 12, pp. 1435--1456. 2011.

DOI: 10.1002/nme.3110

A new algorithm is developed to improve the accuracy and efficiency of the material point method for problems involving extremely large tensile deformations and rotations. In the proposed procedure, particle domains are convected with the material motion more accurately than in the generalized interpolation material point method. This feature is crucial to eliminate instability in extension, which is a common shortcoming of most particle methods. Also, a novel alternative set of grid basis functions is proposed for efficiently calculating nodal force and consistent mass integrals on the grid. Specifically, by taking advantage of initially parallelogram-shaped particle domains, and treating the deformation gradient as constant over the particle domain, the convected particle domain is a reshaped parallelogram in the deformed configuration. Accordingly, an alternative grid basis function over the particle domain is constructed by a standard 4-node finite element interpolation on the parallelogram. Effectiveness of the proposed modifications is demonstrated using several large deformation solid mechanics problems.
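The geometric idea in the abstract (a constant deformation gradient maps an initial parallelogram domain to a reshaped parallelogram, on which a standard 4-node interpolation is used) can be sketched in 2D as follows. This is a minimal sketch, not the authors' implementation: the helper names are hypothetical, and representing the domain by two half-edge vectors from the particle center is an assumption of this example.

```python
def mat_vec(F, v):
    """Multiply a 2x2 matrix F (nested lists) by a 2-vector v."""
    return (F[0][0] * v[0] + F[0][1] * v[1],
            F[1][0] * v[0] + F[1][1] * v[1])


def convected_corners(center, r1_0, r2_0, F):
    """Corners of the deformed parallelogram, assuming F is constant over the domain.

    r1_0, r2_0 are the initial half-edge vectors (an assumption of this sketch).
    """
    r1 = mat_vec(F, r1_0)
    r2 = mat_vec(F, r2_0)
    cx, cy = center
    return [
        (cx - r1[0] - r2[0], cy - r1[1] - r2[1]),
        (cx + r1[0] - r2[0], cy + r1[1] - r2[1]),
        (cx + r1[0] + r2[0], cy + r1[1] + r2[1]),
        (cx - r1[0] + r2[0], cy - r1[1] + r2[1]),
    ]


def q4_shape_functions(xi, eta):
    """Standard 4-node bilinear shape functions on the parent square [-1, 1]^2."""
    return [
        0.25 * (1 - xi) * (1 - eta),
        0.25 * (1 + xi) * (1 - eta),
        0.25 * (1 + xi) * (1 + eta),
        0.25 * (1 - xi) * (1 + eta),
    ]


# Example: a unit-square domain under simple shear (hypothetical values).
shear = [[1.0, 0.5], [0.0, 1.0]]
corners = convected_corners((0.0, 0.0), (1.0, 0.0), (0.0, 1.0), shear)
```

A field known at the four corners can then be interpolated inside the domain as the sum of corner values weighted by `q4_shape_functions(xi, eta)`.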

A. Sadeghirad, R.M. Brannon, J. Guilkey.

“Enriched Convected Particle Domain Interpolation (CPDI) Method for Analyzing Weak Discontinuities,” In Particles, 2011.

B. Salter, B. Wang, M. Sadinski, S. Ruhnau, V. Sarkar, J. Hinkle, Y. Hitchcock, K. Kokeny, S. Joshi.

“WE-E-BRC-06: Comparison of Two Methods of Contouring Internal Target Volume on Multiple 4DCT Data Sets from the Same Subjects: Maximum Intensity Projection and Combination of 10 Phases,” In Medical Physics, Vol. 38, No. 6, pp. 3820. 2011.

R. Samuel, H.J. Sant, F. Jiao, C.R. Johnson, B.K. Gale.

“Microfluidic laminate-based phantom for diffusion tensor-magnetic resonance imaging,” In Journal of Micromechanics and Microengineering, Vol. 21, pp. 095027--095038. 2011.

DOI: 10.1088/0960-1317/21/9/095027

M. Schott, A.V.P. Grosset, T. Martin, V. Pegoraro, S.T. Smith, C.D. Hansen.

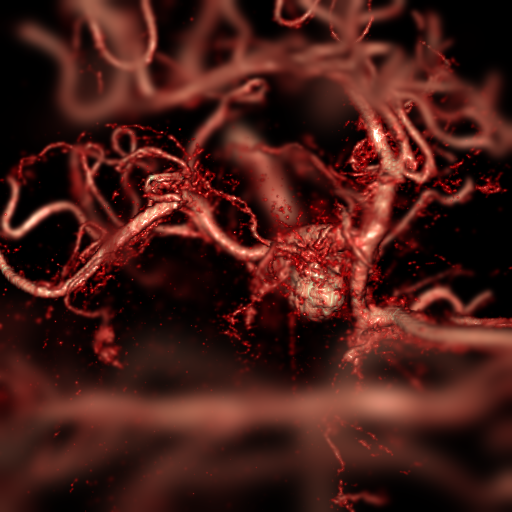

“Depth of Field Effects for Interactive Direct Volume Rendering,” In Computer Graphics Forum, Vol. 30, No. 3, Edited by H. Hauser, H. Pfister, and J.J. van Wijk, Wiley-Blackwell, pp. 941--950. June, 2011.

DOI: 10.1111/j.1467-8659.2011.01943.x

In this paper, a method for interactive direct volume rendering is proposed for computing depth of field effects, which previously were shown to aid observers in depth and size perception of synthetically generated images. The presented technique extends those benefits to volume rendering visualizations of 3D scalar fields from CT/MRI scanners or numerical simulations. It is based on incremental filtering and as such does not depend on any precomputation, thus allowing interactive explorations of volumetric data sets via on-the-fly editing of the shading model parameters or (multi-dimensional) transfer functions.

M. Schulz, J.A. Levine, P.-T. Bremer, T. Gamblin, V. Pascucci.

“Interpreting Performance Data Across Intuitive Domains,” In International Conference on Parallel Processing, Taipei, Taiwan, IEEE, pp. 206--215. 2011.

DOI: 10.1109/ICPP.2011.60

M. Seyedhosseini, A.R.C. Paiva, T. Tasdizen.

“Multi-scale Series Contextual Model for Image Parsing,” SCI Technical Report, No. UUSCI-2011-004, SCI Institute, University of Utah, 2011.

M. Seyedhosseini, A.R.C. Paiva, T. Tasdizen.

“Fast AdaBoost training using weighted novelty selection,” In Proc. IEEE Intl. Joint Conf. on Neural Networks, San Jose, CA, USA, pp. 1245--1250. August, 2011.

In this paper, a new AdaBoost learning framework, called WNS-AdaBoost, is proposed for training discriminative models. The proposed approach significantly speeds up the learning process of adaptive boosting (AdaBoost) by reducing the number of data points. For this purpose, we introduce the weighted novelty selection (WNS) sampling strategy and combine it with AdaBoost to obtain an efficient and fast learning algorithm. WNS selects a representative subset of data, thereby reducing the number of data points to which AdaBoost is applied. In addition, WNS associates a weight with each selected data point such that the weighted subset approximates the distribution of all the training data. This ensures that AdaBoost can be trained efficiently and with minimal loss of accuracy. The performance of WNS-AdaBoost is first demonstrated in a classification task. Then, WNS is employed in a probabilistic boosting-tree (PBT) structure for image segmentation. Results in these two applications show that the training time using WNS-AdaBoost is greatly reduced at the cost of only a few percent in accuracy.
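One plausible reading of the selection step described in the abstract is sketched below: keep a point only if it is far from every point kept so far, and otherwise add its count to the nearest kept point. The distance-threshold rule, the function name, and the parameter `delta` are assumptions of this sketch; the abstract does not spell out the selection criterion.

```python
import math


def weighted_novelty_selection(points, delta):
    """Pick representative points and weights (hypothetical sketch).

    A point is 'novel' if its distance to every selected point exceeds delta.
    Non-novel points increase the weight of their nearest selected point, so
    the weights sum to the size of the original data set.
    """
    selected = []
    weights = []
    for p in points:
        best_index, best_dist = None, math.inf
        for i, q in enumerate(selected):
            d = math.dist(p, q)
            if d < best_dist:
                best_index, best_dist = i, d
        if best_index is None or best_dist > delta:
            selected.append(p)
            weights.append(1)
        else:
            weights[best_index] += 1
    return selected, weights


data = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (0.0, 0.1)]
subset, subset_weights = weighted_novelty_selection(data, delta=1.0)
```

The resulting weights could be used as initial sample weights for a boosting learner trained on the reduced subset.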

M. Seyedhosseini, R. Kumar, E. Jurrus, R. Giuly, M. Ellisman, H. Pfister, T. Tasdizen.

“Detection of Neuron Membranes in Electron Microscopy Images using Multi-scale Context and Radon-like Features,” In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2011, Lecture Notes in Computer Science (LNCS), Vol. 6891, pp. 670--677. 2011.

DOI: 10.1007/978-3-642-23623-5_84

F. Shi, D. Shen, P.-T. Yap, Y. Fan, J.-Z. Cheng, H. An, L.L. Wald, G. Gerig, J.H. Gilmore, W. Lin.

“CENTS: Cortical Enhanced Neonatal Tissue Segmentation,” In Human Brain Mapping HBM, Vol. 32, No. 3, Note: ePub 5 Aug 2010, pp. 382--396. March, 2011.

DOI: 10.1002/hbm.21023

PubMed ID: 20690143

P.J. Smith, M. Hradisky, J. Thornock, J. Spinti, D. Nguyen.

“Large eddy simulation of a turbulent buoyant helium plume,” In Proceedings of Supercomputing 2011 Companion, pp. 135--136. 2011.

DOI: 10.1145/2148600.2148671

At the Institute for Clean and Secure Energy at the University of Utah we are focused on education through interdisciplinary research on high-temperature fuel-utilization processes for energy generation, and the associated health, environmental, policy and performance issues. We also work closely with the government agencies and private industry companies to promote rapid deployment of new technologies through the use of high performance computational tools.

Buoyant flows are encountered in many situations of engineering and environmental importance, including fires, subsea and atmospheric exhaust phenomena, gas releases and geothermal events. Buoyancy-driven flows also play a key role in such physical processes as the spread of smoke or toxic gases from fires. As such, buoyant flow experiments are an important step in developing and validating simulation tools for numerical techniques such as Large Eddy Simulation (LES) for predictive use of complex systems. Large Eddy Simulation is a turbulence model that provides a much greater degree of resolution of physical scales than the more common Reynolds-Averaged Navier-Stokes models. The validation activity requires increasing levels of complexity to sequentially quantify the effects of coupling increased physics, and to explore the effects of scale on the objectives of the simulation.

In this project we are using buoyant flows to examine the validity and accuracy of numerical techniques. By using the non-reacting buoyant helium plume flow we can study the generation of turbulence due to buoyancy, uncoupled from the complexities of combustion chemistry.

We are performing Large Eddy Simulation of a one-meter diameter buoyancy-driven helium plume using two software simulation tools -- ARCHES and Star-CCM+. ARCHES is a finite-volume Large Eddy Simulation code built within the Uintah framework, which is a set of software components and libraries that facilitate the solution of partial differential equations on structured adaptive mesh refinement grids using thousands of processors. Uintah is the product of a ten-year partnership with the Department of Energy's Advanced Simulation and Computing (ASC) program through the University of Utah's Center for Simulation of Accidental Fires and Explosions (C-SAFE). The ARCHES component was initially designed for predicting the heat-flux from large buoyant pool fires with potential hazards immersed in or near a pool fire of transportation fuel. Since then, this component has been extended to solve many industrially relevant problems such as industrial flares, oxy-coal combustion processes, and fuel gasification.

The second simulation tool, Star-CCM+, is a commercial, integrated software environment developed by CD-adapco that can be used to simulate the entire engineering simulation process. The engineering process can be started with CAD preparation, meshing, and model setup, and continued with running simulations, post-processing, and visualizing the results. This allows for faster development and design turn-over time, especially for industry-type applications. Star-CCM+ was built from the ground up to provide scalable parallel performance. Furthermore, it is supported not only on the industry-standard Linux HPC platforms but also on Windows HPC, allowing us to explore computational demands on both Linux and Windows-based HPC clusters.

P.J. Smith, J. Thornock, D. Hinckley, M. Hradisky.

“Large Eddy Simulation Of Industrial Flares,” In Proceedings of Supercomputing 2011 Companion, pp. 137--138. 2011.

DOI: 10.1145/2148600.2148672

At the Institute for Clean and Secure Energy at the University of Utah we are focused on education through interdisciplinary research on high-temperature fuel-utilization processes for energy generation, and the associated health, environmental, policy and performance issues. We also work closely with the government agencies and private industry companies to promote rapid deployment of new technologies through the use of high performance computational tools.

Industrial flare simulation can provide important information on combustion efficiency, pollutant emissions, and operational parameter sensitivities for design or operation that cannot be measured. These simulations provide information that may help design or operate flares so as to reduce or eliminate harmful pollutants and increase combustion efficiency.

Fires and flares have been particularly difficult to simulate with traditional computational fluid dynamics (CFD) simulation tools that are based on Reynolds-Averaged Navier-Stokes (RANS) approaches. The large-scale mixing due to vortical coherent structures in these flames is not readily reduced to steady-state CFD calculations with RANS.

Simulation of combustion using Large Eddy Simulations (LES) has made it possible to more accurately simulate the complex combustion seen in these flares. Resolution of all length and time scales is not possible even for the largest supercomputers. LES is a numerical technique that resolves the large length and time scales while using models for the more homogeneous smaller scales. By using LES, the combustion dynamics capture the puffing created by buoyancy in industrial flare simulation.

All of our simulations were performed using either the University of Utah's ARCHES simulation tool or the commercially available Star-CCM+ software. ARCHES is a finite-volume Large Eddy Simulation code built within the Uintah framework, which is a set of software components and libraries that facilitate the solution of partial differential equations on structured adaptive mesh refinement grids using thousands of processors. Uintah is the product of a ten-year partnership with the Department of Energy's Advanced Simulation and Computing (ASC) program through the University of Utah's Center for Simulation of Accidental Fires and Explosions (C-SAFE). The ARCHES component was initially designed for predicting the heat-flux from large buoyant pool fires with potential hazards immersed in or near a pool fire of transportation fuel. Since then, this component has been extended to solve many industrially relevant problems such as industrial flares, oxy-coal combustion processes, and fuel gasification.

In this report we showcase selected results that help us visualize and understand the physical processes occurring in the simulated systems.

Most of the simulations were completed on the University of Utah's Updraft and Ember high performance computing clusters, which are managed by the Center for High Performance Computing. High performance computational tools are essential in our effort to successfully answer all aspects of our research areas and we promote the use of high performance computational tools beyond the research environment by directly working with our industry partners.

M. Steinberger, M. Waldner, M. Streit, A. Lex, D. Schmalstieg.

“Context-Preserving Visual Links,” In IEEE Transactions on Visualization and Computer Graphics (InfoVis '11), Vol. 17, No. 12, 2011.

Evaluating, comparing, and interpreting related pieces of information are tasks that are commonly performed during visual data analysis and in many kinds of information-intensive work. Synchronized visual highlighting of related elements is a well-known technique used to assist this task. An alternative approach, which is more invasive but also more expressive, is visual linking, in which line connections are rendered between related elements. In this work, we present context-preserving visual links as a new method for generating visual links. The method specifically aims to fulfill the following two goals: first, visual links should minimize the occlusion of important information; second, links should visually stand out from surrounding information by minimizing visual interference. We employ an image-based analysis of visual saliency to determine the important regions in the original representation. A consequence of the image-based approach is that our technique is application-independent and can be employed in a large number of visual data analysis scenarios in which the underlying content cannot or should not be altered. We conducted a controlled experiment that indicates that users can find linked elements in complex visualizations more quickly and with greater subjective satisfaction than in complex visualizations in which plain highlighting is used. Context-preserving visual links were perceived as visually more attractive than traditional visual links that do not account for the context information.

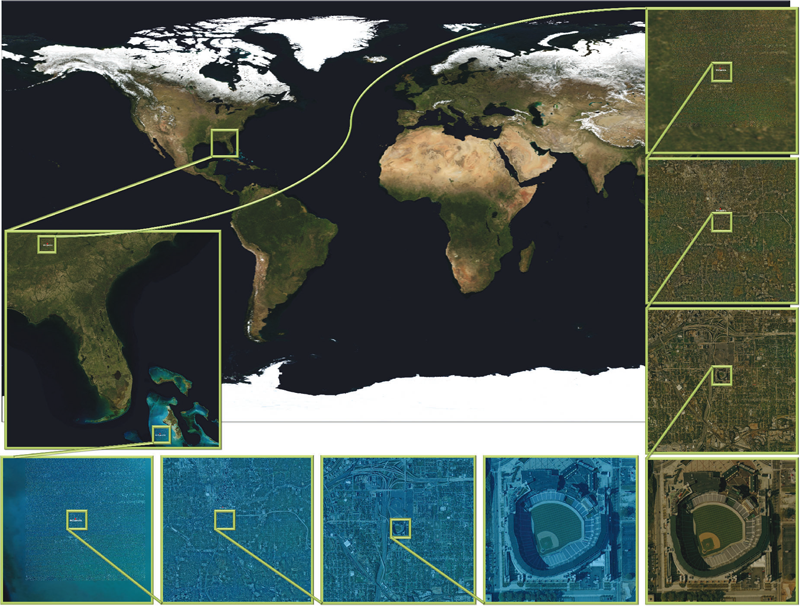

B. Summa, G. Scorzelli, M. Jiang, P.-T. Bremer, V. Pascucci.

“Interactive Editing of Massive Imagery Made Simple: Turning Atlanta into Atlantis,” In ACM Transactions on Graphics, Vol. 30, No. 2, pp. 7:1--7:13. April, 2011.

DOI: 10.1145/1944846.1944847

This article presents a simple framework for progressive processing of high-resolution images with minimal resources. We demonstrate this framework's effectiveness by implementing an adaptive, multi-resolution solver for gradient-based image processing that, for the first time, is capable of handling gigapixel imagery in real time. With our system, artists can use commodity hardware to interactively edit massive imagery and apply complex operators, such as seamless cloning, panorama stitching, and tone mapping.

We introduce a progressive Poisson solver that processes images in a purely coarse-to-fine manner, providing near instantaneous global approximations for interactive display (see Figure 1). We also allow for data-driven adaptive refinements to locally emulate the effects of a global solution. These techniques, combined with a fast, cache-friendly data access mechanism, allow the user to interactively explore and edit massive imagery, with the illusion of having a full solution at hand. In particular, we demonstrate the interactive modification of gigapixel panoramas that previously required extensive offline processing. Even with massive satellite images surpassing a hundred gigapixels in size, we enable repeated interactive editing in a dynamically changing environment. Images at these scales are significantly beyond the purview of previous methods yet are processed interactively using our techniques. Finally, our system provides a robust and scalable out-of-core solver that consistently offers high-quality solutions while maintaining strict control over system resources.

J. Sutherland, T. Saad.

“The Discrete Operator Approach to the Numerical Solution of Partial Differential Equations,” In Proceedings of the 20th AIAA Computational Fluid Dynamics Conference, Honolulu, Hawaii, pp. AIAA-2011-3377. 2011.

DOI: 10.2514/6.2011-3377

J. Sutherland, T. Saad.

“A Novel Computational Framework for Reactive Flow and Multiphysics Simulations,” Note: AIChE Annual Meeting, 2011.

D.J. Swenson, S.E. Geneser, J.G. Stinstra, R.M. Kirby, R.S. MacLeod.

“Cardiac Position Sensitivity Study in the Electrocardiographic Forward Problem Using Stochastic Collocation and Boundary Element Methods,” In Annals of Biomedical Engineering, Vol. 39, No. 12, pp. 2900--2910. 2011.

DOI: 10.1007/s10439-011-0391-5

PubMed ID: 21909818

PubMed Central ID: PMC336204

The electrocardiogram (ECG) is ubiquitously employed as a diagnostic and monitoring tool for patients experiencing cardiac distress and/or disease. It is widely known that changes in heart position resulting from, for example, posture of the patient (sitting, standing, lying) and respiration significantly affect the body-surface potentials; however, few studies have quantitatively and systematically evaluated the effects of heart displacement on the ECG. The goal of this study was to evaluate the impact of positional changes of the heart on the ECG in the specific clinical setting of myocardial ischemia. To carry out the necessary comprehensive sensitivity analysis, we applied a relatively novel and highly efficient statistical approach, the generalized polynomial chaos-stochastic collocation method, to a boundary element formulation of the electrocardiographic forward problem, and we drove these simulations with measured epicardial potentials from whole-heart experiments. Results of the analysis identified regions on the body surface where the potentials were especially sensitive to realistic heart motion. The standard deviation (STD) of ST-segment voltage changes caused by the apex of a normal heart swinging forward and backward or side-to-side was approximately 0.2 mV. Variations were even larger, 0.3 mV, for a heart exhibiting elevated ischemic potentials. These variations could be large enough to mask or to mimic signs of ischemia in the ECG. Our results suggest possible modifications to ECG protocols that could reduce the diagnostic error related to postural changes in patients possibly suffering from myocardial ischemia.
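The collocation idea behind such a sensitivity analysis can be sketched in one dimension: evaluate a deterministic model at a few quadrature nodes of the uncertain input and combine the outputs into a mean and standard deviation. This is a minimal sketch under stated assumptions, not the study's method: the input is taken as uniformly distributed, a 3-point Gauss-Legendre rule is used, and `toy_model` is a hypothetical stand-in for the boundary element forward solve.

```python
import math

# 3-point Gauss-Legendre nodes and weights on [-1, 1].
NODES = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
WEIGHTS = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)


def collocation_mean_std(model, low, high):
    """Mean and standard deviation of model(x) for x uniform on [low, high]."""
    half = 0.5 * (high - low)
    mid = 0.5 * (high + low)
    mean = 0.0
    second_moment = 0.0
    for node, weight in zip(NODES, WEIGHTS):
        y = model(mid + half * node)   # one deterministic model run per node
        p = 0.5 * weight               # quadrature weight times uniform density
        mean += p * y
        second_moment += p * y * y
    variance = max(second_moment - mean * mean, 0.0)
    return mean, math.sqrt(variance)


def toy_model(shift):
    """Hypothetical stand-in for a forward simulation (not from the paper)."""
    return shift ** 2


mean, std = collocation_mean_std(toy_model, -1.0, 1.0)
```

Only three model runs are needed here, which is the appeal of collocation when each run is an expensive simulation.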

M. Szegedi, J. Hinkle, S. Joshi, V. Sarkar, P. Rassiah-Szegedi, B. Wang, B. Salter.

“WE-E-BRC-05: Voxel Based Four Dimensional Tissue Deformation Reconstruction (4DTDR) Validation Using a Real Tissue Phantom,” In Medical Physics, Vol. 38, No. 6, pp. 3819. 2011.

G. Tamm, A. Schiewe, J. Krüger.

“ZAPP – A management framework for distributed visualization systems,” In Proceedings of CGVCVIP 2011: IADIS International Conference on Computer Graphics, Visualization, Computer Vision And Image Processing, pp. (accepted). 2011.