SCI Publications

2013

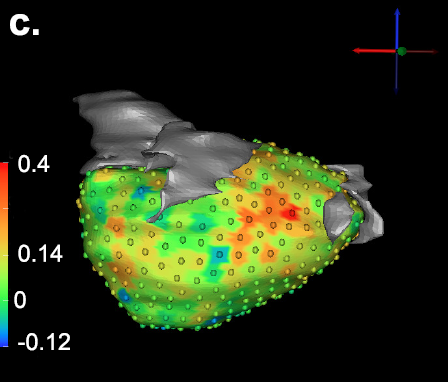

G. Gardner, A. Morris, K. Higuchi, R.S. MacLeod, J. Cates.

“A Point-Correspondence Approach to Describing the Distribution of Image Features on Anatomical Surfaces, with Application to Atrial Fibrillation,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 226--229. 2013.

DOI: 10.1109/ISBI.2013.6556453

This paper describes a framework for summarizing and comparing the distributions of image features on anatomical shape surfaces in populations. The approach uses a point-based correspondence model to establish a mapping among surface positions and may be useful for anatomy that exhibits a relatively high degree of shape variability, such as cardiac anatomy. The approach is motivated by the MRI-based study of diseased, or fibrotic, tissue in the left atrium of atrial fibrillation (AF) patients, which has been difficult to measure quantitatively using more established image and surface registration techniques. The proposed method is to establish a set of point correspondences across a population of shape surfaces that provides a mapping from any surface to a common coordinate frame, where local features like fibrosis can be directly compared. To establish correspondence, we use a previously described statistical optimization of particle-based shape representations. For our atrial fibrillation population, the proposed method provides evidence that more intense and widely distributed fibrosis patterns exist in patients who do not respond well to radiofrequency ablation therapy.

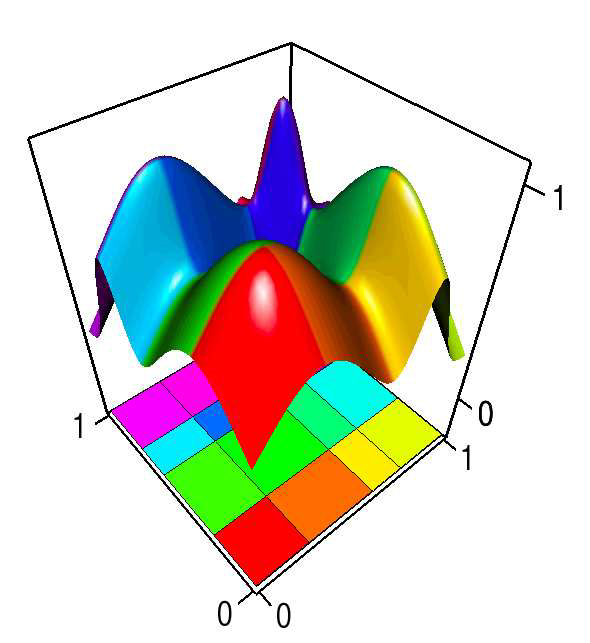

S. Gerber, O. Rübel, P.-T. Bremer, V. Pascucci, R.T. Whitaker.

“Morse-Smale Regression,” In Journal of Computational and Graphical Statistics, Vol. 22, No. 1, pp. 193--214. 2013.

DOI: 10.1080/10618600.2012.657132

This paper introduces a novel partition-based regression approach that incorporates topological information. Partition-based regression approaches typically introduce a quality-of-fit-driven decomposition of the domain. The emphasis in this work is on a topologically meaningful segmentation. Thus, the proposed regression approach is based on a segmentation induced by a discrete approximation of the Morse-Smale complex. This yields a segmentation with partitions corresponding to regions of the function with a single minimum and maximum that are often well approximated by a linear model. This approach yields regression models that are amenable to interpretation and have good predictive capacity. Typically, regression estimates are quantified by their geometrical accuracy. For the proposed regression, an important aspect is the quality of the segmentation itself. Thus, this paper introduces a new criterion that measures the topological accuracy of the estimate. The topological accuracy provides a complementary measure to the classical geometrical error measures and is very sensitive to over-fitting. The Morse-Smale regression is compared to state-of-the-art approaches in terms of geometry and topology and yields comparable or improved fits in many cases. Finally, a detailed study on climate-simulation data demonstrates the application of the Morse-Smale regression. Supplementary materials are available online and contain an implementation of the proposed approach in the R package msr, an analysis and simulations on the stability of the Morse-Smale complex approximation, and additional tables for the climate-simulation study.

J.M. Gililland, L.A. Anderson, H.B. Henninger, E.N. Kubiak, C.L. Peters.

“Biomechanical analysis of acetabular revision constructs: is pelvic discontinuity best treated with bicolumnar or traditional unicolumnar fixation?,” In Journal of Arthroplasty, Vol. 28, No. 1, pp. 178--186. 2013.

DOI: 10.1016/j.arth.2012.04.031

Pelvic discontinuity in revision total hip arthroplasty presents problems with component fixation and union. A construct was proposed based on bicolumnar fixation for transverse acetabular fractures. Each of 3 reconstructions was performed on 6 composite hemipelvises: (1) a cup-cage construct, (2) a posterior column plate construct, and (3) a bicolumnar construct (no. 2 plus an antegrade 4.5-mm anterior column screw). Bone-cup interface motions were measured while cyclical loads were applied in both walking and descending stair simulations. The bicolumnar construct provided the most stable construct. Descending stair mode yielded more significant differences between constructs. The bicolumnar construct provided improved component stability. Placing an antegrade anterior column screw through a posterior approach is a novel method of providing anterior column support in this setting.

S. Gratzl, A. Lex, N. Gehlenborg, H. Pfister, M. Streit.

“LineUp: Visual Analysis of Multi-Attribute Rankings,” In IEEE Transactions on Visualization and Computer Graphics (InfoVis '13), Vol. 19, No. 12, pp. 2277--2286. 2013.

ISSN: 1077-2626

DOI: 10.1109/TVCG.2013.173

Rankings are a popular and universal approach to structure otherwise unorganized collections of items by computing a rank for each item based on the value of one or more of its attributes. This allows us, for example, to prioritize tasks or to evaluate the performance of products relative to each other. While the visualization of a ranking itself is straightforward, its interpretation is not because the rank of an item represents only a summary of a potentially complicated relationship between its attributes and those of the other items. It is also common that alternative rankings exist that need to be compared and analyzed to gain insight into how multiple heterogeneous attributes affect the rankings. Advanced visual exploration tools are needed to make this process efficient.

In this paper we present a comprehensive analysis of requirements for the visualization of multi-attribute rankings. Based on these considerations, we propose a novel and scalable visualization technique - LineUp - that uses bar charts. This interactive technique supports the ranking of items based on multiple heterogeneous attributes with different scales and semantics. It enables users to interactively combine attributes and flexibly refine parameters to explore the effect of changes in the attribute combination. This process can be employed to derive actionable insights into which attributes of an item need to be modified in order for its rank to change.

Additionally, through integration of slope graphs, LineUp can also be used to compare multiple alternative rankings on the same set of items, for example, over time or across different attribute combinations. We evaluate the effectiveness of the proposed multi-attribute visualization technique in a qualitative study. The study shows that users are able to successfully solve complex ranking tasks in a short period of time.
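The attribute-combination idea in the abstract above can be illustrated with a small sketch. It assumes a weighted sum of min-max normalized attributes, which is one common way to combine heterogeneous scales; the abstract does not specify LineUp's actual combination rules, and the names `normalize`, `rank_items`, and the sample attributes are hypothetical.

```python
def normalize(values):
    """Min-max normalize a list of numbers to [0, 1] (assumed scaling)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def rank_items(items, weights):
    """Rank item names by a weighted sum of normalized attributes.

    items:   dict mapping item name -> dict of attribute -> numeric value
    weights: dict mapping attribute -> non-negative weight
    Returns item names ordered from best (highest score) to worst.
    """
    names = list(items)
    total = sum(weights.values())
    scores = dict.fromkeys(names, 0.0)
    for attr, weight in weights.items():
        column = normalize([items[name][attr] for name in names])
        for name, value in zip(names, column):
            scores[name] += (weight / total) * value
    return sorted(names, key=lambda name: scores[name], reverse=True)


# Hypothetical data: changing the weights changes the ranking.
items = {
    "a": {"quality": 10, "value": 0},
    "b": {"quality": 0, "value": 10},
    "c": {"quality": 5, "value": 5},
}
by_quality = rank_items(items, {"quality": 0.8, "value": 0.2})
by_value = rank_items(items, {"quality": 0.2, "value": 0.8})
```

Comparing `by_quality` with `by_value` is the kind of alternative-ranking comparison that the abstract says LineUp supports visually through slope graphs.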

A. Grosset, M. Schott, G.-P. Bonneau, C.D. Hansen.

“Evaluation of Depth of Field for Depth Perception in DVR,” In Proceedings of the 2013 IEEE Pacific Visualization Symposium (PacificVis), pp. 81--88. 2013.

In this paper we present a user study on the use of Depth of Field for depth perception in Direct Volume Rendering. Direct Volume Rendering with Phong shading and perspective projection is used as the baseline. Depth of Field is then added to see its impact on the correct perception of ordinal depth. Accuracy and response time are used as the metrics to evaluate the usefulness of Depth of Field. The onsite user study has two parts: static and dynamic. Eye tracking is used to monitor the gaze of the subjects. From our results we see that though Depth of Field does not act as a proper depth cue in all conditions, it can be used to reinforce the perception of which feature is in front of the other. The best results (high accuracy and fast response time) for correct perception of ordinal depth occur when the front feature (out of the two features users were to choose from) is in focus and perspective projection is used.

J. Grüninger, J. Krüger.

“The impact of display bezels on stereoscopic vision for tiled displays,” In Proceedings of the 19th ACM Symposium on Virtual Reality Software and Technology (VRST), pp. 241--250. 2013.

DOI: 10.1145/2503713.2503717

In recent years high-resolution tiled display systems have gained significant attention in scientific and information visualization of large-scale data. Modern tiled display setups are based on either video projectors or LCD screens. While LCD screens are the preferred solution for monoscopic setups, stereoscopic displays almost exclusively consist of some kind of video projection. This is because projections can significantly reduce gaps between tiles, while LCD screens require a bezel around the panel. Projection setups, however, suffer from a number of maintenance issues that are avoided by LCD screens. For example, projector alignment is a very time-consuming task that needs to be repeated at intervals, and different aging states of lamps and filters cause color inconsistencies. The growing availability of inexpensive stereoscopic LCDs for television and gaming allows one to build high-resolution stereoscopic tiled display walls with the same dimensions and resolution as projection systems at a fraction of the cost, while avoiding the aforementioned issues. The only drawback is the increased gap size between tiles.

In this paper, we investigate the effects of bezels on stereo perception with three surveys and show that smaller LCD bezels and larger displays significantly increase stereo perception on display wall systems. We also show that the bezel color is not very important and that bezels can negatively affect the adaptation times to the stereoscopic effect but improve task completion times. Finally, we present guidelines for the setup of tiled stereoscopic display wall systems.

L.K. Ha, J. King, Z. Fu, R.M. Kirby.

“A High-Performance Multi-Element Processing Framework on GPUs,” SCI Technical Report, No. UUSCI-2013-005, SCI Institute, University of Utah, 2013.

Many computational engineering problems, ranging from finite element methods to image processing, involve batch processing of a large number of data items. While multi-element processing has the potential to harness the computational power of parallel systems, current techniques often concentrate on maximizing elemental performance. Frameworks that take this greedy optimization approach often fail to extract the maximum processing power of the system for multi-element processing problems. By utilizing the knowledge that the same operation will be performed on a large number of items, we can organize the computation to maximize the computational throughput available in parallel streaming hardware. In this paper, we analyze weaknesses of existing methods and propose efficient parallel programming patterns, implemented in a high-performance multi-element processing framework, to harness the processing power of GPUs. Our approach is capable of leveling out the performance curve even in the range of small element sizes.

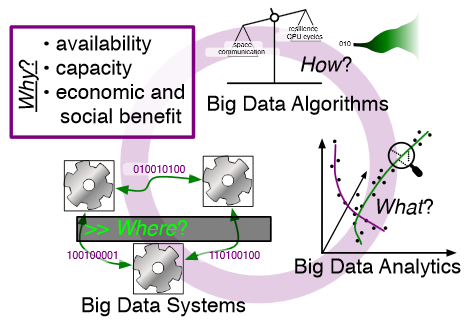

M. Hall, R.M. Kirby, F. Li, M.D. Meyer, V. Pascucci, J.M. Phillips, R. Ricci, J. Van der Merwe, S. Venkatasubramanian.

“Rethinking Abstractions for Big Data: Why, Where, How, and What,” In Cornell University Library, 2013.

Big data refers to large and complex data sets that, under existing approaches, exceed the capacity and capability of current compute platforms, systems software, analytical tools and human understanding [7]. Numerous lessons on the scalability of big data can already be found in asymptotic analysis of algorithms and from the high-performance computing (HPC) and applications communities. However, scale is only one aspect of current big data trends; fundamentally, current and emerging problems in big data are a result of unprecedented complexity: in the structure of the data and how to analyze it, in dealing with unreliability and redundancy, in addressing the human factors of comprehending complex data sets, in formulating meaningful analyses, and in managing the dense, power-hungry data centers that house big data.

The computer science solution to complexity is finding the right abstractions, those that hide as much triviality as possible while revealing the essence of the problem that is being addressed. The "big data challenge" has disrupted computer science by stressing to the very limits the familiar abstractions which define the relevant subfields in data analysis, data management and the underlying parallel systems. Efficient processing of big data has shifted systems towards increasingly heterogeneous and specialized units, with resilience and energy becoming important considerations. The design and analysis of algorithms must now incorporate emerging costs in communicating data driven by IO costs, distributed data, and the growing energy cost of these operations. Data analysis representations as structural patterns and visualizations surpass human visual bandwidth, structures studied at small scale are rare at large scale, and large-scale high-dimensional phenomena cannot be reproduced at small scale.

As a result, not enough of these challenges are revealed by isolating abstractions in a traditional software stack or standard algorithmic and analytical techniques, and attempts to address complexity either oversimplify or require low-level management of details. The authors believe that the abstractions for big data need to be rethought, and this reorganization needs to evolve and be sustained through continued cross-disciplinary collaboration.

In what follows, we first consider the question of why big data and why now. We then describe the where (big data systems), the how (big data algorithms), and the what (big data analytics) challenges that we believe are central and must be addressed as the research community develops these new abstractions. We equate the biggest challenges that span these areas of big data with big mythological creatures, namely cyclops, that should be conquered.

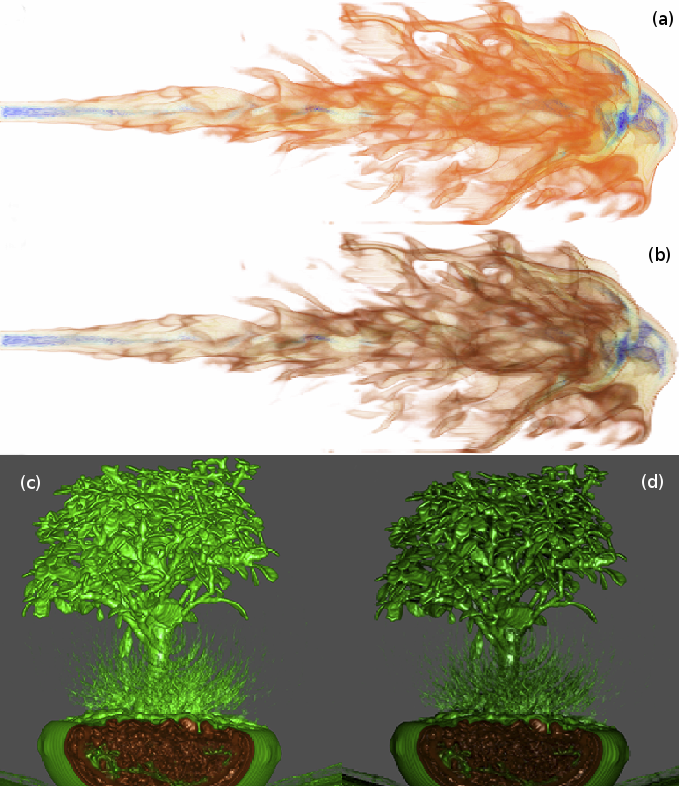

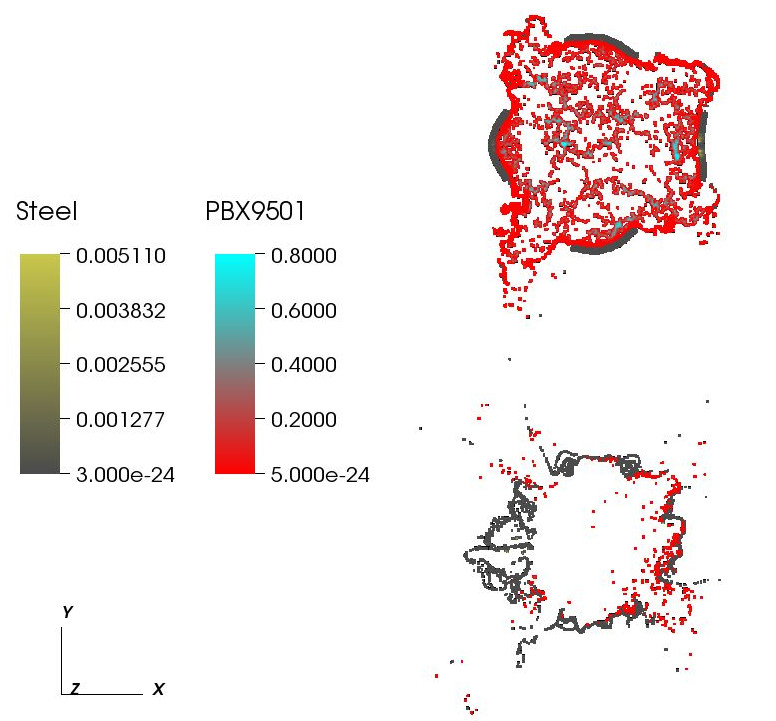

M. Hall, J.C. Beckvermit, C.A. Wight, T. Harman, M. Berzins.

“The influence of an applied heat flux on the violence of reaction of an explosive device,” In Proceedings of the Conference on Extreme Science and Engineering Discovery Environment: Gateway to Discovery, San Diego, California, XSEDE '13, pp. 11:1--11:8. 2013.

ISBN: 978-1-4503-2170-9

DOI: 10.1145/2484762.2484786

It is well known that the violence of slow cook-off explosions can greatly exceed the comparatively mild case burst events typically observed for rapid heating. However, there have been few studies that examine the reaction violence as a function of applied heat flux and that explore the dependence on heating geometry and device size. Here we report progress on a study using the Uintah Computational Framework, a high-performance computer model capable of modeling deflagration, material damage, deflagration-to-detonation transition and detonation for PBX9501 and similar explosives. Our results suggest the existence of a sharp threshold for increased reaction violence with decreasing heat flux. The critical heat flux was seen to increase with increasing device size and decrease with the heating of multiple surfaces, suggesting that the temperature gradient in the heated energetic material plays an important role in the violence of reactions.

Keywords: DDT, cook-off, deflagration, detonation, violence of reaction, c-safe

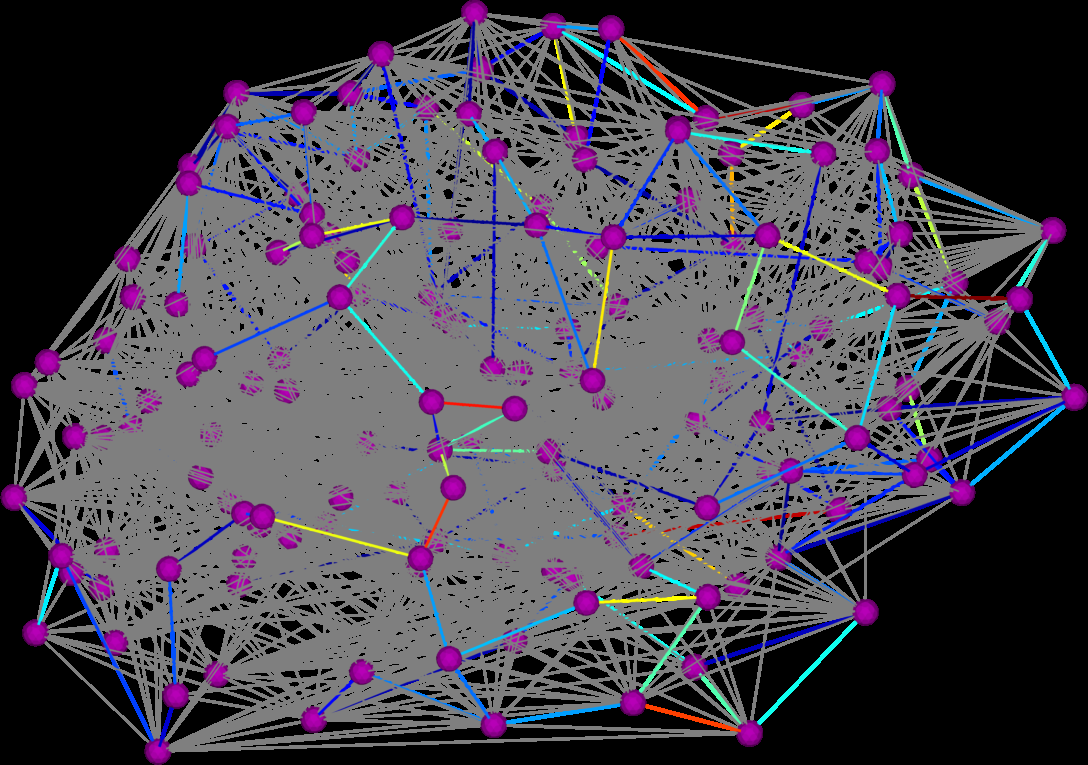

D.K. Hammond, Y. Gur, C.R. Johnson.

“Graph Diffusion Distance: A Difference Measure for Weighted Graphs Based on the Graph Laplacian Exponential Kernel,” In Proceedings of the IEEE global conference on information and signal processing (GlobalSIP'13), Austin, Texas, pp. 419--422. 2013.

DOI: 10.1109/GlobalSIP.2013.6736904

We propose a novel difference metric, called the graph diffusion distance (GDD), for quantifying the difference between two weighted graphs with the same number of vertices. Our approach is based on measuring the average similarity of heat diffusion on each graph. We compute the graph Laplacian exponential kernel matrices, corresponding to repeatedly solving the heat diffusion problem with initial conditions localized to single vertices. The GDD is then given by the Frobenius norm of the difference of the kernels, at the diffusion time yielding the maximum difference. We study properties of the proposed distance on both synthetic examples, and on real-data graphs representing human anatomical brain connectivity.
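The definition in the abstract above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes symmetric adjacency matrices, the combinatorial Laplacian L = D - A, and a simple grid search over diffusion times (the grid in `times` is an arbitrary choice). The function names are hypothetical.

```python
import numpy as np


def graph_laplacian(adjacency):
    """Combinatorial Laplacian L = D - A of a symmetric weighted graph."""
    adjacency = np.asarray(adjacency, dtype=float)
    return np.diag(adjacency.sum(axis=1)) - adjacency


def heat_kernel(eigvals, eigvecs, t):
    """Laplacian exponential kernel exp(-t L) from an eigendecomposition of L."""
    return (eigvecs * np.exp(-t * eigvals)) @ eigvecs.T


def graph_diffusion_distance(adj_a, adj_b, times=None):
    """Return (distance, time): the largest Frobenius norm of the kernel
    difference over the sampled diffusion times, and the time attaining it."""
    if times is None:
        times = np.logspace(-2, 2, 200)  # assumed search grid
    # Decompose each Laplacian once; eigh applies because L is symmetric.
    eig_a = np.linalg.eigh(graph_laplacian(adj_a))
    eig_b = np.linalg.eigh(graph_laplacian(adj_b))
    diffs = [
        np.linalg.norm(heat_kernel(*eig_a, t) - heat_kernel(*eig_b, t), ord="fro")
        for t in times
    ]
    best = int(np.argmax(diffs))
    return float(diffs[best]), float(times[best])


# Two graphs on the same three vertices: a path and a triangle.
path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
triangle = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
distance, best_time = graph_diffusion_distance(path, triangle)
```

A grid search only approximates the maximizing diffusion time; a one-dimensional optimizer could refine it if more precision were needed.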

X. Hao, P.T. Fletcher.

“Joint Fractional Segmentation and Multi-Tensor Estimation in Diffusion MRI,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Lecture Notes in Computer Science (LNCS), pp. (accepted). 2013.

In this paper we present a novel Bayesian approach for fractional segmentation of white matter tracts and simultaneous estimation of a multi-tensor diffusion model. Our model consists of several white matter tracts, each with a corresponding weight and tensor compartment in each voxel. By incorporating a prior that assumes the tensor fields inside each tract are spatially correlated, we are able to reliably estimate multiple tensor compartments in fiber crossing regions, even with low angular diffusion-weighted imaging (DWI). Our model distinguishes the diffusion compartment associated with each tract, which reduces the effects of partial voluming and achieves more reliable statistics of diffusion measurements. We test our method on synthetic data with known ground truth and show that we can recover the correct volume fractions and tensor compartments. We also demonstrate that the proposed method results in improved segmentation and diffusion measurement statistics on real data in the presence of crossing tracts and partial voluming.

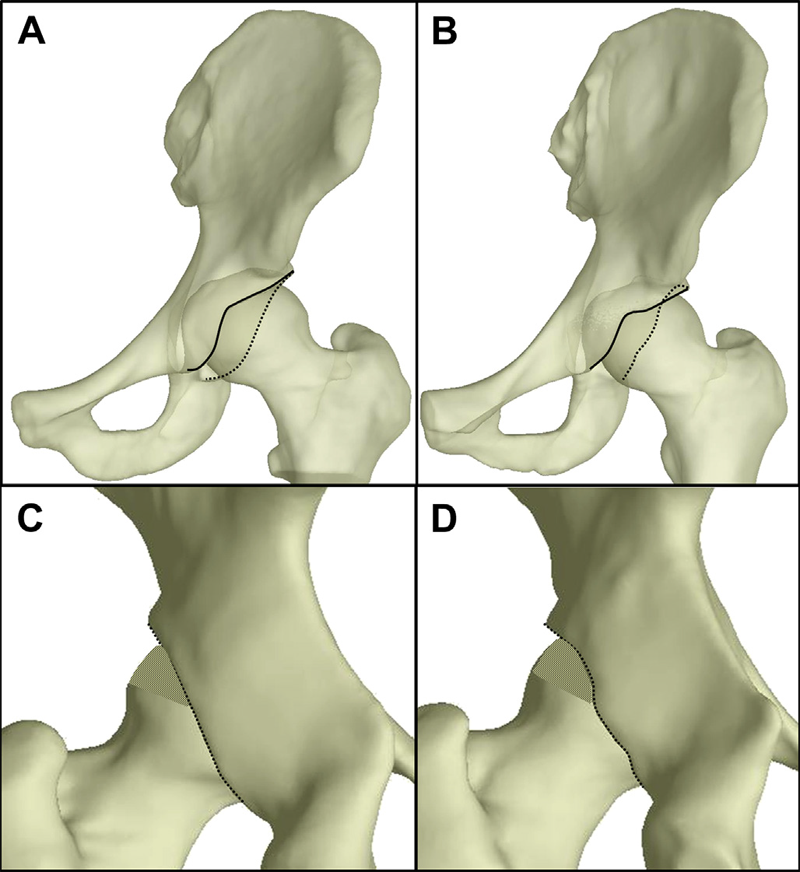

M.D. Harris, S.P. Reese, C.L. Peters, J.A. Weiss, A.E. Anderson.

“Three-dimensional Quantification of Femoral Head Shape in Controls and Patients with Cam-type Femoroacetabular Impingement,” In Annals of Biomedical Engineering, Vol. 41, No. 6, pp. 1162--1171. 2013.

DOI: 10.1007/s10439-013-0762-1

An objective measurement technique to quantify 3D femoral head shape was developed and applied to normal subjects and patients with cam-type femoroacetabular impingement (FAI). 3D reconstructions were made from high-resolution CT images of 15 cam and 15 control femurs. Femoral heads were fit to ideal geometries consisting of rotational conchoids and spheres. Geometric similarity between native femoral heads and ideal shapes was quantified. The maximum distance native femoral heads protruded above ideal shapes and the protrusion area were measured. Conchoids provided a significantly better fit to native femoral head geometry than spheres for both groups. Cam-type FAI femurs had significantly greater maximum deviations (4.99 ± 0.39 mm and 4.08 ± 0.37 mm) than controls (2.41 ± 0.31 mm and 1.75 ± 0.30 mm) when fit to spheres or conchoids, respectively. The area of native femoral heads protruding above ideal shapes was significantly larger in controls when a lower threshold of 0.1 mm (for spheres) and 0.01 mm (for conchoids) was used to define a protrusion. The 3D measurement technique described herein could supplement measurements of radiographs in the diagnosis of cam-type FAI. Deviations up to 2.5 mm from ideal shapes can be expected in normal femurs while deviations of 4–5 mm are characteristic of cam-type FAI.

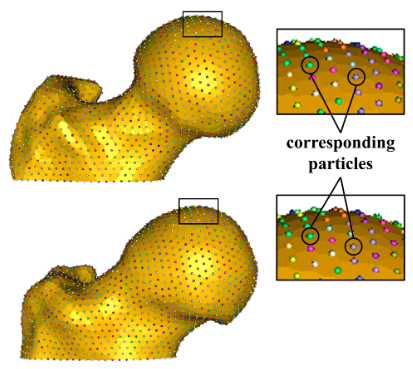

M.D. Harris, M. Datar, R.T. Whitaker, E.R. Jurrus, C.L. Peters, A.E. Anderson.

“Statistical Shape Modeling of Cam Femoroacetabular Impingement,” In Journal of Orthopaedic Research, Vol. 31, No. 10, pp. 1620--1626. 2013.

DOI: 10.1002/jor.22389

Statistical shape modeling (SSM) was used to quantify 3D variation and morphologic differences between femurs with and without cam femoroacetabular impingement (FAI). 3D surfaces were generated from CT scans of femurs from 41 controls and 30 cam FAI patients. SSM correspondence particles were optimally positioned on each surface using a gradient descent energy function. Mean shapes for groups were defined. Morphological differences between group mean shapes and between the control mean and individual patients were calculated. Principal component analysis described anatomical variation. Among all femurs, the first six modes (or principal components) captured significant variations, which comprised 84% of cumulative variation. The first two modes, which described trochanteric height and femoral neck width, were significantly different between groups. The mean cam femur shape protruded above the control mean by a maximum of 3.3 mm with sustained protrusions of 2.5–3.0 mm along the anterolateral head-neck junction/distal anterior neck. SSM described variations in femoral morphology that corresponded well with areas prone to damage. Shape variation described by the first two modes may facilitate objective characterization of cam FAI deformities; variation beyond may be inherent population variance. SSM could characterize disease severity and guide surgical resection of bone.
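The principal component step mentioned in the abstract above, including the notion of the cumulative variation captured by the leading modes, can be illustrated as follows. This is a generic PCA sketch via the singular value decomposition, assuming each shape is already a flattened vector of corresponding particle coordinates; it does not reproduce the study's correspondence optimization or its statistics, and the function names and toy data are hypothetical.

```python
import numpy as np


def shape_modes(shapes):
    """PCA of shape vectors.

    shapes: array of shape (n_subjects, n_coordinates), one flattened set of
            correspondence particle coordinates per subject.
    Returns (modes, explained, cumulative): principal directions as rows,
    the fraction of variance per mode, and its running sum.
    """
    shapes = np.asarray(shapes, dtype=float)
    centered = shapes - shapes.mean(axis=0)  # deviations from the mean shape
    _, singular_values, modes = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values ** 2 / (shapes.shape[0] - 1)
    explained = variances / variances.sum()
    return modes, explained, np.cumsum(explained)


def modes_needed(cumulative, fraction):
    """Smallest number of leading modes whose cumulative variance >= fraction."""
    count = int(np.searchsorted(cumulative, fraction)) + 1
    return min(count, len(cumulative))


# Toy data: four "shapes" with three coordinates each.
toy_shapes = [[2, 0, 0], [-2, 0, 0], [0, 1, 0], [0, -1, 0]]
modes, explained, cumulative = shape_modes(toy_shapes)
n_modes = modes_needed(cumulative, 0.84)
```

In the toy data the first mode carries 80% of the variance, so two modes are needed to reach an 84% threshold.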

M.D. Harris.

“The geometry and biomechanics of normal and pathomorphologic human hips,” Note: Ph.D. Thesis, Department of Bioengineering, University of Utah, 2013.

C.R. Henak, A.E. Anderson, J.A. Weiss.

“Subject-specific analysis of joint contact mechanics: application to the study of osteoarthritis and surgical planning,” In Journal of Biomechanical Engineering, Vol. 135, No. 2, 2013.

DOI: 10.1115/1.4023386

PubMed ID: 23445048

Advances in computational mechanics, constitutive modeling, and techniques for subject-specific modeling have opened the door to patient-specific simulation of the relationships between joint mechanics and osteoarthritis (OA), as well as patient-specific preoperative planning. This article reviews the application of computational biomechanics to the simulation of joint contact mechanics as relevant to the study of OA. This review begins with background regarding OA and the mechanical causes of OA in the context of simulations of joint mechanics. The broad range of technical considerations in creating validated subject-specific whole joint models is discussed. The types of computational models available for the study of joint mechanics are reviewed. The types of constitutive models that are available for articular cartilage are reviewed, with special attention to choosing an appropriate constitutive model for the application at hand. Issues related to model generation are discussed, including acquisition of model geometry from volumetric image data and specific considerations for acquisition of computed tomography and magnetic resonance imaging data. Approaches to model validation are reviewed. The areas of parametric analysis, factorial design, and probabilistic analysis are reviewed in the context of simulations of joint contact mechanics. Following the review of technical considerations, the article details insights that have been obtained from computational models of joint mechanics for normal joints; patient populations; the study of specific aspects of joint mechanics relevant to OA, such as congruency and instability; and preoperative planning. Finally, future directions for research and application are summarized.

H.B. Henninger, C.J. Underwood, S.J. Romney, G.L. Davis, J.A. Weiss.

“Effect of Elastin Digestion on the Quasi-Static Tensile Response of Medial Collateral Ligament,” In Journal of Orthopaedic Research, pp. (published online). 2013.

DOI: 10.1002/jor.22352

Elastin is a structural protein that provides resilience to biological tissues. We examined the contributions of elastin to the quasi-static tensile response of porcine medial collateral ligament through targeted disruption of the elastin network with pancreatic elastase. Elastase concentration and treatment time were varied to determine a dose response. Whereas elastin content decreased with increasing elastase concentration and treatment time, the change in peak stress after cyclic loading reached a plateau above 1 U/ml elastase and 6 h treatment. For specimens treated with 2 U/ml elastase for 6 h, elastin content decreased approximately 35%. Mean peak tissue strain after cyclic loading (4.8%, p ≥ 0.300), modulus (275 MPa, p ≥ 0.114) and hysteresis (20%, p ≥ 0.553) were unaffected by elastase digestion, but stress decreased significantly after treatment (up to 2 MPa, p ≤ 0.049). Elastin degradation had no effect on failure properties, but tissue lengthened under the same pre-stress. Stiffness in the linear region was unaffected by elastase digestion, suggesting that enzyme treatment did not disrupt collagen. These results demonstrate that elastin primarily functions in the toe region of the stress–strain curve, yet contributes load support in the linear region. The increase in length after elastase digestion suggests that elastin may pre-stress and stabilize collagen crimp in ligaments.

C.R. Henak, E. Carruth, A.E. Anderson, M.D. Harris, B.J. Ellis, C.L. Peters, J.A. Weiss.

“Finite element predictions of cartilage contact mechanics in hips with retroverted acetabula,” In Osteoarthritis and Cartilage, Vol. 21, pp. 1522--1529. 2013.

DOI: 10.1016/j.joca.2013.06.008

Background

A contributory factor to hip osteoarthritis (OA) is abnormal cartilage mechanics. Acetabular retroversion, a version deformity of the acetabulum, has been postulated to cause OA via decreased posterior contact area and increased posterior contact stress. Although cartilage mechanics cannot be measured directly in vivo to evaluate the causes of OA, they can be predicted using finite element (FE) modeling.

Objective

The objective of this study was to compare cartilage contact mechanics between hips with normal and retroverted acetabula using subject-specific FE modeling.

Methods

Twenty subjects were recruited and imaged: 10 with normal acetabula and 10 with retroverted acetabula. FE models were constructed using a validated protocol. Walking, stair ascent, stair descent and rising from a chair were simulated. Acetabular cartilage contact stress and contact area were compared between groups.

Results

Retroverted acetabula had superomedial cartilage contact patterns, while normal acetabula had widely distributed cartilage contact patterns. In the posterolateral acetabulum, average contact stress and contact area during walking and stair descent were 2.6–7.6 times larger in normal than retroverted acetabula (P ≤ 0.017). Conversely, in the superomedial acetabulum, peak contact stress during walking was 1.2–1.6 times larger in retroverted than normal acetabula (P ≤ 0.044). Further differences varied by region and activity.

Conclusions

This study demonstrated superomedial contact patterns in retroverted acetabula vs widely distributed contact patterns in normal acetabula. Smaller posterolateral contact stress in retroverted acetabula than in normal acetabula suggests that increased posterior contact stress alone may not be the link between retroversion and OA.

C.R. Henak, A.K. Kapron, B.J. Ellis, S.A. Maas, A.E. Anderson, J.A. Weiss.

“Specimen-specific predictions of contact stress under physiological loading in the human hip: validation and sensitivity studies,” In Biomechanics and Modeling in Mechanobiology, pp. 1--14. 2013.

DOI: 10.1007/s10237-013-0504-1

Hip osteoarthritis may be initiated and advanced by abnormal cartilage contact mechanics, and finite element (FE) modeling provides an approach with the potential to allow the study of this process. Previous FE models of the human hip have been limited by single specimen validation and the use of quasi-linear or linear elastic constitutive models of articular cartilage. The effects of the latter assumptions on model predictions are unknown, partially because data for the instantaneous behavior of healthy human hip cartilage are unavailable. The aims of this study were to develop and validate a series of specimen-specific FE models, to characterize the regional instantaneous response of healthy human hip cartilage in compression, and to assess the effects of material nonlinearity, inhomogeneity and specimen-specific material coefficients on FE predictions of cartilage contact stress and contact area. Five cadaveric specimens underwent experimental loading, cartilage material characterization and specimen-specific FE modeling. Cartilage in the FE models was represented by average neo-Hookean, average Veronda Westmann and specimen- and region-specific Veronda Westmann hyperelastic constitutive models. Experimental measurements and FE predictions compared well for all three cartilage representations, which was reflected in average RMS errors in contact stress of less than 25%. The instantaneous material behavior of healthy human hip cartilage varied spatially, with stiffer acetabular cartilage than femoral cartilage and stiffer cartilage in lateral regions than in medial regions. The Veronda Westmann constitutive model with average material coefficients accurately predicted peak contact stress, average contact stress, contact area and contact patterns. The use of subject- and region-specific material coefficients did not increase the accuracy of FE model predictions. The neo-Hookean constitutive model underpredicted peak contact stress in areas of high stress.

The results of this study support the use of average cartilage material coefficients in predictions of cartilage contact stress and contact area in the normal hip. The regional characterization of cartilage material behavior provides the necessary inputs for future computational studies, to investigate other mechanical parameters that may be correlated with OA and cartilage damage in the human hip. In the future, the results of this study can be applied to subject-specific models to better understand how abnormal hip contact stress and contact area contribute to OA.

C.R. Henak.

“Cartilage and labrum mechanics in the normal and pathomorphologic human hip,” Note: Ph.D. Thesis, Department of Bioengineering, University of Utah, 2013.

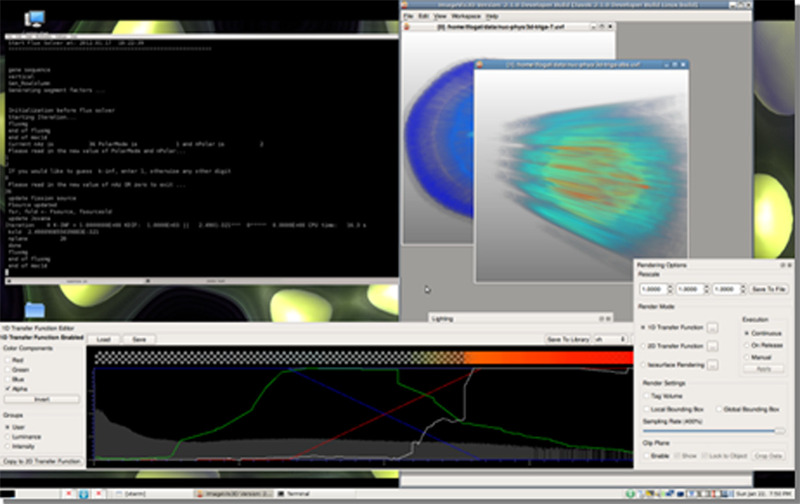

H. Hernandez, J. Knezevic, T. Fogal, T. Sherman, T. Jevremovic.

“Visual numerical steering in 3D AGENT code system for advanced nuclear reactor modeling and design,” In Annals of Nuclear Energy, Vol. 55, pp. 248--257. 2013.

The AGENT simulation system is used for detailed three-dimensional modeling of neutron transport and corresponding properties of nuclear reactors of any design. The numerical solution to the neutron transport equation in the AGENT system is based on the Method of Characteristics (MOC) and the theory of R-functions, the latter of which is used to accurately describe current and future heterogeneous lattices of reactor core configurations. The AGENT code has been extensively verified to assure a high degree of accuracy for predicting neutron three-dimensional point-wise flux spatial distributions, power peaking factors, reaction rates, and eigenvalues. In this paper, a new AGENT code feature, computational steering, is presented. This new feature provides a novel way of using deterministic codes for fast evaluation of reactor core parameters with no loss of accuracy. The computational steering framework, as developed at the Technische Universität München, is smoothly integrated into the AGENT solver. This framework allows for an arbitrary interruption of an AGENT simulation, allowing the solver to restart with updated parameters. One possible use of this is to accelerate the convergence of the final values, resulting in significantly reduced simulation times. Using computational steering in the AGENT system, coarse MOC resolution parameters can initially be selected and later updated, while the simulation is actively running, to fine resolution parameters. The utility of the steering framework is demonstrated using the geometry of a research reactor at the University of Utah: this new approach provides a savings in CPU time on the order of 50%.

Keywords: Numerical steering, AGENT code, Deterministic neutron transport codes, Method of Characteristics, R-functions, Numerical visualizations