SCI Publications

2013

D. Maljovec, Bei Wang, A. Kupresanin, G. Johannesson, V. Pascucci, P.-T. Bremer.

“Adaptive Sampling with Topological Scores,” In Int. J. Uncertainty Quantification, Vol. 3, No. 2, Begell House, pp. 119--141. 2013.

DOI: 10.1615/int.j.uncertaintyquantification.2012003955

Understanding and describing expensive black box functions such as physical simulations is a common problem in many application areas. One example is the recent interest in uncertainty quantification with the goal of discovering the relationship between a potentially large number of input parameters and the output of a simulation. Typically, the simulation of interest is expensive to evaluate and thus the sampling of the parameter space is necessarily small. As a result, choosing a "good" set of samples at which to evaluate is crucial to glean as much information as possible from the fewest samples. While space-filling sampling designs such as Latin hypercubes provide a good initial cover of the entire domain, more detailed studies typically rely on adaptive sampling: Given an initial set of samples, these techniques construct a surrogate model and use it to evaluate a scoring function which aims to predict the expected gain from evaluating a potential new sample. There exist a large number of different surrogate models as well as different scoring functions, each with their own advantages and disadvantages. In this paper we present an extensive comparative study of adaptive sampling using four popular regression models combined with six traditional scoring functions compared against a space-filling design. Furthermore, for a single high-dimensional output function, we introduce a new class of scoring functions based on global topological rather than local geometric information. The new scoring functions are competitive in terms of the root mean squared prediction error but are expected to better recover the global topological structure. Our experiments suggest that the most common point of failure of adaptive sampling schemes is an ill-suited regression model. Nevertheless, even given well-fitted surrogate models, many scoring functions fail to outperform a space-filling design.
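The adaptive sampling loop described in this abstract (initial samples, surrogate model, scoring function, new sample) can be illustrated with a minimal one-dimensional sketch. Everything here is an assumption made for illustration: `simulate` is a hypothetical stand-in for an expensive simulation, the surrogate is a simple piecewise-linear interpolant, and the score (distance to the nearest sample, weighted by the surrogate's local slope) is not one of the scoring functions or regression models studied in the paper.

```python
import random


def simulate(x):
    """Hypothetical stand-in for an expensive black-box simulation."""
    return x ** 3 - x


def surrogate_slope(samples, x):
    """Slope at x of a piecewise-linear surrogate through the sorted samples."""
    pts = sorted(samples)
    # Pick the segment that brackets x; outside the sampled range the
    # first or last segment is used.
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x <= x1:
            break
    return (y1 - y0) / (x1 - x0) if x1 != x0 else 0.0


def score(samples, x):
    """Illustrative score: favour candidates far from existing samples,
    weighted up where the surrogate changes quickly."""
    distance = min(abs(x - sx) for sx, _ in samples)
    return distance * (1.0 + abs(surrogate_slope(samples, x)))


def adaptive_sample(n_initial, n_total, candidates_per_round=50, seed=0):
    """Grow a sample set one point at a time using the score above."""
    assert n_initial >= 2, "the linear surrogate needs at least two samples"
    rng = random.Random(seed)
    samples = []
    for _ in range(n_initial):
        x = rng.uniform(-1.0, 1.0)
        samples.append((x, simulate(x)))
    while len(samples) < n_total:
        candidates = [rng.uniform(-1.0, 1.0) for _ in range(candidates_per_round)]
        best = max(candidates, key=lambda c: score(samples, c))
        # Only the winning candidate is passed to the expensive function.
        samples.append((best, simulate(best)))
    return samples


samples = adaptive_sample(n_initial=5, n_total=12)
```

The point of the sketch is the structure of the loop: the expensive function is evaluated only once per round, while the cheap surrogate and score are evaluated on many candidates.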

R.K. McClure, M. Styner, J.A. Lieberman, S. Gouttard, G. Gerig, X. Shi, H. Zhu.

“Localized differences in caudate and hippocampal shape associated with schizophrenia but not antipsychotic type,” In Psychiatry Research: Neuroimaging, Vol. 211, No. 1, pp. 1--10. January, 2013.

DOI: 10.1016/j.pscychresns.2012.07.001

PubMed Central ID: PMC3557605

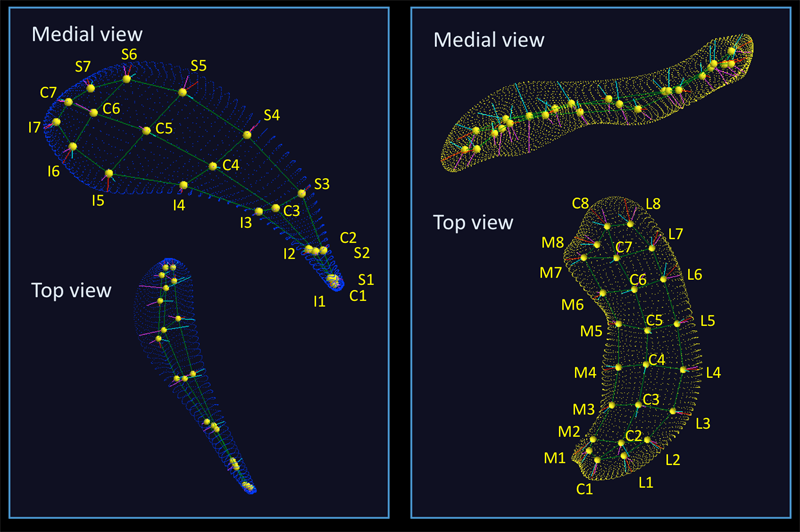

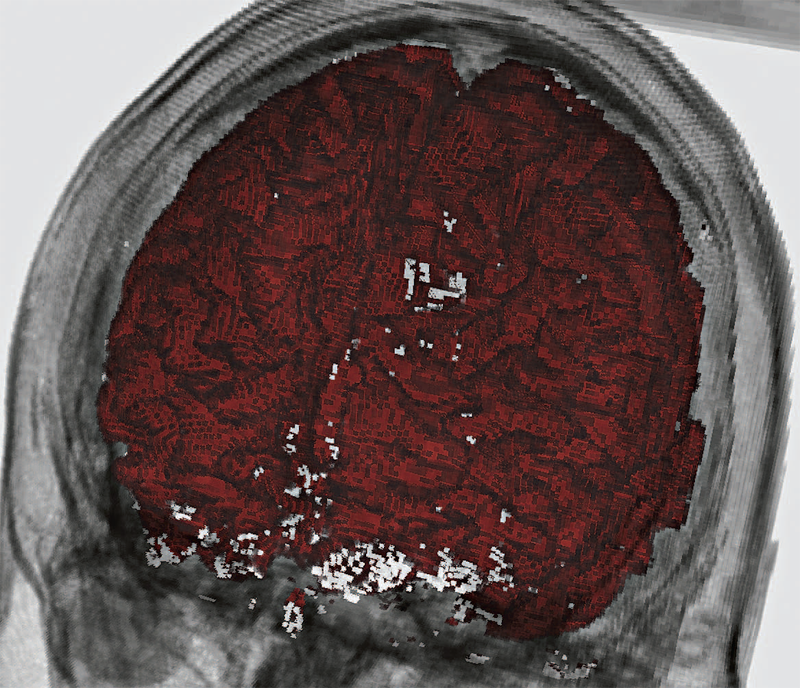

Caudate and hippocampal volume differences in patients with schizophrenia are associated with disease and antipsychotic treatment, but local shape alterations have not been thoroughly examined. Schizophrenia patients randomly assigned to haloperidol and olanzapine treatment underwent magnetic resonance imaging (MRI) at 3, 6, and 12 months. The caudate and hippocampus were represented as medial representations (M-reps), mesh structures derived from automatic segmentations of high resolution MRIs. Two quantitative shape measures were examined: local width and local deformation. A novel nonparametric statistical method, adjusted exponentially tilted (ET) likelihood, was used to compare the shape measures across the three groups while controlling for covariates. Longitudinal shape change was not observed in the hippocampus or caudate when the treatment groups and controls were examined in a global analysis, nor when the three groups were examined individually. Both baseline and repeated measures analysis showed differences in local caudate and hippocampal size between patients and controls, while no consistent differences were shown between treatment groups. Regionally specific differences in local hippocampal and caudate shape are present in schizophrenic patients. Treatment-related longitudinal shape change was not observed within the studied timeframe. Our results provide additional evidence for disrupted cortico-basal ganglia-thalamo-cortical circuits in schizophrenia. CLINICAL TRIAL INFORMATION: This longitudinal study was conducted from March 1, 1997 to July 31, 2001 at 14 academic medical centers (11 in the United States, one in Canada, one in the Netherlands, and one in England). This study was performed prior to the establishment of centralized registries of federally and privately supported clinical trials.

K.S. McDowell, F. Vadakkumpadan, R. Blake, J. Blauer, G. Plank, R.S. MacLeod, N.A. Trayanova.

“Mechanistic Inquiry into the Role of Tissue Remodeling in Fibrotic Lesions in Human Atrial Fibrillation,” In Biophysical Journal, Vol. 104, pp. 2764--2773. 2013.

DOI: 10.1016/j.bpj.2013.05.025

PubMed ID: 23790385

PubMed Central ID: PMC3686346

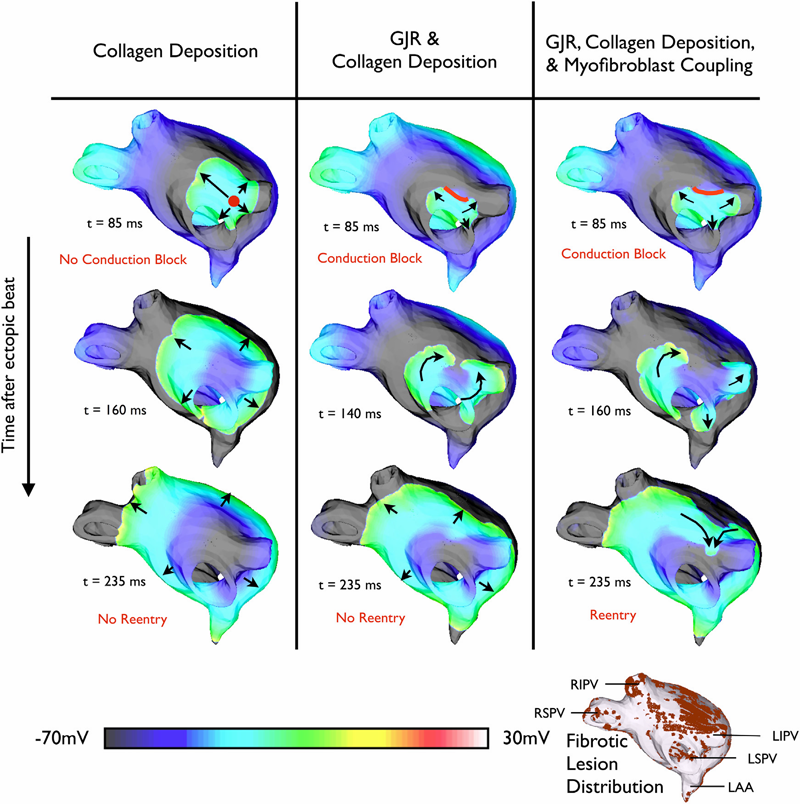

Atrial fibrillation (AF), the most common arrhythmia in humans, is initiated when triggered activity from the pulmonary veins propagates into atrial tissue and degrades into reentrant activity. Although experimental and clinical findings show a correlation between atrial fibrosis and AF, the causal relationship between the two remains elusive. This study used an array of 3D computational models with different representations of fibrosis based on a patient-specific atrial geometry with accurate fibrotic distribution to determine the mechanisms by which fibrosis underlies the degradation of a pulmonary vein ectopic beat into AF. Fibrotic lesions in models were represented with combinations of: gap junction remodeling; collagen deposition; and myofibroblast proliferation with electrotonic or paracrine effects on neighboring myocytes. The study found that the occurrence of gap junction remodeling and the subsequent conduction slowing in the fibrotic lesions was a necessary but not sufficient condition for AF development, whereas myofibroblast proliferation and the subsequent electrophysiological effect on neighboring myocytes within the fibrotic lesions was the sufficient condition necessary for reentry formation. Collagen did not alter the arrhythmogenic outcome resulting from the other fibrosis components. Reentrant circuits formed throughout the noncontiguous fibrotic lesions, without anchoring to a specific fibrotic lesion.

C. McGann, N. Akoum, A. Patel, E. Kholmovski, P. Revelo, K. Damal, B. Wilson, J. Cates, A. Harrison, R. Ranjan, N.S. Burgon, T. Greene, D. Kim, E.V.R. DiBella, D. Parker, R.S. MacLeod, N.F. Marrouche.

“Atrial Fibrillation Ablation Outcome is Predicted by Left Atrial Remodeling on MRI,” In Circulation: Arrhythmia and Electrophysiology, Note: Published online before print, December, 2013.

DOI: 10.1161/CIRCEP.113.000689

Background: While catheter ablation therapy for atrial fibrillation (AF) is becoming more common, results vary widely and patient selection criteria remain poorly defined. We hypothesized that late gadolinium enhancement magnetic resonance imaging (LGE-MRI) can identify left atrial (LA) wall structural remodeling (SRM) and stratify patients who are likely or not to benefit from ablation therapy.

Methods and Results: LGE-MRI was performed on 426 consecutive AF patients without contraindications to MRI and before undergoing their first ablation procedure and on 21 non-AF control subjects. Patients were categorized by SRM stage (I-IV) based on percentage of LA wall enhancement for correlation with procedure outcomes. Histological validation of SRM was performed comparing LGE-MRI to surgical biopsy. A total of 386 patients (91%) with adequate LGE-MRI scans were included in the study. Post-ablation, 123 (31.9%) experienced recurrent atrial arrhythmias over one-year follow-up. Recurrent arrhythmias (failed ablations) occurred at higher SRM stages with 28/133 (21.0%) stage I, 40/140 (29.3%) stage II, 24/71 (33.8%) stage III, and 30/42 (71.4%) stage IV. In multi-variate analysis, ablation outcome was best predicted by advanced SRM stage (hazard ratio (HR) 4.89; p

Keywords: atrial fibrillation arrhythmia, catheter ablation, magnetic resonance imaging, remodeling, outcome

T. McLoughlin, M.W. Jones, R.S. Laramee, R. Malki, I. Masters, C.D. Hansen.

“Similarity Measures for Enhancing Interactive Streamline Seeding,” In IEEE Transactions on Visualization and Computer Graphics (TVCG), Vol. 19, No. 8, pp. 1342--1353. 2013.

ISSN: 1077-2626

DOI: 10.1109/TVCG.2012.150

PubMed ID: 23744264

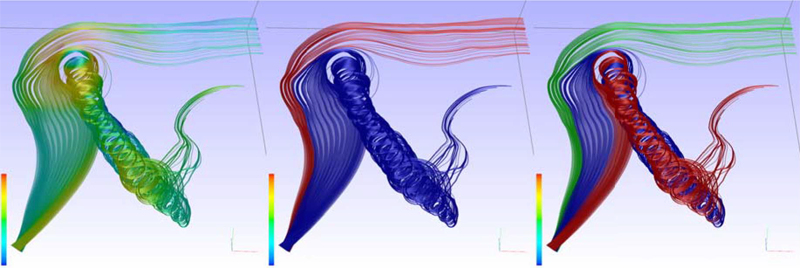

Streamline seeding rakes are widely used in vector field visualization. We present new approaches for calculating similarity between integral curves (streamlines and pathlines). While others have used similarity distance measures, the computational expense involved with existing techniques is relatively high due to the vast number of euclidean distance tests, restricting interactivity and their use for streamline seeding rakes. We introduce the novel idea of computing streamline signatures based on a set of curve-based attributes. A signature produces a compact representation for describing a streamline. Similarity comparisons are performed by using a popular statistical measure on the derived signatures. We demonstrate that this novel scheme, including a hierarchical variant, produces good clustering results and is computed over two orders of magnitude faster than previous methods. Similarity-based clustering enables filtering of the streamlines to provide a nonuniform seeding distribution along the seeding object. We show that this method preserves the overall flow behavior while using only a small subset of the original streamline set. We apply focus + context rendering using the clusters which allows for faster and easier analysis in cases of high visual complexity and occlusion. The method provides a high level of interactivity and allows the user to easily fine tune the clustering results at runtime while avoiding any time-consuming recomputation. Our method maintains interactive rates even when hundreds of streamlines are used.

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Preliminary Experiences with the Uintah Framework on Intel Xeon Phi and Stampede,” SCI Technical Report, No. UUSCI-2013-002, SCI Institute, University of Utah, 2013.

In this work, we describe our preliminary experiences on the Stampede system in the context of the Uintah Computational Framework. Uintah was developed to provide an environment for solving a broad class of fluid-structure interaction problems on structured adaptive grids. Uintah uses a combination of fluid-flow solvers and particle-based methods, together with a novel asynchronous task-based approach and fully automated load balancing. While we have designed scalable Uintah runtime systems for large CPU core counts, the emergence of heterogeneous systems presents considerable challenges in terms of effectively utilizing additional on-node accelerators and co-processors, deep memory hierarchies, as well as managing multiple levels of parallelism. Our recent work has addressed the emergence of heterogeneous CPU/GPU systems with the design of a Unified heterogeneous runtime system, enabling Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. Using this design, Uintah has run at full scale on the Keeneland System and TitanDev. With the release of the Intel Xeon Phi co-processor and the recent availability of the Stampede system, we show that Uintah may be modified to utilize such a co-processor based system. We also explore the different usage models provided by the Xeon Phi with the aim of understanding portability of a general purpose framework like Uintah to this architecture. These usage models range from the pragma based offload model to the more complex symmetric model, utilizing all co-processor and host CPU cores simultaneously. We provide preliminary results of the various usage models for a challenging adaptive mesh refinement problem, as well as a detailed account of our experience adapting Uintah to run on the Stampede system. Our conclusion is that while the Stampede system is easy to use, obtaining high performance from the Xeon Phi co-processors requires a substantial but different investment to that needed for GPU-based systems.

Keywords: Uintah, hybrid parallelism, scalability, parallel, adaptive, MIC, Xeon Phi, heterogeneous systems, Stampede, co-processor

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Investigating Applications Portability with the Uintah DAG-based Runtime System on PetaScale Supercomputers,” SCI Technical Report, No. UUSCI-2013-003, SCI Institute, University of Utah, 2013.

Present trends in high performance computing present formidable challenges for applications code using multicore nodes possibly with accelerators and/or co-processors and reduced memory while still attaining scalability. Software frameworks that execute machine-independent applications code using a runtime system that shields users from architectural complexities offer a possible solution. The Uintah framework, for example, solves a broad class of large-scale problems on structured adaptive grids using fluid-flow solvers coupled with particle-based solids methods. Uintah executes directed acyclic graphs of computational tasks with a scalable asynchronous and dynamic runtime system for CPU cores and/or accelerators/co-processors on a node. Uintah's clear separation between application and runtime code has led to scalability increases of 1000x without significant changes to application code. This methodology is tested on three leading Top500 machines: OLCF Titan, TACC Stampede and ALCF Mira using three diverse and challenging applications problems. This investigation of scalability with regard to the different processors and communications performance leads to the overall conclusion that the adaptive DAG-based approach provides a very powerful abstraction for solving challenging multi-scale multi-physics engineering problems on some of the largest and most powerful computers available today.

Keywords: Uintah, hybrid parallelism, scalability, parallel, adaptive, MIC, Xeon Phi, heterogeneous systems, Stampede, co-processor

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Investigating Applications Portability with the Uintah DAG-based Runtime System on PetaScale Supercomputers,” In Proceedings of SC13: International Conference for High Performance Computing, Networking, Storage and Analysis, pp. 96:1--96:12. 2013.

ISBN: 978-1-4503-2378-9

DOI: 10.1145/2503210.2503250

Present trends in high performance computing present formidable challenges for applications code using multicore nodes possibly with accelerators and/or co-processors and reduced memory while still attaining scalability. Software frameworks that execute machine-independent applications code using a runtime system that shields users from architectural complexities offer a possible solution. The Uintah framework, for example, solves a broad class of large-scale problems on structured adaptive grids using fluid-flow solvers coupled with particle-based solids methods. Uintah executes directed acyclic graphs of computational tasks with a scalable asynchronous and dynamic runtime system for CPU cores and/or accelerators/co-processors on a node. Uintah's clear separation between application and runtime code has led to scalability increases of 1000x without significant changes to application code. This methodology is tested on three leading Top500 machines: OLCF Titan, TACC Stampede and ALCF Mira using three diverse and challenging applications problems. This investigation of scalability with regard to the different processors and communications performance leads to the overall conclusion that the adaptive DAG-based approach provides a very powerful abstraction for solving challenging multi-scale multi-physics engineering problems on some of the largest and most powerful computers available today.

Keywords: Blue Gene/Q, GPU, Xeon Phi, adaptive, application, co-processor, heterogeneous systems, hybrid parallelism, parallel, scalability, software, uintah, NETL
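The idea of executing a directed acyclic graph of computational tasks, as mentioned in the abstract above, can be sketched in a few lines. This is only a serial illustration using Python's standard `graphlib` module; it is not Uintah's API or scheduler, and the task names (`load`, `fluid`, `solid`, `couple`) and the `run_task_graph` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def run_task_graph(tasks, deps):
    """Run each task once all of its prerequisites have finished.

    tasks: maps a task name to a callable taking the dict of results so far.
    deps:  maps a task name to the set of task names it depends on.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    results = {}
    order = []
    while sorter.is_active():
        # get_ready() returns every task whose prerequisites are all done;
        # a parallel runtime could dispatch these concurrently.
        for name in sorter.get_ready():
            results[name] = tasks[name](results)
            order.append(name)
            sorter.done(name)
    return results, order


# Hypothetical tasks: two independent solver steps feeding a coupling step.
tasks = {
    "load": lambda r: 2,
    "fluid": lambda r: r["load"] * 10,
    "solid": lambda r: r["load"] + 1,
    "couple": lambda r: r["fluid"] + r["solid"],
}
deps = {
    "load": set(),
    "fluid": {"load"},
    "solid": {"load"},
    "couple": {"fluid", "solid"},
}

results, order = run_task_graph(tasks, deps)
```

Because the application only declares tasks and their dependencies, the code that decides when and where each task runs can change without touching the task definitions, which is the separation of concerns the abstract highlights.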

Q. Meng, A. Humphrey, J. Schmidt, M. Berzins.

“Preliminary Experiences with the Uintah Framework on Intel Xeon Phi and Stampede,” In Proceedings of the Conference on Extreme Science and Engineering Discovery Environment: Gateway to Discovery (XSEDE 2013), San Diego, California, pp. 48:1--48:8. 2013.

DOI: 10.1145/2484762.2484779

In this work, we describe our preliminary experiences on the Stampede system in the context of the Uintah Computational Framework. Uintah was developed to provide an environment for solving a broad class of fluid-structure interaction problems on structured adaptive grids. Uintah uses a combination of fluid-flow solvers and particle-based methods, together with a novel asynchronous task-based approach and fully automated load balancing. While we have designed scalable Uintah runtime systems for large CPU core counts, the emergence of heterogeneous systems presents considerable challenges in terms of effectively utilizing additional on-node accelerators and co-processors, deep memory hierarchies, as well as managing multiple levels of parallelism. Our recent work has addressed the emergence of heterogeneous CPU/GPU systems with the design of a Unified heterogeneous runtime system, enabling Uintah to fully exploit these architectures with support for asynchronous, out-of-order scheduling of both CPU and GPU computational tasks. Using this design, Uintah has run at full scale on the Keeneland System and TitanDev. With the release of the Intel Xeon Phi co-processor and the recent availability of the Stampede system, we show that Uintah may be modified to utilize such a co-processor based system. We also explore the different usage models provided by the Xeon Phi with the aim of understanding portability of a general purpose framework like Uintah to this architecture. These usage models range from the pragma based offload model to the more complex symmetric model, utilizing all co-processor and host CPU cores simultaneously. We provide preliminary results of the various usage models for a challenging adaptive mesh refinement problem, as well as a detailed account of our experience adapting Uintah to run on the Stampede system. Our conclusion is that while the Stampede system is easy to use, obtaining high performance from the Xeon Phi co-processors requires a substantial but different investment to that needed for GPU-based systems.

Keywords: MIC, Xeon Phi, adaptive, co-processor, heterogeneous systems, hybrid parallelism, parallel, scalability, stampede, uintah, c-safe

D.C.B. de Oliveira, Z. Rakamaric, G. Gopalakrishnan, A. Humphrey, Q. Meng, M. Berzins.

“Crash Early, Crash Often, Explain Well: Practical Formal Correctness Checking of Million-core Problem Solving Environments for HPC,” In Proceedings of the 35th International Conference on Software Engineering (ICSE 2013), pp. (accepted). 2013.

While formal correctness checking methods have been deployed at scale in a number of important practical domains, we believe that such an experiment has yet to occur in the domain of high performance computing at the scale of a million CPU cores. This paper presents preliminary results from the Uintah Runtime Verification (URV) project that has been launched with this objective. Uintah is an asynchronous task-graph based problem-solving environment that has shown promising results on problems as diverse as fluid-structure interaction and turbulent combustion at well over 200K cores to date. Uintah has been tested on leading platforms such as Kraken, Keeneland, and Titan consisting of multicore CPUs and GPUs, incorporates several innovative design features, and is following a roadmap for development well into the million core regime. The main results from the URV project to date are crystallized in two observations: (1) A diverse array of well-known ideas from lightweight formal methods and testing/observing HPC systems at scale have an excellent chance of succeeding. The real challenges are in finding out exactly which combinations of ideas to deploy, and where. (2) Large-scale problem solving environments for HPC must be designed such that they can be "crashed early" (at smaller scales of deployment) and "crashed often" (have effective ways of input generation and schedule perturbation that cause vulnerabilities to be attacked with higher probability). Furthermore, following each crash, one must "explain well" (given the extremely obscure ways in which an error finally manifests itself, we must develop ways to record information leading up to the crash in informative ways, to minimize offsite debugging burden). Our plans to achieve these goals and to measure our success are described. We also highlight some of the broadly applicable concepts and approaches.

Keywords: Uintah

R.M. Orellana, B. Erem, D.H. Brooks.

“Time invariant multi electrode averaging for biomedical signals,” In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1242--1246. 2013.

ISSN: 1520-6149

DOI: 10.1109/ICASSP.2013.6637849

One of the biggest challenges in averaging ECG or EEG signals is to overcome temporal misalignments and distortions, due to uncertain timing or complex non-stationary dynamics. Standard methods average individual leads over a collection of epochs on a time-sample by time-sample basis, even when multi-electrode signals are available. Here we propose a method that averages multi electrode recordings simultaneously by using spatial patterns and without relying on time or frequency.

B. Paniagua, O. Emodi, J. Hill, J. Fishbaugh, L.A. Pimenta, S.R. Aylward, E. Andinet, G. Gerig, J. Gilmore, J.A. van Aalst, M. Styner.

“3D of brain shape and volume after cranial vault remodeling surgery for craniosynostosis correction in infants,” In Proceedings of SPIE 8672, Medical Imaging 2013: Biomedical Applications in Molecular, Structural, and Functional Imaging, 86720V, 2013.

DOI: 10.1117/12.2006524

The skull of young children is made up of bony plates that enable growth. Craniosynostosis is a birth defect that causes one or more sutures on an infant’s skull to close prematurely. Corrective surgery focuses on cranial and orbital rim shaping to return the skull to a more normal shape. Functional problems caused by craniosynostosis such as speech and motor delay can improve after surgical correction, but a post-surgical analysis of brain development in comparison with age-matched healthy controls is necessary to assess surgical outcome. Full brain segmentations obtained from pre- and post-operative computed tomography (CT) scans of 8 patients with single suture sagittal (n=5) and metopic (n=3), nonsyndromic craniosynostosis from 41 to 452 days-of-age were included in this study. Age-matched controls obtained via 4D acceleration-based regression of a cohort of 402 full brain segmentations from healthy controls' magnetic resonance images (MRI) were also used for comparison (ages 38 to 825 days). 3D point-based models of patient and control cohorts were obtained using the SPHARM-PDM shape analysis tool. From a full dataset of regressed shapes, 240 healthy regressed shapes between 30 and 588 days-of-age (time step = 2.34 days) were selected. Volumes and shape metrics were obtained for craniosynostosis and healthy age-matched subjects. Volumes and shape metrics in single suture craniosynostosis patients were larger than age-matched controls for pre- and post-surgery. The use of 3D shape and volumetric measurements shows that brain growth is not normal in patients with single suture craniosynostosis.

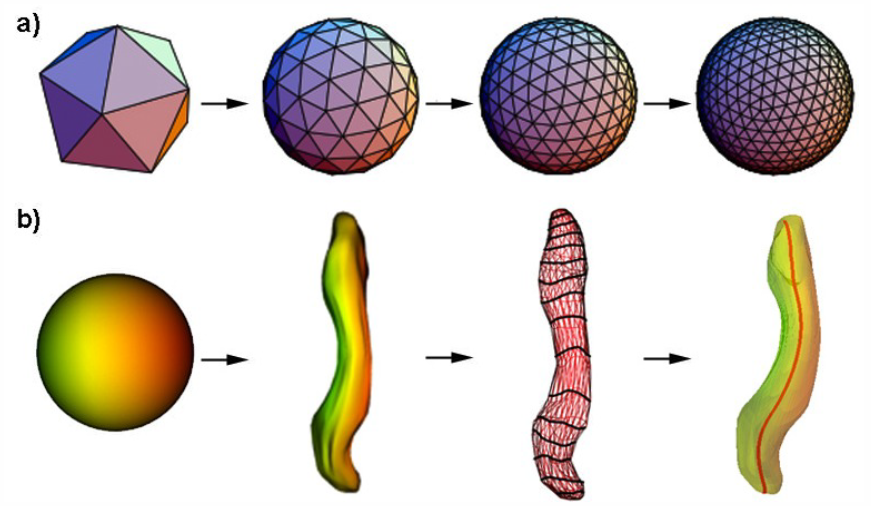

B. Paniagua, A. Lyall, J.-B. Berger, C. Vachet, R.M. Hamer, S. Woolson, W. Lin, J. Gilmore, M. Styner.

“Lateral ventricle morphology analysis via mean latitude axis,” In Proceedings of SPIE 8672, Biomedical Applications in Molecular, Structural, and Functional Imaging, 86720M, 2013.

DOI: 10.1117/12.2006846

PubMed ID: 23606800

PubMed Central ID: PMC3630372

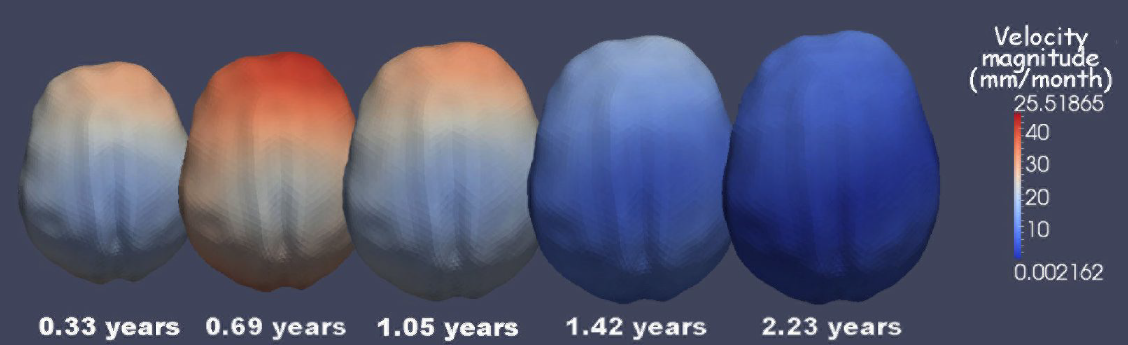

Statistical shape analysis has emerged as an insightful method for evaluating brain structures in neuroimaging studies; however, most shape frameworks are surface based and thus directly depend on the quality of surface alignment. In contrast, medial descriptions employ thickness information as an alignment-independent shape metric. We propose a joint framework that computes local medial thickness information via a mean latitude axis from the well-known spherical harmonic (SPHARM-PDM) shape framework. In this work, we applied SPHARM derived medial representations to the morphological analysis of lateral ventricles in neonates. Mild ventriculomegaly (MVM) subjects are compared to healthy controls to highlight the potential of the methodology. Lateral ventricles were obtained from MRI scans of neonates (9-144 days of age) from 30 MVM subjects as well as age- and sex-matched normal controls (60 total). SPHARM-PDM shape analysis was extended to compute a mean latitude axis directly from the spherical parameterization. Local thickness and area were straightforwardly determined. MVM and healthy controls were compared using local MANOVA and compared with the traditional SPHARM-PDM analysis. Both surface and mean latitude axis findings successfully differentiate MVM and healthy lateral ventricle morphology. Lateral ventricles in MVM neonates show enlarged shapes in tail and head. Mean latitude axis is able to find significant differences all along the lateral ventricle shape, demonstrating that local thickness analysis provides significant insight over traditional SPHARM-PDM. This study is the first to precisely quantify 3D lateral ventricle morphology in MVM neonates using shape analysis.

C. Partl, A. Lex, M. Streit, D. Kalkofen, K. Kashofer, D. Schmalstieg.

“enRoute: Dynamic Path Extraction from Biological Pathway Maps for Exploring Heterogeneous Experimental Datasets,” In BMC Bioinformatics, Vol. 14, No. Suppl 19, Nov, 2013.

ISSN: 1471-2105

DOI: 10.1186/1471-2105-14-S19-S3

Jointly analyzing biological pathway maps and experimental data is critical for understanding how biological processes work in different conditions and why different samples exhibit certain characteristics. This joint analysis, however, poses a significant challenge for visualization. Current techniques are either well suited to visualize large amounts of pathway node attributes, or to represent the topology of the pathway well, but do not accomplish both at the same time. To address this we introduce enRoute, a technique that enables analysts to specify a path of interest in a pathway, extract this path into a separate, linked view, and show detailed experimental data associated with the nodes of this extracted path right next to it. This juxtaposition of the extracted path and the experimental data allows analysts to simultaneously investigate large amounts of potentially heterogeneous data, thereby solving the problem of joint analysis of topology and node attributes. As this approach does not modify the layout of pathway maps, it is compatible with arbitrary graph layouts, including those of hand-crafted, image-based pathway maps. We demonstrate the technique in context of pathways from the KEGG and the Wikipathways databases. We apply experimental data from two public databases, the Cancer Cell Line Encyclopedia (CCLE) and The Cancer Genome Atlas (TCGA) that both contain a wide variety of genomic datasets for a large number of samples. In addition, we make use of a smaller dataset of hepatocellular carcinoma and common xenograft models. To verify the utility of enRoute, domain experts conducted two case studies where they explore data from the CCLE and the hepatocellular carcinoma datasets in the context of relevant pathways.

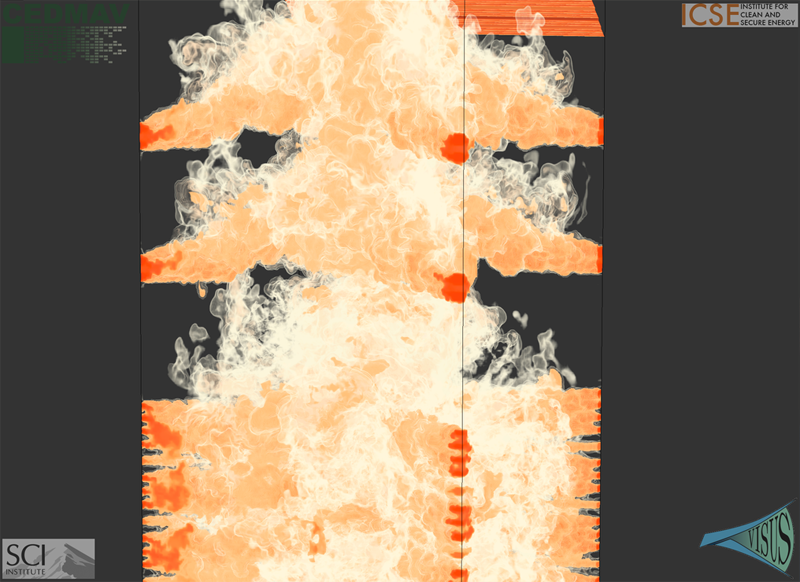

V. Pascucci, P.-T. Bremer, A. Gyulassy, G. Scorzelli, C. Christensen, B. Summa, S. Kumar.

“Scalable Visualization and Interactive Analysis Using Massive Data Streams,” In Cloud Computing and Big Data, Advances in Parallel Computing, Vol. 23, IOS Press, pp. 212--230. 2013.

Historically, data creation and storage has always outpaced the infrastructure for its movement and utilization. This trend is increasing now more than ever, with the ever growing size of scientific simulations, increased resolution of sensors, and large mosaic images. Effective exploration of massive scientific models demands the combination of data management, analysis, and visualization techniques, working together in an interactive setting. The ViSUS application framework has been designed as an environment that allows the interactive exploration and analysis of massive scientific models in a cache-oblivious, hardware-agnostic manner, enabling processing and visualization of possibly geographically distributed data using many kinds of devices and platforms.

For general purpose feature segmentation and exploration we discuss a new paradigm based on topological analysis. This approach enables the extraction of summaries of features present in the data through abstract models that are orders of magnitude smaller than the raw data, providing enough information to support general queries and perform a wide range of analyses without access to the original data.

Keywords: Visualization, data analysis, topological data analysis, Parallel I/O

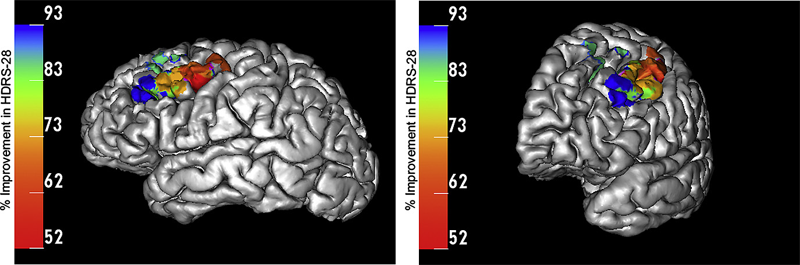

Y. Pathak, B.H. Kopell, A. Szabo, C. Rainey, H. Harsch, C.R. Butson.

“The role of electrode location and stimulation polarity in patient response to cortical stimulation for major depressive disorder,” In Brain Stimulation, Vol. 6, No. 3, Elsevier Ltd., pp. 254--260. July, 2013.

ISSN: 1935-861X

DOI: 10.1016/j.brs.2012.07.001

BACKGROUND: Major depressive disorder (MDD) is a neuropsychiatric condition that affects about one-sixth of the US population. Chronic epidural stimulation (EpCS) of the left dorsolateral prefrontal cortex (DLPFC) was recently evaluated as a treatment option for refractory MDD and was found to be effective during the open-label phase. However, two potential sources of variability in the study were differences in electrode position and the range of stimulation modes that were used in each patient. The objective of this study was to examine these factors in an effort to characterize successful EpCS therapy.

METHODS: Data were analyzed from eleven patients who received EpCS via a chronically implanted system. Estimates were generated of response probability as a function of duration of stimulation. The relative effectiveness of different stimulation modes was also evaluated. Lastly, a computational analysis of the pre- and post-operative imaging was performed to assess the effects of electrode location. The primary outcome measure was the change in Hamilton Depression Rating Scale (HDRS-28).

RESULTS: Significant improvement was observed in mixed mode stimulation (alternating cathodic and anodic) and continuous anodic stimulation (full power). The changes observed in HDRS-28 over time suggest that 20 weeks of stimulation are necessary to approach a 50% response probability. Lastly, stimulation in the lateral and anterior regions of DLPFC was correlated with greatest degree of improvement.

CONCLUSIONS: A persistent problem in neuromodulation studies has been the selection of stimulation parameters and electrode location to provide optimal therapeutic response. The approach used in this paper suggests that insights can be gained by performing a detailed analysis of response while controlling for important details such as electrode location and stimulation settings.

Keywords: cortical stimulation

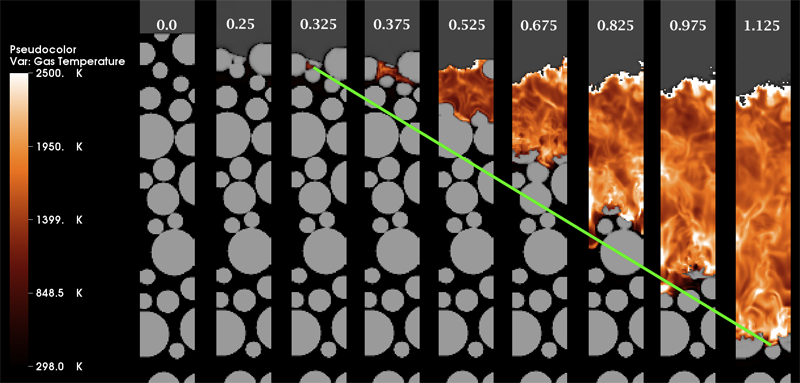

J.R. Peterson, C.A. Wight, M. Berzins.

“Applying high-performance computing to petascale explosive simulations,” In Procedia Computer Science, 2013.

Hazardous scenarios involving explosives are difficult to study experimentally, and simulation is often the only viable approach to study highly reactive phenomena. Explosive simulations are computationally expensive, requiring supercomputing resources for continued scientific discovery in the field. Here an idealized mesoscale simulation of explosive grains under mechanical insult by a high-speed projectile with reaction represented by a novel kinetic model is designed to test the scalability of the Uintah software on petascale supercomputers. Good scalability is found up to 49K processors. Timing breakdowns of computational tasks are determined, with relocation of Lagrangian particles and interpolation of those particles to the grid identified as the most expensive operations and ideal candidates for optimization. Potential optimization strategies are identified. Realistic model simulations rather than toy model simulations are found to better represent scalability of a science code on a supercomputer. Estimations for total supercomputer hours necessary to complete the kinetic model validation study are reported.

Keywords: Energetic Material Hazards, Uintah, MPM, ICE, MPMICE, Scalable Parallelism, C-SAFE

S. Philip, B. Summa, J. Tierny, P.-T. Bremer, V. Pascucci.

“Scalable Seams for Gigapixel Panoramas,” In Proceedings of the 2013 Eurographics Symposium on Parallel Graphics and Visualization, Note: Awarded Best Paper!, pp. 25--32. 2013.

DOI: 10.2312/EGPGV/EGPGV13/025-032

Gigapixel panoramas are an increasingly popular digital image application. They are often created as a mosaic of smaller images composited into a larger single image. The mosaic acquisition can occur over many hours, causing the individual images to differ in exposure and lighting conditions. Therefore, to give the appearance of a single seamless image, a blending operation is necessary. The quality of this blending depends on the magnitude of discontinuity along the boundaries between the images. Often image boundaries, or seams, are first computed to minimize this transition. Current techniques based on the multi-labeling Graph Cuts method are too slow and memory intensive for panoramas many gigapixels in size. In this paper we present a multithreaded out-of-core seam computing technique that is fast, has a small memory footprint, and gives near perfect scaling up to the number of physical cores of our test system. With this method the time required to compute image boundaries for gigapixel imagery improves from many hours (or even days) to just a few minutes on commodity hardware while still producing boundaries with energy that is on par with, if not better than, Graph Cuts.

K. Potter, S. Gerber, E.W. Anderson.

“Visualization of Uncertainty without a Mean,” In IEEE Computer Graphics and Applications, Visualization Viewpoints, Vol. 33, No. 1, pp. 75--79. 2013.

As dataset size and complexity steadily increase, uncertainty is becoming an important data aspect. So, today's visualizations need to incorporate indications of uncertainty. However, characterizing uncertainty for visualization isn't always straightforward. Entropy, in the information-theoretic sense, can be a measure for uncertainty in categorical datasets. The authors discuss the mathematical formulation, interpretation, and use of entropy in visualizations. This research aims to demonstrate entropy as a metric and expand the vocabulary of uncertainty measures for visualization.
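As a small illustration of the idea in the abstract above, the sketch below computes Shannon entropy for a set of categorical labels. It is not taken from the article; the function name `shannon_entropy` and the sample labels are assumptions made for this example. An entropy of 0 bits means every sample agrees, and larger values indicate that the categories are more evenly mixed, that is, more uncertain.

```python
import math
from collections import Counter


def shannon_entropy(labels):
    """Return the Shannon entropy, in bits, of a sequence of categorical labels.

    Hypothetical helper for illustration only; not code from the article.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("labels must not be empty")
    # H = sum over categories of p * log2(1 / p), where p is the relative frequency.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# All samples agree: no uncertainty.
certain = shannon_entropy(["forest", "forest", "forest", "forest"])

# Four equally likely categories: maximal uncertainty for four classes (2 bits).
mixed = shannon_entropy(["forest", "water", "urban", "crop"])
```

In a visualization, such a per-location value could be mapped to color or opacity, which gives a scalar uncertainty measure for data where a mean and standard deviation are not defined.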

N. Ramesh, T. Tasdizen.

“Three-dimensional alignment and merging of confocal microscopy stacks,” In 2013 IEEE International Conference on Image Processing, IEEE, September, 2013.

DOI: 10.1109/icip.2013.6738297

We describe an efficient, robust, automated method for image alignment and merging of translated, rotated and flipped confocal microscopy stacks. The samples are captured in both directions (top and bottom) to increase the SNR of the individual slices. We identify the overlapping region of the two stacks by using a variable depth Maximum Intensity Projection (MIP) in the z dimension. For each depth tested, the MIP images give an estimate of the angle of rotation between the stacks and the shifts in the x and y directions using the Fourier Shift property in 2D. We apply the estimated rotation angle and the shifts in the x and y directions, and align the images in the z direction. A linear blending technique based on a sigmoidal function is used to maximize the information from the stacks and combine them. We get maximum information gain as we combine stacks obtained from both directions.
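The Fourier shift property mentioned in the abstract above can be illustrated with a generic phase-correlation sketch. This is not the authors' implementation: it assumes NumPy is available, handles only integer, circular translations between two 2D images of equal size, and leaves out the rotation estimate, the z alignment and the blending. The function name `estimate_shift` is hypothetical.

```python
import numpy as np


def estimate_shift(reference, moved):
    """Estimate the integer (dy, dx) with moved ~= np.roll(reference, (dy, dx), axis=(0, 1)).

    Generic phase correlation; an illustrative assumption, not the paper's code.
    """
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    # A translation only changes the phase of the spectrum, so the normalized
    # cross-power spectrum is a pure phase ramp that encodes the shift.
    cross = f_mov * np.conj(f_ref)
    cross /= np.maximum(np.abs(cross), 1e-12)
    # The inverse transform of that phase ramp peaks at the shift.
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks past the midpoint correspond to negative shifts (circular wrap-around).
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, corr.shape))


# Synthetic check: shift a random image by a known amount and recover it.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
shifted = np.roll(image, (5, -7), axis=(0, 1))
recovered = estimate_shift(image, shifted)
```

For real microscopy data the shift is neither circular nor exactly integer, so windowing and sub-pixel peak interpolation would normally be added on top of this sketch.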