SCI Publications

2013

M. Datar, I. Lyu, S. Kim, J. Cates, M.A. Styner, R.T. Whitaker.

“Geodesic distances to landmarks for dense correspondence on ensembles of complex shapes,” In Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2013), Vol. 16(Pt. 2), pp. 19--26. 2013.

PubMed ID: 24579119

Establishing correspondence points across a set of biomedical shapes is an important technology for a variety of applications that rely on statistical analysis of individual subjects and populations. The inherent complexity (e.g. cortical surface shapes) and variability (e.g. cardiac chambers) evident in many biomedical shapes introduce significant challenges in finding a useful set of dense correspondences. Application specific strategies, such as registration of simplified (e.g. inflated or smoothed) surfaces or relying on manually placed landmarks, provide some improvement but suffer from limitations including increased computational complexity and ambiguity in landmark placement. This paper proposes a method for dense point correspondence on shape ensembles using geodesic distances to a priori landmarks as features. A novel set of numerical techniques for fast computation of geodesic distances to point sets is used to extract these features. The proposed method minimizes the ensemble entropy based on these features, resulting in isometry invariant correspondences in a very general, flexible framework.

D.J. Dosdall, R. Ranjan, K. Higuchi, E. Kholmovski, N. Angel, L. Li, R.S. Macleod, L. Norlund, A. Olsen, C.J. Davies, N.F. Marrouche.

“Chronic atrial fibrillation causes left ventricular dysfunction in dogs but not goats: experience with dogs, goats, and pigs,” In American Journal of Physiology: Heart and Circulatory Physiology, Vol. 305, No. 5, pp. H725--H731. September, 2013.

DOI: 10.1152/ajpheart.00440.2013

PubMed ID: 23812387

PubMed Central ID: PMC4116536

Structural remodeling in chronic atrial fibrillation (AF) occurs over weeks to months. To study the electrophysiological, structural, and functional changes that occur in chronic AF, the selection of the best animal model is critical. AF was induced by rapid atrial pacing (50-Hz stimulation every other second) in pigs (n = 4), dogs (n = 8), and goats (n = 9). Animals underwent MRIs at baseline and 6 mo to evaluate left ventricular (LV) ejection fraction (EF). Dogs were given metoprolol (50-100 mg po bid) and digoxin (0.0625-0.125 mg po bid) to limit the ventricular response rate to [...] not appropriate for chronic rapid atrial pacing-induced AF studies. Rate-controlled chronic AF in the dog model developed HF and LV fibrosis, whereas the goat model developed only atrial fibrosis without ventricular dysfunction and fibrosis. Both the dog and goat models are representative of segments of the patient population with chronic AF.

Keywords: animal models, chronic atrial fibrillation, fibrosis, heart failure, rapid atrial pacing

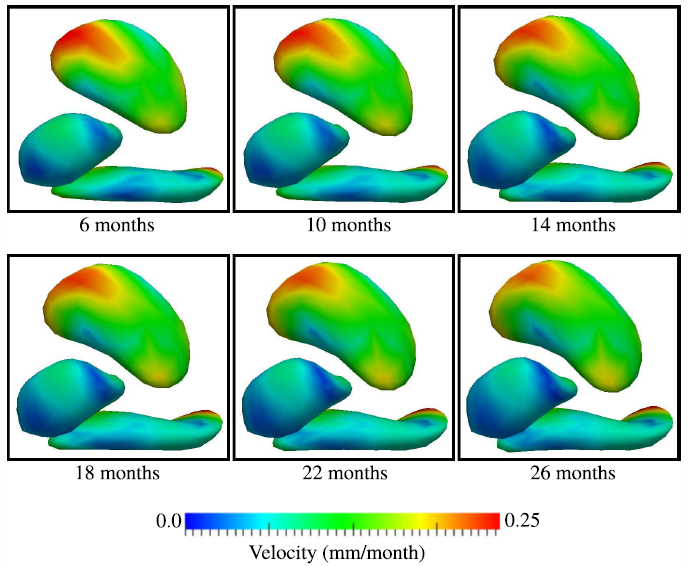

S. Durrleman, X. Pennec, A. Trouvé, J. Braga, G. Gerig, N. Ayache.

“Toward a comprehensive framework for the spatiotemporal statistical analysis of longitudinal shape data,” In International Journal of Computer Vision (IJCV), Vol. 103, No. 1, pp. 22--59. September, 2013.

DOI: 10.1007/s11263-012-0592-x

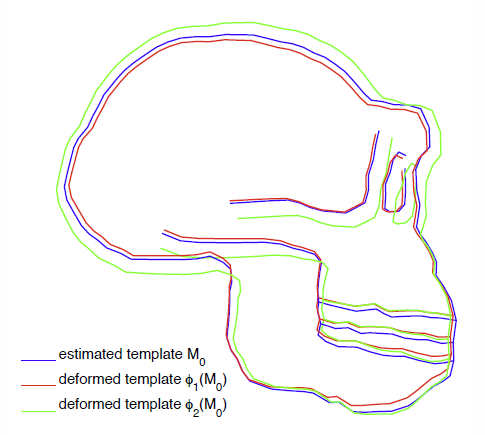

This paper proposes an original approach for the statistical analysis of longitudinal shape data. The proposed method allows the characterization of typical growth patterns and subject-specific shape changes in repeated time-series observations of several subjects. This can be seen as the extension of usual longitudinal statistics of scalar measurements to high-dimensional shape or image data.

The method is based on the estimation of continuous subject-specific growth trajectories and the comparison of such temporal shape changes across subjects. Differences between growth trajectories are decomposed into morphological deformations, which account for shape changes independent of the time, and time warps, which account for different rates of shape changes over time.

Given a longitudinal shape data set, we estimate a mean growth scenario representative of the population, and the variations of this scenario both in terms of shape changes and in terms of change in growth speed. Then, intrinsic statistics are derived in the space of spatiotemporal deformations, which characterize the typical variations in shape and in growth speed within the studied population. They can be used to detect systematic developmental delays across subjects.

In the context of neuroscience, we apply this method to analyze the differences in the growth of the hippocampus in children diagnosed with autism, developmental delays and in controls. Results suggest that group differences may be better characterized by a different speed of maturation rather than shape differences at a given age. In the context of anthropology, we assess the differences in the typical growth of the endocranium between chimpanzees and bonobos. We take advantage of this study to show the robustness of the method with respect to change of parameters and perturbation of the age estimates.

S. Durrleman, S. Allassonnière, S. Joshi.

“Sparse adaptive parameterization of variability in image ensembles,” In International Journal of Computer Vision (IJCV), Vol. 101, No. 1, pp. 161--183. 2013.

DOI: 10.1007/s11263-012-0556-1

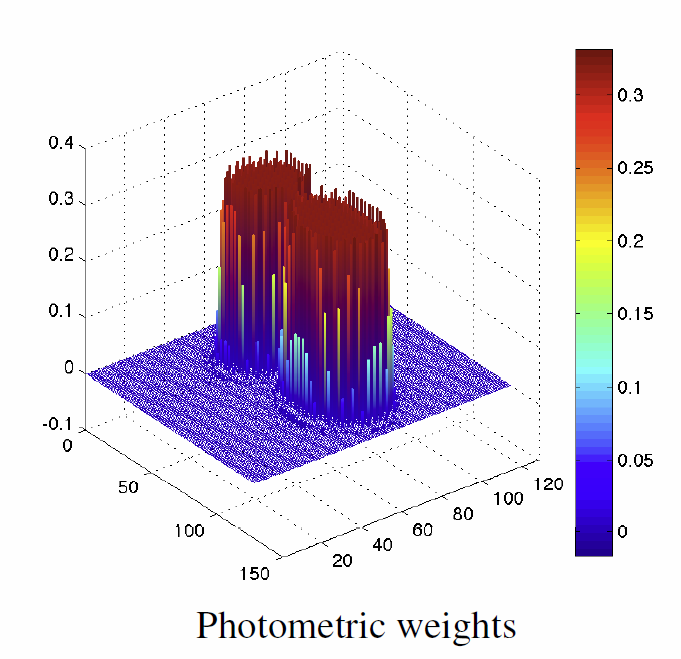

This paper introduces a new parameterization of diffeomorphic deformations for the characterization of the variability in image ensembles. Dense diffeomorphic deformations are built by interpolating the motion of a finite set of control points that forms a Hamiltonian flow of self-interacting particles. The proposed approach estimates a template image representative of a given image set, an optimal set of control points that focuses on the most variable parts of the image, and template-to-image registrations that quantify the variability within the image set. The method automatically selects the most relevant control points for the characterization of the image variability and estimates their optimal positions in the template domain. The optimization in position is done during the estimation of the deformations without adding any computational cost at each step of the gradient descent. The selection of the control points is done by adding an L1 prior to the objective function, which is optimized using the FISTA algorithm.
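As a rough illustration of how an L1 prior combined with FISTA selects a sparse subset of parameters, the following Python sketch applies FISTA to a plain LASSO problem, min 0.5·||Ax − b||² + λ·||x||₁. It is not the paper's objective (which involves control points and diffeomorphic registration); the function names `soft_threshold` and `fista_lasso` and all numeric values are assumptions made for this example.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each entry toward zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def fista_lasso(A, b, lam, n_iters=200):
    """Minimize 0.5 * ||A x - b||^2 + lam * ||x||_1 with FISTA (illustrative only)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    # Lipschitz constant of the gradient of the smooth term.
    lipschitz = np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    y = x.copy()
    t = 1.0
    for _ in range(n_iters):
        grad = A.T @ (A @ y - b)
        # Gradient step on the smooth term, then shrinkage for the L1 term.
        x_new = soft_threshold(y - grad / lipschitz, lam / lipschitz)
        # Momentum update that distinguishes FISTA from plain ISTA.
        t_new = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        y = x_new + ((t - 1.0) / t_new) * (x_new - x)
        x, t = x_new, t_new
    return x


if __name__ == "__main__":
    # With A = I the minimizer is the soft-thresholded data: small entries vanish.
    print(fista_lasso(np.eye(3), [3.0, -0.5, 1.5], lam=1.0))
```

In this toy setting the entry with magnitude below λ is driven exactly to zero, which is the same mechanism by which an L1 term can switch off irrelevant parameters.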

L.T. Edgar, S.C. Sibole, C.J. Underwood, J.E. Guilkey, J.A. Weiss.

“A computational model of in vitro angiogenesis based on extracellular matrix fiber orientation,” In Computer Methods in Biomechanical and Biomedical Engineering, Vol. 16, No. 7, pp. 790--801. 2013.

DOI: 10.1080/10255842.2012.662678

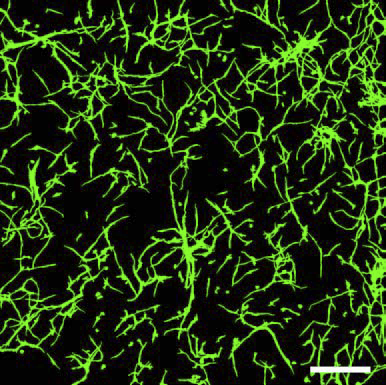

Recent interest in the process of vascularisation within the biomedical community has motivated numerous new research efforts focusing on the process of angiogenesis. Although the role of chemical factors during angiogenesis has been well documented, the role of mechanical factors, such as the interaction between angiogenic vessels and the extracellular matrix, remains poorly understood. In vitro methods for studying angiogenesis exist; however, measurements available using such techniques often suffer from limited spatial and temporal resolutions. For this reason, computational models have been extensively employed to investigate various aspects of angiogenesis. This paper outlines the formulation and validation of a simple and robust computational model developed to accurately simulate angiogenesis based on length, branching and orientation morphometrics collected from vascularised tissue constructs. Microvessels were represented as a series of connected line segments. The morphology of the vessels was determined by a linear combination of the collagen fibre orientation, the vessel density gradient and a random walk component. Excellent agreement was observed between computational and experimental morphometric data over time. Computational predictions of microvessel orientation within an anisotropic matrix correlated well with experimental data. The accuracy of this modelling approach makes it a valuable platform for investigating the role of mechanical interactions during angiogenesis.

S. Elhabian, A. Farag, D. Tasman, W. Aboelmaaty, A. Farman.

“Clinical Crowns Shape Reconstruction - An Image-based Approach,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 93--96. 2013.

DOI: 10.1109/ISBI.2013.6556420

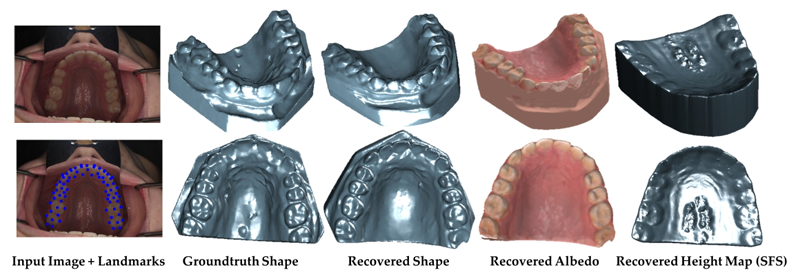

Precise knowledge of the 3D shape of clinical crowns is crucial for the treatment of malocclusion problems as well as several endodontic procedures. While Computed Tomography (CT) would present such information, it is believed that there is no threshold radiation dose below which it is considered safe. In this paper, we propose an image-based approach which allows for the construction of plausible human jaw models in vivo, without ionizing radiation, using fewer sample points in order to reduce the cost and intrusiveness of acquiring models of patients' teeth/jaws over time. We assume that human teeth reflectance obeys the Wolff-Oren-Nayar model, where we experimentally prove that the teeth surface obeys the microfacet theory. The inherent relation between the photometric information and the underlying 3D shape is formulated as a statistical model where the coupled effect of illumination and reflectance is modeled using the Helmholtz Hemispherical Harmonics (HSH)-based irradiance harmonics, whereas the Principal Component Regression (PCR) approach is deployed to carry out the estimation of dense 3D shapes. Vis-a-vis dental applications, the results demonstrate a significant increase in accuracy in favor of the proposed approach, where our system is evaluated on a database of 16 jaws.

J.T. Elison, J.J. Wolff, D.C. Heimer, S.J. Paterson, H. Gu, M. Styner, G. Gerig, J. Piven, the IBIS Network.

“Frontolimbic neural circuitry at 6 months predicts individual differences in joint attention at 9 months,” In Developmental Science, Vol. 16, No. 2, Wiley-Blackwell, pp. 186--197. 2013.

DOI: 10.1111/desc.12015

PubMed Central ID: PMC3582040

Elucidating the neural basis of joint attention in infancy promises to yield important insights into the development of language and social cognition, and directly informs developmental models of autism. We describe a new method for evaluating responding to joint attention performance in infancy that highlights the 9- to 10-month period as a time interval of maximal individual differences. We then demonstrate that fractional anisotropy in the right uncinate fasciculus, a white matter fiber bundle connecting the amygdala to the ventral-medial prefrontal cortex and anterior temporal pole, measured in 6-month-olds predicts individual differences in responding to joint attention at 9 months of age. The white matter microstructure of the right uncinate was not related to receptive language ability at 9 months. These findings suggest that the development of core nonverbal social communication skills in infancy is largely supported by preceding developments within right lateralized frontotemporal brain systems.

J.T. Elison, S.J. Paterson, J.J. Wolff, J.S. Reznick, N.J. Sasson, H. Gu, K.N. Botteron, S.R. Dager, A.M. Estes, A.C. Evans, G. Gerig, H.C. Hazlett, R.T. Schultz, M. Styner, L. Zwaigenbaum, J. Piven for the IBIS Network.

“White Matter Microstructure and Atypical Visual Orienting in 7 Month-Olds at Risk for Autism,” In American Journal of Psychiatry, Vol. AJP-12-09-1150.R2, March, 2013.

DOI: 10.1176/appi.ajp.2012.12091150

PubMed ID: 23511344

Objective: To determine whether specific patterns of oculomotor functioning and visual orienting characterize 7 month-old infants later classified with an autism spectrum disorder (ASD) and to identify the neural correlates of these behaviors.

Method: Ninety-seven infants contributed data to the current study (16 high-familial risk infants later classified with an ASD, 40 high-familial risk infants not meeting ASD criteria (high-risk-negative), and 41 low-risk infants). All infants completed an eye tracking task at 7 months and a clinical assessment at 25 months; diffusion weighted imaging data was acquired on 84 infants at 7 months. Primary outcome measures included average saccadic reaction time in a visually guided saccade procedure and radial diffusivity (an index of white matter organization) in fiber tracts that included corticospinal pathways and the splenium and genu of the corpus callosum.

Results: Visual orienting latencies were increased in seven-month-old infants who later express ASD symptoms at 25 months when compared with both high-risk-negative infants (p = 0.012, d = 0.73) and low-risk infants (p = 0.032, d = 0.71). Visual orienting latencies were uniquely associated with the microstructural organization of the splenium of the corpus callosum in low-risk infants, but this association was not apparent in infants later classified with ASD.

Conclusions: Flexibly and efficiently orienting to salient information in the environment is critical for subsequent cognitive and social-cognitive development. Atypical visual orienting may represent an early-emerging prodromal feature of ASD, and abnormal functional specialization of posterior cortical circuits directly informs a novel model of ASD pathogenesis.

B. Erem, J. Coll-Font, R.M. Orellana, P. Stovicek, D.H. Brooks, R.S. MacLeod.

“Noninvasive reconstruction of potentials on endocardial surface from body surface potentials and CT imaging of partial torso,” In Journal of Electrocardiology, Vol. 46, No. 4, pp. e28. 2013.

DOI: 10.1016/j.jelectrocard.2013.05.104

B. Erem, R.M. Orellana, P. Stovicek, D.H. Brooks, R.S. MacLeod.

“Improved averaging of multi-lead ECGs and electrograms,” In Journal of Electrocardiology, Vol. 46, No. 4, Elsevier, pp. e28. July, 2013.

DOI: 10.1016/j.jelectrocard.2013.05.103

T. Etiene, D. Jonsson, T. Ropinski, C. Scheidegger, J. Comba, L. Gustavo Nonato, R.M. Kirby, A. Ynnerman, C.T. Silva.

“Verifying Volume Rendering Using Discretization Error Analysis,” SCI Technical Report, No. UUSCI-2013-001, SCI Institute, University of Utah, 2013.

We propose an approach for verification of volume rendering correctness based on an analysis of the volume rendering integral, the basis for most DVR algorithms. With respect to the most common discretization of this continuous model, we make assumptions about the impact of parameter changes on the rendered results and derive convergence curves describing the expected behavior. Specifically, we progressively refine the number of samples along the ray, the grid size, and the pixel size, and evaluate how the errors observed during refinement compare against the expected approximation errors. We will derive the theoretical foundations of our verification approach, explain how to realize it in practice and discuss its limitations as well as the identified errors.
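The idea of comparing observed errors against an expected convergence rate can be sketched on a toy case. The Python example below assumes a homogeneous medium with constant extinction and emission, for which the volume rendering integral has a closed form, and uses a first-order opacity approximation per sample. It then estimates the observed order of convergence when the number of samples along the ray is doubled. The function names and the choice of test medium are assumptions for illustration and are not taken from the report.

```python
import math


def composite_constant_medium(n_samples, extinction=1.0, emission=1.0, depth=1.0):
    """Front-to-back compositing through a homogeneous medium (toy discretization)."""
    step = depth / n_samples
    alpha = extinction * step  # first-order approximation of per-sample opacity
    color, transmittance = 0.0, 1.0
    for _ in range(n_samples):
        color += transmittance * alpha * emission
        transmittance *= 1.0 - alpha
    return color


def exact_constant_medium(extinction=1.0, emission=1.0, depth=1.0):
    """Closed form of the emission-absorption integral for constant coefficients."""
    return emission * (1.0 - math.exp(-extinction * depth))


def observed_order(n_samples):
    """Estimate the convergence order from errors at n and 2n samples along the ray."""
    exact = exact_constant_medium()
    err_coarse = abs(composite_constant_medium(n_samples) - exact)
    err_fine = abs(composite_constant_medium(2 * n_samples) - exact)
    return math.log2(err_coarse / err_fine)


if __name__ == "__main__":
    for n in (16, 64, 256):
        print(n, observed_order(n))
```

For this discretization the error is expected to shrink linearly with the step size, so an observed order far from 1 would point to a defect in the implementation rather than in the model.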

Keywords: discretization errors, volume rendering, verifiable visualization

K. Fakhar, E. Hastings, C.R. Butson, K.D. Foote, P. Zeilman, M.S. Okun.

“Management of deep brain stimulator battery failure: battery estimators, charge density, and importance of clinical symptoms,” In PloS One, Vol. 8, No. 3, pp. e58665. January, 2013.

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0058665

PubMed ID: 23536810

We aimed in this investigation to study deep brain stimulation (DBS) battery drain with special attention directed toward patient symptoms prior to and following battery replacement.

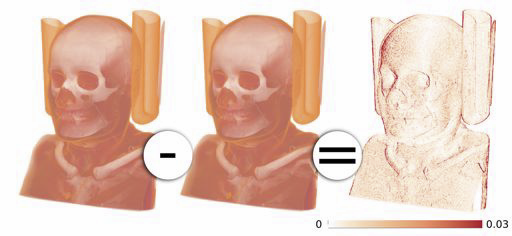

M. Farzinfar, Y. Li, A.R. Verde, I. Oguz, G. Gerig, M.A. Styner.

“DTI Quality Control Assessment via Error Estimation From Monte Carlo Simulations,” In Proceedings of SPIE 8669, Medical Imaging 2013: Image Processing, Vol. 8669, 2013.

DOI: 10.1117/12.2006925

PubMed ID: 23833547

PubMed Central ID: PMC3702180

Diffusion Tensor Imaging (DTI) is currently the state of the art method for characterizing the microscopic tissue structure of white matter in normal or diseased brain in vivo. DTI is estimated from a series of Diffusion Weighted Imaging (DWI) volumes. DWIs suffer from a number of artifacts which mandate stringent Quality Control (QC) schemes to eliminate lower quality images for optimal tensor estimation. Conventionally, QC procedures exclude artifact-affected DWIs from subsequent computations leading to a cleaned, reduced set of DWIs, called DWI-QC. Often, a rejection threshold is heuristically/empirically chosen above which the entire DWI-QC data is rendered unacceptable and thus no DTI is computed. In this work, we have devised a more sophisticated, Monte-Carlo (MC) simulation based method for the assessment of resulting tensor properties. This allows for a consistent, error-based threshold definition in order to reject/accept the DWI-QC data. Specifically, we propose the estimation of two error metrics related to directional distribution bias of Fractional Anisotropy (FA) and the Principal Direction (PD). The bias is modeled from the DWI-QC gradient information and a Rician noise model incorporating the loss of signal due to the DWI exclusions. Our simulations further show that the estimated bias can be substantially different with respect to magnitude and directional distribution depending on the degree of spatial clustering of the excluded DWIs. Thus, determination of diffusion properties with minimal error requires an evenly distributed sampling of the gradient directions before and after QC.

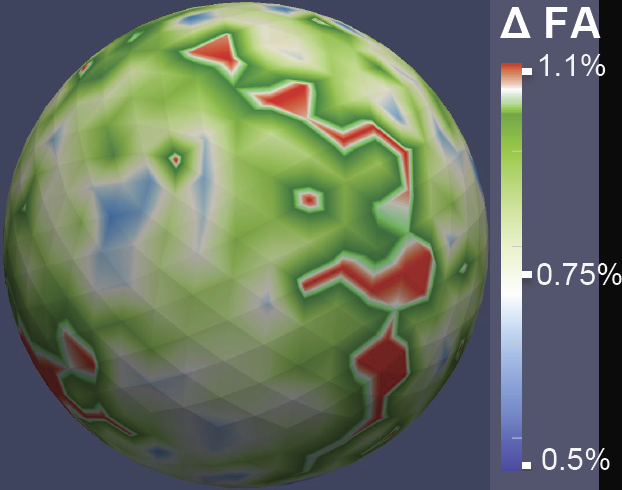

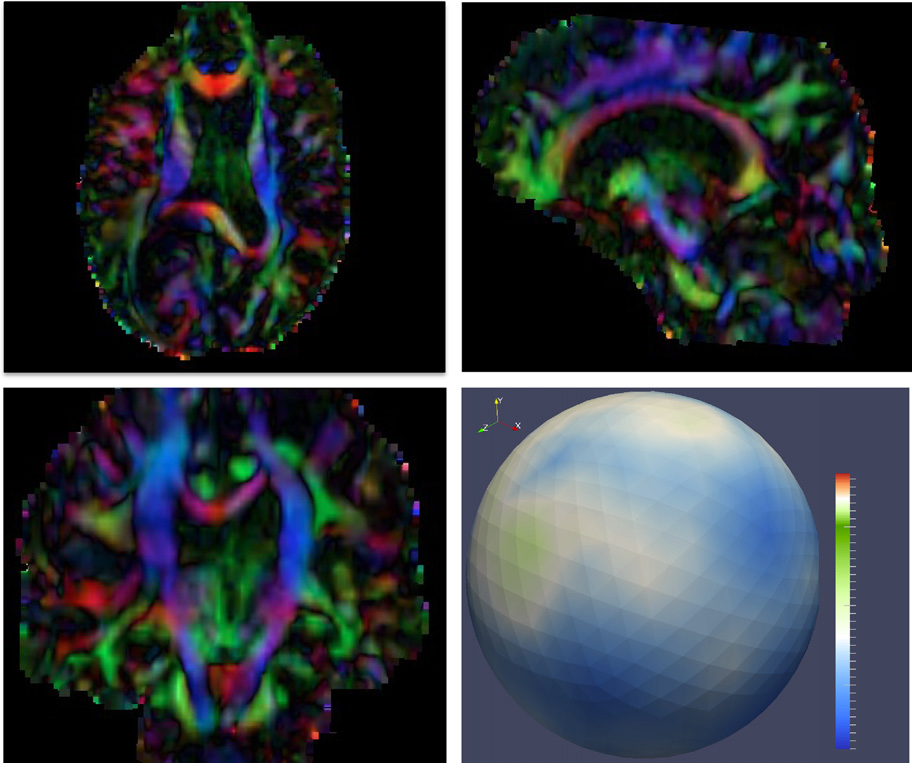

M. Farzinfar, I. Oguz, R.G. Smith, A.R. Verde, C. Dietrich, A. Gupta, M.L. Escolar, J. Piven, S. Pujol, C. Vachet, S. Gouttard, G. Gerig, S. Dager, R.C. McKinstry, S. Paterson, A.C. Evans, M.A. Styner.

“Diffusion imaging quality control via entropy of principal direction distribution,” In NeuroImage, Vol. 82, pp. 1--12. 2013.

ISSN: 1053-8119

DOI: 10.1016/j.neuroimage.2013.05.022

Diffusion MR imaging has received increasing attention in the neuroimaging community, as it yields new insights into the microstructural organization of white matter that are not available with conventional MRI techniques. While the technology has enormous potential, diffusion MRI suffers from a unique and complex set of image quality problems, limiting the sensitivity of studies and reducing the accuracy of findings. Furthermore, the acquisition time for diffusion MRI is longer than conventional MRI due to the need for multiple acquisitions to obtain directionally encoded Diffusion Weighted Images (DWI). This leads to increased motion artifacts, reduced signal-to-noise ratio (SNR), and increased proneness to a wide variety of artifacts, including eddy-current and motion artifacts, “venetian blind” artifacts, as well as slice-wise and gradient-wise inconsistencies. Such artifacts mandate stringent Quality Control (QC) schemes in the processing of diffusion MRI data. Most existing QC procedures are conducted in the DWI domain and/or on a voxel level, but our own experiments show that these methods often do not fully detect and eliminate certain types of artifacts, often only visible when investigating groups of DWIs or a derived diffusion model, such as the most-employed diffusion tensor imaging (DTI). Here, we propose a novel regional QC measure in the DTI domain that employs the entropy of the regional distribution of the principal directions (PD). The PD entropy quantifies the scattering and spread of the principal diffusion directions and is invariant to the patient's position in the scanner. A high entropy value indicates that the PDs are distributed relatively uniformly, while a low entropy value indicates the presence of clusters in the PD distribution. The novel QC measure is intended to complement the existing set of QC procedures by detecting and correcting residual artifacts. Such residual artifacts cause directional bias in the measured PD and are here called dominant direction artifacts. Experiments show that our automatic method can reliably detect and potentially correct such artifacts, especially the ones caused by the vibrations of the scanner table during the scan. The results further indicate the usefulness of this method for general quality assessment in DTI studies.
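To make the notion of an entropy over principal directions concrete, here is a minimal Python sketch that bins unit vectors into equal-area cells on a hemisphere and computes the Shannon entropy of the resulting histogram. The binning scheme, the function name `pd_entropy`, and the bin count are assumptions for illustration; the abstract does not specify the estimator, and this simple histogram version is not rotation invariant the way the published measure is described to be.

```python
import numpy as np


def pd_entropy(directions, n_bins=8):
    """Shannon entropy (in nats) of a histogram of principal directions.

    Directions are treated as axes (v and -v are equivalent), so every vector
    is flipped into the z >= 0 hemisphere before binning.
    """
    d = np.asarray(directions, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)
    d = np.where(d[:, 2:3] < 0, -d, d)
    # Uniform bins in z and azimuth give equal-area cells on the sphere.
    z_idx = np.minimum((d[:, 2] * n_bins).astype(int), n_bins - 1)
    phi = np.arctan2(d[:, 1], d[:, 0])
    phi_idx = np.minimum(((phi + np.pi) / (2 * np.pi) * n_bins).astype(int), n_bins - 1)
    counts = np.bincount(z_idx * n_bins + phi_idx, minlength=n_bins * n_bins)
    p = counts[counts > 0] / counts.sum()
    return float(-(p * np.log(p)).sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spread = rng.normal(size=(20000, 3))           # roughly uniform directions
    clustered = np.tile([0.3, 0.2, 0.9], (500, 1))  # one dominant direction
    print(pd_entropy(spread), pd_entropy(clustered))
```

Under these assumptions, widely scattered directions give an entropy near log(n_bins²), while a region dominated by a single direction gives an entropy near zero, matching the high versus low entropy interpretation above.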

Keywords: Diffusion magnetic resonance imaging, Diffusion tensor imaging, Quality assessment, Entropy

N. Farah, A. Zoubi, S. Matar, L. Golan, A. Marom, C.R. Butson, I. Brosh, S. Shoham.

“Holographically patterned activation using photo-absorber induced neural-thermal stimulation,” In Journal of Neural Engineering, Vol. 10, No. 5, pp. 056004. October, 2013.

ISSN: 1741-2560

DOI: 10.1088/1741-2560/10/5/056004

Objective. Patterned photo-stimulation offers a promising path towards the effective control of distributed neuronal circuits. Here, we demonstrate the feasibility and governing principles of spatiotemporally patterned microscopic photo-absorber induced neural-thermal stimulation (PAINTS) based on light absorption by exogenous extracellular photo-absorbers. Approach. We projected holographic light patterns from a green continuous-wave (CW) or an IR femtosecond laser onto exogenous photo-absorbing particles dispersed in the vicinity of cultured rat cortical cells. Experimental results are compared to predictions of a temperature-rate model (where membrane currents follow I ∝ dT/dt). Main results. The induced microscopic photo-thermal transients have sub-millisecond thermal relaxation times and stimulate adjacent cells. PAINTS activation thresholds for different laser pulse durations (0.02 to 1 ms) follow the Lapicque strength-duration formula, but with different chronaxies and minimal threshold energy levels for the two excitation lasers (an order of magnitude lower for the IR system mporal selectivity.
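For readers unfamiliar with strength-duration curves, the short Python sketch below evaluates the hyperbolic (Weiss-Lapicque) form, threshold = rheobase · (1 + chronaxie / duration). The abstract does not state which variant of the Lapicque formula was fitted, so the hyperbolic form is an assumption here, and the rheobase and chronaxie values are hypothetical placeholders rather than measured results.

```python
def lapicque_threshold(duration_ms, rheobase, chronaxie_ms):
    """Threshold stimulus strength for a pulse of the given duration.

    Uses the hyperbolic strength-duration relation
    threshold = rheobase * (1 + chronaxie / duration).
    """
    if duration_ms <= 0:
        raise ValueError("pulse duration must be positive")
    return rheobase * (1.0 + chronaxie_ms / duration_ms)


if __name__ == "__main__":
    # Hypothetical parameters, in arbitrary strength units and milliseconds.
    rheobase, chronaxie_ms = 2.0, 0.5
    for duration_ms in (0.02, 0.1, 0.5, 1.0):
        print(duration_ms, lapicque_threshold(duration_ms, rheobase, chronaxie_ms))
```

By construction the threshold at a pulse duration equal to the chronaxie is twice the rheobase, and shorter pulses require progressively stronger stimuli.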

J. Fishbaugh, M.W. Prastawa, G. Gerig, S. Durrleman.

“Geodesic Shape Regression in the Framework of Currents,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Vol. 23, pp. 718--729. 2013.

PubMed ID: 24684012

PubMed Central ID: PMC4127488

Shape regression is emerging as an important tool for the statistical analysis of time dependent shapes. In this paper, we develop a new generative model which describes shape change over time, by extending simple linear regression to the space of shapes represented as currents in the large deformation diffeomorphic metric mapping (LDDMM) framework. By analogy with linear regression, we estimate a baseline shape (intercept) and initial momenta (slope) which fully parameterize the geodesic shape evolution. This is in contrast to previous shape regression methods which assume the baseline shape is fixed. We further leverage a control point formulation, which provides a discrete and low dimensional parameterization of large diffeomorphic transformations. This flexible system decouples the parameterization of deformations from the specific shape representation, allowing the user to define the dimensionality of the deformation parameters. We present an optimization scheme that estimates the baseline shape, location of the control points, and initial momenta simultaneously via a single gradient descent algorithm. Finally, we demonstrate our proposed method on synthetic data as well as real anatomical shape complexes.

J. Fishbaugh, M. Prastawa, G. Gerig, S. Durrleman.

“Geodesic image regression with a sparse parameterization of diffeomorphisms,” In Geometric Science of Information Lecture Notes in Computer Science (LNCS), In Proceedings of the Geometric Science of Information Conference (GSI), Vol. 8085, pp. 95--102. 2013.

Image regression allows for time-discrete imaging data to be modeled continuously, and is a crucial tool for conducting statistical analysis on longitudinal images. Geodesic models are particularly well suited for statistical analysis, as image evolution is fully characterized by a baseline image and initial momenta. However, existing geodesic image regression models are parameterized by a large number of initial momenta, equal to the number of image voxels. In this paper, we present a sparse geodesic image regression framework which greatly reduces the number of model parameters. We combine a control point formulation of deformations with an L1 penalty to select the most relevant subset of momenta. This way, the number of model parameters reflects the complexity of anatomical changes in time rather than the sampling of the image. We apply our method to both synthetic and real data and show that we can decrease the number of model parameters (from the number of voxels down to hundreds) with only minimal decrease in model accuracy. The reduction in model parameters has the potential to improve the power of ensuing statistical analysis, which faces the challenging problem of high dimensionality.

T. Fogal, A. Schiewe, J. Krüger.

“An Analysis of Scalable GPU-Based Ray-Guided Volume Rendering,” In 2013 IEEE Symposium on Large Data Analysis and Visualization (LDAV), 2013.

Volume rendering continues to be a critical method for analyzing large-scale scalar fields, in disciplines as diverse as biomedical engineering and computational fluid dynamics. Commodity desktop hardware has struggled to keep pace with data size increases, challenging modern visualization software to deliver responsive interactions for O(N^3) algorithms such as volume rendering. We target the data type common in these domains: regularly-structured data.

In this work, we demonstrate that the major limitation of most volume rendering approaches is their inability to switch the data sampling rate (and thus data size) quickly. Using a volume renderer inspired by recent work, we demonstrate that the actual amount of visualizable data for a scene is typically bound considerably lower than the memory available on a commodity GPU. Our instrumented renderer is used to investigate design decisions typically swept under the rug in volume rendering literature. The renderer is freely available, with binaries for all major platforms as well as full source code, to encourage reproduction and comparison with future research.

Z. Fu, R.M. Kirby, R.T. Whitaker.

“A Fast Iterative Method for Solving the Eikonal Equation on Tetrahedral Domains,” In SIAM Journal on Scientific Computing, Vol. 35, No. 5, pp. C473--C494. 2013.

Generating numerical solutions to the eikonal equation and its many variations has a broad range of applications in both the natural and computational sciences. Efficient solvers on cutting-edge, parallel architectures require new algorithms that may not be theoretically optimal, but that are designed to allow asynchronous solution updates and have limited memory access patterns. This paper presents a parallel algorithm for solving the eikonal equation on fully unstructured tetrahedral meshes. The method is appropriate for the type of fine-grained parallelism found on modern massively-SIMD architectures such as graphics processors and takes into account the particular constraints and capabilities of these computing platforms. This work builds on previous work for solving these equations on triangle meshes; in this paper we adapt and extend previous 2D strategies to accommodate three-dimensional, unstructured, tetrahedralized domains. These new developments include a local update strategy with data compaction for tetrahedral meshes that provides solutions on both serial and parallel architectures, with a generalization to inhomogeneous, anisotropic speed functions. We also propose two new update schemes, specialized to mitigate the natural data increase observed when moving to three dimensions, and the data structures necessary for efficiently mapping data to parallel SIMD processors in a way that maintains computational density. Finally, we present descriptions of the implementations for a single CPU, as well as multicore CPUs with shared memory and SIMD architectures, with comparative results against state-of-the-art eikonal solvers.
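As background for the kind of local update such solvers iterate, the following Python sketch solves the isotropic eikonal equation |∇u| = 1/speed on a regular 2D grid with a first-order upwind update applied in Jacobi sweeps until nothing changes. This is a deliberately simplified stand-in: it is not the tetrahedral fast iterative method described in the paper, it has no active list or data compaction, and the function name and parameters are assumptions for this example.

```python
import numpy as np


def solve_eikonal_grid(speed, sources, h=1.0, tol=1e-9, max_iters=10_000):
    """Arrival times on a regular 2D grid via repeated first-order upwind updates."""
    f = h / np.asarray(speed, dtype=float)   # local travel time across one cell
    u = np.full(f.shape, np.inf)
    src = tuple(np.asarray(sources).T)       # (rows, cols) index arrays
    u[src] = 0.0
    for _ in range(max_iters):
        p = np.pad(u, 1, constant_values=np.inf)
        a = np.minimum(p[:-2, 1:-1], p[2:, 1:-1])   # best neighbor along rows
        b = np.minimum(p[1:-1, :-2], p[1:-1, 2:])   # best neighbor along columns
        lo, hi = np.minimum(a, b), np.maximum(a, b)
        with np.errstate(invalid="ignore"):
            diff = hi - lo                    # nan where both neighbors are unreached
            two_sided = diff < f              # both axes contribute to the update
            disc = np.where(two_sided, 2.0 * f**2 - diff**2, 0.0)
            quad = 0.5 * (lo + hi + np.sqrt(disc))
        new = np.minimum(u, np.where(two_sided, quad, lo + f))
        new[src] = 0.0
        if np.allclose(new, u, rtol=0.0, atol=tol):
            return new
        u = new
    return u


if __name__ == "__main__":
    times = solve_eikonal_grid(np.ones((21, 21)), [(10, 10)])
    print(times[10, 15], times[15, 15])
```

With unit speed and a single source, values along the grid axes equal the exact distance, while diagonal values carry the usual first-order discretization error. Because each cell depends only on its immediate neighbors, the update can be applied to many cells at once, which is the property that makes this family of methods attractive for SIMD hardware.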

M. Gamell, I. Rodero, M. Parashar, J.C. Bennett, H. Kolla, J.H. Chen, P.-T. Bremer, A. Landge, A. Gyulassy, P. McCormick, S. Pakin, V. Pascucci, S. Klasky.

“Exploring Power Behaviors and Trade-offs of In-situ Data Analytics,” In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, Association for Computing Machinery, 2013.

ISBN: 978-1-4503-2378-9

DOI: 10.1145/2503210.2503303

As scientific applications target exascale, challenges related to data and energy are becoming dominating concerns. For example, coupled simulation workflows are increasingly adopting in-situ data processing and analysis techniques to address costs and overheads due to data movement and I/O. However, it is also critical to understand these overheads and associated trade-offs from an energy perspective. The goal of this paper is to explore data-related energy/performance trade-offs for end-to-end simulation workflows running at scale on current high-end computing systems. Specifically, this paper presents: (1) an analysis of the data-related behaviors of a combustion simulation workflow with an in-situ data analytics pipeline, running on the Titan system at ORNL; (2) a power model based on system power and data exchange patterns, which is empirically validated; and (3) the use of the model to characterize the energy behavior of the workflow and to explore energy/performance trade-offs on current as well as emerging systems.

Keywords: SDAV