SCI Publications

2016

S.K. Iyer, T. Tasdizen, N. Burgon, E. Kholmovski, N. Marrouche, G. Adluru, E.V.R. DiBella.

“Compressed sensing for rapid late gadolinium enhanced imaging of the left atrium: A preliminary study,” In Magnetic Resonance Imaging, Vol. 34, No. 7, Elsevier BV, pp. 846--854. September, 2016.

DOI: 10.1016/j.mri.2016.03.002

Current late gadolinium enhancement (LGE) imaging of left atrial (LA) scar or fibrosis is relatively slow and requires 5–15 min to acquire an undersampled (R = 1.7) 3D navigated dataset. The GeneRalized Autocalibrating Partially Parallel Acquisitions (GRAPPA) based parallel imaging method is the current clinical standard for accelerating 3D LGE imaging of the LA and permits an acceleration factor of R ~ 1.7. Two compressed sensing (CS) methods have been developed to achieve higher acceleration factors: a patch based collaborative filtering technique tested with acceleration factor R ~ 3, and a technique that uses a 3D radial stack-of-stars acquisition pattern (R ~ 1.8) with a 3D total variation constraint. The long reconstruction time of these CS methods makes them unwieldy to use, especially the patch based collaborative filtering technique. In addition, the effect of CS techniques on the quantification of percentage of scar/fibrosis is not known.

We sought to develop a practical compressed sensing method for imaging the LA at high acceleration factors. In order to develop a clinically viable method with short reconstruction time, a Split Bregman (SB) reconstruction method with 3D total variation (TV) constraints was developed and implemented. The method was tested on 8 atrial fibrillation patients (4 pre-ablation and 4 post-ablation datasets). Blur metric, normalized mean squared error and peak signal to noise ratio were used as metrics to analyze the quality of the reconstructed images. Quantification of the extent of LGE was performed on the undersampled images and compared with the fully sampled images. Quantification of scar from post-ablation datasets and quantification of fibrosis from pre-ablation datasets showed that acceleration factors up to R ~ 3.5 gave good 3D LGE images of the LA wall, using a 3D TV constraint and constrained SB methods. This corresponds to reducing the scan time by half, compared to currently used GRAPPA methods. Reconstruction of 3D LGE images using the SB method was over 20 times faster than standard gradient descent methods.
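As a rough illustration of two of the image-quality metrics named in this abstract, the sketch below computes normalized mean squared error and peak signal to noise ratio between a reference volume and a reconstruction. The abstract does not give the exact definitions used in the paper, so the formulas here (error energy divided by reference energy, and the reference maximum as the peak) are common conventions assumed for illustration; the function names and the toy data are hypothetical.

```python
import numpy as np

def nmse(reference, estimate):
    """Normalized mean squared error: ||ref - est||^2 / ||ref||^2 (assumed convention)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return np.sum((reference - estimate) ** 2) / np.sum(reference ** 2)

def psnr(reference, estimate):
    """Peak signal to noise ratio in dB, using the reference maximum as the peak."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(reference.max() ** 2 / mse)

# Toy 3D "fully sampled" volume and a noisy stand-in for an accelerated reconstruction.
rng = np.random.default_rng(0)
full = rng.random((8, 8, 8))
recon = full + 0.01 * rng.standard_normal(full.shape)

print("NMSE:", nmse(full, recon))
print("PSNR (dB):", psnr(full, recon))
```

Lower NMSE and higher PSNR indicate a reconstruction closer to the fully sampled reference; the blur metric mentioned in the abstract is not sketched here.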

S. Kim, I. Lyu, V. Fonov, C. Vachet, H. Hazlett, R. Smith, J. Piven, S. Dager, R. Mckinstry, J. Pruett, A. Evans, D. Collins, K. Botteron, R. Schultz, G. Gerig, M. Styner.

“Development of Cortical Shape in the Human Brain from 6 to 24 Months of Age via a Novel Measure of Shape Complexity,” In NeuroImage, Vol. 135, Elsevier, pp. 163--176. July, 2016.

DOI: 10.1016/j.neuroimage.2016.04.053

The quantification of local surface morphology in the human cortex is important for examining population differences as well as developmental changes in neurodegenerative or neurodevelopmental disorders. We propose a novel cortical shape measure, referred to as the 'shape complexity index' (SCI), that represents localized shape complexity as the difference between the observed distribution of local surface topology, as quantified by the shape index (SI) measure, and its best fitting simple topological model within a given neighborhood. We apply a relatively small, adaptive geodesic kernel to calculate the SCI. Due to the small size of the kernel, the proposed SCI measure captures fine differences of cortical shape. With this novel cortical feature, we aim to capture comparatively small local surface changes that reflect a) the widening versus deepening of sulcal and gyral regions, as well as b) the emergence and development of secondary and tertiary sulci. Current cortical shape measures, such as the gyrification index (GI) or intrinsic curvature measures, investigate the cortical surface at a different scale and are less well suited to capture these particular cortical surface changes. In our experiments, the proposed SCI demonstrates higher complexity in the gyral/sulcal wall regions, lower complexity in wider gyral ridges and lowest complexity in wider sulcal fundus regions. In early postnatal brain development, our experiments show that SCI reveals a pattern of increased cortical shape complexity with age, as well as sexual dimorphisms in the insula, middle cingulate, parieto-occipital sulcal and Broca's regions. Overall, sex differences were greatest at 6 months of age and were reduced at 24 months, with the difference pattern switching from higher complexity in males at 6 months to higher complexity in females at 24 months.
This is the first study of longitudinal cortical complexity maturation and sex differences in the early postnatal period from 6 to 24 months of age with fine scale cortical shape measures. These results provide information that complements previous studies of gyrification index in early brain development.
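The shape index (SI) that the SCI builds on can be illustrated with a short sketch. The abstract does not spell out the formula, so the code below assumes the commonly used Koenderink-style definition in terms of the two principal curvatures, with one particular sign convention (positive values for cap-like shapes); the function name is hypothetical, and the SCI itself (the comparison of local SI distributions against a fitted model) is not reproduced here.

```python
import numpy as np

def shape_index(k1, k2):
    """Shape index in [-1, 1] from principal curvatures (assumed sign convention).

    Uses SI = (2 / pi) * atan2(kmax + kmin, kmax - kmin), which avoids a
    division by zero at umbilic points where both curvatures are equal.
    """
    k1 = np.asarray(k1, dtype=float)
    k2 = np.asarray(k2, dtype=float)
    kmax = np.maximum(k1, k2)
    kmin = np.minimum(k1, k2)
    return (2.0 / np.pi) * np.arctan2(kmax + kmin, kmax - kmin)

# A few canonical local shapes under this convention.
print(shape_index(1.0, 1.0))    # cap-like point
print(shape_index(1.0, 0.0))    # ridge-like point
print(shape_index(1.0, -1.0))   # symmetric saddle
print(shape_index(-1.0, -1.0))  # cup-like point
```

For a perfectly flat point (both curvatures zero) the shape index is undefined; `np.arctan2(0, 0)` returns 0 there, so such points would need separate handling in practice.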

M. Larsen, K. Moreland, C.R. Johnson, H. Childs.

“Optimizing Multi-Image Sort-Last Parallel Rendering,” In Symposium on Large Data Analysis and Visualization, IEEE, 2016.

Sort-last parallel rendering can be improved by considering the rendering of multiple images at a time. Most parallel rendering algorithms consider the generation of only a single image. This makes sense when performing interactive rendering where the parameters of each rendering are not known until the previous rendering completes. However, in situ visualization often generates multiple images that do not need to be created sequentially. In this paper we present a simple and effective approach to improving parallel image generation throughput by amortizing the load and overhead among multiple image renders. Additionally, we validate our approach by conducting a performance study exploring the achievable speed-ups in a variety of image-based in situ use cases and rendering workloads. On average, our approach shows a 1.5 to 3.7 fold improvement in performance, and in some cases, shows a 10 fold improvement.

T. Liu, S.M. Seyedhosseini, T. Tasdizen.

“Image Segmentation Using Hierarchical Merge Tree,” In IEEE Transactions on Image Processing, Vol. 25, No. 10, IEEE, pp. 4596--4607. Oct, 2016.

DOI: 10.1109/tip.2016.2592704

This paper investigates one of the most fundamental computer vision problems: image segmentation. We propose a supervised hierarchical approach to object-independent image segmentation. Starting with oversegmenting superpixels, we use a tree structure to represent the hierarchy of region merging, by which we reduce the problem of segmenting image regions to finding a set of label assignments to tree nodes. We formulate the tree structure as a constrained conditional model to associate region merging with likelihoods predicted using an ensemble boundary classifier. Final segmentations can then be inferred by finding globally optimal solutions to the model efficiently. We also present an iterative training and testing algorithm that generates various tree structures and combines them to emphasize accurate boundaries by segmentation accumulation. Experimental results and comparisons with other recent methods on six public datasets demonstrate that our approach achieves the state-of-the-art region accuracy and is competitive in image segmentation without semantic priors.

T. Liu, M. Zhang, M. Javanmardi, N. Ramesh, T. Tasdizen.

“SSHMT: Semi-supervised Hierarchical Merge Tree for Electron Microscopy Image Segmentation,” In Lecture Notes in Computer Science, Vol. 9905, Springer International Publishing, pp. 144--159. 2016.

DOI: 10.1007/978-3-319-46448-0_9

Region-based methods have proven necessary for improving segmentation accuracy of neuronal structures in electron microscopy (EM) images. Most region-based segmentation methods use a scoring function to determine region merging. Such functions are usually learned with supervised algorithms that demand considerable ground truth data, which are costly to collect. We propose a semi-supervised approach that reduces this demand. Based on a merge tree structure, we develop a differentiable unsupervised loss term that enforces consistent predictions from the learned function. We then propose a Bayesian model that combines the supervised and the unsupervised information for probabilistic learning. The experimental results on three EM data sets demonstrate that by using a subset of only 3% to 7% of the entire ground truth data, our approach consistently performs close to the state-of-the-art supervised method with the full labeled data set, and significantly outperforms the supervised method with the same labeled subset.

P. Ljung, J. Krüger, E. Gröller, M. Hadwiger, C. D. Hansen, A. Ynnerman.

“State of the Art in Transfer Functions for Direct Volume Rendering,” In Computer Graphics Forum, Vol. 35, No. 3, Wiley-Blackwell, pp. 669--691. June, 2016.

DOI: 10.1111/cgf.12934

A central topic in scientific visualization is the transfer function (TF) for volume rendering. The TF serves a fundamental role in translating scalar and multivariate data into color and opacity to express and reveal the relevant features present in the data studied. Beyond this core functionality, TFs also serve as a tool for encoding and utilizing domain knowledge and as an expression for visual design of material appearances. TFs also enable interactive volumetric exploration of complex data. The purpose of this state-of-the-art report (STAR) is to provide an overview of research into the various aspects of TFs, which lead to interpretation of the underlying data through the use of meaningful visual representations. The STAR classifies TF research into the following aspects: dimensionality, derived attributes, aggregated attributes, rendering aspects, automation, and user interfaces. The STAR concludes with some interesting research challenges that form the basis of an agenda for the development of next generation TF tools and methodologies.

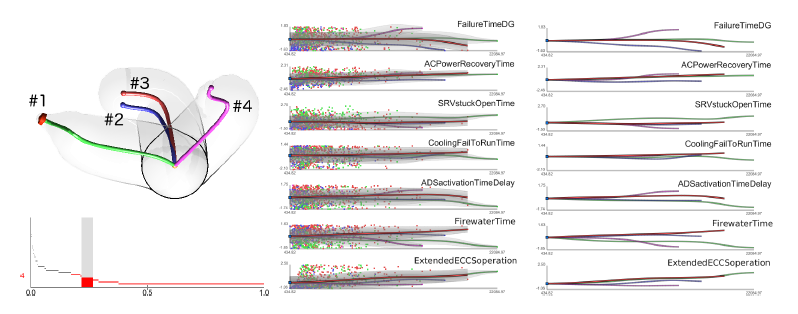

D. Maljovec, S. Liu, Bei Wang, V. Pascucci, P. T. Bremer, D. Mandelli, C. Smith.

“Analyzing Simulation-Based PRA Data Through Traditional and Topological Clustering: A BWR Station Blackout Case Study,” In Reliability Engineering & System Safety, Vol. 145, Elsevier, pp. 262--276. January, 2016.

DOI: 10.1016/j.ress.2015.07.001

Dynamic probabilistic risk assessment (DPRA) methodologies couple system simulator codes (e.g., RELAP, MELCOR) with simulation controller codes (e.g., RAVEN, ADAPT). Whereas system simulator codes model system dynamics deterministically, simulation controller codes introduce both deterministic (e.g., system control logic, operating procedures) and stochastic (e.g., component failures, parameter uncertainties) elements into the simulation. Typically, a DPRA is performed by sampling values of a set of parameters, and simulating the system behavior for that specific set of parameter values. For complex systems, a major challenge in using DPRA methodologies is to analyze the large number of scenarios generated, where clustering techniques are typically employed to better organize and interpret the data. In this paper, we focus on the analysis of two nuclear simulation datasets that are part of the risk-informed safety margin characterization (RISMC) boiling water reactor (BWR) station blackout (SBO) case study. We provide the domain experts a software tool that encodes traditional and topological clustering techniques within an interactive analysis and visualization environment, for understanding the structures of such high-dimensional nuclear simulation datasets. We demonstrate through our case study that both types of clustering techniques complement each other in bringing enhanced structural understanding of the data.

F. Mesadi, M. Cetin, T. Tasdizen.

“Disjunctive normal level set: An efficient parametric implicit method,” In 2016 IEEE International Conference on Image Processing (ICIP), IEEE, September, 2016.

DOI: 10.1109/icip.2016.7533171

Level set methods are widely used for image segmentation because of their capability to handle topological changes. In this paper, we propose a novel parametric level set method called Disjunctive Normal Level Set (DNLS), and apply it to both two phase (single object) and multiphase (multi-object) image segmentations. The DNLS is formed by a union of polytopes which themselves are formed by intersections of half-spaces. The proposed level set framework has the following major advantages compared to other level set methods available in the literature. First, segmentation using DNLS converges much faster. Second, the DNLS level set function remains regular throughout its evolution. Third, the proposed multiphase version of the DNLS is less sensitive to initialization, and its computational cost and memory requirement remain almost constant as the number of objects to be simultaneously segmented grows. The experimental results show the potential of the proposed method.

K. Moreland, C. Sewell, W. Usher, L. Lo, J. Meredith, D. Pugmire, J. Kress, H. Schroots, K. Ma, H. Childs, M. Larsen, C. Chen, R. Maynard, B. Geveci.

“VTK-m: Accelerating the Visualization Toolkit for Massively Threaded Architectures,” In IEEE Computer Graphics and Applications, Vol. 36, No. 3, pp. 48--58. May, 2016.

ISSN: 0272-1716

DOI: 10.1109/MCG.2016.48

Traditional scientific visualization software approaches do not fare well in massively threaded environments. To address the needs of the high-performance computing community, the VTK-m framework fills the gaps in functionality by bringing together the most recent research.

P. Muralidharan, J. Fishbaugh, E. Y. Kim, H. J. Johnson, J. S. Paulsen, G. Gerig, P. T. Fletcher.

“Bayesian Covariate Selection in Mixed Effects Models for Longitudinal Shape Analysis,” In International Symposium on Biomedical Imaging (ISBI), IEEE, April, 2016.

DOI: 10.1109/isbi.2016.7493352

The goal of longitudinal shape analysis is to understand how anatomical shape changes over time, in response to biological processes, including growth, aging, or disease. In many imaging studies, it is also critical to understand how these shape changes are affected by other factors, such as sex, disease diagnosis, IQ, etc. Current approaches to longitudinal shape analysis have focused on modeling age-related shape changes, but have not included the ability to handle covariates. In this paper, we present a novel Bayesian mixed-effects shape model that incorporates simultaneous relationships between longitudinal shape data and multiple predictors or covariates. Moreover, we place an Automatic Relevance Determination (ARD) prior on the parameters that lets us automatically select which covariates are most relevant to the model based on observed data. We evaluate our proposed model and inference procedure on a longitudinal study of Huntington's disease from PREDICT-HD. We first show the utility of the ARD prior for model selection in a univariate modeling of striatal volume, and next we apply the full high-dimensional longitudinal shape model to putamen shapes.

C. Partl, S. Gratzl, M. Streit, A. Wassermann, H. Pfister, D. Schmalstieg, A. Lex.

“Pathfinder: Visual Analysis of Paths in Graphs,” In Computer Graphics Forum (EuroVis '16), Vol. 35, No. 3, pp. 71--80. June, 2016.

ISSN: 1467-8659

DOI: 10.1111/cgf.12883

The analysis of paths in graphs is highly relevant in many domains. Typically, path-related tasks are performed in node-link layouts. Unfortunately, graph layouts often do not scale to the size of many real world networks. Also, many networks are multivariate, i.e., contain rich attribute sets associated with the nodes and edges. These attributes are often critical in judging paths, but directly visualizing attributes in a graph layout exacerbates the scalability problem. In this paper, we present visual analysis solutions dedicated to path-related tasks in large and highly multivariate graphs. We show that by focusing on paths, we can address the scalability problem of multivariate graph visualization, equipping analysts with a powerful tool to explore large graphs. We introduce Pathfinder, a technique that provides visual methods to query paths, while considering various constraints. The resulting set of paths is visualized in both a ranked list and as a node-link diagram. For the paths in the list, we display rich attribute data associated with nodes and edges, and the node-link diagram provides topological context. The paths can be ranked based on topological properties, such as path length or average node degree, and scores derived from attribute data. Pathfinder is designed to scale to graphs with tens of thousands of nodes and edges by employing strategies such as incremental query results. We demonstrate Pathfinder's fitness for use in scenarios with data from a coauthor network and biological pathways.
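The idea of querying paths under constraints and ranking them by topological properties can be sketched on a toy graph. The code below is only an illustration of that idea and is not Pathfinder's implementation: the graph, the function names, the maximum-length constraint, and the specific ranking key (shorter paths first, then higher average node degree) are all assumptions made for the example.

```python
def simple_paths(graph, start, goal, max_edges):
    """Yield every simple path from start to goal that uses at most max_edges edges."""
    stack = [(start, [start])]
    while stack:
        node, path = stack.pop()
        if node == goal:
            yield path
            continue
        if len(path) - 1 >= max_edges:
            continue  # length constraint reached, do not extend further
        for neighbor in graph[node]:
            if neighbor not in path:  # keep the path simple (no repeated nodes)
                stack.append((neighbor, path + [neighbor]))

def average_degree(graph, path):
    """Average degree of the nodes along a path."""
    return sum(len(graph[node]) for node in path) / len(path)

# Hypothetical undirected graph stored as adjacency lists.
graph = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B", "D"],
    "D": ["B", "C", "E"],
    "E": ["D"],
}

paths = list(simple_paths(graph, "A", "E", max_edges=4))
# Rank by path length first, then by descending average node degree.
ranked = sorted(paths, key=lambda p: (len(p) - 1, -average_degree(graph, p)))
for path in ranked:
    print(" -> ".join(path), "| edges:", len(path) - 1,
          "| avg degree:", average_degree(graph, path))
```

Exhaustive enumeration like this only works for small graphs; the abstract mentions strategies such as incremental query results for scaling, which this sketch does not attempt.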

Y. Pathak, O. Salami, S. Baillet, Z. Li, C.R. Butson.

“Longitudinal Changes in Depressive Circuitry in Response to Neuromodulation Therapy,” In Frontiers in Neural Circuits, Vol. 10, Frontiers Media SA, July, 2016.

DOI: 10.3389/fncir.2016.00050

BACKGROUND:

Major depressive disorder (MDD) is a public health problem worldwide. There is increasing interest in using non-invasive therapies such as repetitive transcranial magnetic stimulation (rTMS) to treat MDD. However, the changes induced by rTMS on neural circuits remain poorly characterized. The present study aims to test whether the brain regions previously targeted by deep brain stimulation (DBS) in the treatment of MDD respond to rTMS, and whether functional connectivity (FC) measures can predict clinical response.

METHODS:

rTMS (20 sessions) was administered to five MDD patients at the left-dorsolateral prefrontal cortex (L-DLPFC) over 4 weeks. Magnetoencephalography (MEG) recordings and Montgomery-Asberg depression rating scale (MADRS) assessments were acquired before, during and after treatment. Our primary measures, obtained with MEG source imaging, were changes in power spectral density (PSD) and changes in FC as measured using coherence.

RESULTS:

Of the five patients, four met the clinical response criterion (40% or greater decrease in MADRS) after 4 weeks of treatment. An increase in gamma power at the L-DLPFC was correlated with improvement in symptoms. We also found that increases in delta band connectivity between L-DLPFC/amygdala and L-DLPFC/pregenual anterior cingulate cortex (pACC), and decreases in gamma band connectivity between L-DLPFC/subgenual anterior cingulate cortex (sACC), were correlated with improvements in depressive symptoms.

CONCLUSIONS:

Our results suggest that non-invasive intervention techniques, such as rTMS, modulate the ongoing activity of depressive circuits targeted for DBS, and that MEG can capture these changes. Gamma oscillations may originate from GABA-mediated inhibition, which increases synchronization of large neuronal populations, possibly leading to increased long-range FC. We postulate that responses to rTMS could provide valuable insights into early evaluation of patient candidates for DBS surgery.

I.A. Polejaeva, R. Ranjan, C.J. Davies, M. Regouski, J. Hall, A.L. Olsen, Q. Meng, H.M. Rutigliano, D.J. Dosdall, N.A. Angel, F.B. Sachse, T. Seidel, A.J. Thomas, R. Stott, K.E. Panter, P.M. Lee, A.J. Van Wettere, J.R. Stevens, Z. Wang, R.S. Macleod, N.F. Marrouche, K.L. White.

“Increased Susceptibility to Atrial Fibrillation Secondary to Atrial Fibrosis in Transgenic Goats Expressing Transforming Growth Factor-β1,” In Journal of Cardiovascular Electrophysiology, Vol. 27, No. 10, Wiley-Blackwell, pp. 1220--1229. Aug, 2016.

DOI: 10.1111/jce.13049

Introduction

Large animal models of progressive atrial fibrosis would provide an attractive platform to study the relationship between structural and electrical remodeling in atrial fibrillation (AF). Here we established a new transgenic goat model of AF with cardiac specific overexpression of TGF-β1 and investigated the changes in the cardiac structure and function leading to AF.

Methods and Results

Transgenic goats with cardiac specific overexpression of constitutively active TGF-β1 were generated by somatic cell nuclear transfer. We examined myocardial tissue, ECGs, echocardiographic data, and AF susceptibility in transgenic and wild-type control goats. Transgenic goats exhibited a significant increase in fibrosis and myocyte diameters in the atria compared to controls, but not in the ventricles. P-wave duration was significantly greater in transgenic animals starting at 12 months of age, but no significant chamber enlargement was detected, suggesting conduction slowing in the atria. Furthermore, this transgenic goat model exhibited a significant increase in AF vulnerability. Six of 8 transgenic goats (75%) were susceptible to AF induction and exhibited sustained AF (>2 minutes), whereas none of 6 controls displayed sustained AF (P < 0.01). Length of induced AF episodes was also significantly greater in the transgenic group compared to controls (687 ± 212.02 seconds vs. 2.50 ± 0.88 seconds, P < 0.0001), but no persistent or permanent AF was observed.

Conclusion

A novel transgenic goat model with a substrate for AF was generated. In this model, cardiac overexpression of TGF-β1 led to an increase in fibrosis and myocyte size in the atria, and to progressive P-wave prolongation. We suggest that these factors underlie increased AF susceptibility.
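The reported comparison of sustained AF (6 of 8 transgenic goats versus 0 of 6 controls, P < 0.01) can be checked with a small worked calculation. The abstract does not say which statistical test was used, so the sketch below assumes a two-sided Fisher's exact test on the 2x2 table purely for illustration; the function name is hypothetical.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x events in row 1 with all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of all tables at least as extreme as the observed one.
    return sum(prob(x) for x in range(lowest, highest + 1)
               if prob(x) <= observed * (1 + 1e-9))

# Rows: transgenic, control. Columns: sustained AF, no sustained AF.
p_value = fisher_exact_two_sided(6, 2, 0, 6)
print(p_value)  # 29/3003, about 0.0097
```

Under this assumed test the p-value is 29/3003, which is consistent with the reported P < 0.01.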

M. Raj, M. Mirzargar, R. Kirby, R. Whitaker, J. Preston.

“Evaluating Shape Alignment via Ensemble Visualization,” In IEEE Computer Graphics and Applications, Vol. 36, No. 3, IEEE, pp. 60--71. May, 2016.

DOI: 10.1109/mcg.2015.70

The visualization of variability in surfaces embedded in 3D, which is a type of ensemble uncertainty visualization, provides a means of understanding the underlying distribution of a collection or ensemble of surfaces. This work extends the contour boxplot technique to 3D and evaluates it against an enumeration-style visualization of the ensemble members and other conventional visualizations used by atlas builders. The authors demonstrate the efficacy of using the 3D contour boxplot ensemble visualization technique to analyze shape alignment and variability in atlas construction and analysis as a real-world application.

I. Rodero, M. Parashar, A.G. Landge, S. Kumar, V. Pascucci, P.T. Bremer.

“Evaluation of in-situ analysis strategies at scale for power efficiency and scalability,” In Cluster, Cloud and Grid Computing (CCGrid), 2016 16th IEEE/ACM International Symposium on, IEEE, pp. 156--164. 2016.

The increasing gap between available compute power and I/O capabilities is resulting in simulation pipelines running on leadership computing facilities being reformulated. In particular, in-situ processing is complementing conventional post-process analysis; however, it can be performed by using the same compute resources as the simulation or using secondary dedicated resources.

In this paper, we focus on three different in-situ analysis strategies, which use the same compute resources as the ongoing simulation but different data movement strategies. We evaluate the costs incurred by these strategies in terms of run time, scalability and power/energy consumption. Furthermore, we extrapolate power behavior to peta-scale and investigate different design choices through projections. Experimental evaluation at full machine scale on Titan supports that using fewer cores per node for in-situ analysis is the optimum choice in terms of scalability. Hence, further research effort should be devoted towards developing in-situ analysis techniques following this strategy in future high-end systems.

P. Rosen, B. Burton, K. Potter, C.R. Johnson.

“muView: A Visual Analysis System for Exploring Uncertainty in Myocardial Ischemia Simulations,” In Visualization in Medicine and Life Sciences III, Springer Nature, pp. 49--69. 2016.

DOI: 10.1007/978-3-319-24523-2_3

In this paper we describe the Myocardial Uncertainty Viewer (muView or µView) system for exploring data stemming from the simulation of cardiac ischemia. The simulation uses a collection of conductivity values to understand how ischemic regions affect the undamaged anisotropic heart tissue. The data resulting from the simulation is multi-valued and volumetric, and thus, for every data point, we have a collection of samples describing cardiac electrical properties. µView combines a suite of visual analysis methods to explore the area surrounding the ischemic zone and identify how perturbations of variables change the propagation of their effects. In addition to presenting a collection of visualization techniques, which individually highlight different aspects of the data, the coordinated view system forms a cohesive environment for exploring the simulations. We also discuss the findings of our study, which are helping to steer further development of the simulation and strengthening our collaboration with the biomedical engineers attempting to understand the phenomenon.

U. Rüde, K. Willcox, L. C. McInnes, H. De Sterck, G. Biros, H. Bungartz, J. Corones, E. Cramer, J. Crowley, O. Ghattas, M. Gunzburger, M. Hanke, R. Harrison, M. Heroux, J. Hesthaven, P. Jimack, C. Johnson, K. E. Jordan, D. E. Keyes, R. Krause, V. Kumar, S. Mayer, J. Meza, K. M. Mørken, J. T. Oden, L. Petzold, P. Raghavan, S. M. Shontz, A. Trefethen, P. Turner, V. Voevodin, B. Wohlmuth, C. S. Woodward.

“Research and Education in Computational Science and Engineering,” Subtitled “Report from a workshop sponsored by the Society for Industrial and Applied Mathematics (SIAM) and the European Exascale Software Initiative (EESI-2),” Aug, 2016.

Over the past two decades the field of computational science and engineering (CSE) has penetrated both basic and applied research in academia, industry, and laboratories to advance discovery, optimize systems, support decision-makers, and educate the scientific and engineering workforce. Informed by centuries of theory and experiment, CSE performs computational experiments to answer questions that neither theory nor experiment alone is equipped to answer. CSE provides scientists and engineers of all persuasions with algorithmic inventions and software systems that transcend disciplines and scales. Carried on a wave of digital technology, CSE brings the power of parallelism to bear on troves of data. Mathematics-based advanced computing has become a prevalent means of discovery and innovation in essentially all areas of science, engineering, technology, and society; and the CSE community is at the core of this transformation. However, a combination of disruptive developments---including the architectural complexity of extreme-scale computing, the data revolution that engulfs the planet, and the specialization required to follow the applications to new frontiers---is redefining the scope and reach of the CSE endeavor. This report describes the rapid expansion of CSE and the challenges to sustaining its bold advances. The report also presents strategies and directions for CSE research and education for the next decade.

M. Sajjadi, S.M. Seyedhosseini, T. Tasdizen.

“Disjunctive Normal Networks,” In Neurocomputing, Vol. 218, Elsevier BV, pp. 276--285. Dec, 2016.

DOI: 10.1016/j.neucom.2016.08.047

Artificial neural networks are powerful pattern classifiers. They form the basis of the highly successful and popular Convolutional Networks which offer the state-of-the-art performance on several computer vision tasks. However, in many general and non-vision tasks, neural networks are surpassed by methods such as support vector machines and random forests that are also easier to use and faster to train. One reason is that the backpropagation algorithm, which is used to train artificial neural networks, usually starts from a random weight initialization which complicates the optimization process, leading to long training times and increasing the risk of stopping in a poor local minimum. Several initialization schemes and pre-training methods have been proposed to improve the efficiency and performance of training a neural network. However, this problem arises from the architecture of neural networks. We use the disjunctive normal form and approximate the boolean conjunction operations with products to construct a novel network architecture. The proposed model can be trained by minimizing an error function and it allows an effective and intuitive initialization which avoids poor local minima. We show that the proposed structure provides efficient coverage of the decision space which leads to state-of-the-art classification accuracy and fast training times.
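A forward pass built from a disjunctive normal form with product-based conjunctions can be sketched as follows. The abstract only states that conjunctions are approximated with products; the sigmoid half-space units, the use of De Morgan's law to express the disjunction as one minus a product, and all names, shapes, and parameter values below are assumptions made for illustration rather than the paper's exact formulation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dnf_forward(x, W, b):
    """Soft disjunctive normal form.

    x: (n_samples, d) inputs
    W: (N, M, d) weights for N conjunctions of M half-spaces each
    b: (N, M) biases
    Returns values in (0, 1) with shape (n_samples,).
    """
    # Soft membership of each sample in each half-space.
    z = np.einsum("sd,nmd->snm", x, W) + b
    h = sigmoid(z)
    # Soft AND: product over the M half-spaces of each conjunction (a soft polytope).
    conj = np.prod(h, axis=2)
    # Soft OR via De Morgan: 1 - prod(1 - conjunction).
    return 1.0 - np.prod(1.0 - conj, axis=1)

# One conjunction of four half-spaces approximating the unit square in 2D.
sharpness = 20.0
W = sharpness * np.array([[[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]])
b = sharpness * np.array([[0.0, 1.0, 0.0, 1.0]])

points = np.array([[0.5, 0.5],   # inside the square
                   [2.0, 0.5]])  # outside the square
print(dnf_forward(points, W, b))
```

Because each conjunction corresponds to a soft convex polytope, weights can be initialized from a geometric guess of the decision region, which is one way to read the "intuitive initialization" mentioned in the abstract.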

M. Sajjadi, M. Javanmardi, T. Tasdizen.

“Regularization With Stochastic Transformations and Perturbations for Deep Semi-Supervised Learning,” In CoRR, Vol. abs/1606.04586, 2016.

Effective convolutional neural networks are trained on large sets of labeled data. However, creating large labeled datasets is a very costly and time-consuming task. Semi-supervised learning uses unlabeled data to train a model with higher accuracy when there is a limited set of labeled data available. In this paper, we consider the problem of semi-supervised learning with convolutional neural networks. Techniques such as randomized data augmentation, dropout and random max-pooling provide better generalization and stability for classifiers that are trained using gradient descent. Multiple passes of an individual sample through the network might lead to different predictions due to the non-deterministic behavior of these techniques. We propose an unsupervised loss function that takes advantage of the stochastic nature of these methods and minimizes the difference between the predictions of multiple passes of a training sample through the network. We evaluate the proposed method on several benchmark datasets.
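The unsupervised loss described here, which penalizes disagreement between predictions from multiple stochastic passes of the same sample, can be sketched as a sum of squared differences over all pairs of passes. The pairwise squared-difference form, the toy dropout model, and every name below are assumptions for illustration; the abstract does not give the exact expression.

```python
import numpy as np

def stability_loss(predictions):
    """Sum of squared differences between all unordered pairs of passes.

    predictions: (n_passes, n_classes) outputs for one training sample.
    """
    p = np.asarray(predictions, dtype=float)
    diffs = p[:, None, :] - p[None, :, :]  # (n_passes, n_passes, n_classes)
    # Ordered pairs count each unordered pair twice, hence the factor 0.5.
    return 0.5 * np.sum(diffs ** 2)

def stochastic_forward(x, W, rng, drop_prob=0.5):
    """Toy linear softmax classifier with input dropout (hypothetical model)."""
    mask = rng.random(x.shape) >= drop_prob
    z = (x * mask / (1.0 - drop_prob)) @ W
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
x = rng.random(10)                  # one unlabeled sample
W = rng.standard_normal((10, 3))    # weights for 3 classes

# Several passes of the same sample give different predictions due to dropout.
passes = np.stack([stochastic_forward(x, W, rng) for _ in range(4)])
print(stability_loss(passes))
```

No label is needed to evaluate this term, so it can be computed on unlabeled samples and added to a supervised loss on the labeled ones.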

M. Sajjadi, M. Javanmardi, T. Tasdizen.

“Mutual exclusivity loss for semi-supervised deep learning,” In 2016 IEEE International Conference on Image Processing (ICIP), IEEE, September, 2016.

In this paper we consider the problem of semi-supervised learning with deep Convolutional Neural Networks (ConvNets). Semi-supervised learning is motivated by the observation that unlabeled data is cheap and can be used to improve the accuracy of classifiers. In this paper we propose an unsupervised regularization term that explicitly forces the classifier's prediction for multiple classes to be mutually exclusive and effectively guides the decision boundary to lie on the low density space between the manifolds corresponding to different classes of data. Our proposed approach is general and can be used with any backpropagation-based learning method. We show through different experiments that our method can improve the object recognition performance of ConvNets using unlabeled data.
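One way to write a regularization term that rewards mutually exclusive class predictions is sketched below. The abstract does not state the formula, so the specific form used here (for each class, its output multiplied by one minus every other output, summed and negated) is an assumption for illustration, as are the function name and the requirement that outputs lie in [0, 1].

```python
import numpy as np

def mutual_exclusivity_loss(f):
    """Assumed form: -mean_i sum_k f_ik * prod_{l != k} (1 - f_il).

    f: (n_samples, n_classes) classifier outputs in [0, 1].
    The value is lowest (-1) when exactly one output per sample is 1
    and all others are 0.
    """
    f = np.asarray(f, dtype=float)
    total = np.zeros(f.shape[0])
    for k in range(f.shape[1]):
        others = np.delete(1.0 - f, k, axis=1)  # (1 - f_l) for all l != k
        total += f[:, k] * np.prod(others, axis=1)
    return -np.mean(total)

print(mutual_exclusivity_loss([[1.0, 0.0, 0.0]]))  # confident, exclusive prediction
print(mutual_exclusivity_loss([[0.5, 0.5]]))       # ambiguous prediction
print(mutual_exclusivity_loss([[1.0, 1.0]]))       # two classes active at once
```

Since the term needs only the network outputs, it can be evaluated on unlabeled samples; ambiguous predictions near a decision boundary score worse than confident exclusive ones.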