SCI Publications

2014

J. Bronson, J.A. Levine, R.T. Whitaker.

“Lattice cleaving: a multimaterial tetrahedral meshing algorithm with guarantees,” In IEEE Transactions on Visualization and Computer Graphics (TVCG), pp. 223--237. 2014.

DOI: 10.1109/TVCG.2013.115

PubMed ID: 24356365

We introduce a new algorithm for generating tetrahedral meshes that conform to physical boundaries in volumetric domains consisting of multiple materials. The proposed method allows for an arbitrary number of materials, produces high-quality tetrahedral meshes with upper and lower bounds on dihedral angles, and guarantees geometric fidelity. Moreover, the method is combinatoric so its implementation enables rapid mesh construction. These meshes are structured in a way that also allows grading, to reduce element counts in regions of homogeneity. Additionally, we provide proofs showing that both element quality and geometric fidelity are bounded using this approach.

M.S. Okun, S.S. Wu, S. Fayad, H. Ward, D. Bowers, C. Rosado, L. Bowen, C. Jacobson, C.R. Butson, K.D. Foote.

“Acute and Chronic Mood and Apathy Outcomes from a Randomized Study of Unilateral STN and GPi DBS,” In PLoS ONE, Vol. 9, No. 12, pp. e114140. December, 2014.

Objective: To study mood and behavioral effects of unilateral and staged bilateral subthalamic nucleus (STN) and globus pallidus internus (GPi) deep brain stimulation (DBS) for Parkinson's disease (PD).

Background: There are numerous reports of mood changes following DBS; however, most have focused on bilateral simultaneous STN implants with rapid and aggressive post-operative medication reduction.

Methods: A standardized evaluation was applied to a subset of patients undergoing STN and GPi DBS and who were also enrolled in the NIH COMPARE study. The Unified Parkinson Disease Rating Scale (UPDRS III), the Hamilton depression (HAM-D) and anxiety rating scales (HAM-A), the Yale-Brown obsessive-compulsive rating scale (YBOCS), the Apathy Scale (AS), and the Young mania rating scale (YMRS) were used. The scales were repeated at acute and chronic intervals. A post-operative strategy of non-aggressive medication reduction was employed.

Results: Thirty patients were randomized and underwent unilateral DBS (16 STN, 14 GPi). There were no baseline differences. The GPi group had a higher mean dopaminergic dosage at 1 year; however, the between-group difference in changes from baseline to 1 year was not significant. There were no differences between groups in mood and motor outcomes. When combining STN and GPi groups, the HAM-A scores worsened at 2 months, 4 months, 6 months and 1 year when compared with baseline; the HAM-D and YMRS scores worsened at 4 months, 6 months and 1 year; and the UPDRS Motor scores improved at 4 months and 1 year. Psychiatric diagnoses (DSM-IV) did not change. No between-group differences were observed in the cohort of bilateral cases.

Conclusions: There were few changes in mood and behavior with STN or GPi DBS. The approach of staging STN or GPi DBS without aggressive medication reduction could be a viable option for managing PD surgical candidates. A study of bilateral DBS and of medication reduction will be required to better understand risks and benefits of a bilateral approach.

B. Chapman, H. Calandra, S. Crivelli, J. Dongarra, J. Hittinger, C.R. Johnson, S.A. Lathrop, V. Sarkar, E. Stahlberg, J.S. Vetter, D. Williams.

“ASCAC Workforce Subcommittee Letter,” Note: Office of Scientific and Technical Information, DOE ASCAC Committee Report, July, 2014.

DOI: 10.2172/1222711

Simulation and computing are essential to much of the research conducted at the DOE national laboratories. Experts in the ASCR-relevant Computing Sciences, which encompass a range of disciplines including Computer Science, Applied Mathematics, Statistics and domain sciences, are an essential element of the workforce in nearly all of the DOE national laboratories. This report seeks to identify the gaps and challenges facing DOE with respect to this workforce.

The DOE laboratories provided the committee with information on disciplines in which they experienced workforce gaps. For the larger laboratories, the majority of the cited workforce gaps were in the Computing Sciences. Since this category spans multiple disciplines, it was difficult to obtain comprehensive information on workforce gaps in the available timeframe. Nevertheless, five multi-purpose laboratories provided additional relevant data on recent hiring and retention.

Data on academic coursework was reviewed. Studies on multidisciplinary education in Computational Science and Engineering (CS&E) revealed that, while the number of CS&E courses offered is growing, the overall availability is low and the coursework fails to provide skills for applying CS&E to real-world applications. The number of graduates in different fields within Computer Science (CS) and Computer Engineering (CE) was also reviewed, which confirmed that specialization in DOE areas of interest is less common than in many other areas.

Projections of industry needs and employment figures (mostly for CS and CE) were examined. They indicate a high and increasing demand for graduates in all areas of computing, with little unemployment. This situation will be exacerbated by large numbers of retirees in the coming decade. Further, relatively few US students study toward higher degrees in the Computing Sciences, and those who do are predominantly white and male. As a result of this demographic imbalance, foreign nationals are an increasing fraction of the graduate population and we fail to benefit from including women and underrepresented minorities.

There is already a program that supports graduate education that is tailored to the needs of the DOE laboratories. The Computational Science Graduate Fellowship (CSGF) enables graduates to pursue a multidisciplinary program of education that is coupled with practical experience at the laboratories. It has been demonstrated to be highly effective in both its educational goals and in its ability to supply talent to the laboratories. However, its current size and scope are too limited to solve the workforce problems identified. The committee felt strongly that this proven program should be extended to increase its ability to support the DOE mission.

Since no single program can eliminate the workforce gap, existing recruitment efforts by the laboratories were examined. It was found that the laboratories already make considerable effort to recruit in this area. Although some challenges, such as the inability to match industry compensation, cannot be directly addressed, DOE could develop a roadmap to increase the impact of individual laboratory efforts, to enhance the suitability of existing educational opportunities, to increase the attractiveness of the laboratories, and to attract and sustain a full spectrum of human talent, which includes women and underrepresented minorities.

S.E. Cooper, K.G. Driesslein, A.M. Noecker, C.C. McIntyre, A.M. Machado, C.R. Butson.

“Anatomical targets associated with abrupt versus gradual washout of subthalamic deep brain stimulation effects on bradykinesia,” In PLoS ONE, Vol. 9, No. 8, pp. e99663. January, 2014.

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0099663

PubMed ID: 25098453

The subthalamic nucleus (STN) is a common anatomical target for deep brain stimulation (DBS) for the treatment of Parkinson's disease. However, the effects of stimulation may spread beyond the STN. Ongoing research aims to identify nearby anatomical structures where DBS-induced effects could be associated with therapeutic improvement or side effects. We previously found that DBS lead location determines the rate--abrupt vs. gradual--with which therapeutic effect washes out after stimulation is stopped. Those results suggested that electrical current spreads from the electrodes to two spatially distinct stimulation targets associated with different washout rates. In order to identify these targets we used computational models to predict the volumes of tissue activated during DBS in 14 Parkinson's patients from that study. We then coregistered each patient with a stereotaxic atlas and generated a probabilistic stimulation atlas to obtain a 3-dimensional representation of regions where stimulation was associated with abrupt vs. gradual washout. We found that the therapeutic effect which washed out gradually was associated with stimulation of the zona incerta and fields of Forel, whereas abruptly-disappearing therapeutic effect was associated with stimulation of STN itself. This supports the idea that multiple DBS targets exist and that current spread from one electrode may activate more than one of them in a given patient, producing a combination of effects which vary according to electrode location and stimulation settings.

A. Dubey, A. Almgren, John Bell, M. Berzins, S. Brandt, G. Bryan, P. Colella, D. Graves, M. Lijewski, F. Löffler, B. O’Shea, E. Schnetter, B. Van Straalen, K. Weide.

“A survey of high level frameworks in block-structured adaptive mesh refinement packages,” In Journal of Parallel and Distributed Computing, 2014.

DOI: 10.1016/j.jpdc.2014.07.001

Over the last decade block-structured adaptive mesh refinement (SAMR) has found increasing use in large, publicly available codes and frameworks. SAMR frameworks have evolved along different paths. Some have stayed focused on specific domain areas, others have pursued a more general functionality, providing the building blocks for a larger variety of applications. In this survey paper we examine a representative set of SAMR packages and SAMR-based codes that have been in existence for half a decade or more, have a reasonably sized and active user base outside of their home institutions, and are publicly available. The set consists of a mix of SAMR packages and application codes that cover a broad range of scientific domains. We look at their high-level frameworks, their design trade-offs and their approach to dealing with the advent of radical changes in hardware architecture. The codes included in this survey are BoxLib, Cactus, Chombo, Enzo, FLASH, and Uintah.

Keywords: SAMR, BoxLib, Chombo, FLASH, Cactus, Enzo, Uintah

S. Durrleman, M. Prastawa, N. Charon, J.R. Korenberg, S. Joshi, G. Gerig, A. Trouvé.

“Morphometry of anatomical shape complexes with dense deformations and sparse parameters,” In NeuroImage, 2014.

DOI: 10.1016/j.neuroimage.2014.06.043

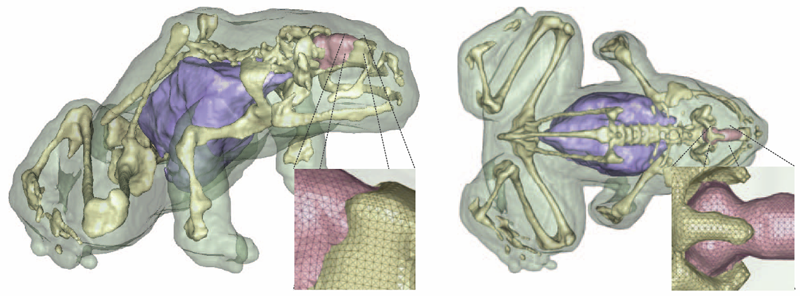

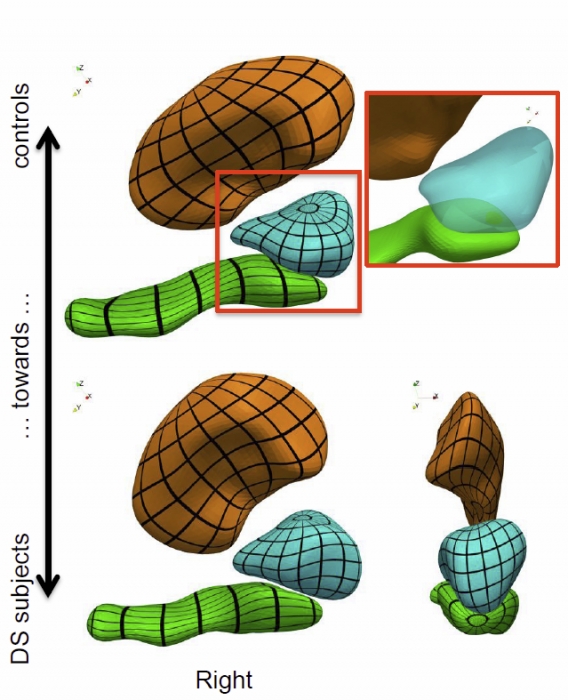

We propose a generic method for the statistical analysis of collections of anatomical shape complexes, namely sets of surfaces that were previously segmented and labeled in a group of subjects. The method estimates an anatomical model, the template complex, that is representative of the population under study. Its shape reflects anatomical invariants within the dataset. In addition, the method automatically places control points near the most variable parts of the template complex. Vectors attached to these points are parameters of deformations of the ambient 3D space. These deformations warp the template to each subject’s complex in a way that preserves the organization of the anatomical structures. Multivariate statistical analysis is applied to these deformation parameters to test for group differences. Results of the statistical analysis are then expressed in terms of deformation patterns of the template complex, and can be visualized and interpreted.

The user needs only to specify the topology of the template complex and the number of control points. The method then automatically estimates the shape of the template complex, the optimal position of control points and deformation parameters. The proposed approach is completely generic with respect to any type of application and well adapted to efficient use in clinical studies, in that it does not require point correspondence across surfaces and is robust to mesh imperfections such as holes, spikes, inconsistent orientation or irregular meshing.

The approach is illustrated with a neuroimaging study of Down syndrome (DS). Results demonstrate that the complex of deep brain structures shows a statistically significant shape difference between control and DS subjects. The deformation-based modeling is able to classify subjects with very high specificity and sensitivity, thus showing important generalization capability even given a low sample size. We show that results remain significant even if the number of control points, and hence the dimension of variables in the statistical model, are drastically reduced. The analysis may even suggest that parsimonious models have an increased statistical performance.

The method has been implemented in the software Deformetrica, which is publicly available at www.deformetrica.org.

Keywords: morphometry, deformation, varifold, anatomy, shape, statistics
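
To make the control-point parameterization in the Durrleman et al. abstract more concrete, here is a minimal Python sketch. It is not taken from Deformetrica and does not reproduce its API. It assumes a Gaussian kernel of width `sigma` and a velocity field v(x) = Σ_k K(x, c_k) α_k built from control points c_k and attached vectors (momenta) α_k, and it moves points with forward-Euler steps while holding control points and momenta fixed, a simplification of the full geodesic flow. The names `gaussian_kernel`, `velocity` and `warp` are hypothetical.

```python
import numpy as np


def gaussian_kernel(x, y, sigma):
    """K(x, y) = exp(-|x - y|^2 / sigma^2) for every pair of rows in x (m, 3) and y (k, 3)."""
    d2 = np.sum((x[:, None, :] - y[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / sigma**2)


def velocity(points, control_points, momenta, sigma):
    """v(x) = sum_k K(x, c_k) alpha_k: a smooth field defined everywhere in 3D space."""
    return gaussian_kernel(points, control_points, sigma) @ momenta


def warp(points, control_points, momenta, sigma, n_steps=10):
    """Move points along the field with forward-Euler steps over unit time.

    Simplification (assumption): control points and momenta stay fixed, so this is
    a stationary flow rather than the geodesic shooting used for the real model.
    """
    x = points.astype(float).copy()
    dt = 1.0 / n_steps
    for _ in range(n_steps):
        x = x + dt * velocity(x, control_points, momenta, sigma)
    return x


if __name__ == "__main__":
    cp = np.array([[0.0, 0.0, 0.0]])            # one control point at the origin
    alpha = np.array([[1.0, 0.0, 0.0]])         # its momentum points along +x
    verts = np.array([[0.0, 0.0, 0.0], [100.0, 0.0, 0.0]])
    print(warp(verts, cp, alpha, sigma=1.0))    # the near vertex moves, the far one does not
```

Because the field is a kernel sum, a handful of control points defines a smooth motion of the whole ambient space, so the number of control points, not the mesh resolution, sets the dimension of the deformation parameters.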

L.T. Edgar, C.J. Underwood, J.E. Guilkey, J.B. Hoying, J.A. Weiss.

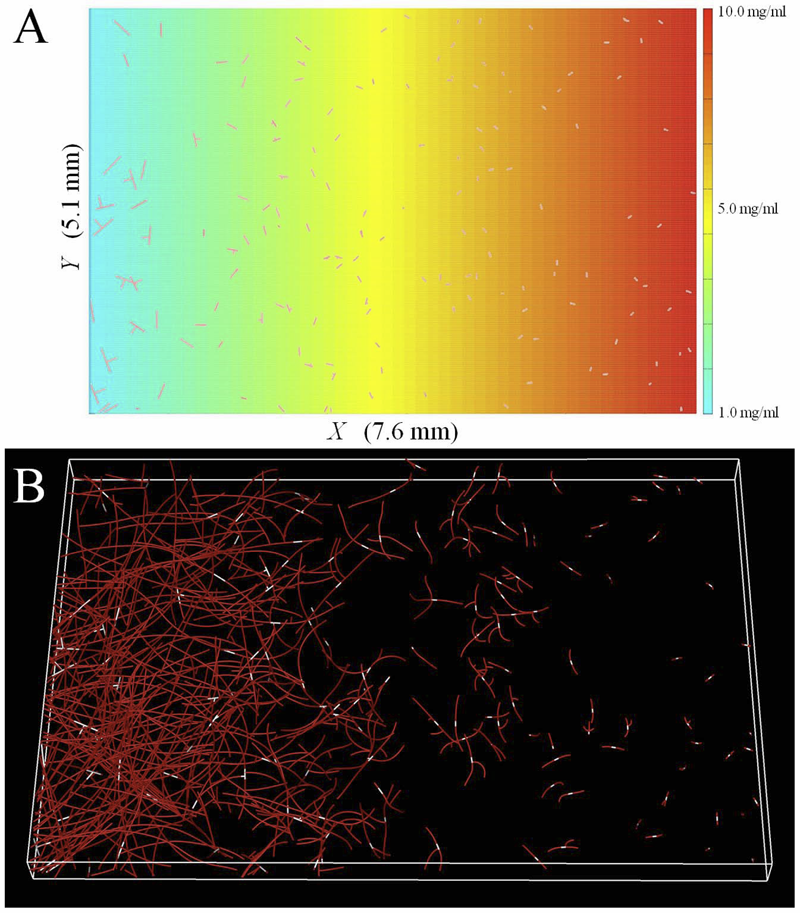

“Extracellular matrix density regulates the rate of neovessel growth and branching in sprouting angiogenesis,” In PLoS ONE, Vol. 9, No. 1, 2014.

DOI: 10.1371/journal.pone.0085178

Angiogenesis is regulated by the local microenvironment, including the mechanical interactions between neovessel sprouts and the extracellular matrix (ECM). However, the mechanisms controlling the relationship of mechanical and biophysical properties of the ECM to neovessel growth during sprouting angiogenesis are just beginning to be understood. In this research, we characterized the relationship between matrix density and microvascular topology in an in vitro 3D organ culture model of sprouting angiogenesis. We used these results to design and calibrate a computational growth model to demonstrate how changes in individual neovessel behavior produce the changes in vascular topology that were observed experimentally. Vascularized gels with higher collagen densities produced neovasculatures with shorter vessel lengths, fewer branch points, and reduced network interconnectivity. The computational model was able to predict these experimental results by scaling the rates of neovessel growth and branching according to local matrix density. As a final demonstration of the utility of the modeling framework, we used our growth model to predict several scenarios of practical interest that could not be investigated experimentally using the organ culture model. Increasing the density of the ECM significantly reduced angiogenesis and network formation within a 3D organ culture model of angiogenesis. Increasing the density of the matrix increases the stiffness of the ECM, changing how neovessels are able to deform and remodel their surroundings. The computational framework outlined in this study was capable of predicting this observed experimental behavior by adjusting neovessel growth rate and branching probability according to local ECM density, demonstrating that altering the stiffness of the ECM via increasing matrix density affects neovessel behavior, thereby regulating vascular topology during angiogenesis.

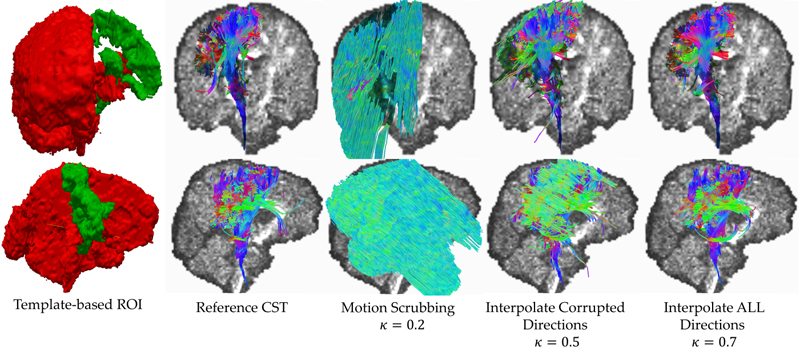

S. Elhabian, Y. Gur, C. Vachet, J. Piven, M. Styner, I. Leppert, G.B. Pike, G. Gerig.

“A Preliminary Study on the Effect of Motion Correction On HARDI Reconstruction,” In Proceedings of the 2014 IEEE International Symposium on Biomedical Imaging (ISBI), pp. (accepted). 2014.

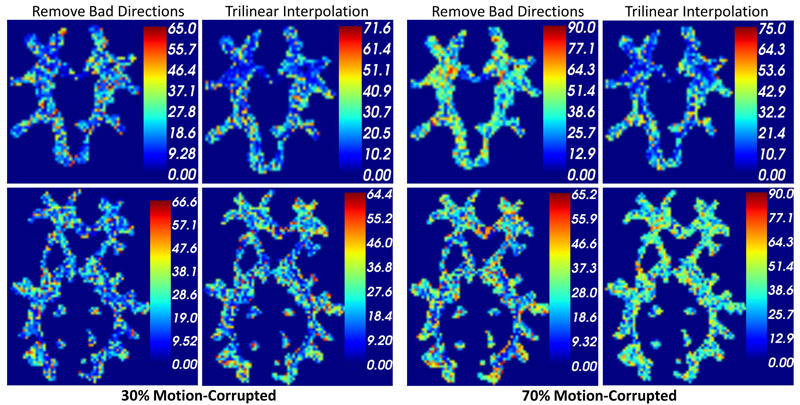

Post-acquisition motion correction is widely performed in diffusion-weighted imaging (DWI) to guarantee voxel-wise correspondence between DWIs. Although this is primarily motivated by the wish to save as many motion-corrupted scans as possible, users do not fully understand the consequences of different types of interpolation schemes on the final analysis. Interpolation might increase the partial volume effect without preserving the volume of the diffusion profile, whereas excluding poor DWIs may affect the ability to resolve crossing fibers, especially with small separation angles. In this paper, we investigate the effect of interpolating diffusion measurements, as well as of eliminating bad directions, on the reconstructed fiber orientation distribution functions and on the estimated fiber orientations. We demonstrate these effects on synthetic and real HARDI datasets. Our experiments show that the effect of interpolation is more significant at small fiber separation angles, where the exclusion of motion-corrupted directions decreases the ability to resolve such crossing fibers.

Keywords: Diffusion MRI, HARDI, motion correction, interpolation

S. Elhabian, Y. Gur, J. Piven, M. Styner, I. Leppert, G.B. Pike, G. Gerig.

“Subject-Motion Correction in HARDI Acquisitions: Choices and Consequences,” In Proceedings of the 2014 Joint Annual Meeting ISMRM-ESMRMB, pp. (accepted). 2014.

DOI: 10.3389/fneur.2014.00240

Unlike anatomical MRI, where subject motion can most often be assessed by quick visual quality control, the detection, characterization and evaluation of the impact of motion in diffusion imaging are challenging issues due to the sensitivity of diffusion-weighted imaging (DWI) to motion originating from vibration, cardiac pulsation, breathing and head movement. Post-acquisition motion correction is widely performed, e.g. using the open-source DTIprep software [1,2] or TORTOISE [3], but, in particular in high angular resolution diffusion imaging (HARDI), users often do not fully understand the consequences of different types of correction schemes on the final analysis, or whether those choices may introduce confounding factors when comparing populations. Although there is excellent theoretical work on the number of directional DWIs and its effect on the quality and crossing fiber resolution of orientation distribution functions (ODFs), standard users lack clear guidelines and recommendations in practical settings. This research investigates motion correction using transformation and interpolation of affected DWI directions versus the exclusion of subsets of DWIs, and the effects of these choices on the reconstructed fiber orientation distribution functions and on the estimated fiber orientations. The various effects are systematically studied via a newly developed synthetic phantom and also on real HARDI data.

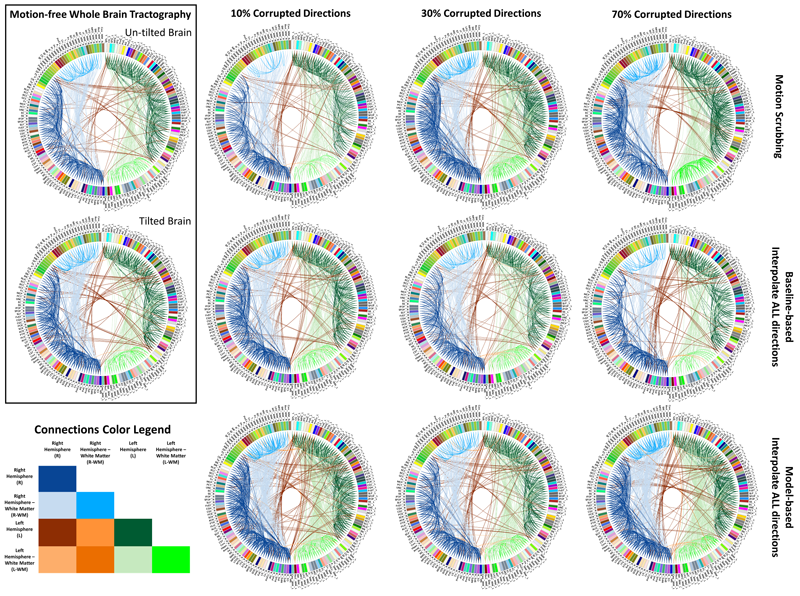

S. Elhabian, Y. Gur, J. Piven, M. Styner, I. Leppert, G. Bruce Pike, G. Gerig.

“Motion is inevitable: The impact of motion correction schemes on HARDI reconstructions,” In Proceedings of the MICCAI 2014 Workshop on Computational Diffusion MRI, September, 2014.

Diffusion-weighted imaging (DWI) is known to be prone to artifacts related to motion originating from subject movement, cardiac pulsation and breathing, but also to mechanical issues such as table vibrations. Given the necessity for rigorous quality control and motion correction, users are often left to use simple heuristics to select correction schemes, but do not fully understand the consequences of such choices for the final analysis and risk introducing confounding factors in population studies. This paper reports work in progress towards a comprehensive evaluation framework of HARDI motion correction to support selection of optimal methods to correct for even subtle motion. We make use of human brain HARDI data from a well-controlled motion experiment to simulate various degrees of motion corruption. Choices for correction include exclusion or registration of motion-corrupted directions, with different choices of interpolation. The comparative evaluation is based on studying the effects of motion correction on three metrics commonly used with DWI data: similarity of fiber orientation distribution functions (fODFs), global brain connectivity via Graph Diffusion Distance (GDD), and reproducibility of prominent and anatomically defined fiber tracts. Effects of various settings are systematically explored and illustrated, leading to the somewhat surprising conclusion that the best choice is the alignment and interpolation of all DWI directions, not only the directions considered corrupted.

S.Y. Elhabian, Y. Gur, C. Vachet, J. Piven, M.A. Styner, I.R. Leppert, B. Pike, G. Gerig.

“Subject-Motion Correction in HARDI Acquisitions: Choices and Consequences,” In Frontiers in Neurology - Brain Imaging Methods, 2014.

DOI: 10.3389/fneur.2014.00240

Diffusion-weighted imaging (DWI) is known to be prone to artifacts related to motion originating from subject movement, cardiac pulsation and breathing, but also to mechanical issues such as table vibrations. Given the necessity for rigorous quality control and motion correction, users are often left to use simple heuristics to select correction schemes, which involve simple qualitative viewing of the set of DWI data, or the selection of transformation parameter thresholds for detection of motion outliers. The scientific community offers strong theoretical and experimental work on noise reduction and orientation distribution function (ODF) reconstruction techniques for HARDI data, where post-acquisition motion correction is widely performed, e.g., using the open-source DTIprep software (Oguz et al., 2014), FSL (the FMRIB Software Library) (Jenkinson et al., 2012) or TORTOISE (Pierpaoli et al., 2010). Nonetheless, the effects and consequences of the selection of motion correction schemes on the final analysis, and the eventual risk of introducing confounding factors when comparing populations, are much less well known and far beyond simple intuitive guessing. Hence, standard users lack clear guidelines and recommendations in practical settings. This paper reports a comprehensive evaluation framework to systematically assess the outcome of different motion correction choices commonly used by the scientific community on different DWI-derived measures. We make use of human brain HARDI data from a well-controlled motion experiment to simulate various degrees of motion corruption and noise contamination. Choices for correction include exclusion/scrubbing or registration of motion-corrupted directions with different choices of interpolation, as well as the option of interpolation of all directions.
The comparative evaluation is based on a study of the impact of motion correction using four metrics that quantify (1) similarity of fiber orientation distribution functions (fODFs), (2) deviation of local fiber orientations, (3) global brain connectivity via Graph Diffusion Distance (GDD) and (4) the reproducibility of prominent and anatomically defined fiber tracts. Effects of various motion correction choices are systematically explored and illustrated, leading to a general conclusion of discouraging users from setting ad-hoc thresholds on the estimated motion parameters beyond which volumes are claimed to be corrupted.

T. Etiene, D. Jonsson, T. Ropinski, C. Scheidegger, J.L.D. Comba, L.G. Nonato, R.M. Kirby, A. Ynnerman, C.T. Silva.

“Verifying Volume Rendering Using Discretization Error Analysis,” In IEEE Transactions on Visualization and Computer Graphics, Vol. 20, No. 1, IEEE, pp. 140--154. January, 2014.

We propose an approach for verifying volume rendering correctness based on an analysis of the volume rendering integral, the basis of most direct volume rendering (DVR) algorithms. With respect to the most common discretization of this continuous model (Riemann summation), we make assumptions about the impact of parameter changes on the rendered results and derive convergence curves describing the expected behavior. Specifically, we progressively refine the number of samples along the ray, the grid size, and the pixel size, and evaluate how the errors observed during refinement compare against the expected approximation errors. We derive the theoretical foundations of our verification approach, explain how to realize it in practice, and discuss its limitations. We also report the errors identified by our approach when applied to two publicly available volume rendering packages.
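
The refinement test described above can be illustrated with a small Python sketch. It assumes an emission-absorption model along a single ray, I = ∫_0^L c(s) τ(s) exp(-∫_0^s τ(t) dt) ds, discretized with left Riemann sums, and it refines only the number of samples per ray (the paper also refines grid and pixel size). The function names are hypothetical and this is not the verification code used in the paper. For a left Riemann sum the error should roughly halve each time the sample count doubles, i.e. an observed order near 1; a clearly different order would flag a discrepancy worth investigating.

```python
import numpy as np


def riemann_ray(tau, color, length, n):
    """Left Riemann sum of I = int_0^L c(s) tau(s) exp(-int_0^s tau(t) dt) ds with n samples."""
    ds = length / n
    s = np.arange(n) * ds                        # left endpoints s_0 .. s_{n-1}
    t = tau(s)
    # Optical depth accumulated before sample i, itself a left Riemann sum.
    depth = np.concatenate(([0.0], np.cumsum(t[:-1] * ds)))
    return float(np.sum(color(s) * t * ds * np.exp(-depth)))


def observed_order(tau, color, length, exact, n0=16, levels=6):
    """Double the sample count at each level; return log2 of successive error ratios."""
    errs = [abs(riemann_ray(tau, color, length, n0 * 2**k) - exact) for k in range(levels)]
    return [float(np.log2(errs[k] / errs[k + 1])) for k in range(levels - 1)]


if __name__ == "__main__":
    tau = lambda s: 2.0 * np.ones_like(s)        # constant extinction
    color = lambda s: np.ones_like(s)            # constant emission
    exact = 1.0 - np.exp(-2.0)                   # closed form for constant tau and c, L = 1
    print(observed_order(tau, color, 1.0, exact))
```

The constant-coefficient case is chosen only because its exact integral, 1 - exp(-τL), is known in closed form; any case with a known reference value could be substituted.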

A. Faucett, T. Harman, T. Ameel.

“Computational Determination of the Modified Vortex Shedding Frequency for a Rigid, Truncated, Wall-Mounted Cylinder in Cross Flow,” In Volume 10: Micro- and Nano-Systems Engineering and Packaging, Montreal, ASME International Mechanical Engineering Congress and Exposition (IMECE), International Conference on Computational Science, November, 2014.

DOI: 10.1115/imece2014-39064

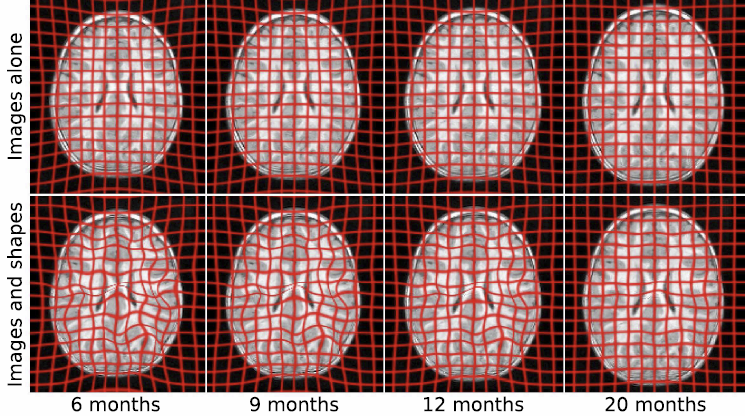

J. Fishbaugh, M. Prastawa, G. Gerig, S. Durrleman.

“Geodesic Regression of Image and Shape Data for Improved Modeling of 4D Trajectories,” In Proceedings of the 2014 IEEE International Symposium on Biomedical Imaging (ISBI), pp. (accepted). 2014.

A variety of regression schemes have been proposed on images or shapes, although available methods do not handle them jointly. In this paper, we present a framework for joint image and shape regression which incorporates images as well as anatomical shape information in a consistent manner. Evolution is described by a generative model that is the analog of linear regression, which is fully characterized by baseline images and shapes (intercept) and initial momenta vectors (slope). Further, our framework adopts a control point parameterization of deformations, where the dimensionality of the deformation is determined by the complexity of anatomical changes in time rather than the sampling of the image and/or the geometric data. We derive a gradient descent algorithm which simultaneously estimates baseline images and shapes, location of control points, and momenta. Experiments on real medical data demonstrate that our framework effectively combines image and shape information, resulting in improved modeling of 4D (3D space + time) trajectories.

T. Fogal, F. Proch, A. Schiewe, O. Hasemann, A. Kempf, J. Krüger.

“Freeprocessing: Transparent in situ visualization via data interception,” In Proceedings of the 14th Eurographics Conference on Parallel Graphics and Visualization, EGPGV, Eurographics Association, 2014.

In situ visualization has become a popular method for avoiding the slowest component of many visualization pipelines: reading data from disk. Most previous in situ work has focused on achieving visualization scalability on par with simulation codes, or on the data movement concerns that become prevalent at extreme scales. In this work, we consider in situ analysis with respect to ease of use and programmability. We describe an abstraction that opens up new applications for in situ visualization, and demonstrate that this abstraction and an expanded set of use cases can be realized without a performance cost.

Z. Fu, H.K. Dasari, M. Berzins, B. Thompson.

“Parallel Breadth First Search on GPU Clusters,” SCI Technical Report, No. UUSCI-2014-002, SCI Institute, University of Utah, 2014.

Fast, scalable, low-cost, and low-power execution of parallel graph algorithms is important for a wide variety of commercial and public sector applications. Breadth First Search (BFS) imposes an extreme burden on memory bandwidth and network communications and has been proposed as a benchmark that may be used to evaluate current and future parallel computers. Hardware trends and manufacturing limits strongly imply that many-core devices, such as NVIDIA® GPUs and the Intel® Xeon Phi®, will become central components of such future systems. GPUs are well known to deliver the highest FLOPS/watt and enjoy a very significant memory bandwidth advantage over CPU architectures. Recent work has demonstrated that GPUs can deliver high performance for parallel graph algorithms and, further, that it is possible to encapsulate that capability in a manner that hides the low-level details of the GPU architecture and the CUDA language but preserves the high throughput of the GPU. We extend previous research on GPUs and on scalable graph processing on supercomputers and demonstrate that a high-performance parallel graph machine can be created using commodity GPUs and networking hardware.

Keywords: GPU cluster, MPI, BFS, graph, parallel graph algorithm
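
A minimal serial Python sketch of level-synchronous BFS, the frontier-by-frontier formulation commonly used in parallel BFS, is shown below. It is an illustration under that assumption, not the authors' GPU/MPI implementation: the comments mark where a GPU cluster code would typically parallelize the frontier expansion and exchange newly discovered vertices between nodes, but none of that machinery is modeled here. The function name is hypothetical.

```python
def level_synchronous_bfs(adjacency, source):
    """Return {vertex: depth} for every vertex reachable from source.

    adjacency maps each vertex to an iterable of neighbors. Each pass of the
    while loop expands the entire current frontier, which is the unit of work
    a parallel BFS distributes across threads or ranks.
    """
    depth = {source: 0}
    frontier = [source]
    level = 0
    while frontier:
        level += 1
        next_frontier = []
        for u in frontier:                  # parallel codes split the frontier into chunks
            for v in adjacency.get(u, ()):
                if v not in depth:          # parallel codes use an atomic or bitmap test here
                    depth[v] = level
                    next_frontier.append(v)
        frontier = next_frontier            # distributed codes exchange frontiers at this point
    return depth


if __name__ == "__main__":
    graph = {0: [1, 2], 1: [3], 2: [3], 3: [4], 5: [0]}
    print(level_synchronous_bfs(graph, 0))  # vertex 5 is unreachable from 0
```

The per-level synchronization is what makes BFS communication-bound at scale: every level ends with a global exchange, however little work the frontier contained.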

Z. Fu, H.K. Dasari, M. Berzins, B. Thompson.

“Parallel Breadth First Search on GPU Clusters,” In Proceedings of the IEEE BigData 2014 Conference, Washington DC, October, 2014.

Fast, scalable, low-cost, and low-power execution of parallel graph algorithms is important for a wide variety of commercial and public sector applications. Breadth First Search (BFS) imposes an extreme burden on memory bandwidth and network communications and has been proposed as a benchmark that may be used to evaluate current and future parallel computers. Hardware trends and manufacturing limits strongly imply that many-core devices, such as NVIDIA® GPUs and the Intel® Xeon Phi®, will become central components of such future systems. GPUs are well known to deliver the highest FLOPS/watt and enjoy a very significant memory bandwidth advantage over CPU architectures. Recent work has demonstrated that GPUs can deliver high performance for parallel graph algorithms and, further, that it is possible to encapsulate that capability in a manner that hides the low-level details of the GPU architecture and the CUDA language but preserves the high throughput of the GPU. We extend previous research on GPUs and on scalable graph processing on supercomputers and demonstrate that a high-performance parallel graph machine can be created using commodity GPUs and networking hardware.

Keywords: GPU cluster, MPI, BFS, graph, parallel graph algorithm

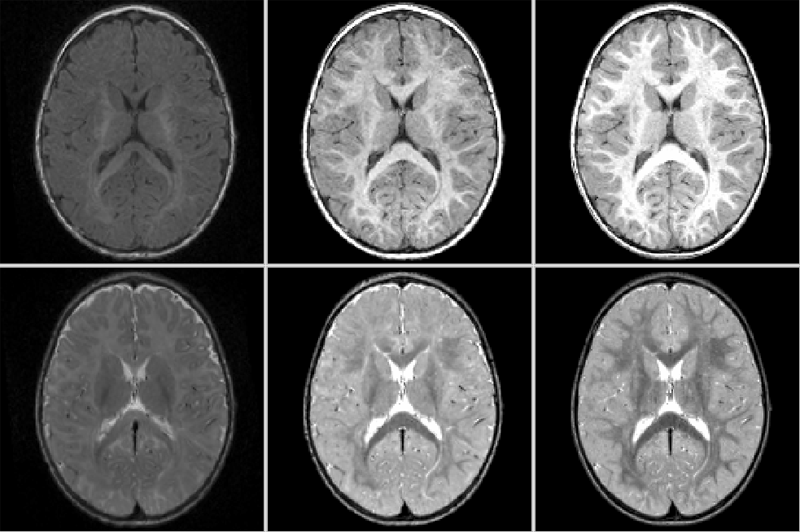

Y. Gao, M. Prastawa, M. Styner, J. Piven, G. Gerig.

“A Joint Framework for 4D Segmentation and Estimation of Smooth Temporal Appearance Changes,” In Proceedings of the 2014 IEEE International Symposium on Biomedical Imaging (ISBI), pp. (accepted). 2014.

Medical imaging studies increasingly use longitudinal images of individual subjects in order to follow changes due to development, degeneration, disease progression or efficacy of therapeutic intervention. Repeated image data of individuals are highly correlated, and the strong causality of information over time has led to the development of procedures for joint segmentation of the series of scans, called 4D segmentation. A main aim has been improved consistency of quantitative analysis, most often achieved via patient-specific atlases. Challenging open problems are contrast changes and the occurrence of subclasses within tissue, as observed in multimodal MRI of infant development, neurodegeneration and disease. This paper proposes a new 4D segmentation framework that enforces continuous dynamic changes of tissue contrast patterns over time as observed in such data. Moreover, our model includes the capability to segment different contrast patterns within a specific tissue class, for example as seen in myelinated and unmyelinated white matter regions in early brain development. Proof of concept is shown with validation on synthetic image data and with 4D segmentation of longitudinal, multimodal pediatric MRI taken at 6, 12 and 24 months of age, but the methodology is generic w.r.t. different application domains using serial imaging.

M.G. Genton, C.R. Johnson, K. Potter, G. Stenchikov, Y. Sun.

“Surface boxplots,” In Stat Journal, Vol. 3, No. 1, pp. 1--11. 2014.

In this paper, we introduce a surface boxplot as a tool for visualization and exploratory analysis of samples of images. First, we use the notion of volume depth to order the images viewed as surfaces. In particular, we define the median image. We use an exact and fast algorithm for the ranking of the images. This allows us to detect potential outlying images that often contain interesting features not present in most of the images. Second, we build a graphical tool to visualize the surface boxplot and its various characteristics. A graph and histogram of the volume depth values allow us to identify images of interest. The code is available in the supporting information of this paper. We apply our surface boxplot to a sample of brain images and to a sample of climate model outputs.
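
The depth-based ranking above can be sketched in Python as follows. This sketch assumes a modified-band-depth style definition with bands formed by pairs of images: an image's depth is the fraction of image pairs whose pointwise envelope [min, max] contains it, averaged over pixels, with pairs that include the image itself counted. It uses the rank-counting identity that, at a pixel where an image has rank r among n untied values, exactly (r - 1)(n - r) + (n - 1) pairs contain it, so no explicit loop over pairs is needed. Whether this matches the paper's exact volume depth definition and algorithm is an assumption, and the function names are hypothetical.

```python
import numpy as np


def volume_depth(images):
    """images: array of shape (n, H, W). Returns one depth value per image.

    Depth = pixel-averaged fraction of image pairs whose pointwise band
    [min, max] contains this image's value (assumes no ties between images).
    """
    n = images.shape[0]
    flat = images.reshape(n, -1)
    # Rank of each image at each pixel, 1..n, computed column by column.
    r = np.argsort(np.argsort(flat, axis=0), axis=0) + 1
    # Pairs with one member strictly below and one strictly above,
    # plus the n - 1 pairs that include the image itself.
    counts = (r - 1) * (n - r) + (n - 1)
    return counts.mean(axis=1) / (n * (n - 1) / 2)


def surface_median(images):
    """Index of the deepest image, which plays the role of the median image."""
    return int(np.argmax(volume_depth(images)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sample = rng.normal(size=(8, 16, 16))       # eight random 16x16 "surfaces"
    print(volume_depth(sample), surface_median(sample))
```

Images with the lowest depth are the natural candidates for the outlying images mentioned in the abstract; data with tied pixel values would need a tie-aware ranking, which this sketch omits.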

T. Geymayer, M. Steinberger, A. Lex, M. Streit, D. Schmalstieg.

“Show me the Invisible: Visualizing Hidden Content,” In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI '14), CHI '14, ACM, pp. 3705--3714. 2014.

ISBN: 978-1-4503-2473-1

DOI: 10.1145/2556288.2557032

Content on computer screens is often inaccessible to users because it is hidden, e.g., occluded by other windows, outside the viewport, or overlooked. In search tasks, the efficient retrieval of sought content is important. Current software, however, only provides limited support to visualize hidden occurrences and rarely supports search synchronization across application boundaries. To remedy this situation, we introduce two novel visualization methods to guide users to hidden content. Our first method generates awareness for occluded or out-of-viewport content using see-through visualization. For content that is either outside the screen's viewport or for data sources not opened at all, our second method shows off-screen indicators and an on-demand smart preview. To reduce the chances of overlooking content, we use visual links, i.e., visible edges, to connect the visible content or the visible representations of the hidden content. We show the validity of our methods in a user study, which demonstrates that our technique enables a faster localization of hidden content compared to traditional search functionality and thereby assists users in information retrieval tasks.