SCI Publications

2013

M. Farzinfar, Y. Li, A.R. Verde, I. Oguz, G. Gerig, M.A. Styner.

“DTI Quality Control Assessment via Error Estimation From Monte Carlo Simulations,” In Proceedings of SPIE 8669, Medical Imaging 2013: Image Processing, Vol. 8669, 2013.

DOI: 10.1117/12.2006925

PubMed ID: 23833547

PubMed Central ID: PMC3702180

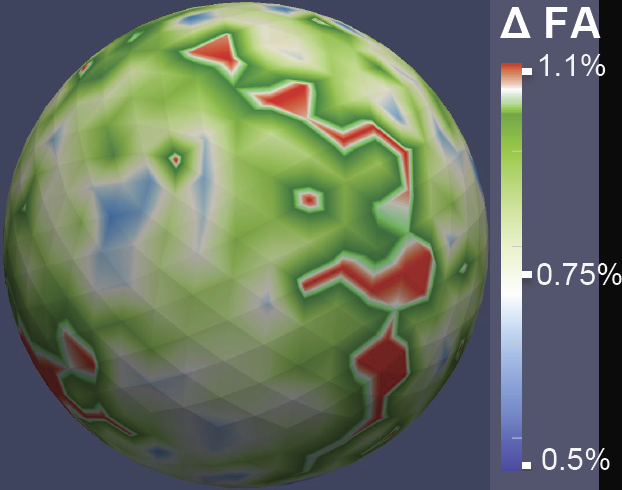

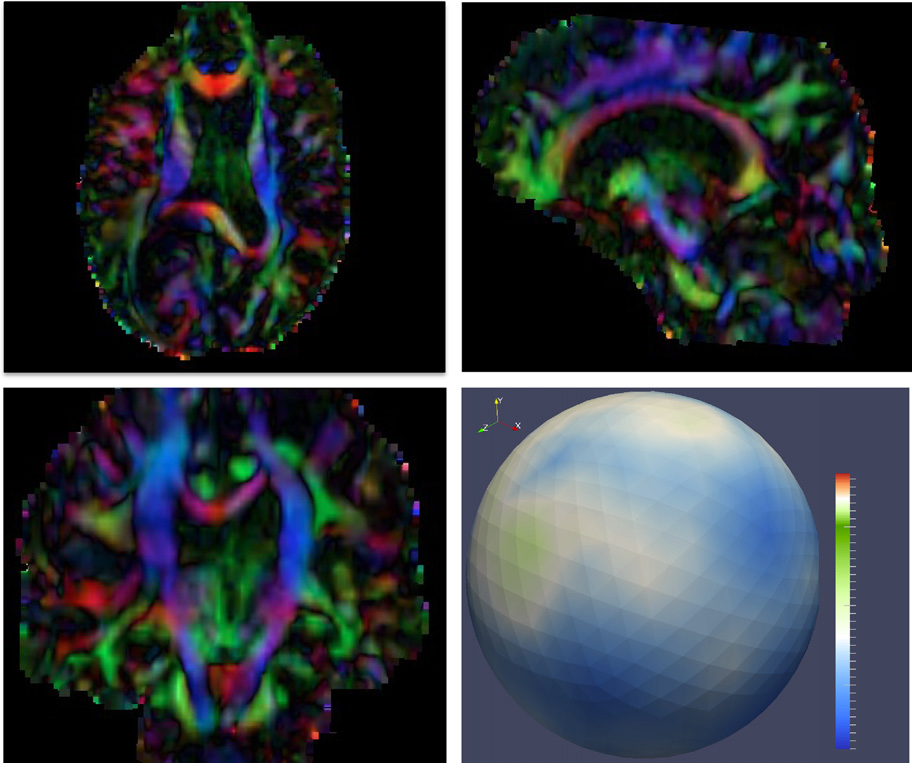

M. Farzinfar, I. Oguz, R.G. Smith, A.R. Verde, C. Dietrich, A. Gupta, M.L. Escolar, J. Piven, S. Pujol, C. Vachet, S. Gouttard, G. Gerig, S. Dager, R.C. McKinstry, S. Paterson, A.C. Evans, M.A. Styner.

“Diffusion imaging quality control via entropy of principal direction distribution,” In NeuroImage, Vol. 82, pp. 1--12. 2013.

ISSN: 1053-8119

DOI: 10.1016/j.neuroimage.2013.05.022

Keywords: Diffusion magnetic resonance imaging, Diffusion tensor imaging, Quality assessment, Entropy

N. Farah, A. Zoubi, S. Matar, L. Golan, A. Marom, C.R. Butson, I. Brosh, S. Shoham.

“Holographically patterned activation using photo-absorber induced neural-thermal stimulation,” In Journal of Neural Engineering, Vol. 10, No. 5, pp. 056004. October, 2013.

ISSN: 1741-2560

DOI: 10.1088/1741-2560/10/5/056004

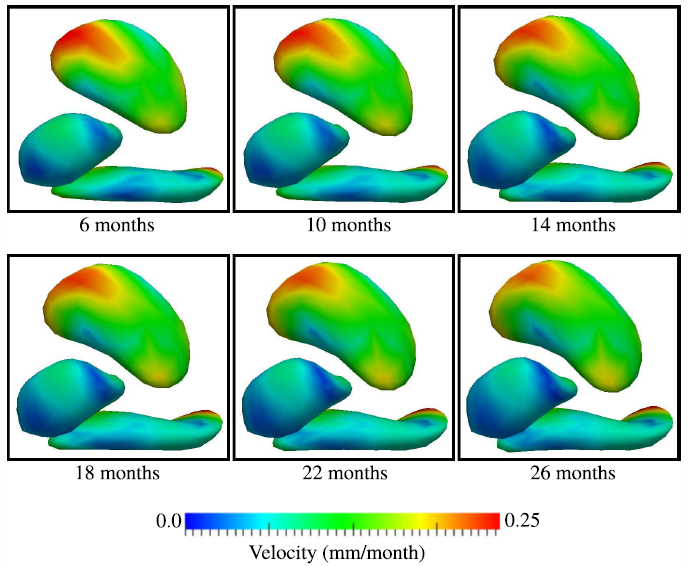

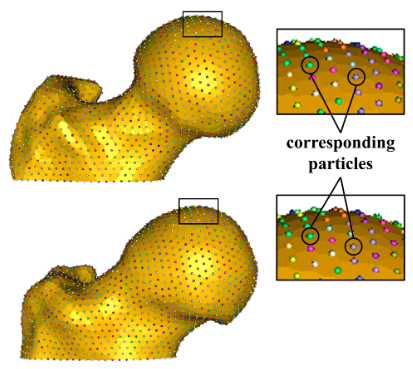

J. Fishbaugh, M.W. Prastawa, G. Gerig, S. Durrleman.

“Geodesic Shape Regression in the Framework of Currents,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Vol. 23, pp. 718--729. 2013.

PubMed ID: 24684012

PubMed Central ID: PMC4127488

J. Fishbaugh, M. Prastawa, G. Gerig, S. Durrleman.

“Geodesic image regression with a sparse parameterization of diffeomorphisms,” In Geometric Science of Information Lecture Notes in Computer Science (LNCS), In Proceedings of the Geometric Science of Information Conference (GSI), Vol. 8085, pp. 95--102. 2013.

Image regression allows for time-discrete imaging data to be modeled continuously, and is a crucial tool for conducting statistical analysis on longitudinal images. Geodesic models are particularly well suited for statistical analysis, as image evolution is fully characterized by a baseline image and initial momenta. However, existing geodesic image regression models are parameterized by a large number of initial momenta, equal to the number of image voxels. In this paper, we present a sparse geodesic image regression framework which greatly reduces the number of model parameters. We combine a control point formulation of deformations with an L1 penalty to select the most relevant subset of momenta. This way, the number of model parameters reflects the complexity of anatomical changes in time rather than the sampling of the image. We apply our method to both synthetic and real data and show that we can decrease the number of model parameters (from the number of voxels down to hundreds) with only minimal decrease in model accuracy. The reduction in model parameters has the potential to improve the power of ensuing statistical analysis, which faces the challenging problem of high dimensionality.

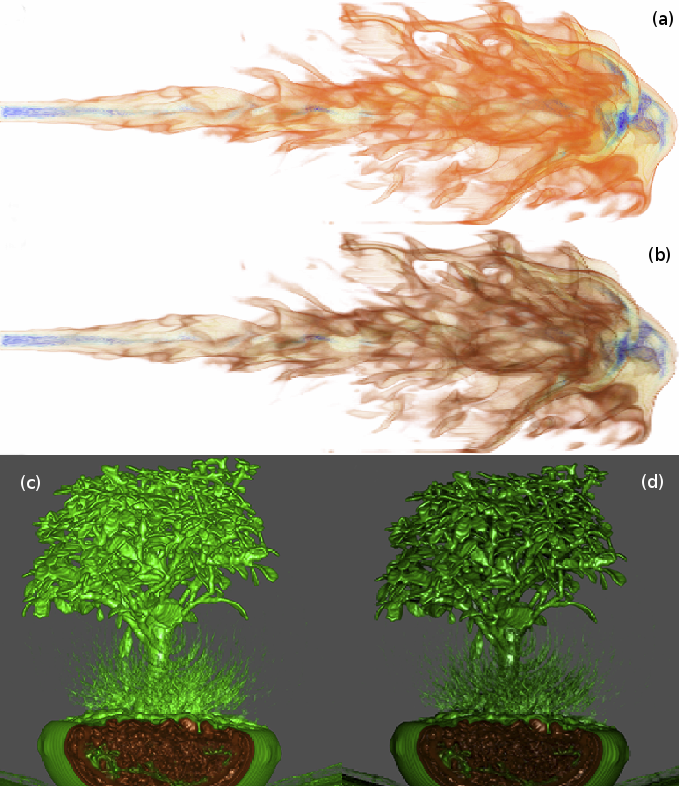

T. Fogal, A. Schiewe, J. Krüger.

“An Analysis of Scalable GPU-Based Ray-Guided Volume Rendering,” In 2013 IEEE Symposium on Large Data Analysis and Visualization (LDAV), 2013.

Volume rendering continues to be a critical method for analyzing large-scale scalar fields, in disciplines as diverse as biomedical engineering and computational fluid dynamics. Commodity desktop hardware has struggled to keep pace with data size increases, challenging modern visualization software to deliver responsive interactions for O(N^3) algorithms such as volume rendering. We target the data type common in these domains: regularly-structured data.

In this work, we demonstrate that the major limitation of most volume rendering approaches is their inability to switch the data sampling rate (and thus data size) quickly. Using a volume renderer inspired by recent work, we demonstrate that the actual amount of visualizable data for a scene is typically bound considerably lower than the memory available on a commodity GPU. Our instrumented renderer is used to investigate design decisions typically swept under the rug in volume rendering literature. The renderer is freely available, with binaries for all major platforms as well as full source code, to encourage reproduction and comparison with future research.

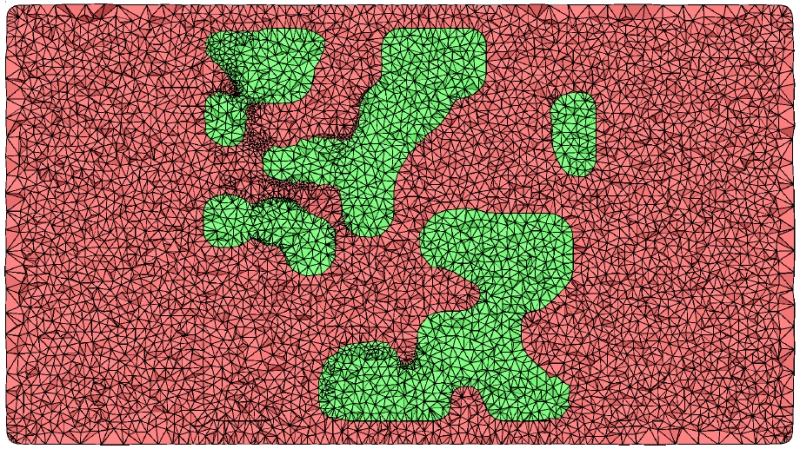

Z. Fu, R.M. Kirby, R.T. Whitaker.

“A Fast Iterative Method for Solving the Eikonal Equation on Tetrahedral Domains,” In SIAM Journal on Scientific Computing, Vol. 35, No. 5, pp. C473--C494. 2013.

M. Gamell, I. Rodero, M. Parashar, J.C. Bennett, H. Kolla, J.H. Chen, P.-T. Bremer, A. Landge, A. Gyulassy, P. McCormick, S. Pakin, V. Pascucci, S. Klasky.

“Exploring Power Behaviors and Trade-offs of In-situ Data Analytics,” In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, Association for Computing Machinery, 2013.

ISBN: 978-1-4503-2378-9

DOI: 10.1145/2503210.2503303

As scientific applications target exascale, challenges related to data and energy are becoming dominating concerns. For example, coupled simulation workflows are increasingly adopting in-situ data processing and analysis techniques to address costs and overheads due to data movement and I/O. However, it is also critical to understand these overheads and associated trade-offs from an energy perspective. The goal of this paper is to explore data-related energy/performance trade-offs for end-to-end simulation workflows running at scale on current high-end computing systems. Specifically, this paper presents: (1) an analysis of the data-related behaviors of a combustion simulation workflow with an in-situ data analytics pipeline, running on the Titan system at ORNL; (2) a power model based on system power and data exchange patterns, which is empirically validated; and (3) the use of the model to characterize the energy behavior of the workflow and to explore energy/performance trade-offs on current as well as emerging systems.

Keywords: SDAV

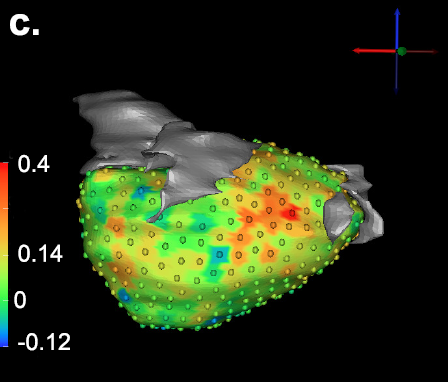

G. Gardner, A. Morris, K. Higuchi, R.S. MacLeod, J. Cates.

“A Point-Correspondence Approach to Describing the Distribution of Image Features on Anatomical Surfaces, with Application to Atrial Fibrillation,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 226--229. 2013.

DOI: 10.1109/ISBI.2013.6556453

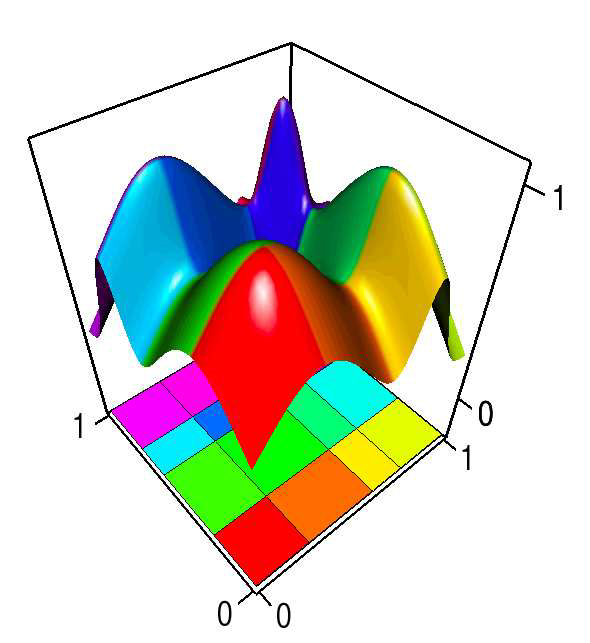

S. Gerber, O. Reubel, P.-T. Bremer, V. Pascucci, R.T. Whitaker.

“Morse-Smale Regression,” In Journal of Computational and Graphical Statistics, Vol. 22, No. 1, pp. 193--214. 2013.

DOI: 10.1080/10618600.2012.657132

J.M. Gililland, L.A. Anderson, H.B. Henninger, E.N. Kubiak, C.L. Peters.

“Biomechanical analysis of acetabular revision constructs: is pelvic discontinuity best treated with bicolumnar or traditional unicolumnar fixation?,” In Journal of Arthroplasty, Vol. 28, No. 1, pp. 178--186. 2013.

DOI: 10.1016/j.arth.2012.04.031

Pelvic discontinuity in revision total hip arthroplasty presents problems with component fixation and union. A construct was proposed based on bicolumnar fixation for transverse acetabular fractures. Each of 3 reconstructions was performed on 6 composite hemipelvises: (1) a cup-cage construct, (2) a posterior column plate construct, and (3) a bicolumnar construct (no. 2 plus an antegrade 4.5-mm anterior column screw). Bone-cup interface motions were measured, whereas cyclical loads were applied in both walking and descending stair simulations. The bicolumnar construct provided the most stable construct. Descending stair mode yielded more significant differences between constructs. The bicolumnar construct provided improved component stability. Placing an antegrade anterior column screw through a posterior approach is a novel method of providing anterior column support in this setting.

S. Gratzl, A. Lex, N. Gehlenborg, H. Pfister, M. Streit.

“LineUp: Visual Analysis of Multi-Attribute Rankings,” In IEEE Transactions on Visualization and Computer Graphics (InfoVis '13), Vol. 19, No. 12, pp. 2277--2286. 2013.

ISSN: 1077-2626

DOI: 10.1109/TVCG.2013.173

Rankings are a popular and universal approach to structure otherwise unorganized collections of items by computing a rank for each item based on the value of one or more of its attributes. This allows us, for example, to prioritize tasks or to evaluate the performance of products relative to each other. While the visualization of a ranking itself is straightforward, its interpretation is not because the rank of an item represents only a summary of a potentially complicated relationship between its attributes and those of the other items. It is also common that alternative rankings exist that need to be compared and analyzed to gain insight into how multiple heterogeneous attributes affect the rankings. Advanced visual exploration tools are needed to make this process efficient.

In this paper we present a comprehensive analysis of requirements for the visualization of multi-attribute rankings. Based on these considerations, we propose a novel and scalable visualization technique - LineUp - that uses bar charts. This interactive technique supports the ranking of items based on multiple heterogeneous attributes with different scales and semantics. It enables users to interactively combine attributes and flexibly refine parameters to explore the effect of changes in the attribute combination. This process can be employed to derive actionable insights into which attributes of an item need to be modified in order for its rank to change.

Additionally, through integration of slope graphs, LineUp can also be used to compare multiple alternative rankings on the same set of items, for example, over time or across different attribute combinations. We evaluate the effectiveness of the proposed multi-attribute visualization technique in a qualitative study. The study shows that users are able to successfully solve complex ranking tasks in a short period of time.

A. Grosset, M. Schott, G.-P. Bonneau, C.D. Hansen.

“Evaluation of Depth of Field for Depth Perception in DVR,” In Proceedings of the 2013 IEEE Pacific Visualization Symposium (PacificVis), pp. 81--88. 2013.

L.K. Ha, J. King, Z. Fu, R.M. Kirby.

“A High-Performance Multi-Element Processing Framework on GPUs,” SCI Technical Report, No. UUSCI-2013-005, SCI Institute, University of Utah, 2013.

Many computational engineering problems ranging from finite element methods to image processing involve the batch processing of a large number of data items. While multi-element processing has the potential to harness the computational power of parallel systems, current techniques often concentrate on maximizing elemental performance. Frameworks that take this greedy optimization approach often fail to extract the maximum processing power of the system for multi-element processing problems. By utilizing the knowledge that the same operation will be accomplished on a large number of items, we can organize the computation to maximize the computational throughput available in parallel streaming hardware. In this paper, we analyzed weaknesses of existing methods and we proposed efficient parallel programming patterns implemented in a high performance multi-element processing framework to harness the processing power of GPUs. Our approach is capable of leveling out the performance curve even on the range of small element size.

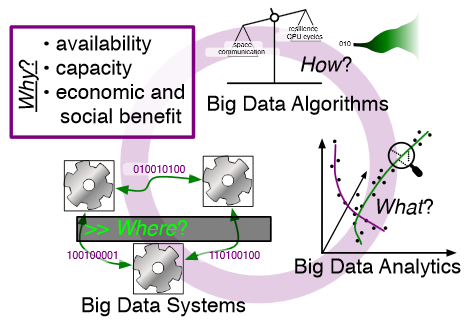

M. Hall, R.M. Kirby, F. Li, M.D. Meyer, V. Pascucci, J.M. Phillips, R. Ricci, J. Van der Merwe, S. Venkatasubramanian.

“Rethinking Abstractions for Big Data: Why, Where, How, and What,” In Cornell University Library, 2013.

Big data refers to large and complex data sets that, under existing approaches, exceed the capacity and capability of current compute platforms, systems software, analytical tools and human understanding [7]. Numerous lessons on the scalability of big data can already be found in asymptotic analysis of algorithms and from the high-performance computing (HPC) and applications communities. However, scale is only one aspect of current big data trends; fundamentally, current and emerging problems in big data are a result of unprecedented complexity: in the structure of the data and how to analyze it, in dealing with unreliability and redundancy, in addressing the human factors of comprehending complex data sets, in formulating meaningful analyses, and in managing the dense, power-hungry data centers that house big data.

The computer science solution to complexity is finding the right abstractions, those that hide as much triviality as possible while revealing the essence of the problem that is being addressed. The "big data challenge" has disrupted computer science by stressing to the very limits the familiar abstractions which define the relevant subfields in data analysis, data management and the underlying parallel systems. Efficient processing of big data has shifted systems towards increasingly heterogeneous and specialized units, with resilience and energy becoming important considerations. The design and analysis of algorithms must now incorporate emerging costs in communicating data driven by IO costs, distributed data, and the growing energy cost of these operations. Data analysis representations as structural patterns and visualizations surpass human visual bandwidth, structures studied at small scale are rare at large scale, and large-scale high-dimensional phenomena cannot be reproduced at small scale.

As a result, not enough of these challenges are revealed by isolating abstractions in a traditional software stack or standard algorithmic and analytical techniques, and attempts to address complexity either oversimplify or require low-level management of details. The authors believe that the abstractions for big data need to be rethought, and this reorganization needs to evolve and be sustained through continued cross-disciplinary collaboration.

In what follows, we first consider the question of why big data and why now. We then describe the where (big data systems), the how (big data algorithms), and the what (big data analytics) challenges that we believe are central and must be addressed as the research community develops these new abstractions. We equate the biggest challenges that span these areas of big data with big mythological creatures, namely cyclops, that should be conquered.

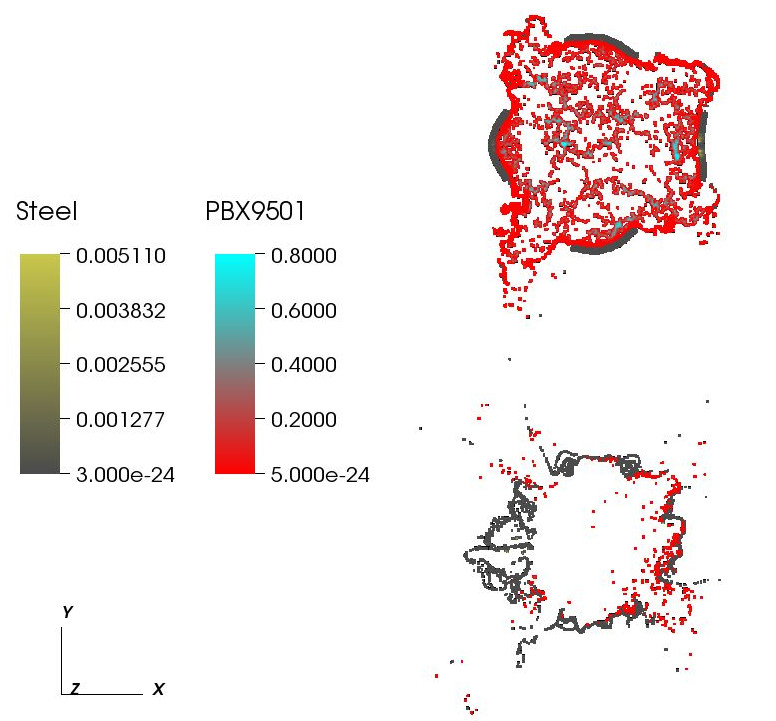

M. Hall, J.C. Beckvermit, C.A. Wight, T. Harman, M. Berzins.

“The influence of an applied heat flux on the violence of reaction of an explosive device,” In Proceedings of the Conference on Extreme Science and Engineering Discovery Environment: Gateway to Discovery, San Diego, California, XSEDE '13, pp. 11:1--11:8. 2013.

ISBN: 978-1-4503-2170-9

DOI: 10.1145/2484762.2484786

Keywords: DDT, cook-off, deflagration, detonation, violence of reaction, c-safe

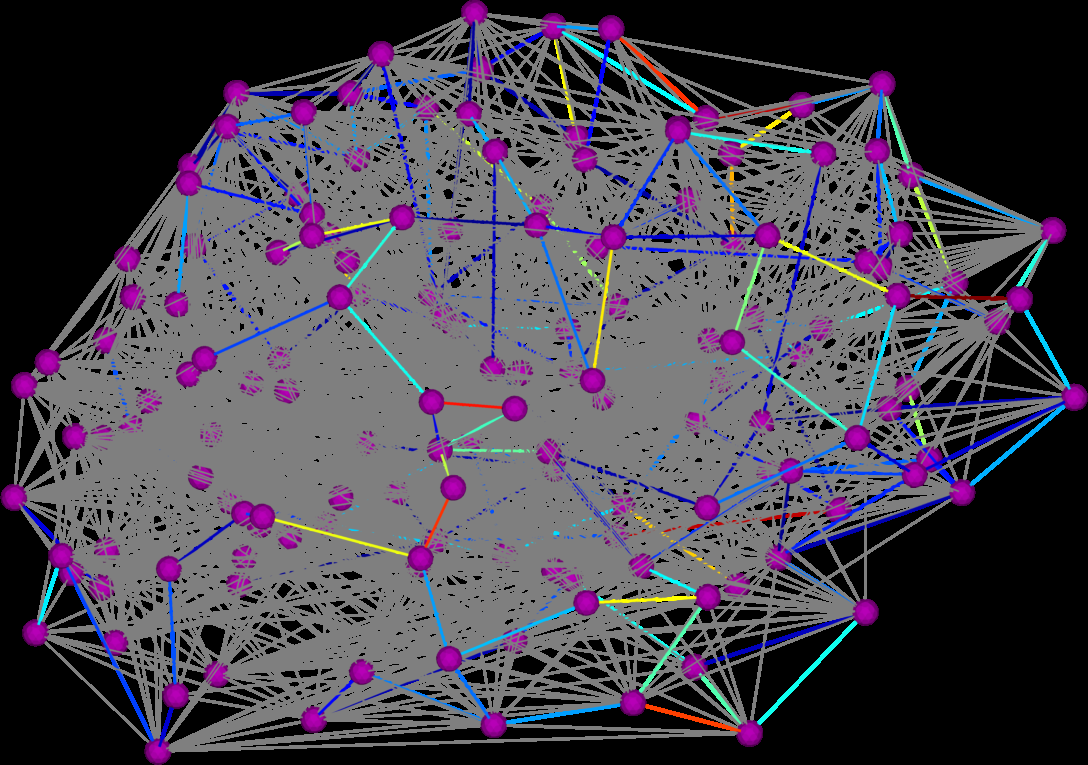

D.K. Hammond, Y. Gur, C.R. Johnson.

“Graph Diffusion Distance: A Difference Measure for Weighted Graphs Based on the Graph Laplacian Exponential Kernel,” In Proceedings of the IEEE global conference on information and signal processing (GlobalSIP'13), Austin, Texas, pp. 419--422. 2013.

DOI: 10.1109/GlobalSIP.2013.6736904

X. Hao, P.T. Fletcher.

“Joint Fractional Segmentation and Multi-Tensor Estimation in Diffusion MRI,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Lecture Notes in Computer Science (LNCS), pp. (accepted). 2013.

In this paper we present a novel Bayesian approach for fractional segmentation of white matter tracts and simultaneous estimation of a multi-tensor diffusion model. Our model consists of several white matter tracts, each with a corresponding weight and tensor compartment in each voxel. By incorporating a prior that assumes the tensor fields inside each tract are spatially correlated, we are able to reliably estimate multiple tensor compartments in fiber crossing regions, even with low angular diffusion-weighted imaging (DWI). Our model distinguishes the diffusion compartment associated with each tract, which reduces the effects of partial voluming and achieves more reliable statistics of diffusion measurements. We test our method on synthetic data with known ground truth and show that we can recover the correct volume fractions and tensor compartments. We also demonstrate that the proposed method results in improved segmentation and diffusion measurement statistics on real data in the presence of crossing tracts and partial voluming.

M.D. Harris, S.P. Reese, C.L. Peters, J.A. Weiss, A.E. Anderson.

“Three-dimensional Quantification of Femoral Head Shape in Controls and Patients with Cam-type Femoroacetabular Impingement,” In Annals of Biomedical Engineering, Vol. 41, No. 6, pp. 1162--1171. 2013.

DOI: 10.1007/s10439-013-0762-1

M.D. Harris, M. Datar, R.T. Whitaker, E.R. Jurrus, C.L. Peters, A.E. Anderson.

“Statistical Shape Modeling of Cam Femoroacetabular Impingement,” In Journal of Orthopaedic Research, Vol. 31, No. 10, pp. 1620--1626. 2013.

DOI: 10.1002/jor.22389
