Developing Next-Generation Tools for Preoperative Planning of Implantable Cardiac Defibrillators

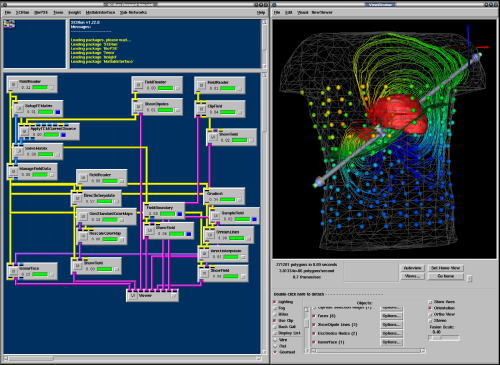

BioPSE visualizing the electrical field generated by an ICD device.

The use of implantable cardiac defibrillators (ICDs) has increased greatly over the last few years because of their efficacy in preventing sudden cardiac death (SCD) in patients with congenital heart defects or heart disease. These devices continually monitor the rhythm of the patient's heart and immediately deliver a corrective electric shock if a life-threatening tachycardia is detected. Thousands of lives are saved by this innovation each year. Surprisingly, these devices are sometimes implanted in newborns and older children with congenital heart defects. Pediatric patients present a particular challenge to surgeons planning an implantation because of the wide variety of torso shapes and sizes, and it has often proven difficult for physicians to determine the ideal placement and orientation of the electrodes before surgery. Accurate electrode placement is crucial to ensure successful defibrillation with a minimum amount of electric current and to minimize potential damage to the heart and surrounding tissues.

Building a Better Brain Atlas

The Brodmann areas map, published in 1909, localized various functions of the cortex.

Although we have identified many structures and characteristics common to all human brains, in reality every brain is different, and we need to better understand how brains vary between individuals. One persistent problem is that most atlases have been based on arbitrarily chosen individuals. Even today, with intense research directed toward the development of digital three-dimensional brain atlases, most digital brain atlases have been based on a single subject's anatomy. This biases any analysis that compares an individual brain to the atlas and does not provide a meaningful baseline against which to measure individual anatomical variation.
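A toy sketch can make the bias concrete. Assuming shapes are already aligned (registration is not shown) and described by a few hypothetical 2-D landmarks, the sketch compares the average squared deviation of every subject from (a) one arbitrarily chosen subject used as the template and (b) the point-wise mean of all subjects. The mean minimizes average squared deviation, so a single-subject template always reports at least as much apparent variation, and more when the chosen subject is atypical. The landmark values and function names are illustrative only, not real anatomy and not any particular atlas method.

```python
from statistics import fmean

def mean_shape(shapes):
    """Point-wise average of shapes given as equal-length lists of (x, y) landmarks."""
    n = len(shapes)
    return [
        (sum(s[i][0] for s in shapes) / n, sum(s[i][1] for s in shapes) / n)
        for i in range(len(shapes[0]))
    ]

def sq_dist(a, b):
    """Sum of squared landmark distances between two shapes."""
    return sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p, q in zip(a, b))

def mean_deviation(shapes, template):
    """Average squared deviation of every shape from the chosen template."""
    return fmean(sq_dist(s, template) for s in shapes)

# Hypothetical, already-aligned landmark sets (toy values, not real anatomy).
subjects = [
    [(0.0, 0.0), (1.0, 0.0), (0.5, 1.0)],
    [(0.1, 0.0), (1.1, 0.1), (0.6, 1.2)],
    [(-0.1, 0.1), (0.9, -0.1), (0.4, 0.9)],
    [(0.3, 0.2), (1.4, 0.3), (0.8, 1.5)],  # a deliberately atypical subject
]

single = mean_deviation(subjects, subjects[3])  # atlas = one arbitrary subject
population = mean_deviation(subjects, mean_shape(subjects))
print(f"single-subject template: {single:.3f}, mean template: {population:.3f}")
```

Real atlas construction must also solve the alignment problem this sketch assumes away; the point here is only why an arbitrary single subject is a poor baseline.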

SCI to Collaborate on Three New SciDAC Centers

The SCI Institute is pleased to announce that it will be participating in three DOE SciDAC 2 research centers.

The Visualization and Analytics Center for Enabling Technologies (VACET) includes SCI Institute faculty Chris Johnson (Center Co-PI with Wes Bethel from LBNL), Chuck Hansen, Steve Parker, Claudio Silva, Allen Sanderson and Xavier Tricoche. The center will focus on leveraging scientific visualization and analytics technology to increase scientific productivity and insight. It will take on one of the primary bottlenecks in contemporary science: making the massive amounts of data now available to scientists accessible and understandable. Advances in computational technology have produced an "information Big Bang," vastly increasing the amount of scientific data available but also making it significantly harder to reveal the structures, relationships, and anomalies hidden within the data. VACET will respond to that challenge by adapting, extending, creating when necessary, and deploying visualization and data understanding technologies for the scientific community.

SCI Grad Student Helps Solve Open Problem in Computational Geometry

SCI graduate student Jason F. Shepherd and coauthor Carlos D. Carbonera have published a solution to Problem #27 of The Open Problems Project's list of unresolved problems in computational geometry. The question is:


Can the interior of every simply connected polyhedron whose surface is meshed by an even number of quadrilaterals be partitioned into a hexahedral mesh compatible with the surface meshing?

Carbonera and Shepherd's solution settles the practical aspects of the problem by giving an explicit algorithm that extends a quadrilateral surface mesh to a hexahedral mesh in which every hexahedron has straight-line edges. One aspect of the problem remains open: the authors did not resolve whether a hexahedral mesh with all planar faces can always be achieved. The collaborators are now working on a revision that should close the problem definitively.
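The hypotheses in the problem statement can be checked mechanically. The "even number of quadrilaterals" condition is necessary by a simple parity argument: each hexahedron has six faces and every interior face is shared by two hexahedra, so 6H = 2I + F, which forces the boundary quad count F to be even. The surface of a simply connected polyhedron is also sphere-like, so V − E + F = 2. The sketch below (function and key names are hypothetical) tests only these necessary conditions on a quad surface mesh. It is not Carbonera and Shepherd's construction, and it does not check connectedness or vertex-manifoldness.

```python
from collections import Counter

def check_quad_surface(quads):
    """Check necessary conditions from Open Problem #27 on a quad surface mesh.

    quads: list of 4-tuples of vertex ids, each listed in cyclic order around the face.
    This only tests hypotheses; it does not build a hexahedral mesh.
    """
    edge_uses = Counter()
    vertices = set()
    for q in quads:
        if len(q) != 4:
            raise ValueError("every face must be a quadrilateral")
        vertices.update(q)
        for i in range(4):
            edge_uses[frozenset((q[i], q[(i + 1) % 4]))] += 1
    V, E, F = len(vertices), len(edge_uses), len(quads)
    return {
        "V": V, "E": E, "F": F,
        # Closed surface: every edge is shared by exactly two faces (edge test only).
        "closed": all(n == 2 for n in edge_uses.values()),
        # A connected, sphere-like (genus-0) surface has V - E + F = 2.
        "euler_characteristic": V - E + F,
        # Parity: 6H = 2I + F forces the boundary quad count F to be even.
        "even_quad_count": F % 2 == 0,
    }

# The six faces of a cube; vertices 4..7 sit directly above 0..3.
cube = [
    (0, 1, 2, 3), (4, 7, 6, 5),
    (0, 4, 5, 1), (1, 5, 6, 2),
    (2, 6, 7, 3), (3, 7, 4, 0),
]
print(check_quad_surface(cube))
```

For a closed quad mesh each face contributes four edge uses and each edge is used twice, so E = 2F; combined with V − E + F = 2 this gives V = F + 2, which the cube satisfies (8 = 6 + 2).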

C. D. Carbonera, J. F. Shepherd, "A Constructive Approach to Constrained Hexahedral Mesh Generation," Proceedings, 15th International Meshing Roundtable, Birmingham, AL, September 2006.

Dr. Sarang Joshi joins SCI Institute

Dr. Sarang Joshi has joined SCI as an Associate Professor in the Department of Bioengineering. Before coming to Utah, Dr. Joshi was an Assistant Professor of Radiation Oncology and an Adjunct Assistant Professor of Computer Science at the University of North Carolina at Chapel Hill. Before that, he was Director of Technology Development at IntellX, a medical imaging start-up company later acquired by Medtronic. His research interests lie in the emerging field of computational anatomy and have recently focused on its application to radiation oncology. Most recently he spent a year on sabbatical as a visiting scientist in the Department of Medical Physics at DKFZ (German Cancer Research Center) in Heidelberg, Germany, where he focused on developing four-dimensional radiation therapy approaches for improved treatment of prostate and lung cancer.

Dr. Joshi received his D.Sc. in Electrical Engineering from Washington University in St. Louis. His research interests include image understanding, computer vision, and shape analysis. He holds numerous patents in the area of image registration and has over 50 scholarly publications.

Steve Corbató Named Associate Director of the Scientific Computing and Imaging Institute

Steve Corbató has joined the University of Utah's Scientific Computing and Imaging (SCI) Institute as its Associate Director. The SCI Institute has established itself as an international research leader in scientific computing, scientific visualization, and imaging, and in this new position Steve will help lead more than 100 faculty, staff, and students in pursuing innovative, ground-breaking research and development aimed at solving important problems in science, engineering, and medicine.

Steve most recently served as Managing Director for Technology Direction and Development at Internet2, a non-profit, university-led consortium focused on developing and deploying advanced Internet technologies. In that role, he oversaw a broad portfolio of initiatives in high-performance networking, middleware, network diagnostics, and security. He also worked to develop overall strategy and key relationships for Internet2's next generation of network infrastructure.

Embryos Exposed in 3-D

New Method Can Identify What Genes Do, Test Drugs' Safety

May 4, 2006 -- Utah and Texas researchers combined miniature medical CT scans with high-tech computer methods to produce detailed three-dimensional images of mouse embryos, an efficient new method for testing the safety of medicines and learning how mutant genes cause birth defects or cancer.

“Our method provides a fast, high-quality and inexpensive way to visually explore the 3-D internal structure of mouse embryos so scientists can more easily and quickly see the effects of a genetic defect or chemical damage,” says Chris Johnson, a distinguished professor of computer science at the University of Utah.

A study reporting the development of the new method, known as “microCT-based virtual histology,” was published recently in PLoS Genetics, an online journal of the Public Library of Science.

The study was led by Charles Keller, a pediatric cancer specialist who formerly worked as a postdoctoral fellow in the laboratory of University of Utah geneticist Mario Capecchi. Keller is now an assistant professor at the Children's Cancer Research Institute at the University of Texas Health Science Center in San Antonio.
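The core idea behind virtual histology is that once a specimen has been scanned into a 3-D volume, a "section" can be cut computationally along any axis instead of physically slicing the tissue. The sketch below illustrates only that idea. It assumes the volume is a NumPy array indexed (z, y, x), uses a synthetic bright sphere in place of real microCT data, and the helper name `virtual_section` is hypothetical; the study's actual processing pipeline is not described here.

```python
import numpy as np

def virtual_section(volume, axis, index):
    """Return one 2-D slice of a 3-D scan, analogous to a single histology section.

    volume: 3-D array of voxel intensities, assumed to be indexed (z, y, x).
    axis:   0, 1 or 2, the direction perpendicular to the cut.
    index:  position of the cut along that axis.
    """
    return np.take(volume, index, axis=axis)

# Synthetic stand-in for a scan: a bright sphere of radius 10 inside a 32^3 volume.
n = 32
z, y, x = np.mgrid[0:n, 0:n, 0:n]
volume = ((x - 16) ** 2 + (y - 16) ** 2 + (z - 16) ** 2 <= 10 ** 2).astype(np.float32)

axial = virtual_section(volume, axis=0, index=16)     # cut perpendicular to z
sagittal = virtual_section(volume, axis=2, index=16)  # cut perpendicular to x
print(axial.shape, int(axial.sum()))
```

Because the whole volume is retained, the same scan can be re-sectioned along any axis or position without preparing new specimens.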

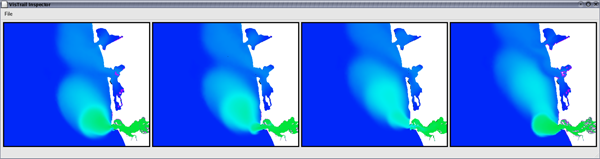

VisTrails: A New Paradigm for Dataflow Management

Figure 1: The VisTrails Visualization Spreadsheet. Surface salinity variation at the mouth of the Columbia River over the period of a day. The green regions represent the fresh-water discharge of the river into the ocean. A single vistrail specification is used to construct this ensemble. Each cell corresponds to a single visualization pipeline specification executed with a different timestamp value.
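The ensemble in Figure 1 comes from running one pipeline specification many times with a single parameter varied. A minimal sketch of that pattern follows. `PipelineSpec`, `run_pipeline`, and `build_ensemble` are hypothetical names, not the VisTrails API, and `run_pipeline` merely returns a label where a real system would execute the dataflow and render an image. The timestamps are illustrative hours.

```python
from dataclasses import dataclass, replace
from typing import Dict, List

@dataclass(frozen=True)
class PipelineSpec:
    """Hypothetical stand-in for a single dataflow (pipeline) specification."""
    source: str
    timestamp: int
    colormap: str = "salinity"

def run_pipeline(spec: PipelineSpec) -> str:
    # Placeholder for executing the dataflow; here it just describes the result.
    return f"{spec.source}@t={spec.timestamp} ({spec.colormap})"

def build_ensemble(base: PipelineSpec, timestamps: List[int]) -> Dict[int, str]:
    """Run one base specification once per timestamp; each run fills one cell."""
    return {t: run_pipeline(replace(base, timestamp=t)) for t in timestamps}

base = PipelineSpec(source="columbia_river_mouth", timestamp=0)
cells = build_ensemble(base, timestamps=[0, 6, 12, 18])
for t, cell in cells.items():
    print(t, cell)
```

Deriving every cell from one immutable base specification via `dataclasses.replace` keeps the runs reproducible: each cell differs from the base only in the parameter that was explored.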

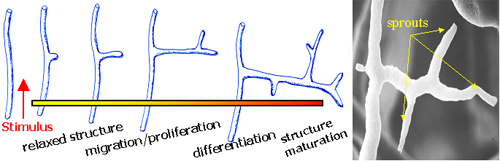

The Mechanics of Angiogenesis

Growth of new blood vessels from existing ones.

The Center for Interactive Ray-Tracing and Photo Realistic Visualization

Almost every modern computer comes with a graphics processing unit (GPU) that implements an object-based graphics algorithm for fast 3-D graphics. The object-based algorithm in these chips was developed at the University of Utah in the 1970s. While these chips are extremely effective for video games and for visualizing moderately sized models, they cannot interactively display many of the large models that arise in computer-aided design, film animation, and scientific visualization. Researchers at the University of Utah have demonstrated that image-based ray tracing algorithms are better suited to such large-scale applications. A substantial code base has been developed in the form of two ray tracing programs. The new Center aims to improve and integrate these programs to make them suitable for commercial use.
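The difference between the two approaches is mostly one of loop order: an object-based renderer iterates over objects and draws each onto the screen, while an image-based ray tracer iterates over pixels and asks which object each pixel's ray hits first. The sketch below is a textbook image-order ray caster with spheres and ASCII output, offered only to show that loop structure. It is not either of the Center's programs, and all names are illustrative. Production ray tracers for large models add a spatial acceleration structure (not shown) so each ray tests only a small fraction of the objects.

```python
import math
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]
Sphere = Tuple[Vec, float]  # (center, radius)
SYMBOLS = "#@%*+"           # one character per sphere (up to five in this sketch)

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def hit_sphere(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the first hit in front of the origin, or None."""
    center, radius = sphere
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0 for a unit direction
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 1e-6:
            return t
    return None

def render(spheres: List[Sphere], width: int, height: int) -> List[str]:
    """Image-order loop: pixels outside, objects inside; the nearest hit wins."""
    eye: Vec = (0.0, 0.0, 0.0)
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the pixel center onto an image plane at z = -1 spanning [-1, 1] x [-1, 1].
            x = 2 * (i + 0.5) / width - 1
            y = 1 - 2 * (j + 0.5) / height
            norm = math.sqrt(x * x + y * y + 1)
            d: Vec = (x / norm, y / norm, -1 / norm)
            best_t, best_k = math.inf, -1
            for k, s in enumerate(spheres):
                t = hit_sphere(eye, d, s)
                if t is not None and t < best_t:
                    best_t, best_k = t, k
            row.append(SYMBOLS[best_k] if best_k >= 0 else ".")
        rows.append("".join(row))
    return rows

scene = [((0.0, 0.0, -3.0), 1.0)]
for line in render(scene, 21, 11):
    print(line)
```

Because the outer loop runs over pixels, the work per frame is driven by image resolution and the cost of each ray query rather than by pushing every object through the pipeline.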