SCI Publications

2014

S. Liu, Bei Wang, P.-T. Bremer, V. Pascucci.

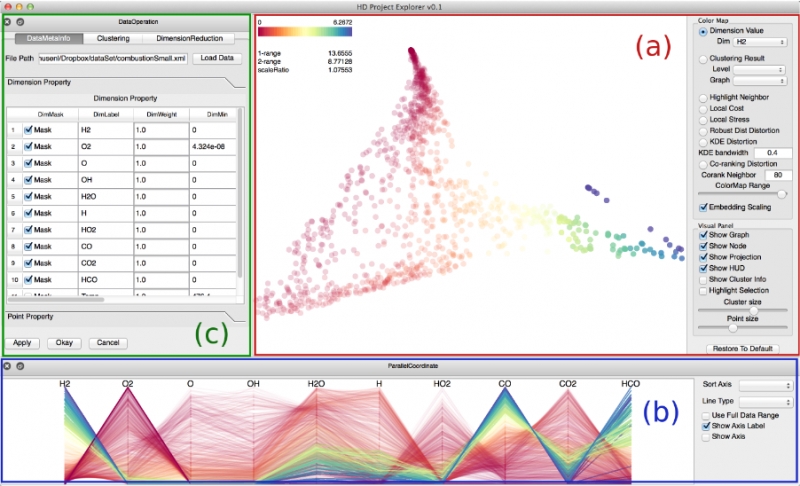

“Distortion-Guided Structure-Driven Interactive Exploration of High-Dimensional Data,” In Computer Graphics Forum, Vol. 33, No. 3, Wiley-Blackwell, pp. 101--110. June, 2014.

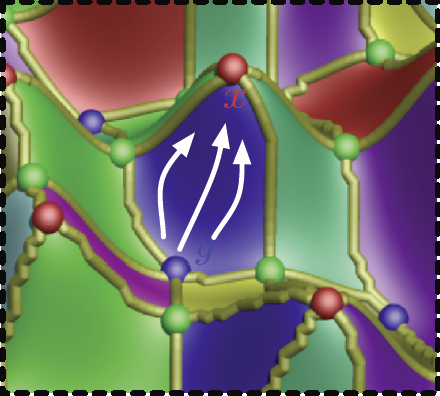

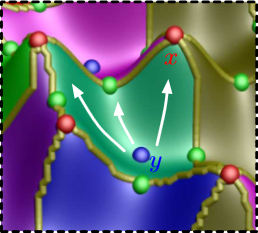

Dimension reduction techniques are essential for feature selection and feature extraction of complex high-dimensional data. These techniques, which construct low-dimensional representations of data, are typically geometrically motivated, computationally efficient and approximately preserve certain structural properties of the data. However, they are often used as black box solutions in data exploration and their results can be difficult to interpret. To assess the quality of these results, quality measures, such as co-ranking [LV09], have been proposed to quantify structural distortions that occur between high-dimensional and low-dimensional data representations. Such measures could be evaluated and visualized point-wise to further highlight erroneous regions [MLGH13]. In this work, we provide an interactive visualization framework for exploring high-dimensional data via its two-dimensional embeddings obtained from dimension reduction, using a rich set of user interactions. We ask the following question: what new insights do we obtain regarding the structure of the data, with interactive manipulations of its embeddings in the visual space? We augment the two-dimensional embeddings with structural abstractions obtained from hierarchical clusterings, to help users navigate and manipulate subsets of the data. We use point-wise distortion measures to highlight interesting regions in the domain, and further to guide our selection of the appropriate level of clusterings that are aligned with the regions of interest. Under the static setting, point-wise distortions indicate the level of structural uncertainty within the embeddings. Under the dynamic setting, on-the-fly updates of point-wise distortions due to data movement and data deletion reflect structural relations among different parts of the data, which may lead to new and valuable insights.

Shusen Liu, Bei Wang, J.J. Thiagarajan, P.-T. Bremer, V. Pascucci.

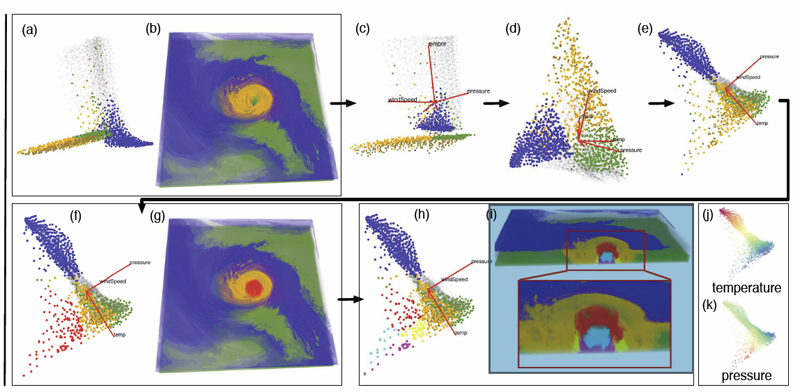

“Multivariate Volume Visualization through Dynamic Projections,” In Proceedings of the IEEE Symposium on Large Data Analysis and Visualization (LDAV), 2014.

We propose a multivariate volume visualization framework that tightly couples dynamic projections with a high-dimensional transfer function design for interactive volume visualization. We assume that the complex, high-dimensional data in the attribute space can be well-represented through a collection of low-dimensional linear subspaces, and embed the data points in a variety of 2D views created as projections onto these subspaces. Through dynamic projections, we present animated transitions between different views to help the user navigate and explore the attribute space for effective transfer function design. Our framework not only provides a more intuitive understanding of the attribute space but also allows the design of the transfer function under multiple dynamic views, which is more flexible than being restricted to a single static view of the data. For large volumetric datasets, we maintain interactivity during the transfer function design via intelligent sampling and scalable clustering. Using examples in combustion and climate simulations, we demonstrate how our framework can be used to visualize interesting structures in the volumetric space.

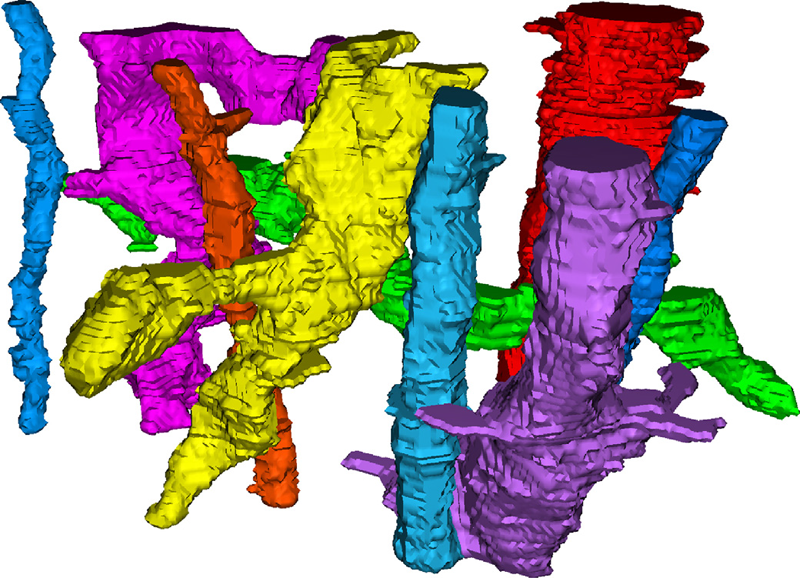

T. Liu, C. Jones, M. Seyedhosseini, T. Tasdizen.

“A modular hierarchical approach to 3D electron microscopy image segmentation,” In Journal of Neuroscience Methods, Vol. 226, No. 15, pp. 88--102. April, 2014.

DOI: 10.1016/j.jneumeth.2014.01.022

The study of neural circuit reconstruction, i.e., connectomics, is a challenging problem in neuroscience. Automated and semi-automated electron microscopy (EM) image analysis can be tremendously helpful for connectomics research. In this paper, we propose a fully automatic approach for intra-section segmentation and inter-section reconstruction of neurons using EM images. A hierarchical merge tree structure is built to represent multiple region hypotheses and supervised classification techniques are used to evaluate their potentials, based on which we resolve the merge tree with consistency constraints to acquire final intra-section segmentation. Then, we use a supervised learning based linking procedure for the inter-section neuron reconstruction. Also, we develop a semi-automatic method that utilizes the intermediate outputs of our automatic algorithm and achieves intra-segmentation with minimal user intervention. The experimental results show that our automatic method can achieve close-to-human intra-segmentation accuracy and state-of-the-art inter-section reconstruction accuracy. We also show that our semi-automatic method can further improve the intra-segmentation accuracy.

Keywords: Image segmentation, Electron microscopy, Hierarchical segmentation, Semi-automatic segmentation, Neuron reconstruction

D. Maljovec, Bei Wang, J. Moeller, V. Pascucci.

“Topology-Based Active Learning,” SCI Technical Report, No. UUSCI-2014-001, SCI Institute, University of Utah, 2014.

A common problem in simulation and experimental research involves obtaining time-consuming, expensive, or potentially hazardous samples from an arbitrary dimension parameter space. For example, many simulations modeled on supercomputers can take days or weeks to complete, so it is imperative to select samples in the most informative and interesting areas of the parameter space. In such environments, maximizing the potential gain of information is achieved through active learning (adaptive sampling). Though the topic of active learning is well-studied, this paper provides a new perspective on the problem. We consider topology-based batch selection strategies for active learning which are ideal for environments where parallel or concurrent experiments can be run, yet each has a heavy cost. These strategies utilize concepts derived from computational topology to choose a collection of locally distinct, optimal samples before updating the surrogate model. We demonstrate through experiments using several different batch sizes that topology-based strategies have comparable and sometimes superior performance, compared to conventional approaches.

D. Maljovec, S. Liu, Bei Wang, V. Pascucci, P.-T. Bremer, D. Mandelli, C. Smith.

“Analyzing Simulation-Based PRA Data Through Clustering: a BWR Station Blackout Case Study,” In Proceedings of the Probabilistic Safety Assessment & Management conference (PSAM), 2014.

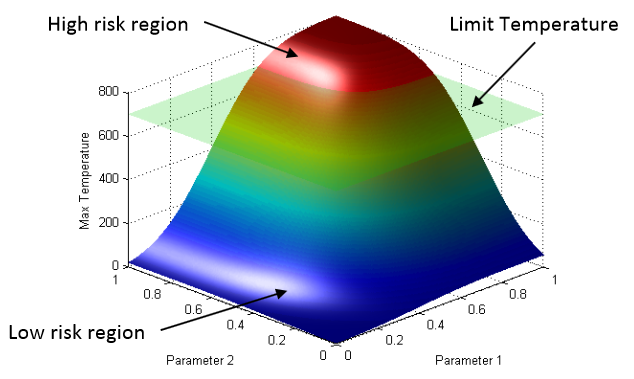

Dynamic probabilistic risk assessment (DPRA) methodologies couple system simulator codes (e.g., RELAP, MELCOR) with simulation controller codes (e.g., RAVEN, ADAPT). Whereas system simulator codes accurately model system dynamics deterministically, simulation controller codes introduce both deterministic (e.g., system control logic, operating procedures) and stochastic (e.g., component failures, parameter uncertainties) elements into the simulation. Typically, a DPRA is performed by 1) sampling values of a set of parameters from the uncertainty space of interest (using the simulation controller codes), and 2) simulating the system behavior for that specific set of parameter values (using the system simulator codes). For complex systems, one of the major challenges in using DPRA methodologies is to analyze the large amount of information (i.e., large number of scenarios) generated, where clustering techniques are typically employed to allow users to better organize and interpret the data. In this paper, we focus on the analysis of a nuclear simulation dataset that is part of the risk-informed safety margin characterization (RISMC) boiling water reactor (BWR) station blackout (SBO) case study. We apply a software tool that provides the domain experts with an interactive analysis and visualization environment for understanding the structures of such high-dimensional nuclear simulation datasets. Our tool encodes traditional and topology-based clustering techniques, where the latter partitions the data points into clusters based on their uniform gradient flow behavior. We demonstrate through our case study that both types of clustering techniques complement each other in bringing enhanced structural understanding of the data.

Keywords: PRA, computational topology, clustering, high-dimensional analysis

D. Mandelli, C. Smith, T. Riley, J. Nielsen, J. Schroeder, C. Rabiti, A. Alfonsi, J. Cogliati, R. Kinoshita, V. Pascucci, Bei Wang, D. Maljovec.

“Overview of New Tools to Perform Safety Analysis: BWR Station Black Out Test Case,” In Proceedings of the Probabilistic Safety Assessment & Management conference (PSAM), 2014.

The existing fleet of nuclear power plants is in the process of extending its lifetime and increasing the power generated from these plants via power uprates. In order to evaluate the impacts of these two factors on the safety of the plant, the Risk Informed Safety Margin Characterization project aims to provide insights to decision makers through a series of simulations of the plant dynamics for different initial conditions (e.g., probabilistic analysis and uncertainty quantification). This paper focuses on the impacts of power uprate on the safety margin of a boiling water reactor for a station black-out event. Analysis is performed by using a combination of thermal-hydraulic codes and a stochastic analysis tool currently under development at the Idaho National Laboratory, i.e. RAVEN. We employed both classical statistical tools, i.e. Monte-Carlo, and more advanced machine learning based algorithms to perform uncertainty quantification in order to quantify changes in system performance and limitations as a consequence of power uprate. We also employed advanced data analysis and visualization tools that helped us to correlate simulation outcomes such as maximum core temperature with a set of input uncertain parameters. Results obtained give a detailed investigation of the issues associated with a plant power uprate including the effects of station black-out accident scenarios. We were able to quantify how the timing of specific events was impacted by a higher nominal reactor core power. Such safety insights can provide useful information to the decision makers to perform risk-informed margins management.

C. McGann, N. Akoum, A. Patel, E. Kholmovski, P. Revelo, K. Damal, B. Wilson, J. Cates, A. Harrison, R. Ranjan, N.S. Burgon, T. Greene, D. Kim, E.V. Dibella, D. Parker, R.S. MacLeod, N.F. Marrouche.

“Atrial fibrillation ablation outcome is predicted by left atrial remodeling on MRI,” In Circ Arrhythm Electrophysiol, Vol. 7, No. 1, pp. 23--30. 2014.

DOI: 10.1161/CIRCEP.113.000689

PubMed ID: 24363354

BACKGROUND:

Although catheter ablation therapy for atrial fibrillation (AF) is becoming more common, results vary widely, and patient selection criteria remain poorly defined. We hypothesized that late gadolinium enhancement MRI (LGE-MRI) can identify left atrial (LA) wall structural remodeling (SRM) and stratify patients who are likely or not to benefit from ablation therapy.

LGE-MRI was performed on 426 consecutive patients with AF without contraindications to MRI before undergoing their first ablation procedure and on 21 non-AF control subjects. Patients were categorized by SRM stage (I-IV) based on the percentage of LA wall enhancement for correlation with procedure outcomes. Histological validation of SRM was performed comparing LGE-MRI with surgical biopsy. A total of 386 patients (91%) with adequate LGE-MRI scans were included in the study. After ablation, 123 patients (31.9%) experienced recurrent atrial arrhythmias during the 1-year follow-up. Recurrent arrhythmias (failed ablations) occurred at higher SRM stages with 28 of 133 (21.0%) in stage I, 40 of 140 (29.3%) in stage II, 24 of 71 (33.8%) in stage III, and 30 of 42 (71.4%) in stage IV. In multivariate analysis, ablation outcome was best predicted by advanced SRM stage (hazard ratio, 4.89; P

G. McInerny, M. Chen, R. Freeman, D. Gavaghan, M.D. Meyer, F. Rowland, D. Spiegelhalter, M. Stefaner, G. Tessarolo, J. Hortal.

“Information Visualization for Science and Policy: Engaging Users and Avoiding Bias,” In Trends in Ecology & Evolution, Vol. 29, No. 3, pp. 148--157. 2014.

DOI: 10.1016/j.tree.2014.01.003

Visualisations and graphics are fundamental to studying complex subject matter. However, beyond acknowledging this value, scientists and science-policy programmes rarely consider how visualisations can enable discovery, create engaging and robust reporting, or support online resources. Producing accessible and unbiased visualisations from complicated, uncertain data requires expertise and knowledge from science, policy, computing, and design. However, visualisation is rarely found in our scientific training, organisations, or collaborations. As new policy programmes develop [e.g., the Intergovernmental Platform on Biodiversity and Ecosystem Services (IPBES)], we need information visualisation to permeate increasingly both the work of scientists and science policy. The alternative is increased potential for missed discoveries, miscommunications, and, at worst, creating a bias towards the research that is easiest to display.

S. McKenna, D. Mazur, J. Agutter, M.D. Meyer.

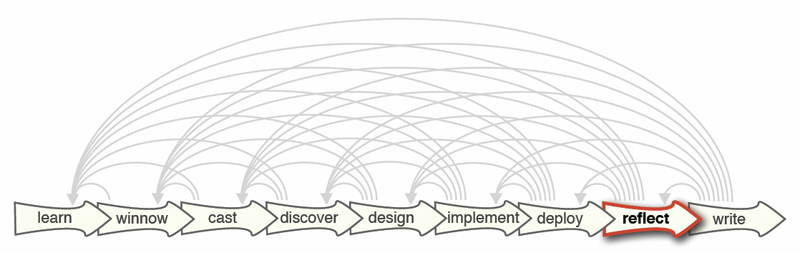

“Design Activity Framework for Visualization Design,” In IEEE Transactions on Visualization and Computer Graphics (TVCG), 2014.

An important aspect in visualization design is the connection between what a designer does and the decisions the designer makes. Existing design process models, however, do not explicitly link back to models for visualization design decisions. We bridge this gap by introducing the design activity framework, a process model that explicitly connects to the nested model, a well-known visualization design decision model. The framework includes four overlapping activities that characterize the design process, with each activity explicating outcomes related to the nested model. Additionally, we describe and characterize a list of exemplar methods and how they overlap among these activities. The design activity framework is the result of reflective discussions from a collaboration on a visualization redesign project, the details of which we describe to ground the framework in a real-world design process. Lastly, from this redesign project we provide several research outcomes in the domain of cybersecurity, including an extended data abstraction and rich opportunities for future visualization research.

Keywords: Design, frameworks, process, cybersecurity, nested model, decisions, models, evaluation, visualization

Q. Meng, M. Berzins.

“Scalable large-scale fluid-structure interaction solvers in the Uintah framework via hybrid task-based parallelism algorithms,” In Concurrency and Computation: Practice and Experience, Vol. 26, No. 7, pp. 1388--1407. May, 2014.

DOI: 10.1002/cpe

Uintah is a software framework that provides an environment for solving fluid–structure interaction problems on structured adaptive grids for large-scale science and engineering problems involving the solution of partial differential equations. Uintah uses a combination of fluid flow solvers and particle-based methods for solids, together with adaptive meshing and a novel asynchronous task-based approach with fully automated load balancing. When applying Uintah to fluid–structure interaction problems, the combination of adaptive meshing and the movement of structures through space presents a formidable challenge in terms of achieving scalability on large-scale parallel computers. The Uintah approach to the growth of the number of core counts per socket together with the prospect of less memory per core is to adopt a model that uses MPI to communicate between nodes and a shared memory model on-node so as to achieve scalability on large-scale systems. For this approach to be successful, it is necessary to design data structures that large numbers of cores can simultaneously access without contention. This scalability challenge is addressed here for Uintah, by the development of new hybrid runtime and scheduling algorithms combined with novel lock-free data structures, making it possible for Uintah to achieve excellent scalability for a challenging fluid–structure problem with mesh refinement on as many as 260K cores.

Keywords: MPI, threads, Uintah, many core, lock free, fluid-structure interaction, c-safe

Qingyu Meng.

“Large-Scale Distributed Runtime System for DAG-Based Computational Framework,” Note: Ph.D. in Computer Science, advisor Martin Berzins, School of Computing, University of Utah, August, 2014.

Recent trends in high performance computing present larger and more diverse computers using multicore nodes possibly with accelerators and/or coprocessors and reduced memory. These changes pose formidable challenges for applications code to attain scalability. Software frameworks that execute machine-independent applications code using a runtime system that shields users from architectural complexities offer a portable solution for easy programming. The Uintah framework, for example, solves a broad class of large-scale problems on structured adaptive grids using fluid-flow solvers coupled with particle-based solids methods. However, the original Uintah code had limited scalability as tasks were run in a predefined order based solely on static analysis of the task graph and used only message passing interface (MPI) for parallelism. By using a new hybrid multithread and MPI runtime system, this research has made it possible for Uintah to scale to 700K central processing unit (CPU) cores when solving challenging fluid-structure interaction problems. Those problems often involve moving objects with adaptive mesh refinement and thus with highly variable and unpredictable work patterns. This research has also demonstrated an ability to run capability jobs on the heterogeneous systems with Nvidia graphics processing unit (GPU) accelerators or Intel Xeon Phi coprocessors. The new runtime system for Uintah executes directed acyclic graphs of computational tasks with a scalable asynchronous and dynamic runtime system for multicore CPUs and/or accelerators/coprocessors on a node. Uintah's clear separation between application and runtime code has led to scalability increases without significant changes to application code. This research concludes that the adaptive directed acyclic graph (DAG)-based approach provides a very powerful abstraction for solving challenging multiscale multiphysics engineering problems. Excellent scalability with regard to the different processors and communications performance are achieved on some of the largest and most powerful computers available today.

J. Mercer, B. Pandian, A. Lex, N. Bonneel, H. Pfister.

“Mu-8: Visualizing Differences between Proteins and their Families,” In BMC Proceedings, Vol. 8, No. Suppl 2, pp. S5. Aug, 2014.

ISSN: 1753-6561

DOI: 10.1186/1753-6561-8-S2-S5

A complete understanding of the relationship between the amino acid sequence and resulting protein function remains an open problem in the biophysical sciences. Current approaches often rely on diagnosing functionally relevant mutations by determining whether an amino acid frequently occurs at a specific position within the protein family. However, these methods do not account for the biophysical properties and the 3D structure of the protein. We have developed an interactive visualization technique, Mu-8, that provides researchers with a holistic view of the differences of a selected protein with respect to a family of homologous proteins. Mu-8 helps to identify areas of the protein that exhibit: (1) significantly different bio-chemical characteristics, (2) relative conservation in the family, and (3) proximity to other regions that have suspect behavior in the folded protein.

M.D. Meyer, M. Sedlmair, P.S. Quinan, T. Munzner.

“The Nested Blocks and Guidelines Model,” In Journal of Information Visualization, Special Issue on Evaluation (BELIV), 2014.

We propose the nested blocks and guidelines model (NBGM) for the design and validation of visualization systems. The NBGM extends the previously proposed four-level nested model by adding finer grained structure within each level, providing explicit mechanisms to capture and discuss design decision rationale. Blocks are the outcomes of the design process at a specific level, and guidelines discuss relationships between these blocks. Blocks at the algorithm and technique levels describe design choices, as do data blocks at the abstraction level, whereas task abstraction blocks and domain situation blocks are identified as the outcome of the designer's understanding of the requirements. In the NBGM, there are two types of guidelines: within-level guidelines provide comparisons for blocks within the same level, while between-level guidelines provide mappings between adjacent levels of design. We analyze several recent papers using the NBGM to provide concrete examples of how a researcher can use blocks and guidelines to describe and evaluate visualization research. We also discuss the NBGM with respect to other design models to clarify its role in visualization design. Using the NBGM, we pinpoint two implications for visualization evaluation. First, comparison of blocks at the domain level must occur implicitly downstream at the abstraction level; and second, comparison between blocks must take into account both upstream assumptions and downstream requirements. Finally, we use the model to analyze two open problems: the need for mid-level task taxonomies to fill in the task blocks at the abstraction level, as well as the need for more guidelines mapping between the algorithm and technique levels.

M. Milanič, V. Jazbinšek, R.S. MacLeod, D.H. Brooks, R. Hren.

“Assessment of regularization techniques for electrocardiographic imaging,” In Journal of electrocardiology, Vol. 47, No. 1, pp. 20--28. 2014.

DOI: 10.1016/j.jelectrocard.2013.10.004

A widely used approach to solving the inverse problem in electrocardiography involves computing potentials on the epicardium from measured electrocardiograms (ECGs) on the torso surface. The main challenge of solving this electrocardiographic imaging (ECGI) problem lies in its intrinsic ill-posedness. While many regularization techniques have been developed to control wild oscillations of the solution, the choice of proper regularization methods for obtaining clinically acceptable solutions is still a subject of ongoing research. However, there has been little rigorous comparison across methods proposed by different groups. This study systematically compared various regularization techniques for solving the ECGI problem under a unified simulation framework, consisting of both 1) progressively more complex idealized source models (from single dipole to triplet of dipoles), and 2) an electrolytic human torso tank containing a live canine heart, with the cardiac source being modeled by potentials measured on a cylindrical cage placed around the heart. We tested 13 different regularization techniques to solve the inverse problem of recovering epicardial potentials, and found that non-quadratic methods (total variation algorithms) and first-order and second-order Tikhonov regularizations outperformed other methodologies and resulted in similar average reconstruction errors.
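As a rough illustration of the regularization family this abstract compares, the sketch below solves a toy ill-conditioned problem with zero-order Tikhonov regularization. It is not the authors' implementation: the function name `tikhonov_solve`, the synthetic matrix `A`, the data `b`, and the parameter `lam` are all assumptions made for this example, and the study's first-order and second-order variants would replace the identity penalty with a derivative operator.

```python
import numpy as np

def tikhonov_solve(A, b, lam):
    """Zero-order Tikhonov: minimize ||A x - b||^2 + lam^2 ||x||^2."""
    n = A.shape[1]
    # Normal equations with a ridge term that damps oscillatory components.
    return np.linalg.solve(A.T @ A + (lam ** 2) * np.eye(n), A.T @ b)

# Toy ill-conditioned forward problem (synthetic, for illustration only).
rng = np.random.default_rng(0)
A = np.vander(np.linspace(0.0, 1.0, 20), 8, increasing=True)
x_true = rng.standard_normal(8)
b = A @ x_true + 1e-3 * rng.standard_normal(20)

x_unreg = tikhonov_solve(A, b, 0.0)   # plain least squares
x_reg = tikhonov_solve(A, b, 1e-2)    # regularized solution
```

Increasing `lam` trades data fit for a smaller solution norm, which is the mechanism that suppresses the wild oscillations mentioned above.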

M. Mirzargar, R. Whitaker, R. M. Kirby.

“Curve Boxplot: Generalization of Boxplot for Ensembles of Curves,” In IEEE Transactions on Visualization and Computer Graphics, Vol. 20, No. 12, IEEE, pp. 2654--2663. December, 2014.

In simulation science, computational scientists often study the behavior of their simulations by repeated solutions with variations in parameters and/or boundary values or initial conditions. Through such simulation ensembles, one can try to understand or quantify the variability or uncertainty in a solution as a function of the various inputs or model assumptions. In response to a growing interest in simulation ensembles, the visualization community has developed a suite of methods for allowing users to observe and understand the properties of these ensembles in an efficient and effective manner. An important aspect of visualizing simulations is the analysis of derived features, often represented as points, surfaces, or curves. In this paper, we present a novel, nonparametric method for summarizing ensembles of 2D and 3D curves. We propose an extension of a method from descriptive statistics, data depth, to curves. We also demonstrate a set of rendering and visualization strategies for showing rank statistics of an ensemble of curves, which is a generalization of traditional whisker plots or boxplots to multidimensional curves. Results are presented for applications in neuroimaging, hurricane forecasting and fluid dynamics.
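The notion of data depth can be sketched with the classical band depth for scalar functions sampled on a shared grid. This is a deliberately simpler stand-in, not the paper's curve-depth formulation for 2D and 3D curves; the function name `band_depth` and the synthetic ensemble are assumptions made for this example.

```python
import numpy as np
from itertools import combinations

def band_depth(curves):
    """Band depth (using pairs of curves) for sampled 1D functions.

    curves: array of shape (n_curves, n_samples), all on a shared grid.
    Returns, per curve, the fraction of curve pairs whose pointwise
    min/max envelope fully contains that curve.
    """
    curves = np.asarray(curves, dtype=float)
    n = curves.shape[0]
    depth = np.zeros(n)
    pairs = list(combinations(range(n), 2))
    for i, j in pairs:
        lo = np.minimum(curves[i], curves[j])
        hi = np.maximum(curves[i], curves[j])
        # A curve counts only if it stays inside the band at every sample.
        inside = np.all((curves >= lo) & (curves <= hi), axis=1)
        depth += inside
    return depth / len(pairs)

# Synthetic ensemble: vertically shifted sine curves (illustrative only).
t = np.linspace(0.0, 1.0, 50)
offsets = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
ensemble = np.sin(2 * np.pi * t)[None, :] + offsets[:, None]

depths = band_depth(ensemble)
median_index = int(np.argmax(depths))  # deepest curve acts as the median
```

Sorting curves by depth gives the center-outward ranking that a boxplot-style summary needs: the deepest curve plays the role of the median, and the band spanned by the deepest half of the ensemble plays the role of the box.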

P. Muralidharan, J. Fishbaugh, H.J. Johnson, S. Durrleman, J.S. Paulsen, G. Gerig, P.T. Fletcher.

“Diffeomorphic Shape Trajectories for Improved Longitudinal Segmentation and Statistics,” In Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI), 2014.

Longitudinal imaging studies involve tracking changes in individuals by repeated image acquisition over time. The goal of these studies is to quantify biological shape variability within and across individuals, and also to distinguish between normal and disease populations. However, data variability is influenced by outside sources such as image acquisition, image calibration, human expert judgment, and limited robustness of segmentation and registration algorithms. In this paper, we propose a two-stage method for the statistical analysis of longitudinal shape. In the first stage, we estimate diffeomorphic shape trajectories for each individual that minimize inconsistencies in segmented shapes across time. This is followed by a longitudinal mixed-effects statistical model in the second stage for testing differences in shape trajectories between groups. We apply our method to a longitudinal database from PREDICT-HD and demonstrate our approach reduces unwanted variability for both shape and derived measures, such as volume. This leads to greater statistical power to distinguish differences in shape trajectory between healthy subjects and subjects with a genetic biomarker for Huntington's disease (HD).

J.A. Nielsen, B.A. Zielinski, P.T. Fletcher, A.L. Alexander, N. Lange, E.D. Bigler, J.E. Lainhart, J.S. Anderson.

“Abnormal lateralization of functional connectivity between language and default mode regions in autism,” In Molecular Autism, Vol. 5, No. 1, pp. 8. 2014.

DOI: 10.1186/2040-2392-5-8

Background: Lateralization of brain structure and function occurs in typical development, and abnormal lateralization is present in various neuropsychiatric disorders. Autism is characterized by a lack of left lateralization in structure and function of regions involved in language, such as Broca and Wernicke areas.

Methods: Using functional connectivity magnetic resonance imaging from a large publicly available sample (n = 964), we tested whether abnormal functional lateralization in autism exists preferentially in language regions or in a more diffuse pattern across networks of lateralized brain regions.

Results: The autism group exhibited significantly reduced left lateralization in a few connections involving language regions and regions from the default mode network, but results were not significant throughout left- and right-lateralized networks. There is a trend that suggests the lack of left lateralization in a connection involving Wernicke area and the posterior cingulate cortex associates with more severe autism.

Conclusions: Abnormal language lateralization in autism may be due to abnormal language development rather than to a deficit in hemispheric specialization of the entire brain.

Keywords: brain lateralization, brain asymmetry, autism, autism spectrum disorder, language, functional magnetic resonance imaging, functional connectivity

K.A. Nestor, J.D. Jones, C.R. Butson, T. Morishita, C.E. Jacobson, D.A. Peace, D. Chen, K.D. Foote, M.S. Okun.

“Coordinate-based lead location does not predict Parkinson's disease deep brain stimulation outcome,” In PLoS One, Vol. 9, No. 4, pp. e93524. January, 2014.

ISSN: 1932-6203

DOI: 10.1371/journal.pone.0093524

PubMed ID: 24691109

BACKGROUND: Effective target regions for deep brain stimulation (DBS) in Parkinson's disease (PD) have been well characterized. We sought to study whether the measured Cartesian coordinates of an implanted DBS lead are predictive of motor outcome(s). We tested the hypothesis that the position and trajectory of the DBS lead relative to the mid-commissural point (MCP) are significant predictors of clinical outcomes. We expected that due to neuroanatomical variation among individuals, a simple measure of the position of the DBS lead relative to MCP (commonly used in clinical practice) may not be a reliable predictor of clinical outcomes when utilized alone.

METHODS: 55 PD subjects implanted with subthalamic nucleus (STN) DBS and 41 subjects implanted with globus pallidus internus (GPi) DBS were included. Lead locations in AC-PC space (x, y, z coordinates of the active contact and sagittal and coronal entry angles) measured on high-resolution CT-MRI fused images, and motor outcomes (Unified Parkinson's Disease Rating Scale) were analyzed to confirm or refute a correlation between coordinate-based lead locations and DBS motor outcomes.

RESULTS: Coordinate-based lead locations were not a significant predictor of change in UPDRS III motor scores when comparing pre- versus post-operative values. The only potentially significant individual predictor of change in UPDRS motor scores was the antero-posterior coordinate of the GPi lead (more anterior lead locations resulted in a worse outcome), but this was only a statistical trend (p<.082).

CONCLUSION: The results of the study showed that a simple measure of the position of the DBS lead relative to the MCP is not significantly correlated with PD motor outcomes, presumably because this method fails to account for individual neuroanatomical variability. However, there is broad agreement that motor outcomes depend strongly on lead location. The results suggest the need for more detailed identification of stimulation location relative to anatomical targets.

I. Oguz, M. Farzinfar, J. Matsui, F. Budin, Z. Liu, G. Gerig, H.J. Johnson, M.A. Styner.

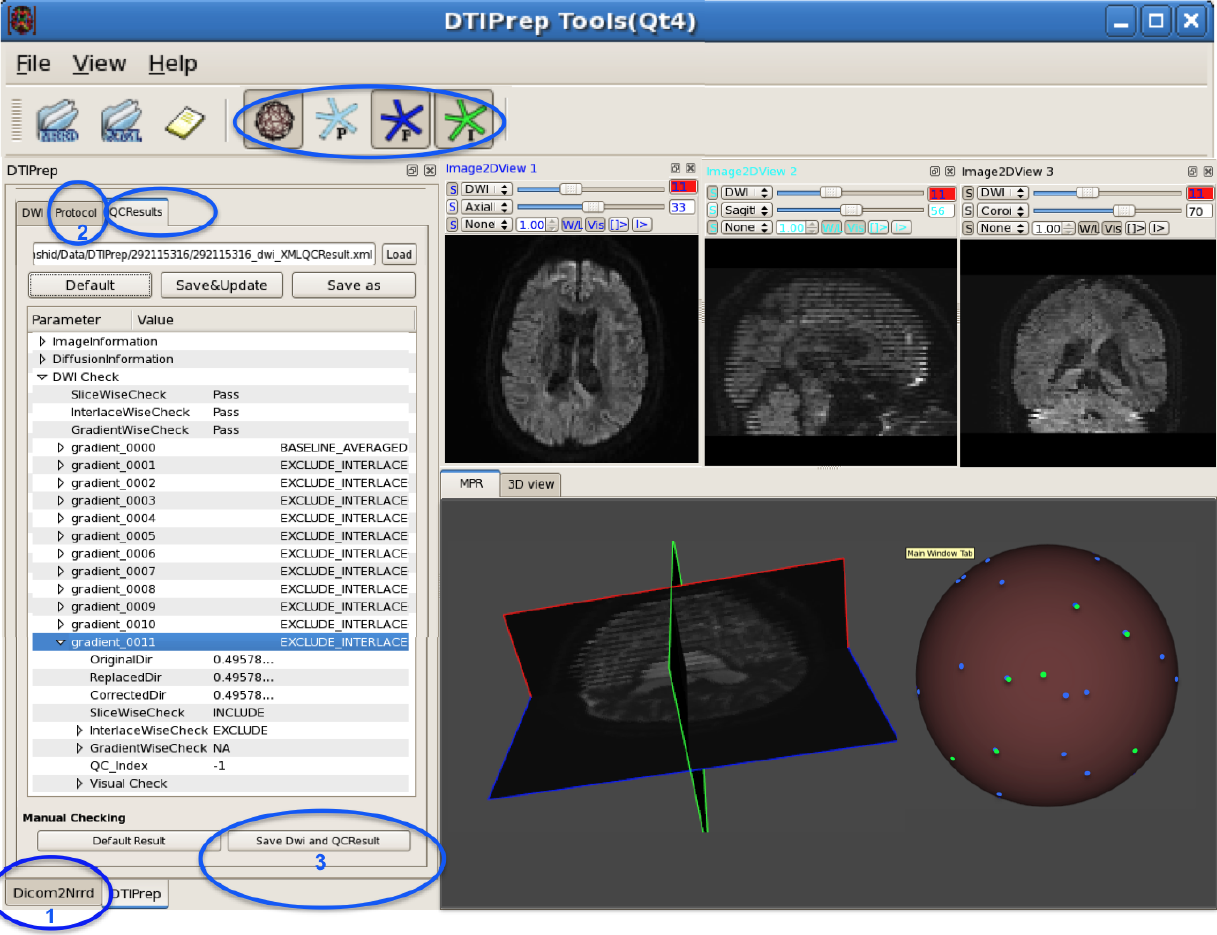

“DTIPrep: Quality Control of Diffusion-Weighted Images,” In Frontiers in Neuroinformatics, Vol. 8, No. 4, 2014.

DOI: 10.3389/fninf.2014.00004

In the last decade, diffusion MRI (dMRI) studies of the human and animal brain have been used to investigate a multitude of pathologies and drug-related effects in neuroscience research. Study after study identifies white matter (WM) degeneration as a crucial biomarker for all these diseases. The tool of choice for studying WM is dMRI. However, dMRI has inherently low signal-to-noise ratio and its acquisition requires a relatively long scan time; in fact, the high loads required occasionally stress scanner hardware past the point of physical failure. As a result, many types of artifacts implicate the quality of diffusion imagery. Using these complex scans containing artifacts without quality control (QC) can result in considerable error and bias in the subsequent analysis, negatively affecting the results of research studies using them. However, dMRI QC remains an under-recognized issue in the dMRI community as there are no user-friendly tools commonly available to comprehensively address the issue of dMRI QC. As a result, current dMRI studies often perform a poor job at dMRI QC.

Thorough QC of diffusion MRI will reduce measurement noise and improve reproducibility and sensitivity in neuroimaging studies; this will allow researchers to more fully exploit the power of the dMRI technique and will ultimately advance neuroscience. Therefore, in this manuscript, we present our open-source software, DTIPrep, as a unified, user-friendly platform for thorough quality control of dMRI data. The artifacts it addresses include those caused by eddy currents, head motion, bed vibration and pulsation, venetian blind artifacts, as well as slice-wise and gradient-wise intensity inconsistencies. This paper summarizes a basic set of features of DTIPrep described earlier and focuses on newly added capabilities related to directional artifacts and bias analysis.

Keywords: diffusion MRI, Diffusion Tensor Imaging, Quality control, Software, open-source, preprocessing

D.C.B. de Oliveira, A. Humphrey, Q. Meng, Z. Rakamaric, M. Berzins, G. Gopalakrishnan.

“Systematic Debugging of Concurrent Systems Using Coalesced Stack Trace Graphs,” In Proceedings of the 27th International Workshop on Languages and Compilers for Parallel Computing (LCPC), September, 2014.

A central need during software development of large-scale parallel systems is tools that help to identify the root causes of bugs quickly. Given the massive scale of these systems, tools that highlight changes--say introduced across software versions or their operating conditions (e.g., inputs, schedules)--can prove to be highly effective in practice. Conventional debuggers, while good at presenting details at the problem-site (e.g., crash), often omit contextual information to identify the root causes of the bug. We present a new approach to collect and coalesce stack traces, leading to an efficient summary display of salient system control flow differences in a graphical form called Coalesced Stack Trace Graphs (CSTG). CSTGs have helped us understand and debug situations within a computational framework called Uintah that has been deployed at large scale, and undergoes frequent version updates. In this paper, we detail CSTGs through case studies in the context of Uintah where unexpected behaviors caused by different versions of software or occurring across different time-steps of a system (e.g., due to non-determinism) are debugged. We show that CSTG also gives conventional debuggers a far more productive and guided role to play.