SCI Publications

2013

J. Schmidt, M. Berzins, J. Thornock, T. Saad, J. Sutherland.

“Large Scale Parallel Solution of Incompressible Flow Problems using Uintah and hypre,” In 2013 13th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid), pp. 458--465. 2013.

The Uintah Software framework was developed to provide an environment for solving fluid-structure interaction problems on structured adaptive grids for large-scale, long-running, data-intensive problems. Uintah uses a combination of fluid-flow solvers and particle-based methods for solids together with a novel asynchronous task-based approach with fully automated load balancing. As Uintah is often used to solve incompressible flow problems in combustion applications, it is important to have a scalable linear solver. While there are many such solvers available, the scalability of those codes varies greatly. The hypre software offers a range of solvers and preconditioners for different types of grids. The weak scalability of Uintah and hypre is addressed for particular examples of both packages when applied to a number of incompressible flow problems. After careful software engineering to reduce startup costs, much better than expected weak scalability is seen for up to 100K cores on NSF's Kraken architecture and up to 260K CPU cores on DOE's new Titan machine. The scalability is found to depend in a critical way on the choice of algorithm used by hypre for a realistic application problem.

Keywords: Uintah, hypre, parallelism, scalability, linear equations
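
Weak scalability, as used above, means holding the work per core fixed while the core count grows, so ideal behavior is a constant time per step. The minimal sketch below computes weak-scaling efficiency as T_base / T_N relative to the smallest run. The function name and the timings are hypothetical illustrations, not code or data from the paper.

```python
def weak_scaling_efficiency(times_by_cores):
    """Map each core count to its weak-scaling efficiency.

    times_by_cores: dict {core count: wall-clock time per step}, measured with
    a fixed problem size per core. Efficiency is T_base / T_N, where T_base is
    the time at the smallest core count; 1.0 means perfect weak scaling.
    """
    base_cores = min(times_by_cores)
    base_time = times_by_cores[base_cores]
    return {cores: base_time / t for cores, t in sorted(times_by_cores.items())}


# Hypothetical timings in seconds per timestep (not measurements from the paper).
timings = {1_000: 2.0, 10_000: 2.1, 100_000: 2.5}
for cores, eff in weak_scaling_efficiency(timings).items():
    print(f"{cores:>7} cores: efficiency {eff:.2f}")
```

With these made-up timings the efficiencies are 1.00, 0.95 and 0.80; a flat time per step would keep every entry at 1.00.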

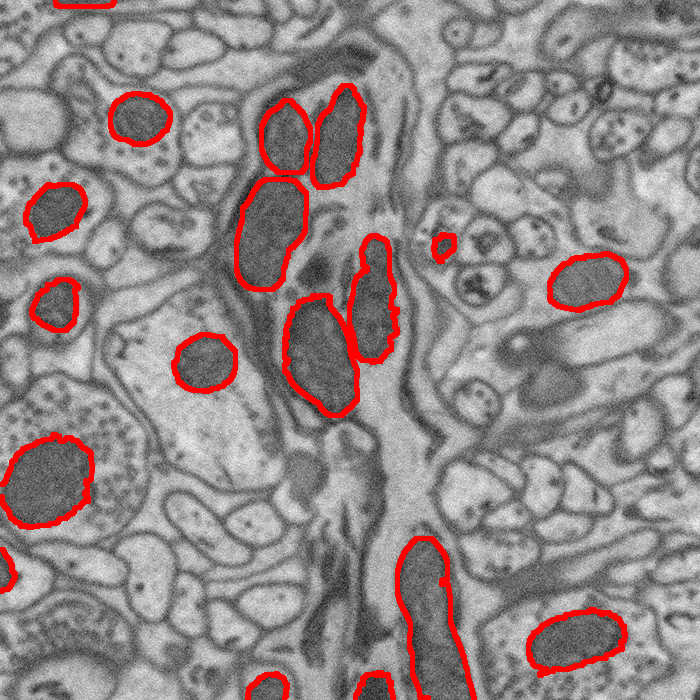

M. Seyedhosseini, M. Ellisman, T. Tasdizen.

“Segmentation of Mitochondria in Electron Microscopy Images using Algebraic Curves,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 860--863. 2013.

DOI: 10.1109/ISBI.2013.6556611

High-resolution microscopy techniques have been used to generate large volumes of data with enough details for understanding the complex structure of the nervous system. However, automatic techniques are required to segment cells and intracellular structures in these multi-terabyte datasets and make anatomical analysis possible on a large scale. We propose a fully automated method that exploits both shape information and regional statistics to segment irregularly shaped intracellular structures such as mitochondria in electron microscopy (EM) images. The main idea is to use algebraic curves to extract shape features together with texture features from image patches. Then, these powerful features are used to learn a random forest classifier, which can predict mitochondria locations precisely. Finally, the algebraic curves together with regional information are used to segment the mitochondria at the predicted locations. We demonstrate that our method outperforms the state-of-the-art algorithms in segmentation of mitochondria in EM images.

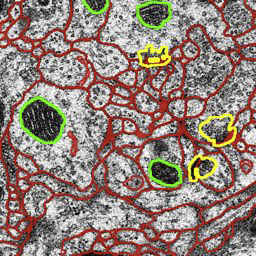

M. Seyedhosseini, T. Tasdizen.

“Multi-class Multi-scale Series Contextual Model for Image Segmentation,” In IEEE Transactions on Image Processing, Vol. PP, No. 99, 2013.

DOI: 10.1109/TIP.2013.2274388

Contextual information has been widely used as a rich source of information to segment multiple objects in an image. A contextual model utilizes the relationships between the objects in a scene to facilitate object detection and segmentation. However, using contextual information from different objects in an effective way for object segmentation remains a difficult problem. In this paper, we introduce a novel framework, called multi-class multi-scale (MCMS) series contextual model, which uses contextual information from multiple objects and at different scales for learning discriminative models in a supervised setting. The MCMS model incorporates cross-object and inter-object information into one probabilistic framework and thus is able to capture geometrical relationships and dependencies among multiple objects in addition to local information from each single object present in an image. We demonstrate that our MCMS model improves object segmentation performance in electron microscopy images and provides a coherent segmentation of multiple objects. By speeding up the segmentation process, the proposed method will allow neurobiologists to move beyond individual specimens and analyze populations paving the way for understanding neurodegenerative diseases at the microscopic level.

M. Seyedhosseini, M. Sajjadi, T. Tasdizen.

“Image Segmentation with Cascaded Hierarchical Models and Logistic Disjunctive Normal Networks,” In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2013), pp. (accepted). 2013.

Contextual information plays an important role in solving vision problems such as image segmentation. However, extracting contextual information and using it in an effective way remains a difficult problem. To address this challenge, we propose a multi-resolution contextual framework, called cascaded hierarchical model (CHM), which learns contextual information in a hierarchical framework for image segmentation. At each level of the hierarchy, a classifier is trained based on downsampled input images and outputs of previous levels. Our model then incorporates the resulting multi-resolution contextual information into a classifier to segment the input image at original resolution. We repeat this procedure by cascading the hierarchical framework to improve the segmentation accuracy. Multiple classifiers are learned in the CHM; therefore, a fast and accurate classifier is required to make the training tractable. The classifier also needs to be robust against overfitting due to the large number of parameters learned during training. We introduce a novel classification scheme, called logistic disjunctive normal networks (LDNN), which consists of one adaptive layer of feature detectors implemented by logistic sigmoid functions followed by two fixed layers of logical units that compute conjunctions and disjunctions, respectively. We demonstrate that LDNN outperforms state-of-the-art classifiers and can be used in the CHM to improve object segmentation performance.
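
The LDNN structure described above (an adaptive layer of logistic sigmoids feeding fixed AND and OR layers) can be illustrated with a forward pass. This minimal sketch assumes the soft conjunction is the product of the sigmoids in a group and the soft disjunction is 1 minus the product of the complements; training is omitted, and the function names and example weights are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic sigmoid."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ldnn_predict(x, groups):
    """Forward pass of a logistic disjunctive normal network (sketch).

    x: input feature vector.
    groups: list of conjunction groups; each group is a list of (weights, bias)
    pairs, one per logistic sigmoid feature detector.
    Returns a probability-like score in [0, 1].
    """
    one_minus = 1.0
    for group in groups:
        # Soft AND: product of the group's sigmoid outputs.
        conj = 1.0
        for weights, bias in group:
            conj *= sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        # Accumulate the product of (1 - conjunction) for the soft OR.
        one_minus *= 1.0 - conj
    # Soft OR via De Morgan's law: 1 - prod(1 - conj_i).
    return 1.0 - one_minus


# Hypothetical detectors: group 1 approximates "x0 > 0 AND x1 > 0",
# group 2 approximates "x0 < -1".
groups = [
    [([10.0, 0.0], 0.0), ([0.0, 10.0], 0.0)],
    [([-10.0, 0.0], -10.0)],
]
print(ldnn_predict([1.0, 1.0], groups))   # close to 1 (inside group 1)
print(ldnn_predict([0.5, -2.0], groups))  # close to 0 (outside both)
```

Because the AND/OR layers are fixed, only the sigmoid weights and biases would need to be learned, which is consistent with the abstract's point about keeping training tractable.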

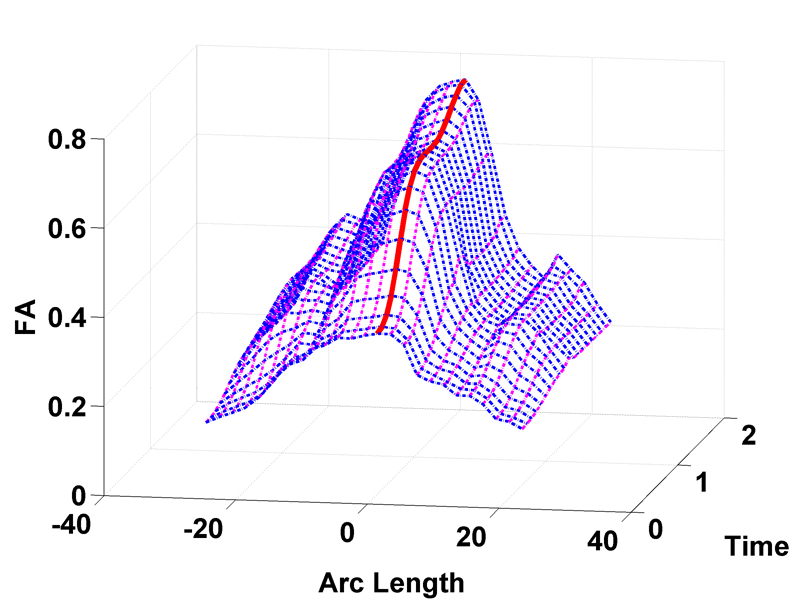

A. Sharma, P.T. Fletcher, J.H. Gilmore, M.L. Escolar, A. Gupta, M. Styner, G. Gerig.

“Spatiotemporal Modeling of Discrete-Time Distribution-Valued Data Applied to DTI Tract Evolution in Infant Neurodevelopment,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 684--687. 2013.

DOI: 10.1109/ISBI.2013.6556567

This paper proposes a novel method that extends spatiotemporal growth modeling to distribution-valued data. The method relaxes assumptions on the underlying noise models by representing the data by their complete probability distributions rather than by a single-valued summary statistic such as the mean. When data are summarized by such a statistic, information on their underlying variability is lost early in the process and is not available at later stages of statistical analysis. The concepts of a ‘distance’ between distributions and an ‘average’ of distributions are employed. The framework quantifies growth trajectories for individuals and populations in terms of the complete data variability estimated along time and space. The concept is demonstrated in the context of our driving application, which is modeling of age-related changes along white matter tracts in early neurodevelopment. Results are shown for a single subject with Krabbe's disease in comparison with a normative trend estimated from 15 healthy controls.
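
The abstract relies on a "distance" and an "average" between distributions without naming them. One common choice, assumed here purely for illustration and not confirmed as the paper's, is the 2-Wasserstein distance, which in one dimension has a closed form via sorted samples; its barycenter is the position-wise average of quantiles. Function names and data are hypothetical.

```python
import math


def wasserstein2_1d(a, b):
    """2-Wasserstein distance between two equal-size 1D empirical samples.

    In 1D the optimal transport plan pairs values in sorted order, so the
    distance is the root-mean-square difference of the sorted samples.
    """
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal sample sizes")
    sa, sb = sorted(a), sorted(b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(sa, sb)) / len(sa))


def wasserstein_mean_1d(samples):
    """Wasserstein barycenter ("average") of equal-size 1D samples.

    Averaging the sorted samples position by position averages the empirical
    quantile functions, which gives the 1D Wasserstein barycenter.
    """
    n = len(samples[0])
    if any(len(s) != n for s in samples):
        raise ValueError("this sketch assumes equal sample sizes")
    columns = zip(*(sorted(s) for s in samples))
    return [sum(col) / len(samples) for col in columns]


# Hypothetical FA values sampled at one tract location in two subjects.
subject_a = [0.30, 0.40, 0.35]
subject_b = [0.50, 0.40, 0.45]
print(wasserstein2_1d(subject_a, subject_b))
print(wasserstein_mean_1d([subject_a, subject_b]))
```

Unlike averaging two means, the barycenter keeps the spread of the input distributions, which is the kind of variability information the abstract argues should not be discarded early.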

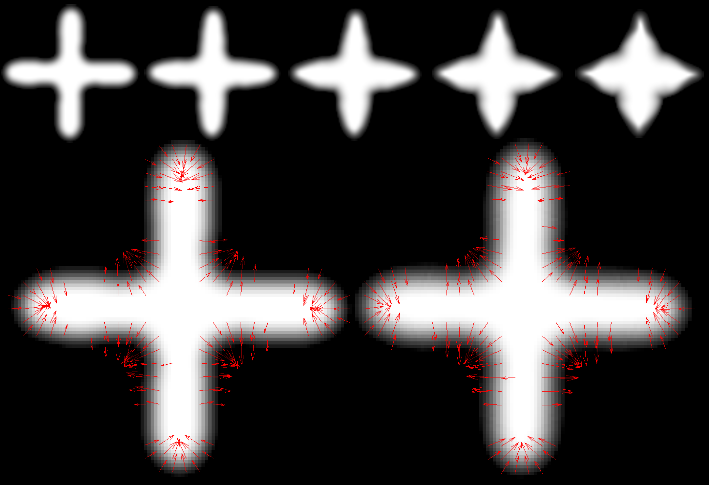

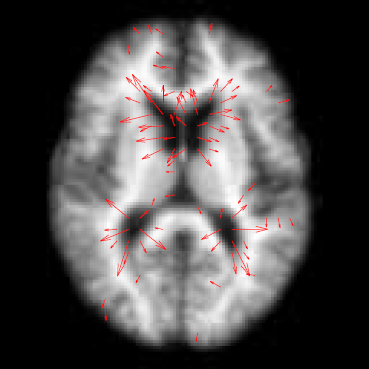

Y. Shi, G. Roger, C. Vachet, F. Budin, E. Maltbie, A. Verde, M. Hoogstoel, J.-B. Berger, M. Styner.

“Software-based diffusion MR human brain phantom for evaluating fiber-tracking algorithms,” In Proceedings of SPIE 8669, Medical Imaging 2013: Image Processing, 86692A, 2013.

DOI: 10.1117/12.2006113

PubMed ID: 24357914

PubMed Central ID: PMC3865235

Fiber tracking provides insights into the brain white matter network and has become increasingly popular in diffusion magnetic resonance (MR) imaging. Hardware or software phantoms provide an essential platform to investigate, validate and compare various tractography algorithms against a "gold standard". Software phantoms excel due to their flexibility in varying imaging parameters, such as tissue composition and SNR, as well as their potential to model various anatomies and pathologies. This paper describes a novel method for generating diffusion MR images with various imaging parameters from realistic-appearing, individually varying brain anatomy based on predefined fiber tracts within a high-resolution human brain atlas. Specifically, joint high-resolution DWI and structural MRI brain atlases were constructed with images acquired from 6 healthy subjects (age 22-26) for the DWI data and 56 healthy subjects (age 18-59) for the structural MRI data. Full brain fiber tracking was performed with filtered, two-tensor tractography in atlas space. A deformation-field-based principal component model from the structural MRI, as well as unbiased atlas building, was then employed to generate synthetic structural brain MR images that are individually varying. Atlas fiber tracts were accordingly warped into each synthetic brain anatomy. Diffusion MR images were finally computed from these warped tracts via a composite hindered and restricted model of diffusion with various imaging parameters for gradient directions, image resolution and SNR. Furthermore, an open-source program was developed to evaluate the fiber tracking results both qualitatively and quantitatively based on various similarity measures.

S. Short, J.T. Elison, B.D. Goldman, M. Styner, H. Gu, M. Connelly, E. Maltbie, S. Woolson, W. Lin, G. Gerig, J.S. Reznick, J.H. Gilmore.

“Associations Between White Matter Microstructure and Infants' Working Memory,” In Neuroimage, Vol. 64, No. 1, Elsevier, pp. 156--166. January, 2013.

DOI: 10.1016/j.neuroimage.2012.09.021

PubMed ID: 22989623

Working memory emerges in infancy and plays a privileged role in subsequent adaptive cognitive development. The neural networks important for the development of working memory during infancy remain unknown. We used diffusion tensor imaging (DTI) and deterministic fiber tracking to characterize the microstructure of white matter fiber bundles hypothesized to support working memory in 12-month-old infants (n=73). Here we show robust associations between infants' visuospatial working memory performance and microstructural characteristics of widespread white matter. Significant associations were found for white matter tracts that connect brain regions known to support working memory in older children and adults (genu, anterior and superior thalamic radiations, anterior cingulum, arcuate fasciculus, and the temporal-parietal segment). Better working memory scores were associated with higher FA and lower RD values in these selected white matter tracts. These tract-specific brain-behavior relationships accounted for a significant amount of individual variation above and beyond infants' gestational age and developmental level, as measured with the Mullen Scales of Early Learning. Working memory was not associated with global measures of brain volume, as expected, and few associations were found between working memory and control white matter tracts. To our knowledge, this study is among the first demonstrations of brain-behavior associations in infants using quantitative tractography. The ability to characterize subtle individual differences in infant brain development associated with complex cognitive functions holds promise for improving our understanding of normative development, biomarkers of risk, experience-dependent learning and neuro-cognitive periods of developmental plasticity.

S.C. Sibole, S.A. Maas, J.P. Halloran, J.A. Weiss, A. Erdemir.

“Evaluation of a post-processing approach for multiscale analysis of biphasic mechanics of chondrocytes,” In Computer Methods in Biomechanical and Biomedical Engineering, Vol. 16, No. 10, pp. 1112--1126. 2013.

DOI: 10.1080/10255842.2013.809711

PubMed ID: 23809004

Understanding the mechanical behaviour of chondrocytes as a result of cartilage tissue mechanics has significant implications both for evaluating mechanobiological function and for elucidating damage mechanisms. A common procedure for prediction of chondrocyte mechanics (and of cell mechanics in general) relies on a computational post-processing approach where tissue-level deformations drive cell-level models. Potential loss of information in this numerical coupling approach may cause erroneous cellular-scale results, particularly during multiphysics analysis of cartilage. The goal of this study was to evaluate the capacity of first- and second-order data passing to predict chondrocyte mechanics by analysing cartilage deformations obtained under loading scenarios of varying complexity. A tissue-scale model with a sub-region incorporating representation of chondron size and distribution served as control. The post-processing approach first required solution of a homogeneous tissue-level model, the results of which were used to drive a separate cell-level model (with the same characteristics as the sub-region of the control model). First-order data passing appeared to be adequate for simplified loading of the cartilage and for a subset of cell deformation metrics, for example, change in aspect ratio. The second-order data passing scheme was more accurate, particularly when asymmetric permeability of the tissue boundaries was considered. Yet, the method exhibited limitations for predictions of instantaneous metrics related to the fluid phase, for example, mass exchange rate. Nonetheless, employing higher-order data exchange schemes may be necessary to understand the biphasic mechanics of cells under lifelike tissue loading states for the whole time history of the simulation.

N.P. Singh, J. Hinkle, S. Joshi, P.T. Fletcher.

“A Vector Momenta Formulation of Diffeomorphisms for Improved Geodesic Regression and Atlas Construction,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), Note: Received Best Student Paper Award, pp. 1219--1222. 2013.

DOI: 10.1109/ISBI.2013.6556700

This paper presents a novel approach for diffeomorphic image regression and atlas estimation that results in improved convergence and numerical stability. We use a vector momenta representation of a diffeomorphism's initial conditions instead of the standard scalar momentum that is typically used. The corresponding variational problem results in a closed form update for template estimation in both the geodesic regression and atlas estimation problems. While we show that the theoretical optimal solution is equivalent to the scalar momenta case, the simplification of the optimization problem leads to more stable and efficient estimation in practice. We demonstrate the effectiveness of our method for atlas estimation and geodesic regression using synthetically generated shapes and 3D MRI brain scans.

Keywords: LDDMM, Geodesic regression, Atlas, Vector Momentum

N.P. Singh, J. Hinkle, S. Joshi, P.T. Fletcher.

“A Hierarchical Geodesic Model for Diffeomorphic Longitudinal Shape Analysis,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Lecture Notes in Computer Science (LNCS), pp. (accepted). 2013.

Hierarchical linear models (HLMs) are a standard approach for analyzing data where individuals are measured repeatedly over time. However, such models are only applicable to longitudinal studies of Euclidean data. In this paper, we propose a novel hierarchical geodesic model (HGM), which generalizes HLMs to the manifold setting. Our proposed model explains the longitudinal trends in shapes represented as elements of the group of diffeomorphisms. The individual level geodesics represent the trajectory of shape changes within individuals. The group level geodesic represents the average trajectory of shape changes for the population. We derive the solution of HGMs on diffeomorphisms to estimate individual level geodesics, the group geodesic, and the residual geodesics. We demonstrate the effectiveness of HGMs for longitudinal analysis of synthetically generated shapes and 3D MRI brain scans.
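
For orientation, the Euclidean setting that the HGM generalizes can be sketched as a two-stage estimate: fit a line to each individual's repeated measurements, then average the individual parameters to obtain a group trend. This is a simplified illustration under that assumption (a full HLM estimates both levels jointly), and it does not implement geodesics on diffeomorphisms; the function names and data are hypothetical.

```python
def fit_line(times, values):
    """Ordinary least-squares (intercept, slope) for one individual's measurements."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    if sxx == 0:
        raise ValueError("need at least two distinct time points per individual")
    slope = sxy / sxx
    return mean_v - slope * mean_t, slope


def two_stage_hlm(subjects):
    """subjects: list of (times, values) pairs, one per individual.

    Stage 1 fits an individual-level line to each subject; stage 2 averages
    those parameters into a group-level trend.
    """
    individual = [fit_line(t, v) for t, v in subjects]
    k = len(individual)
    group = (sum(b0 for b0, _ in individual) / k, sum(b1 for _, b1 in individual) / k)
    return individual, group


# Hypothetical longitudinal data for two individuals.
subjects = [([0, 1, 2], [1.0, 3.0, 5.0]), ([0, 2, 4], [2.0, 3.0, 4.0])]
individual, group = two_stage_hlm(subjects)
print(individual, group)  # [(1.0, 2.0), (2.0, 0.5)] (1.5, 1.25)
```

In the manifold version described above, each straight line would be replaced by a geodesic and the parameter average by an estimate on the group of diffeomorphisms.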

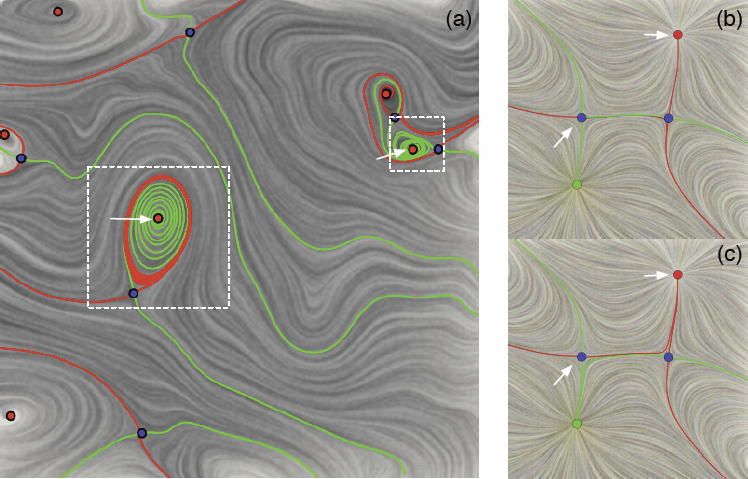

P. Skraba, B. Wang, G. Chen, P. Rosen.

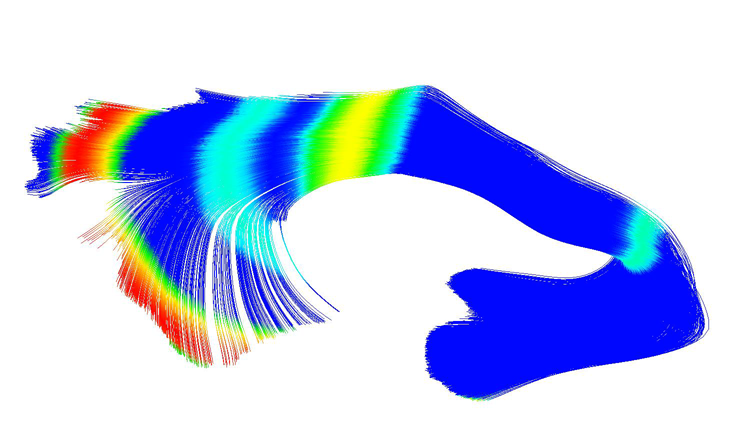

“2D Vector Field Simplification Based on Robustness,” SCI Technical Report, No. UUSCI-2013-004, SCI Institute, University of Utah, 2013.

Vector field simplification aims to reduce the complexity of the flow by removing features in order of their relevance and importance, to reveal prominent behavior and obtain a compact representation for interpretation. Most existing simplification techniques based on the topological skeleton successively remove pairs of critical points connected by separatrices using distance or area-based relevance measures. These methods rely on the stable extraction of the topological skeleton, which can be difficult due to instability in numerical integration, especially when processing highly rotational flows. Further, the distance and area-based metrics are used to determine the cancellation ordering of features from a geometric point of view. Specifically, these metrics do not consider the flow magnitude, which is an important physical property of the flow. In this paper, we propose a novel simplification scheme derived from the recently introduced topological notion of robustness, which provides a complementary flow structure hierarchy to the traditional topological skeleton-based approach. Robustness enables the pruning of sets of critical points according to a quantitative measure of their stability, that is, the minimum amount of vector field perturbation required to remove them within a local neighborhood. This leads to a natural hierarchical simplification scheme with more physical consideration than purely topological-skeleton-based methods. Such a simplification does not depend on the topological skeleton of the vector field and therefore can handle more general situations (e.g., centers and pairs not connected by separatrices). We also provide a novel simplification algorithm based on degree theory that has fewer restrictions and can therefore handle more general boundary conditions. We provide an implementation under the piecewise-linear setting and apply it to both synthetic and real-world datasets.
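
Degree theory, mentioned above, assigns each closed loop the number of times the vector field turns around as the loop is traversed. A loop enclosing critical points of total degree zero is the setting in which those points can, in principle, be cancelled by a perturbation confined to the enclosed region. The minimal sketch below computes that degree from field samples around a loop; it is not the paper's simplification algorithm, and the function names and example fields are illustrative assumptions.

```python
import math


def loop_degree(vectors):
    """Degree (winding number) of a 2D vector field along a closed loop.

    vectors: field values (vx, vy) sampled in order around the loop; none may
    be zero. The signed angle between consecutive samples is taken in
    (-pi, pi], which assumes the loop is sampled finely enough that the field
    turns by less than pi between neighbouring samples.
    """
    total = 0.0
    n = len(vectors)
    for i in range(n):
        x0, y0 = vectors[i]
        x1, y1 = vectors[(i + 1) % n]  # wrap around to close the loop
        # atan2(cross, dot) is the signed rotation from one sample to the next.
        total += math.atan2(x0 * y1 - y0 * x1, x0 * x1 + y0 * y1)
    return round(total / (2 * math.pi))


def sample_on_circle(field, radius=1.0, n=256):
    """Evaluate field(x, y) at n points counter-clockwise around a circle."""
    pts = [(radius * math.cos(2 * math.pi * k / n),
            radius * math.sin(2 * math.pi * k / n)) for k in range(n)]
    return [field(x, y) for x, y in pts]


source = sample_on_circle(lambda x, y: (x, y))   # degree +1
saddle = sample_on_circle(lambda x, y: (x, -y))  # degree -1
print(loop_degree(source), loop_degree(saddle))  # prints: 1 -1
```

A loop around both a source and a saddle would report degree 0, which is the degree-theoretic condition for such a pair to be cancellable inside the loop.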

W.C. Stacey, S. Kellis, B. Greger, C.R. Butson, P.R. Patel, T. Assaf, T. Mihaylova, S. Glynn.

“Potential for unreliable interpretation of EEG recorded with microelectrodes,” In Epilepsia, May, 2013.

ISSN: 0013-9580

DOI: 10.1111/epi.12202

T. Suter, A. Barg, M. Knupp, H.B. Henninger, B. Hintermann.

“Surgical technique: talar neck osteotomy to lengthen the medial column after a malunited talar neck fracture,” In Clinical Orthopaedics & Related Research, Vol. 471, No. 4, pp. 1356--1364. 2013.

M. Szegedi, J. Hinkle, P. Rassiah, V. Sarkar, B. Wang, S. Joshi, B. Salter.

“Four‐dimensional tissue deformation reconstruction (4D TDR) validation using a real tissue phantom,” In Journal of Applied Clinical Medical Physics, Vol. 14, No. 1, pp. 115--132. 2013.

DOI: 10.1120/jacmp.v14i1.4012

Calculation of four‐dimensional (4D) dose distributions requires the remapping of dose calculated on each available binned phase of the 4D CT onto a reference phase for summation. Deformable image registration (DIR) is usually used for this task, but unfortunately almost always considers only endpoints rather than the whole motion path. A new algorithm, 4D tissue deformation reconstruction (4D TDR), that uses either CT projection data or all available 4D CT images to reconstruct 4D motion data, was developed. The purpose of this work is to verify the accuracy of the fit of this new algorithm using a realistic tissue phantom. A previously described fresh tissue phantom with implanted electromagnetic tracking (EMT) fiducials was used for this experiment. The phantom was animated using a sinusoidal and a real patient‐breathing signal. Four‐dimensional computed tomography (4D CT) and EMT tracking were performed. Deformation reconstruction was conducted using the 4D TDR and a modified 4D TDR that takes real tissue hysteresis into account (4D TDRHysteresis). Deformation estimation results were compared to the EMT and 4D CT coordinate measurements. To eliminate the possibility of the high-contrast markers driving the 4D TDR, a comparison was made using the original 4D CT data and data in which the fiducials were electronically masked. For the sinusoidal animation, the average deviation of the 4D TDR compared to the manually determined coordinates from 4D CT data was 1.9 mm, albeit with deviations as large as 4.5 mm. The 4D TDR calculation traces matched 95% of the EMT trace within 2.8 mm. The motion hysteresis generated by real tissue is not properly projected other than at endpoints of motion. Sinusoidal animation resulted in 95% of EMT-measured locations being less than 1.2 mm from the measured 4D CT motion path, enabling accurate motion characterization of the tissue hysteresis.
The 4D TDRHysteresis calculation traces accounted well for the hysteresis and matched 95% of the EMT trace within 1.6 mm. An irregular (in amplitude and frequency) recorded patient trace applied to the same tissue resulted in 95% of the EMT trace points within less than 4.5 mm when compared to both the 4D CT and 4D TDRHysteresis motion paths. The average deviation of 4D TDRHysteresis compared to 4D CT datasets was 0.9 mm under regular sinusoidal and 1.0 mm under irregular patient trace animation. The EMT trace data fit to the 4D TDRHysteresis was within 1.6 mm for sinusoidal and 4.5 mm for patient trace animation. While various algorithms have been validated for end‐to‐end accuracy, one can only be fully confident in the performance of a predictive algorithm if one looks at data along the full motion path. The 4D TDR, calculating the whole motion path rather than only phase‐ or endpoints, allows us to fully characterize the accuracy of a predictive algorithm, minimizing assumptions. This algorithm went one step further by allowing for the inclusion of tissue hysteresis effects, a real‐world effect that is neglected when endpoint‐only validation is performed. Our results show that the 4D TDRHysteresis correctly models the deformation at the endpoints and any intermediate points along the motion path.

PACS numbers: 87.55.km, 87.55.Qr, 87.57.nf, 87.85.Tu
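
Statements such as "95% of the EMT trace within 2.8 mm" summarize a distribution of point-to-path distances by a percentile. A minimal sketch of that computation follows, assuming distances are measured from each tracked point to the nearest segment of a piecewise-linear reference path and that the nearest-rank percentile is used; the paper may define the matching differently, and the function names and sample data are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b (any dimension)."""
    ab = [bc - ac for ac, bc in zip(a, b)]
    ap = [pc - ac for ac, pc in zip(a, p)]
    denom = sum(c * c for c in ab)
    # Clamp the projection parameter to [0, 1] so the closest point stays on the segment.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, sum(u * v for u, v in zip(ap, ab)) / denom))
    closest = [ac + t * c for ac, c in zip(a, ab)]
    return math.dist(p, closest)


def coverage_distance(points, path, fraction=0.95):
    """Smallest d such that at least `fraction` of points lie within d of the path.

    path: ordered vertices of a polyline (at least two). Uses the nearest-rank percentile.
    """
    dists = sorted(
        min(point_segment_distance(p, path[i], path[i + 1]) for i in range(len(path) - 1))
        for p in points
    )
    rank = math.ceil(fraction * len(dists))
    return dists[rank - 1]


# Hypothetical data in mm: a straight reference path and 20 tracked points
# offset from it by 0.1, 0.2, ..., 2.0 mm.
path = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
points = [(0.5 * k, 0.0, 0.1 * k) for k in range(1, 21)]
print(f"95% of points within {coverage_distance(points, path):.1f} mm")
```

Measuring against the whole path rather than only matched endpoints mirrors the abstract's argument for validating along the full motion path.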

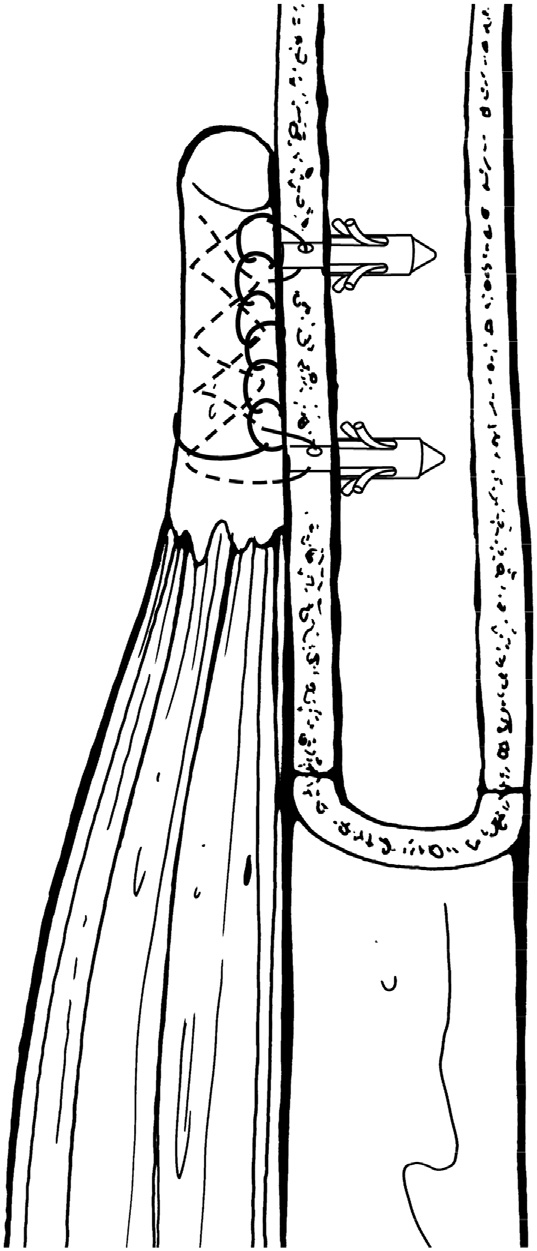

R.Z. Tashjian, H.B. Henninger.

“Biomechanical evaluation of subpectoral biceps tenodesis: dual suture anchor versus interference screw fixation,” In Journal of Shoulder and Elbow Surgery, Vol. 22, No. 10, pp. 1408--1412. 2013.

DOI: 10.1016/j.jse.2012.12.039

Background

Subpectoral biceps tenodesis has been reliably used to treat a variety of biceps tendon pathologies. Interference screws have been shown to have superior biomechanical properties compared to suture anchors, although only single-anchor constructs have been evaluated in the subpectoral region. The purpose of this study was to compare interference screw fixation with a suture anchor construct using 2 anchors for a subpectoral tenodesis.

Methods

A subpectoral biceps tenodesis was performed using either an interference screw (8 × 12 mm; Arthrex) or 2 suture anchors (Mitek G4) with #2 FiberWire (Arthrex) in a Krackow and Bunnell configuration in seven pairs of human cadavers. The humerus was inverted in an Instron and the biceps tendon was loaded vertically. Displacement-driven cyclic loading was performed, followed by failure loading.

Results

Suture anchor constructs had lower stiffness upon initial loading (P = .013). After 100 cycles, the stiffness of the suture anchor construct "softened" (decreased 9%, P < .001), whereas the screw construct was unchanged (0.4%, P = .078). Suture anchors had significantly higher ultimate failure strain than the screws (P = .003), but ultimate failure loads were similar between constructs: 280 ± 95 N (screw) vs 310 ± 91 N (anchors) (P = .438).

Conclusion

The interference screw was significantly stiffer than the suture anchor construct. Ultimate failure loads were similar between constructs, unlike previous reports indicating that interference screws had higher ultimate failure loads than suture anchors. Neither construct was superior with regard to stress, although suture anchors could withstand greater elongation prior to failure.

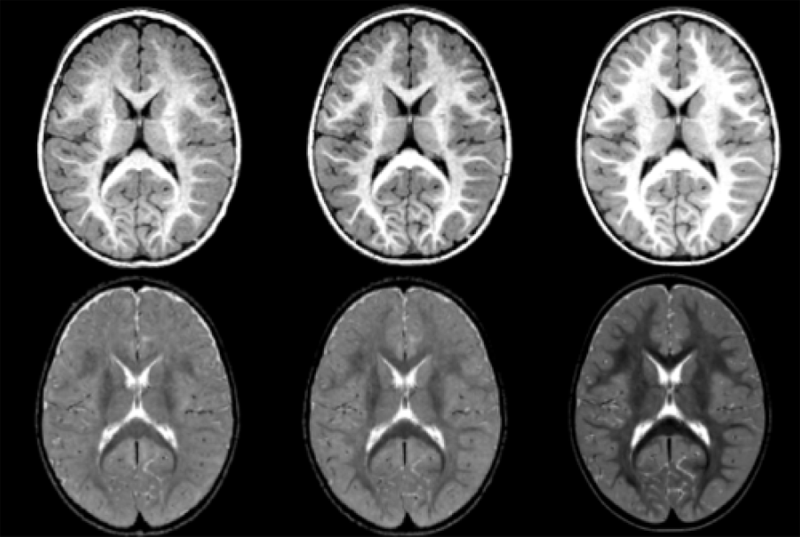

A. Vardhan, M.W. Prastawa, J. Piven, G. Gerig.

“Modeling Longitudinal MRI Changes in Populations Using a Localized, Information-Theoretic Measure of Contrast,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 1396--1399. 2013.

DOI: 10.1109/ISBI.2013.6556794

Longitudinal MR imaging during early brain development provides important information about growth patterns and the development of neurological disorders. We propose a new framework for studying brain growth patterns within and across populations based on MRI contrast changes, measured at each time point of interest and at each voxel. Our method uses regression in the LogOdds space and an information-theoretic measure of distance between distributions to capture contrast in a manner that is robust to imaging parameters and without requiring intensity normalization. We apply our method to a clinical neuroimaging study on early brain development in autism, where we obtain a 4D spatiotemporal model of contrast changes in multimodal structural MRI.

A. Vardhan, J. Piven, M. Prastawa, G. Gerig.

“A longitudinal structural MRI study of change in regional contrast in Autism Spectrum Disorder,” In Proceedings of the 19th Annual Meeting of the Organization for Human Brain Mapping OHBM, pp. (in print). 2013.

The brain undergoes tremendous changes in shape, size, structure, and chemical composition between birth and 2 years of age [Rutherford, 2001]. Existing studies have focused on morphometric and volumetric changes to study the early developing brain. Although there have been some recent appearance studies based on intensity changes [Serag et al., 2011], these are highly dependent on the quality of normalization. The study we present here uses the changes in contrast between gray and white matter tissue intensities in structural MRI of the brain as a measure of regional growth [Vardhan et al., 2011]. Kernel regression was used to generate continuous curves characterizing the changes in contrast with time. A statistical analysis was then performed on these curves, comparing two population groups: (i) HR+: high-risk subjects who tested positive for Autism Spectrum Disorder (ASD), and (ii) HR-: high-risk subjects who tested negative for ASD.
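
Kernel regression, as used above, turns scattered (time, contrast) measurements into a smooth curve. The abstract does not specify the estimator, kernel, or bandwidth, so the minimal sketch below assumes the Nadaraya-Watson estimator with a Gaussian kernel; the names, bandwidth, and data are hypothetical.

```python
import math


def kernel_regression(t_obs, y_obs, t_query, bandwidth):
    """Nadaraya-Watson regression with a Gaussian kernel.

    Returns, for each query time, a weighted average of the observed values,
    with weights exp(-0.5 * ((t - t_i) / bandwidth) ** 2).
    """
    estimates = []
    for t in t_query:
        weights = [math.exp(-0.5 * ((t - ti) / bandwidth) ** 2) for ti in t_obs]
        total = sum(weights)
        if total == 0.0:
            raise ValueError("query time is too far from all observations for this bandwidth")
        estimates.append(sum(w * y for w, y in zip(weights, y_obs)) / total)
    return estimates


# Hypothetical contrast values at ages in months (not study data).
ages = [6, 12, 24, 6, 12, 24]
contrast = [0.20, 0.32, 0.45, 0.22, 0.30, 0.47]
curve = kernel_regression(ages, contrast, [6, 9, 12, 18, 24], bandwidth=3.0)
print([round(c, 3) for c in curve])
```

The bandwidth controls the trade-off between following individual measurements and producing a smooth group curve; comparing HR+ and HR- groups would mean fitting one such curve per group.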

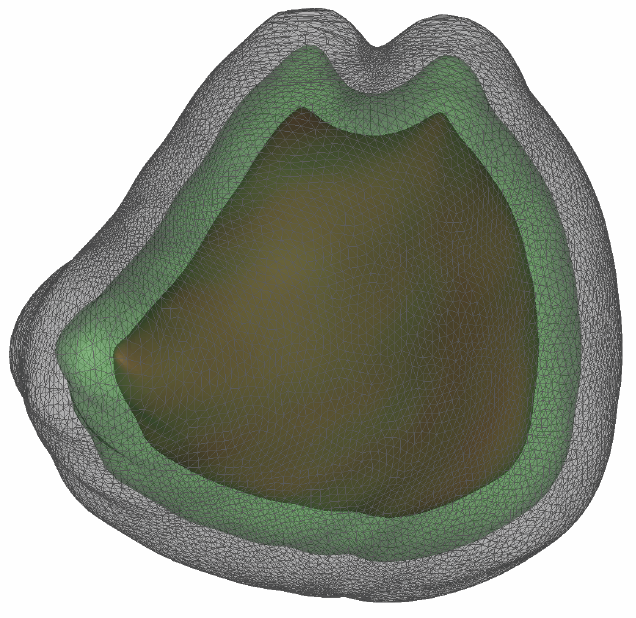

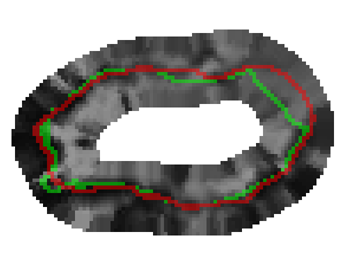

G. Veni, Z. Fu, S.P. Awate, R.T. Whitaker.

“Proper Ordered Meshing of Complex Shapes and Optimal Graph Cuts Applied to Atrial-Wall Segmentation from DE-MRI,” In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging (ISBI), pp. 1296--1299. 2013.

DOI: 10.1109/ISBI.2013.6556769

Segmentation of the left atrium wall from delayed enhancement MRI is challenging because of inconsistent contrast combined with noise and high variation in atrial shape and size. This paper presents a method for left-atrium wall segmentation by using a novel sophisticated mesh-generation strategy and graph cuts on a proper ordered graph. The mesh is part of a template/model that has an associated set of learned intensity features. When this mesh is overlaid onto a test image, it produces a set of costs on the graph vertices which eventually leads to an optimal segmentation. The novelty also lies in the construction of proper ordered graphs on complex shapes and for choosing among distinct classes of base shapes/meshes for automatic segmentation. We evaluate the proposed segmentation framework quantitatively on simulated and clinical cardiac MRI.

G. Veni, S. Awate, Z. Fu, R.T. Whitaker.

“Bayesian Segmentation of Atrium Wall using Globally-Optimal Graph Cuts on 3D Meshes,” In Proceedings of the International Conference on Information Processing in Medical Imaging (IPMI), Lecture Notes in Computer Science (LNCS), Vol. 23, pp. 656--677. 2013.

PubMed ID: 24684007

Efficient segmentation of the left atrium (LA) wall from delayed enhancement MRI is challenging due to inconsistent contrast, combined with noise, and high variation in atrial shape and size. We present a surface-detection method that is capable of extracting the atrial wall by computing an optimal a-posteriori estimate. This estimation is done on a set of nested meshes, constructed from an ensemble of segmented training images, and graph cuts on an associated multi-column, proper-ordered graph. The graph/mesh is a part of a template/model that has an associated set of learned intensity features. When this mesh is overlaid onto a test image, it produces a set of costs which lead to an optimal segmentation. The 3D mesh has an associated weighted, directed multi-column graph with edges that encode smoothness and inter-surface penalties. Unlike previous graph-cut methods that impose hard constraints on the surface properties, the proposed method follows from a Bayesian formulation resulting in soft penalties on spatial variation of the cuts through the mesh. The novelty of this method also lies in the construction of proper-ordered graphs on complex shapes for choosing among distinct classes of base shapes for automatic LA segmentation. We evaluate the proposed segmentation framework on simulated and clinical cardiac MRI.

Keywords: Atrial Fibrillation, Bayesian segmentation, Minimum s-t cut, Mesh Generation, Geometric Graph
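
The optimal segmentation described above ultimately relies on a minimum s-t cut. The minimal sketch below shows only that generic step, computing a maximum flow with the Edmonds-Karp algorithm and reading off the source side of the minimum cut. It does not build the paper's multi-column graph or its Bayesian penalties, dedicated solvers such as Boykov-Kolmogorov are typically faster on image graphs, and the function name and toy graph are hypothetical.

```python
from collections import deque


def min_st_cut(capacity, s, t):
    """Edmonds-Karp max flow; returns (cut value, set of source-side nodes).

    capacity: dict mapping node -> dict of neighbor -> non-negative capacity
    (directed edges).
    """
    # Residual graph, with reverse edges initialised to zero capacity.
    residual = {s: {}}
    for u, nbrs in capacity.items():
        residual.setdefault(u, {})
        for v, c in nbrs.items():
            residual[u][v] = residual[u].get(v, 0) + c
            residual.setdefault(v, {}).setdefault(u, 0)
    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            break
        # Find the bottleneck capacity along the path, then push that much flow.
        bottleneck, v = float("inf"), t
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck
    # After the last search, `parent` holds exactly the nodes reachable from s.
    return flow, set(parent)


graph = {"s": {"a": 10, "b": 1}, "a": {"t": 1}, "b": {"t": 10}}
flow, source_side = min_st_cut(graph, "s", "t")
print(flow, sorted(source_side))  # prints: 2 ['a', 's']
```

In a surface-detection setting, the source-side nodes would correspond to mesh-column positions on one side of the detected surface; how costs map to capacities is specific to the paper and not reproduced here.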

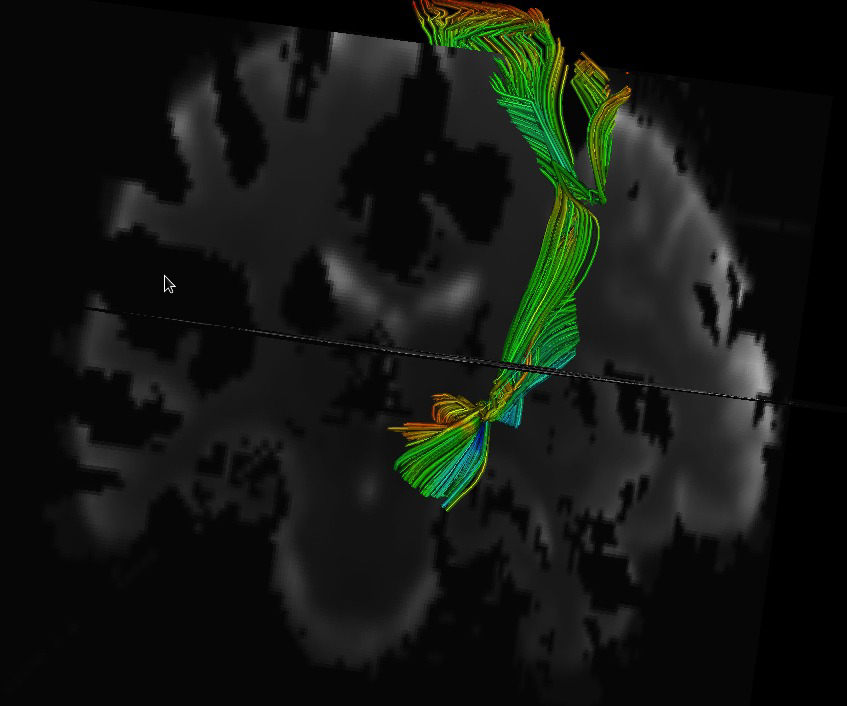

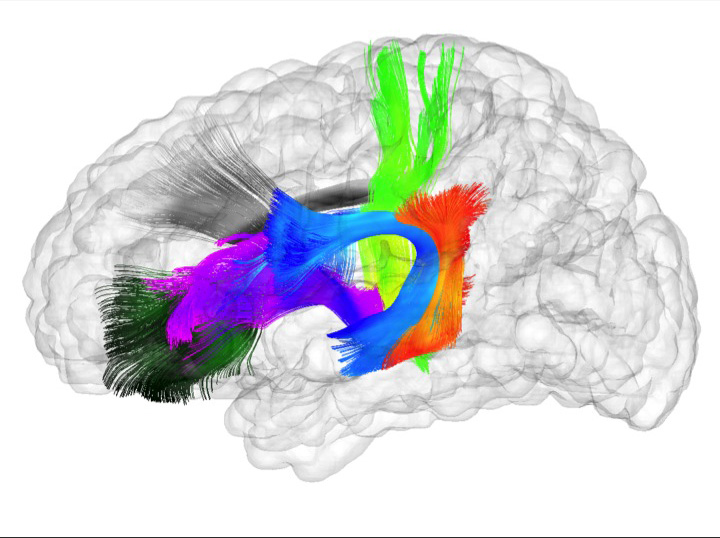

A.R. Verde, J.-B. Berger, A. Gupta, M. Farzinfar, A. Kaiser, V.W. Chanon, C. Boettiger, H. Johnson, J. Matsui, A. Sharma, C. Goodlett, Y. Shi, H. Zhu, G. Gerig, S. Gouttard, C. Vachet, M. Styner.

“UNC-Utah NA-MIC DTI framework: atlas based fiber tract analysis with application to a study of nicotine smoking addiction,” In Proc. SPIE 8669, Medical Imaging 2013: Image Processing, 86692D, Vol. 8669, pp. 86692D-86692D-8. 2013.

DOI: 10.1117/12.2007093

Purpose: The UNC-Utah NA-MIC DTI framework represents a coherent, open source, atlas fiber tract based DTI analysis framework that addresses the lack of a standardized fiber tract based DTI analysis workflow in the field. Most steps utilize graphical user interfaces (GUI) to simplify interaction and provide an extensive DTI analysis framework for non-technical researchers/investigators. Data: We illustrate the use of our framework on a 54-direction DWI neuroimaging study contrasting 15 smokers and 14 controls. Method(s): At the heart of the framework is a set of tools anchored around the multi-purpose image analysis platform 3D-Slicer. Several workflow steps are handled via external modules called from Slicer in order to provide an integrated approach. Our workflow starts with conversion from DICOM, followed by thorough automatic and interactive quality control (QC), which is essential for a good DTI study. Our framework is centered around a DTI atlas that is either provided as a template or computed directly as an unbiased average atlas from the study data via deformable atlas building. Fiber tracts are defined via interactive tractography and clustering on that atlas. DTI fiber profiles are extracted automatically using the atlas mapping information. These tract parameter profiles are then analyzed using our statistics toolbox (FADTTS). The statistical results are then mapped back onto the fiber bundles and visualized with 3D Slicer. Results: This framework provides a coherent set of tools for DTI quality control and analysis. Conclusions: This framework will provide the field with a uniform process for DTI quality control and analysis.